CLIP-BEVFormer: Explicitly supervise the BEVFormer structure to improve long-tail detection performance


Preface & the author's personal understanding

In an autonomous driving system, the perception module plays a vital role. Only after a vehicle driving on the road obtains accurate sensing results from the perception module can the downstream control module make timely and correct judgments and behavioral decisions. Cars with autonomous driving functions are currently equipped with a variety of sensors, including surround-view cameras, lidar, and millimeter-wave radar, which collect information in different modalities to support accurate perception.

Pure-vision BEV perception algorithms have received widespread attention from industry and academia because of their low hardware cost and easy deployment, and because their outputs can be readily used by various downstream tasks. In recent years, many BEV-based visual perception algorithms have emerged and demonstrated excellent performance on public data sets.

BEV-based perception algorithms can be roughly divided into two types, according to how they construct BEV features:

- One type is the forward BEV feature construction method represented by the LSS algorithm. This type of model first uses a depth estimation network to predict the semantic features and a discrete depth probability distribution for each pixel of the feature map. It then combines the two through an outer product to build semantic frustum features, and finally uses BEV pooling or similar operations to produce the BEV features.

- The other type is the backward BEV feature construction method represented by the BEVFormer algorithm. This type of model first explicitly generates 3D voxel coordinate points in the BEV space, then uses the camera's intrinsic and extrinsic parameters to project those points back into the image coordinate system, and finally extracts and aggregates the pixel features at the corresponding positions to build the BEV features.
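The two construction routes above can be illustrated with a minimal sketch in plain Python. It is not code from LSS or BEVFormer: the function names `lift_pixel` and `project_point` are hypothetical, the camera is assumed to be an ideal pinhole without distortion, and the extrinsics are assumed to be a 3x4 `[R|t]` matrix that maps ego-frame points into the camera frame.

```python
def lift_pixel(features, depth_probs):
    """Forward route (LSS style): outer product of a C-dim pixel feature
    and a D-bin depth distribution, giving D x C frustum features."""
    return [[p * f for f in features] for p in depth_probs]


def project_point(point_xyz, intrinsics, extrinsics):
    """Backward route (BEVFormer style): project a 3D point into pixel
    coordinates. Returns None if the point lies behind the camera."""
    # Ego frame -> camera frame using the assumed 3x4 [R|t] matrix.
    cam = [
        sum(r * v for r, v in zip(row[:3], point_xyz)) + row[3]
        for row in extrinsics
    ]
    if cam[2] <= 0:
        return None
    # Pinhole projection with focal lengths and principal point.
    u = intrinsics[0][0] * cam[0] / cam[2] + intrinsics[0][2]
    v = intrinsics[1][1] * cam[1] / cam[2] + intrinsics[1][2]
    return (u, v)


K = [[100.0, 0.0, 50.0], [0.0, 100.0, 40.0], [0.0, 0.0, 1.0]]
E = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]]
frustum = lift_pixel([1.0, 2.0], [0.25, 0.75])
pixel = project_point((1.0, 2.0, 10.0), K, E)
```

In the forward route every pixel is spread over all depth bins, weighted by its predicted depth probability. In the backward route each BEV location asks which pixel it falls on and samples features there.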

Although both types of algorithm can generate BEV features and produce 3D perception results, current BEV-based 3D object detection algorithms such as BEVFormer have the following two problems:

- Problem 1: BEVFormer adopts an Encoder-Decoder structure. The Encoder produces the BEV features, the Decoder predicts the final perception results, and the model learns to predict BEV features only through the loss computed between the output perception results and the ground-truth targets. This way of updating parameters relies too heavily on the Decoder's perception performance, so the BEV features output by the model may not be aligned with the ground-truth BEV features, which limits the final performance of the perception model.

- Problem 2: The Decoder of BEVFormer still follows the Transformer sequence of self-attention module -> cross-attention module -> feedforward neural network to build the Query features and output the final detection results. The whole process remains a black box and lacks good interpretability. In addition, the one-to-one matching between Object Queries and ground-truth targets during training carries considerable uncertainty.

To address these problems, we improved BEVFormer and proposed CLIP-BEVFormer, a 3D detection algorithm based on surround-view images. By introducing contrastive learning, we enhanced the model's ability to construct BEV features and achieved leading perception performance on the nuScenes data set.

Article link: https://arxiv.org/pdf/2403.08919.pdf

Overall architecture & details of the network model

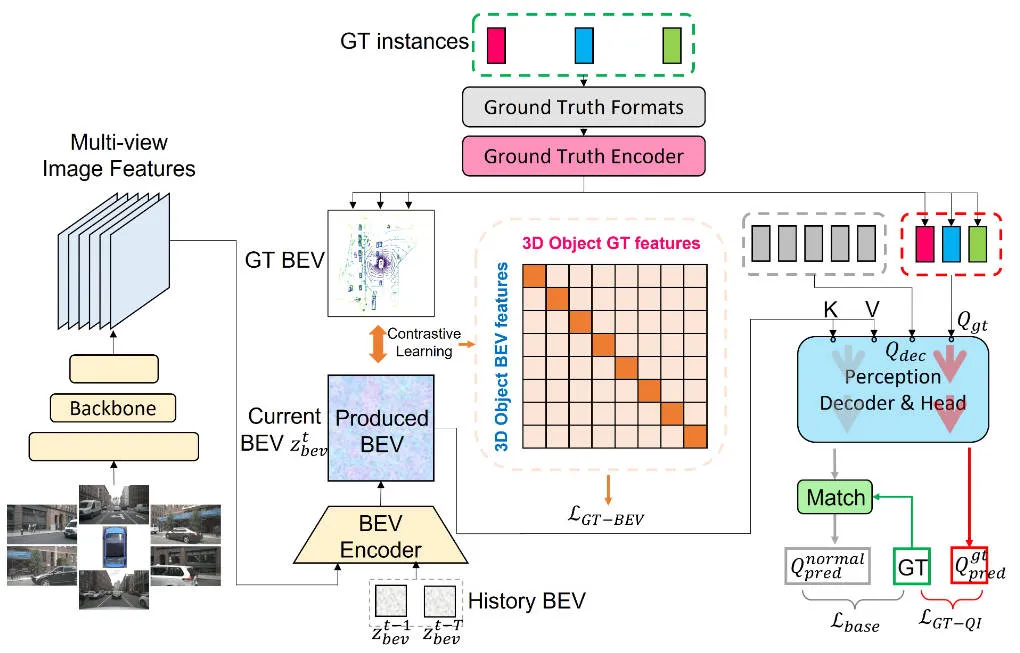

Before introducing the details of the CLIP-BEVFormer perception algorithm proposed in this article, the following figure shows its overall network structure.

The overall flow chart of the CLIP-BEVFormer perception algorithm model proposed in this article


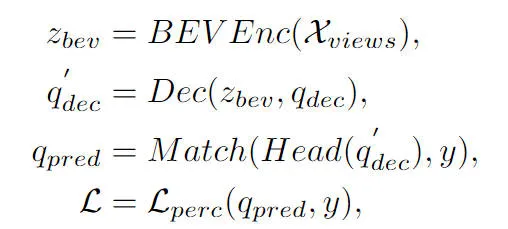

As the overall flow chart shows, CLIP-BEVFormer is built as an improvement on BEVFormer, so here is a brief review of how BEVFormer works. First, BEVFormer takes the surround-view images collected by the camera sensors as input and uses a 2D image feature extraction network to extract multi-scale semantic features. Second, an Encoder module containing temporal self-attention and spatial cross-attention converts the 2D image features into BEV features. Then, a set of Object Queries is initialized from a normal distribution in the 3D perception space and sent to the Decoder module, where the queries interact with the BEV features output by the Encoder. Finally, a feedforward neural network predicts from the features gathered by each Object Query and outputs the final classification and regression results. During training, BEVFormer uses a one-to-one Hungarian matching strategy to assign positive and negative samples, and uses classification and regression losses to update the parameters of the whole network.

The overall detection process can be summarized as follows: the Encoder feature extraction module maps the input images to BEV features, the Decoder module maps the BEV features and Object Queries to the 3D perception results output by the model, and the detection loss compares those results with the ground-truth labels in the data set.
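To make the one-to-one matching step in BEVFormer training concrete, here is a small sketch. It is an illustration only: it finds the minimum-cost assignment by brute force over permutations, which gives the same result as the Hungarian algorithm on tiny inputs but does not scale. The function name `match_one_to_one` and the cost values are hypothetical.

```python
from itertools import permutations


def match_one_to_one(cost):
    """cost[i][j] is the cost of assigning prediction i to ground truth j.

    Assumes there are at least as many predictions as ground truths.
    Returns {prediction_index: ground_truth_index} for the cheapest
    one-to-one assignment; unmatched predictions are negatives.
    """
    num_preds, num_gts = len(cost), len(cost[0])
    best_total, best_assign = float("inf"), None
    # Brute-force stand-in for the Hungarian algorithm (small inputs only).
    for pred_ids in permutations(range(num_preds), num_gts):
        total = sum(cost[p][g] for g, p in enumerate(pred_ids))
        if total < best_total:
            best_total, best_assign = total, pred_ids
    return {p: g for g, p in enumerate(best_assign)}


# Three predictions, two ground-truth boxes (illustrative costs).
costs = [[0.9, 0.1], [0.2, 0.8], [0.5, 0.5]]
matches = match_one_to_one(costs)
negatives = [i for i in range(len(costs)) if i not in matches]
```

Here predictions 0 and 1 become positive samples and prediction 2 is treated as a negative sample. Small changes in the costs can flip the assignment, which is one way to see the matching uncertainty mentioned earlier.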

Ground-truth BEV generation

As mentioned above, most existing BEV-based 3D object detection algorithms do not explicitly supervise the generated BEV features, so the BEV features produced by the model may be inconsistent with the real BEV features. This difference in feature distribution limits the final perception performance of the model. With this in mind, we proposed the Ground Truth BEV module. The core idea is to align the BEV features generated by the model with the ground-truth BEV features, thereby improving performance.

Specifically, as shown in the overall network framework diagram, we use a ground truth encoder to encode the category label and the spatial bounding box position of every ground-truth instance on the BEV feature map. The encoded feature of each ground-truth target has the same feature dimension as the generated BEV feature map. For the encoder we tried two forms, a large language model (LLM) and a multi-layer perceptron (MLP), and the experiments show that the two achieve essentially the same performance.

In addition, to further strengthen the boundary information of the ground-truth targets on the BEV feature map, we crop each ground-truth target from the BEV feature map according to its spatial position and apply a pooling operation to the cropped features to build the corresponding feature representation. The process can be expressed in the following form:

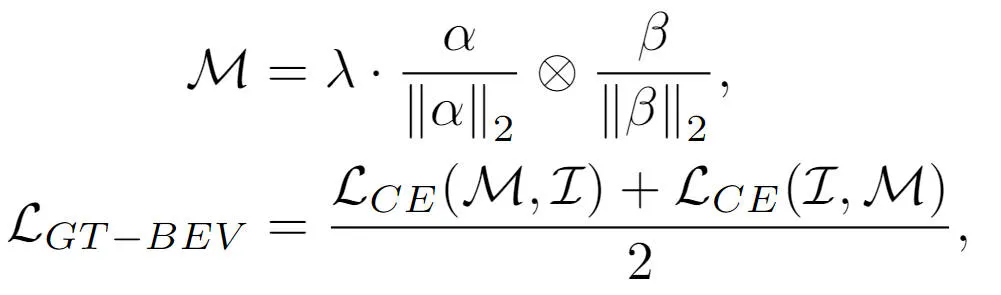
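The crop-and-pool step can be sketched as follows. This is a simplified illustration rather than the authors' implementation: it assumes an axis-aligned box given in BEV grid cells and uses average pooling, whereas the article only says "pooling". The name `crop_and_pool` is hypothetical.

```python
def crop_and_pool(bev, box):
    """Average-pool the BEV cells covered by one ground-truth box.

    bev: H x W x C nested list of floats.
    box: (row_min, row_max, col_min, col_max) in grid cells, with the
         max indices exclusive (an assumed convention).
    Returns a C-dim feature vector for the target.
    """
    r0, r1, c0, c1 = box
    cells = [bev[r][c] for r in range(r0, r1) for c in range(c0, c1)]
    channels = len(cells[0])
    return [sum(cell[k] for cell in cells) / len(cells) for k in range(channels)]


# A 2 x 2 BEV grid with one channel; the box covers the left column.
bev_map = [[[1.0], [2.0]], [[3.0], [4.0]]]
pooled = crop_and_pool(bev_map, (0, 2, 0, 1))
```

Each ground-truth target then has one pooled vector that can be compared with its encoded ground-truth feature.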

Finally, to further align the BEV features generated by the model with the ground-truth BEV features, we use contrastive learning to optimize the element-wise relationships and distances between the two sets of BEV features. The similarity matrices between the generated BEV features and the ground-truth BEV features are computed by matrix multiplication and scaled by the logit scale factor used in contrastive learning, and a cross-entropy loss is applied to them. This contrastive objective gives the generated BEV features clearer guidance and improves the perception ability of the model.
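A minimal sketch of such a contrastive objective is given below. It assumes a CLIP-style symmetric form, with L2-normalized features and cross-entropy applied in both directions and then averaged. The article does not spell out these details, so treat them as assumptions. The function names are hypothetical.

```python
import math


def _normalize(vec):
    # L2-normalize; fall back to 1.0 to avoid dividing by zero.
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


def _diagonal_cross_entropy(logits):
    """Mean cross-entropy where row i's correct class is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        peak = max(row)  # subtract the max for numerical stability
        log_sum_exp = peak + math.log(sum(math.exp(v - peak) for v in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def contrastive_alignment_loss(bev_feats, gt_feats, logit_scale):
    """bev_feats[i] and gt_feats[i] describe the same target (N x C each)."""
    bev = [_normalize(f) for f in bev_feats]
    gt = [_normalize(g) for g in gt_feats]
    # Scaled similarity matrix: generated BEV features vs. ground truth.
    sim = [[logit_scale * sum(a * b for a, b in zip(f, g)) for g in gt] for f in bev]
    sim_t = [list(col) for col in zip(*sim)]  # ground truth vs. generated
    return 0.5 * (_diagonal_cross_entropy(sim) + _diagonal_cross_entropy(sim_t))


aligned = contrastive_alignment_loss([[1.0, 0.0], [0.0, 2.0]], [[3.0, 0.0], [0.0, 1.0]], 10.0)
swapped = contrastive_alignment_loss([[1.0, 0.0], [0.0, 2.0]], [[0.0, 1.0], [1.0, 0.0]], 10.0)
```

The loss is small when each pooled BEV feature is most similar to its own ground-truth encoding and large when it is closer to another target's encoding.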

Ground-truth query interaction

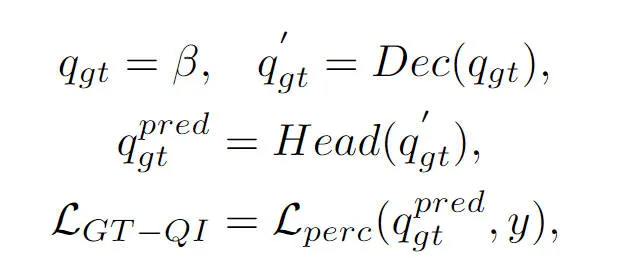

As noted earlier, the Object Queries in BEVFormer interact with the generated BEV features through the Decoder module to obtain the corresponding query features, but the process as a whole remains a black box that is hard to inspect. To address this, we introduced the ground-truth query interaction module, which lets ground-truth targets interact with the BEV features through the Decoder module to guide the learning of the model parameters. Specifically, the ground-truth encodings output by the ground truth encoder are fed into the Decoder as additional Object Queries. Like normal Object Queries, they pass through the same self-attention module, cross-attention module, and feedforward neural network to output the final perception results. Note that during decoding all Object Queries are computed in parallel to prevent ground-truth information from leaking.

In this process, the initialized Object Queries and the ground-truth Object Queries each pass through the Decoder module and the detection head to produce their own outputs. By bringing ground-truth targets into training, the ground-truth query interaction module lets ground-truth queries interact with the ground-truth BEV features, which assists the parameter updates of the Decoder module.
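One possible way to keep ground-truth information from leaking while both query sets share the same Decoder is a block-diagonal self-attention mask. The article only states that the queries are computed in parallel, so this mechanism is an assumption for illustration, and `build_group_mask` is a hypothetical name.

```python
def build_group_mask(num_object_queries, num_gt_queries):
    """Return mask[i][j] == True if query i may attend to query j.

    Normal Object Queries come first, ground-truth queries after them.
    Each group may only attend within itself (assumed design).
    """
    total = num_object_queries + num_gt_queries

    def group(index):
        return 0 if index < num_object_queries else 1

    return [[group(i) == group(j) for j in range(total)] for i in range(total)]


# Two normal queries followed by one ground-truth query.
mask = build_group_mask(2, 1)
```

With such a mask, normal queries never see ground-truth encodings during self-attention, so the model cannot shortcut detection by copying ground-truth information, while both groups still train the same Decoder weights.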

Experimental results & evaluation metrics

Quantitative analysis

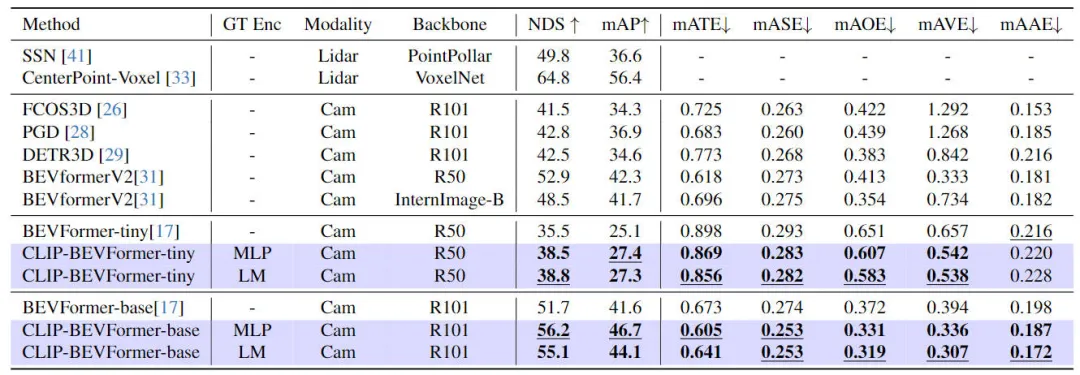

To verify the effectiveness of the proposed CLIP-BEVFormer, we conducted experiments on the nuScenes data set covering 3D perception performance, the long-tail distribution of object categories, and robustness. The following table compares the accuracy of our model with other 3D perception models on the nuScenes data set.

Comparative results between the method proposed in this article and other perception algorithm models

In this part of the experiment, we evaluated perception performance under different model configurations. Specifically, we applied CLIP-BEVFormer to the tiny and base variants of BEVFormer. We also explored how using a pre-trained CLIP model or MLP layers as the ground truth encoder affects perception performance. The results show that, for both the tiny and base variants, applying CLIP-BEVFormer brings stable improvements in NDS and mAP. The results also show that the model is not sensitive to whether an MLP or a language model is chosen as the ground truth encoder. This flexibility makes CLIP-BEVFormer more adaptable and easier to deploy on the vehicle. In summary, the metrics across all variants consistently indicate that CLIP-BEVFormer has good robustness and achieves excellent detection performance at different levels of model complexity and parameter count.

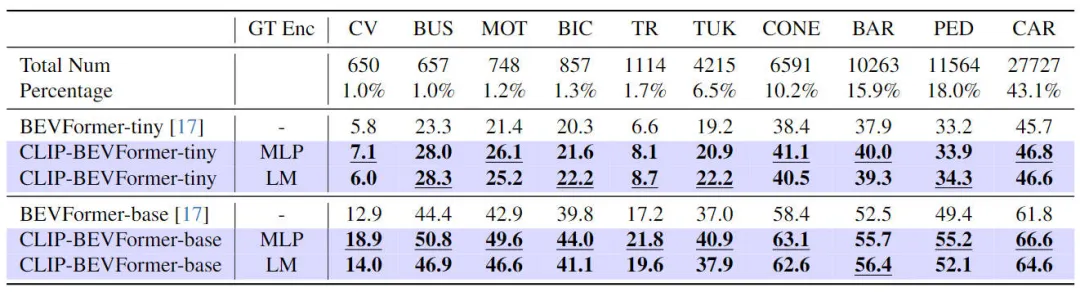

In addition to verifying the performance of CLIP-BEVFormer on 3D perception tasks, we also conducted long-tail experiments to evaluate the robustness and generalization ability of our algorithm when the data set has a long-tail distribution. The results are summarized in the following table.

Performance of the proposed CLIP-BEVFormer algorithm model on long-tail problems

As the table shows, the nuScenes data set is heavily imbalanced across categories. Some categories (construction vehicles, buses, motorcycles, bicycles, etc.) account for a very small proportion, while cars account for a very large one. By running experiments on the long-tail distribution, we evaluate the perception performance of CLIP-BEVFormer on individual categories and thereby verify its ability to handle less common ones. The results show that CLIP-BEVFormer improves performance in all categories, and the improvement is especially pronounced for the categories with a very small share of the data.

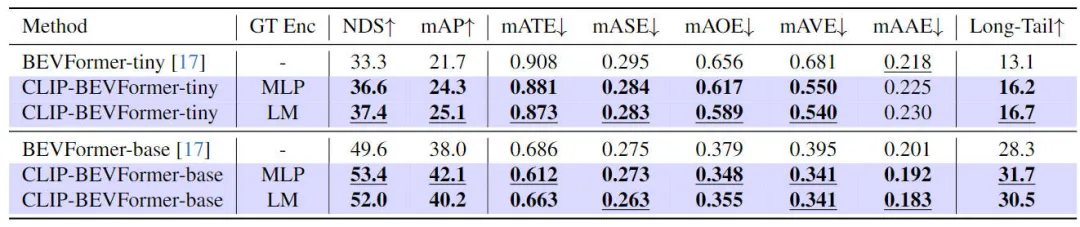

Autonomous driving systems in real environments must cope with hardware failures, severe weather, and sensor failures caused by obstructions, so we further tested the robustness of the proposed model. Specifically, to simulate sensor failure, we randomly masked one camera during inference, imitating a scene where a camera has failed. The results are shown in the table below.

Robustness experimental results of the proposed CLIP-BEVFormer algorithm model


The results show that, under both the tiny and base configurations, CLIP-BEVFormer consistently outperforms the BEVFormer baseline of the same configuration, which confirms its superior performance and robustness under simulated sensor failure.
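The camera-failure simulation used in this robustness test can be sketched as below. The article says only that one camera is randomly masked during inference. Replacing its image with zeros is an assumption, and `mask_random_camera` is a hypothetical name.

```python
import random


def mask_random_camera(images, rng=random):
    """Blank out one randomly chosen camera view.

    images: list of per-camera images, each an H x W nested list.
    rng:    any object with randrange(), e.g. random.Random(seed).
    Returns (masked_images, dropped_index); the input is not modified.
    """
    dropped = rng.randrange(len(images))
    masked = [
        [[0 for _ in row] for row in img] if i == dropped else img
        for i, img in enumerate(images)
    ]
    return masked, dropped


# Six surround-view cameras with tiny 2 x 2 dummy images.
views = [[[1, 2], [3, 4]] for _ in range(6)]
masked_views, dropped_idx = mask_random_camera(views, random.Random(0))
```

Running the same evaluation on `masked_views` instead of `views` gives a rough picture of how much a detector depends on any single camera.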

Qualitative analysis

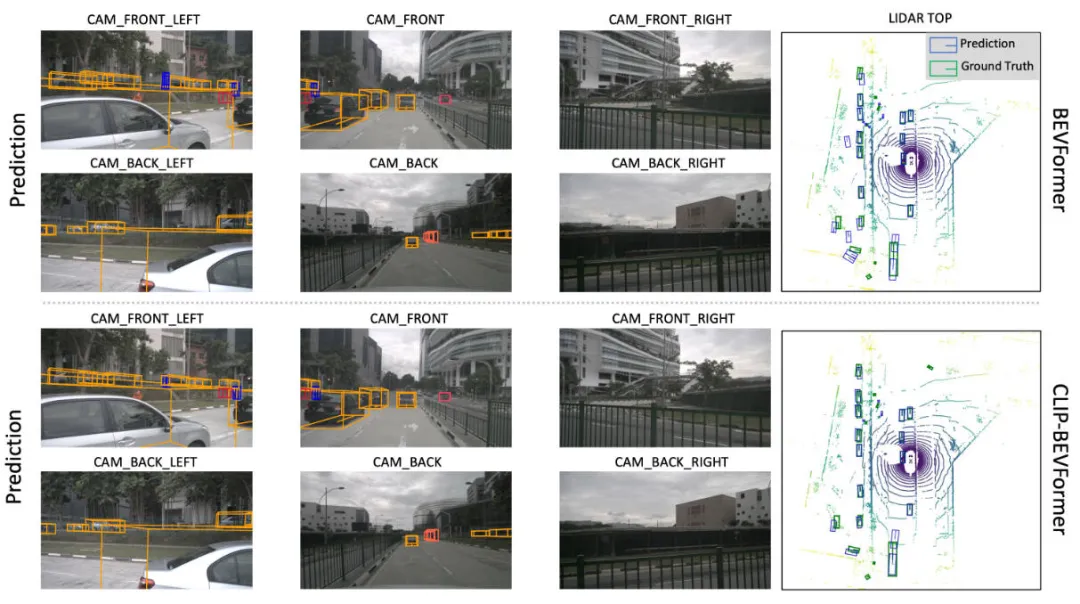

The following figure compares the perception results of CLIP-BEVFormer and BEVFormer visually. The results of CLIP-BEVFormer are closer to the ground-truth targets, which indicates the effectiveness of the proposed ground-truth BEV generation module and ground-truth query interaction module.

Visual comparison of the perception results of the proposed CLIP-BEVFormer algorithm model and the BEVFormer algorithm model

Conclusion

In this article, to address the lack of explicit supervision when generating BEV feature maps in the original BEVFormer and the uncertainty in the interaction between Object Queries and BEV features in the Decoder module, we proposed CLIP-BEVFormer and evaluated it in terms of 3D perception performance, long-tail object distribution, and robustness to sensor failure. Extensive experimental results demonstrate the effectiveness of the proposed model.

The above is the detailed content of CLIP-BEVFormer: Explicitly supervise the BEVFormer structure to improve long-tail detection performance. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

Choose camera or lidar? A recent review on achieving robust 3D object detection

Jan 26, 2024 am 11:18 AM

Choose camera or lidar? A recent review on achieving robust 3D object detection

Jan 26, 2024 am 11:18 AM

0.Written in front&& Personal understanding that autonomous driving systems rely on advanced perception, decision-making and control technologies, by using various sensors (such as cameras, lidar, radar, etc.) to perceive the surrounding environment, and using algorithms and models for real-time analysis and decision-making. This enables vehicles to recognize road signs, detect and track other vehicles, predict pedestrian behavior, etc., thereby safely operating and adapting to complex traffic environments. This technology is currently attracting widespread attention and is considered an important development area in the future of transportation. one. But what makes autonomous driving difficult is figuring out how to make the car understand what's going on around it. This requires that the three-dimensional object detection algorithm in the autonomous driving system can accurately perceive and describe objects in the surrounding environment, including their locations,

CLIP-BEVFormer: Explicitly supervise the BEVFormer structure to improve long-tail detection performance

Mar 26, 2024 pm 12:41 PM

CLIP-BEVFormer: Explicitly supervise the BEVFormer structure to improve long-tail detection performance

Mar 26, 2024 pm 12:41 PM

Written above & the author’s personal understanding: At present, in the entire autonomous driving system, the perception module plays a vital role. The autonomous vehicle driving on the road can only obtain accurate perception results through the perception module. The downstream regulation and control module in the autonomous driving system makes timely and correct judgments and behavioral decisions. Currently, cars with autonomous driving functions are usually equipped with a variety of data information sensors including surround-view camera sensors, lidar sensors, and millimeter-wave radar sensors to collect information in different modalities to achieve accurate perception tasks. The BEV perception algorithm based on pure vision is favored by the industry because of its low hardware cost and easy deployment, and its output results can be easily applied to various downstream tasks.

Implementing Machine Learning Algorithms in C++: Common Challenges and Solutions

Jun 03, 2024 pm 01:25 PM

Implementing Machine Learning Algorithms in C++: Common Challenges and Solutions

Jun 03, 2024 pm 01:25 PM

Common challenges faced by machine learning algorithms in C++ include memory management, multi-threading, performance optimization, and maintainability. Solutions include using smart pointers, modern threading libraries, SIMD instructions and third-party libraries, as well as following coding style guidelines and using automation tools. Practical cases show how to use the Eigen library to implement linear regression algorithms, effectively manage memory and use high-performance matrix operations.

Explore the underlying principles and algorithm selection of the C++sort function

Apr 02, 2024 pm 05:36 PM

Explore the underlying principles and algorithm selection of the C++sort function

Apr 02, 2024 pm 05:36 PM

The bottom layer of the C++sort function uses merge sort, its complexity is O(nlogn), and provides different sorting algorithm choices, including quick sort, heap sort and stable sort.

The latest from Oxford University! Mickey: 2D image matching in 3D SOTA! (CVPR\'24)

Apr 23, 2024 pm 01:20 PM

The latest from Oxford University! Mickey: 2D image matching in 3D SOTA! (CVPR\'24)

Apr 23, 2024 pm 01:20 PM

Project link written in front: https://nianticlabs.github.io/mickey/ Given two pictures, the camera pose between them can be estimated by establishing the correspondence between the pictures. Typically, these correspondences are 2D to 2D, and our estimated poses are scale-indeterminate. Some applications, such as instant augmented reality anytime, anywhere, require pose estimation of scale metrics, so they rely on external depth estimators to recover scale. This paper proposes MicKey, a keypoint matching process capable of predicting metric correspondences in 3D camera space. By learning 3D coordinate matching across images, we are able to infer metric relative

Can artificial intelligence predict crime? Explore CrimeGPT's capabilities

Mar 22, 2024 pm 10:10 PM

Can artificial intelligence predict crime? Explore CrimeGPT's capabilities

Mar 22, 2024 pm 10:10 PM

The convergence of artificial intelligence (AI) and law enforcement opens up new possibilities for crime prevention and detection. The predictive capabilities of artificial intelligence are widely used in systems such as CrimeGPT (Crime Prediction Technology) to predict criminal activities. This article explores the potential of artificial intelligence in crime prediction, its current applications, the challenges it faces, and the possible ethical implications of the technology. Artificial Intelligence and Crime Prediction: The Basics CrimeGPT uses machine learning algorithms to analyze large data sets, identifying patterns that can predict where and when crimes are likely to occur. These data sets include historical crime statistics, demographic information, economic indicators, weather patterns, and more. By identifying trends that human analysts might miss, artificial intelligence can empower law enforcement agencies

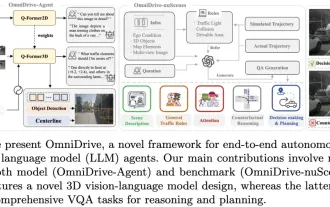

LLM is all done! OmniDrive: Integrating 3D perception and reasoning planning (NVIDIA's latest)

May 09, 2024 pm 04:55 PM

LLM is all done! OmniDrive: Integrating 3D perception and reasoning planning (NVIDIA's latest)

May 09, 2024 pm 04:55 PM

Written above & the author’s personal understanding: This paper is dedicated to solving the key challenges of current multi-modal large language models (MLLMs) in autonomous driving applications, that is, the problem of extending MLLMs from 2D understanding to 3D space. This expansion is particularly important as autonomous vehicles (AVs) need to make accurate decisions about 3D environments. 3D spatial understanding is critical for AVs because it directly impacts the vehicle’s ability to make informed decisions, predict future states, and interact safely with the environment. Current multi-modal large language models (such as LLaVA-1.5) can often only handle lower resolution image inputs (e.g.) due to resolution limitations of the visual encoder, limitations of LLM sequence length. However, autonomous driving applications require

Improved detection algorithm: for target detection in high-resolution optical remote sensing images

Jun 06, 2024 pm 12:33 PM

Improved detection algorithm: for target detection in high-resolution optical remote sensing images

Jun 06, 2024 pm 12:33 PM

01 Outlook Summary Currently, it is difficult to achieve an appropriate balance between detection efficiency and detection results. We have developed an enhanced YOLOv5 algorithm for target detection in high-resolution optical remote sensing images, using multi-layer feature pyramids, multi-detection head strategies and hybrid attention modules to improve the effect of the target detection network in optical remote sensing images. According to the SIMD data set, the mAP of the new algorithm is 2.2% better than YOLOv5 and 8.48% better than YOLOX, achieving a better balance between detection results and speed. 02 Background & Motivation With the rapid development of remote sensing technology, high-resolution optical remote sensing images have been used to describe many objects on the earth’s surface, including aircraft, cars, buildings, etc. Object detection in the interpretation of remote sensing images