A review of foundation models and the development path of robotics

Robots are a technology with seemingly unlimited possibilities, especially when combined with intelligent technology. Large models, which have recently enabled revolutionary applications, are expected to become the intelligent hub of robots, helping them perceive and understand the world, make decisions, and plan.

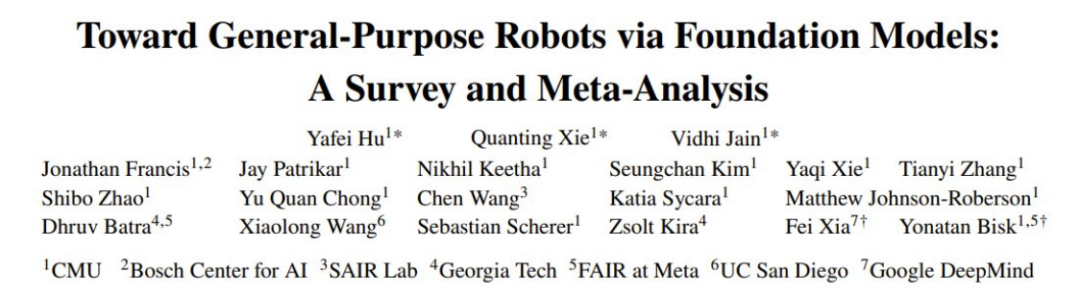

Recently, a joint team led by CMU's Yonatan Bisk and Google DeepMind's Fei Xia released a review report on the application and development of foundation models in robotics. The first author of the report is Yafei Hu, a fourth-year doctoral student at CMU whose research focuses on the intersection of robotics and artificial intelligence. His collaborator Quanting Xie focuses on exploring embodied intelligence through foundation models.

Paper address: https://arxiv.org/pdf/2312.08782.pdf

Developing robots that can autonomously adapt to different environments has always been a dream of mankind, but it is a long and challenging road. Previously, robot perception systems built on traditional deep learning methods usually required large amounts of labeled data to train supervised learning models, and labeling large datasets through crowdsourcing was very expensive.

In addition, because classic supervised learning methods generalize poorly, deploying the trained models to specific scenarios or tasks usually requires carefully designed domain adaptation techniques, which in turn often require further data collection and annotation. Similarly, classical robot planning and control approaches often require careful modeling of the world, the agent's own dynamics, and/or the dynamics of other agents. These models are usually built for each specific environment or task, and when conditions change, the model must be rebuilt. This shows that the transferability of classic models is also limited.

In fact, for many use cases, building effective models is either too expensive or simply impossible. Although deep (reinforcement) learning-based motion planning and control methods help alleviate these problems, they still suffer from distribution shift and reduced generalization ability.

Although there are many challenges in developing general-purpose robotic systems, the fields of natural language processing (NLP) and computer vision (CV) have made rapid progress recently, including large language models (LLMs) for NLP, diffusion models for high-fidelity image generation, and powerful vision models and vision-language models for CV tasks such as zero-shot and few-shot generalization.

The so-called "foundation model" is in fact a large pre-trained model (LPTM). These models have powerful vision and language capabilities. Recently, they have also been applied in robotics and are expected to give robotic systems open-world perception, task planning, and even motion control capabilities. In addition to using existing vision and/or language foundation models in robotics, some research teams are developing foundation models for robot tasks, such as action models for manipulation or motion planning models for navigation. These robotics foundation models demonstrate strong generalization capabilities and can adapt to different tasks and even different embodiments. Other researchers use vision/language foundation models directly for robot tasks, which shows the possibility of integrating different robot modules into a single unified model.

Although vision and language foundation models have promising prospects in robotics, and new robotics foundation models are also being developed, many challenges in the field remain difficult to solve.

From the perspective of actual deployment, models are often not reproducible, cannot generalize to different robot forms (multi-embodiment generalization), or struggle to accurately understand which behaviors in the environment are feasible (or acceptable). In addition, most studies use Transformer-based architectures and focus on semantic perception of objects and scenes, task-level planning, and control. Other parts of the robot system are less studied, such as foundation models for world dynamics or foundation models that can perform symbolic reasoning. These require cross-domain generalization capabilities.

Finally, we also need more large-scale real-world data and high-fidelity simulators that support diverse robotic tasks.

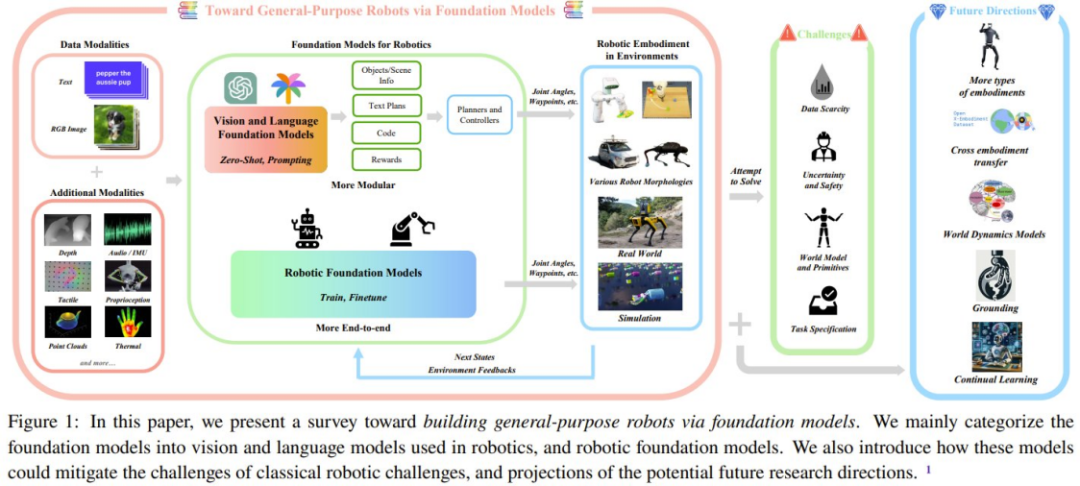

This review paper summarizes the foundation models used in robotics, with the goal of understanding how they can help solve or alleviate the field's core challenges.

In this review, the term "foundation models" covers two aspects of robotics: (1) existing models, mainly vision and language models, applied mainly through zero-shot and in-context learning; (2) foundation models developed and applied specifically to solve robot tasks using robot-generated data. The researchers summarize the methods used in the relevant papers and conduct a meta-analysis of their experimental results.

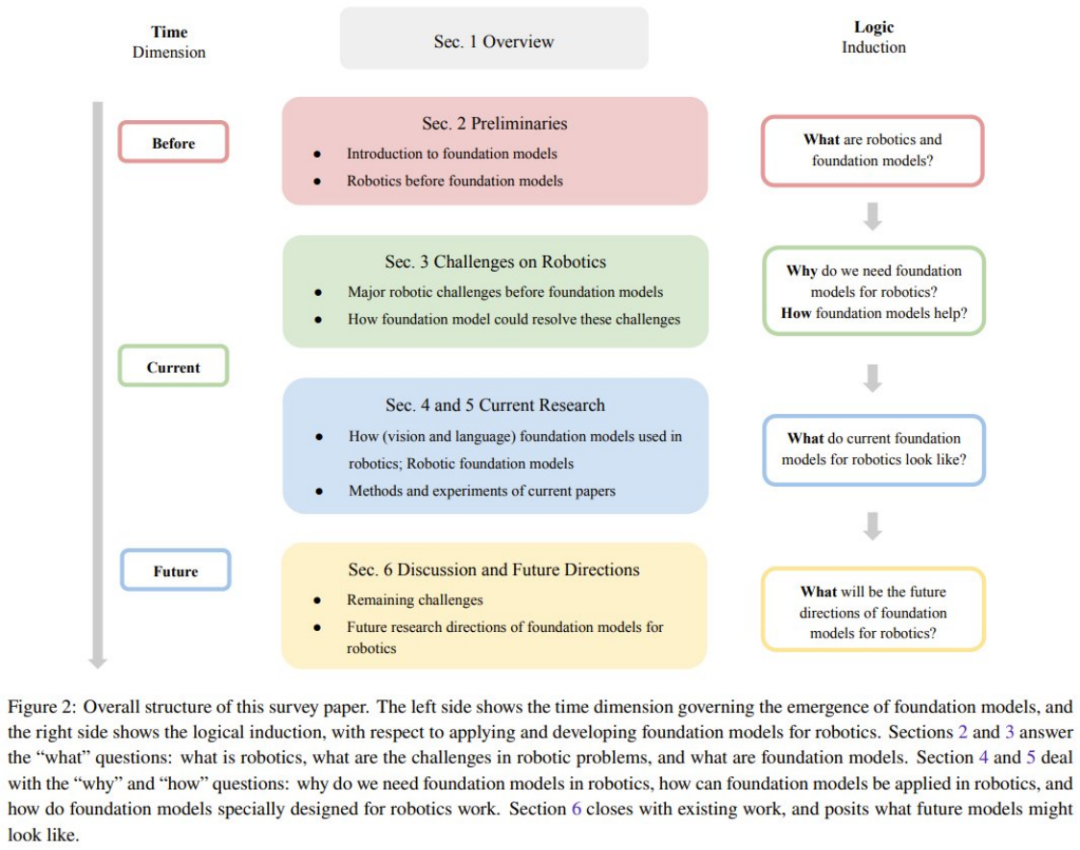

Overall structure of the review

Preliminary knowledge

To help readers better understand the content of this review, the team first provides a section of preliminary knowledge.

They first introduce the basics of robotics and the current state of the art. The main focus here is on methods used in robotics before the era of foundation models. Only a brief explanation is given here; please refer to the original paper for details.

- The main components of a robot can be divided into three parts: perception, decision-making and planning, and action generation. The team divides robot perception into passive perception, active perception, and state estimation.

- In the robot decision-making and planning section, the researchers introduce classic planning methods and learning-based planning methods.

- For robot action generation, there are likewise classic control methods and learning-based control methods.
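The three-part decomposition above can be illustrated with a toy pipeline. This is only a minimal sketch under simplifying assumptions, not the architecture of any system in the review: the names `WorldState`, `perceive`, `plan`, `act`, and `run_pipeline` are hypothetical, the "sensor" is a dictionary, the planner is straight-line interpolation, and the controller is a proportional rule.

```python
from dataclasses import dataclass
from typing import List, Tuple

Position = Tuple[float, float]


@dataclass
class WorldState:
    """Output of perception: estimated robot and goal positions (hypothetical type)."""
    robot: Position
    goal: Position


def perceive(raw_observation: dict) -> WorldState:
    # Perception / state estimation: turn raw readings into a state estimate.
    return WorldState(robot=raw_observation["robot_xy"], goal=raw_observation["goal_xy"])


def plan(state: WorldState, steps: int = 4) -> List[Position]:
    # Decision-making and planning: evenly spaced waypoints on a straight line.
    (x0, y0), (x1, y1) = state.robot, state.goal
    return [
        (x0 + (x1 - x0) * k / steps, y0 + (y1 - y0) * k / steps)
        for k in range(1, steps + 1)
    ]


def act(state: WorldState, waypoint: Position, gain: float = 1.0) -> Position:
    # Action generation: proportional controller producing a velocity command.
    return (gain * (waypoint[0] - state.robot[0]), gain * (waypoint[1] - state.robot[1]))


def run_pipeline(raw_observation: dict) -> Position:
    # One pass through perception -> planning -> action generation.
    state = perceive(raw_observation)
    waypoints = plan(state)
    return act(state, waypoints[0])
```

Each stage here is hand-written; in a learning-based system, any of the three functions could in principle be replaced by a trained model while the interfaces between them stay the same.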

Next, the team introduces foundation models, focusing mainly on the fields of NLP and CV. The models covered include LLMs, VLMs, vision foundation models, and text-conditioned image generation models.

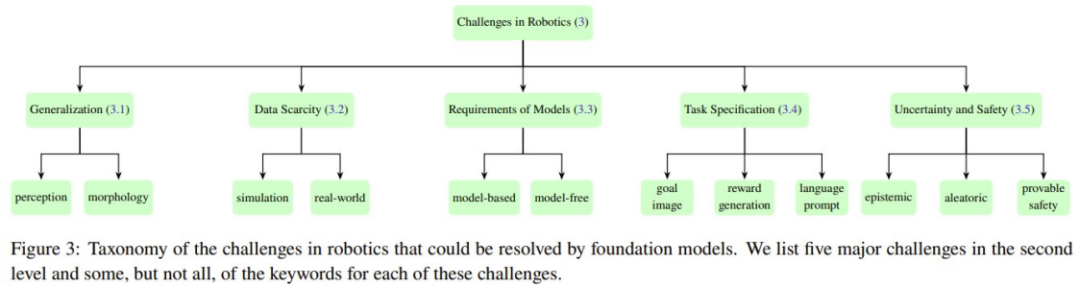

Challenges facing the field of robotics

This section summarizes five core challenges faced by the different modules of a typical robotic system. Figure 3 shows the classification of these five challenges.

Generalization

Robot systems often have difficulty accurately sensing and understanding their environment. They also lack the ability to generalize what they learn on one task to another, which further limits their usefulness in the real world. In addition, because robot hardware differs, it is difficult to transfer a model to robots of different forms. Using foundation models for robots can partially solve the generalization problem, but further questions, such as generalization across robot forms, remain open.

Data Scarcity

To develop reliable robot models, large-scale, high-quality data is crucial. Efforts are already underway to collect large-scale datasets from the real world, including autonomous driving data and robot manipulation trajectories, but collecting robot data from human demonstrations is expensive. The diversity of tasks and environments further complicates the collection of sufficiently broad data, and collecting data in the real world also raises safety concerns. As a result, robot data remains very hard to collect at scale, and matching the Internet-scale image and text data used to train foundation models is harder still.

To address these challenges, many research efforts have attempted to generate synthetic data in simulated environments. These environments can provide a very realistic virtual world, allowing robots to learn and apply their skills in situations close to real-life scenarios. However, simulated environments have limitations, especially in object diversity, which make it difficult to apply the learned skills directly to real-world situations.

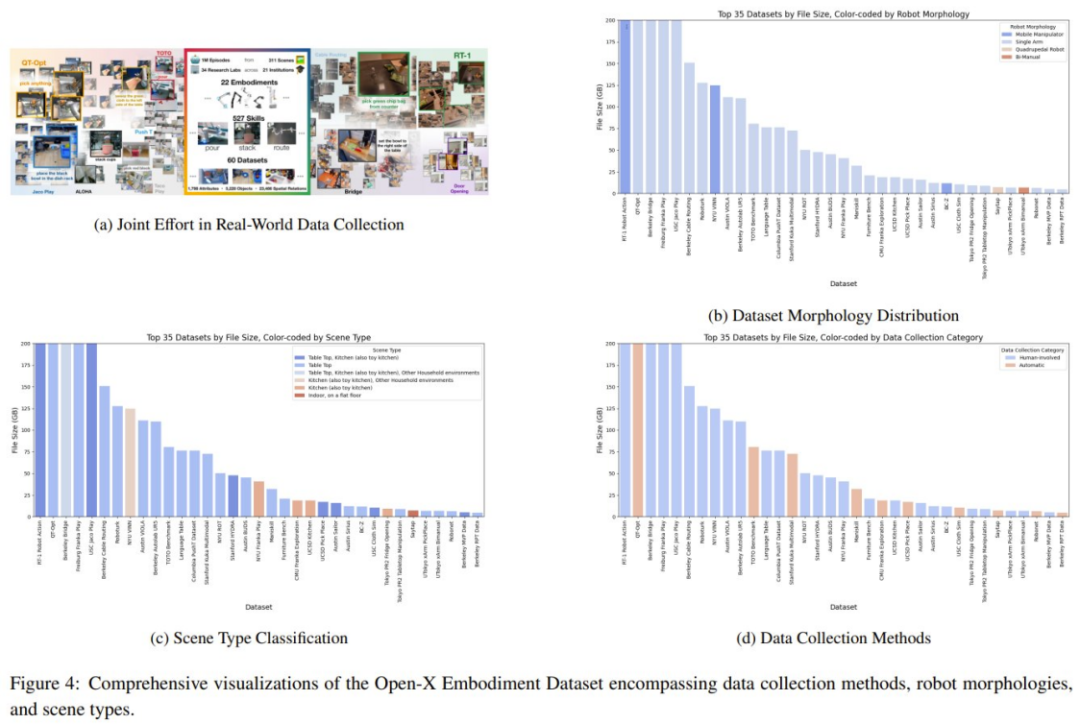

A promising approach is collaborative data collection, which pools data from different laboratory environments and robot types, as shown in Figure 4a. However, when the team took an in-depth look at the Open-X Embodiment Dataset, they found some limitations in the availability of data types.

Model and primitive requirements

Classical planning and control methods usually require carefully designed environment and robot models. Previous learning-based methods (such as imitation learning and reinforcement learning) trained policies in an end-to-end manner, that is, obtaining control outputs directly based on sensory inputs, thus avoiding the need to build and use models. These methods can partially solve the problem of relying on explicit models, but they are often difficult to generalize to different environments and tasks.

Two problems need to be solved: (1) How can we learn policies that are model-agnostic and generalize well? (2) How can we learn good world models so that classic model-based methods can be applied?

Task specification

To achieve a general-purpose agent, a key challenge is to understand the task specification and ground it in the robot's current understanding of the world. Typically, these task specifications are provided by a user who has only a limited understanding of the robot's cognitive and physical limits. This raises many questions, including what best practices can be offered for writing task specifications, and whether drafting them is natural and simple enough. It is also challenging to understand and resolve ambiguities in a task specification based on the robot's understanding of its own capabilities.

Uncertainty and Safety

To deploy robots in the real world, a key challenge is dealing with the uncertainty inherent in the environment and in task specifications. Depending on its source, uncertainty can be divided into epistemic uncertainty (caused by lack of knowledge) and aleatoric uncertainty (noise inherent in the environment).
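One common way to make the epistemic/aleatoric distinction concrete is an entropy-based decomposition over an ensemble of classifiers. The sketch below is an illustration of that general technique, not a method attributed to the review; the function names `entropy` and `decompose_uncertainty` are hypothetical. Total uncertainty is the entropy of the averaged prediction, the aleatoric part is the average entropy of the individual members, and the epistemic part is the difference, which grows when the members disagree.

```python
import math
from typing import List, Sequence, Tuple


def entropy(p: Sequence[float]) -> float:
    # Shannon entropy in nats; zero-probability classes contribute nothing.
    return -sum(q * math.log(q) for q in p if q > 0.0)


def decompose_uncertainty(member_probs: List[Sequence[float]]) -> Tuple[float, float, float]:
    """Split predictive uncertainty of an ensemble into (total, aleatoric, epistemic).

    member_probs holds one class-probability vector per ensemble member.
    """
    n = len(member_probs)
    num_classes = len(member_probs[0])
    # Ensemble prediction: average the members' probabilities per class.
    mean = [sum(p[c] for p in member_probs) / n for c in range(num_classes)]
    total = entropy(mean)
    # Aleatoric part: uncertainty each member reports on its own, averaged.
    aleatoric = sum(entropy(p) for p in member_probs) / n
    # Epistemic part: what remains, driven by disagreement between members.
    epistemic = total - aleatoric
    return total, aleatoric, epistemic
```

If every member predicts `[0.5, 0.5]`, all of the uncertainty is aleatoric; if one member predicts `[1.0, 0.0]` and another `[0.0, 1.0]`, the same total uncertainty is entirely epistemic.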

The cost of uncertainty quantification (UQ) may be so high that research and applications become unsustainable, and it may also prevent downstream tasks from being solved optimally. Given the massively over-parameterized nature of foundation models, achieving scalability without sacrificing generalization performance requires UQ methods that preserve the training scheme while changing the underlying architecture as little as possible. Designing robots that can provide reliable confidence estimates of their own behavior and, in turn, intelligently request clearly stated feedback remains an unsolved challenge.

Despite recent progress, ensuring that robots can learn from experience to fine-tune their policies while staying safe in new environments remains challenging.

Overview of Current Research Methods

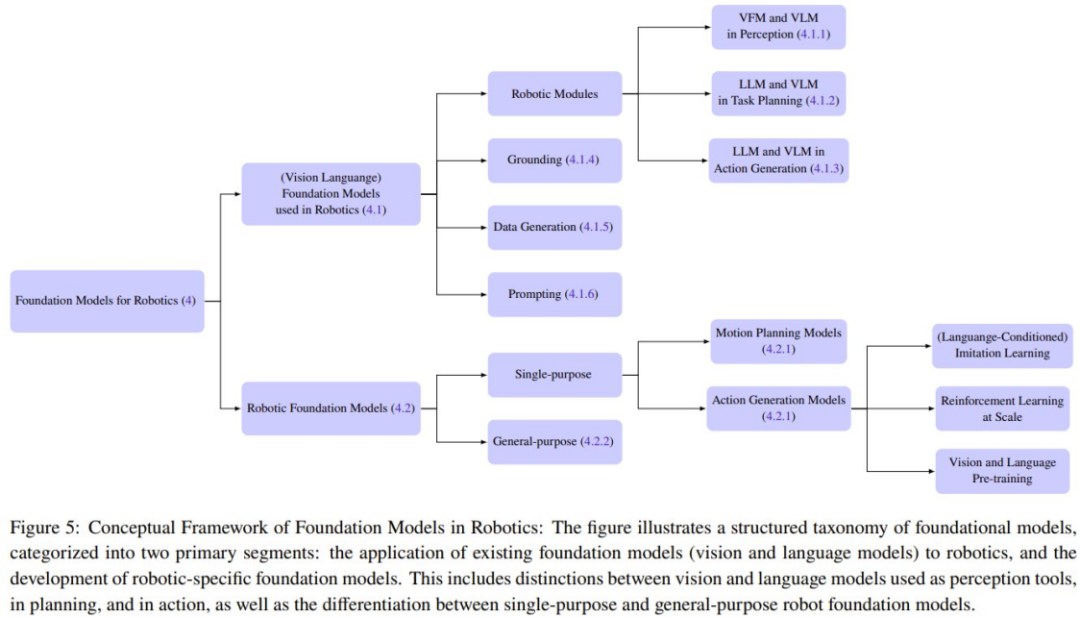

The review also summarizes current research methods for foundation models in robotics. The team divides them into two categories: foundation models used in robotics, and robotics foundation models (RFMs).

Foundation models used in robotics refers to applying vision and language foundation models to robots in a zero-shot manner, without additional fine-tuning or training. Robotics foundation models, by contrast, can be warm-started from vision-language pre-trained initialization, or trained directly on robot datasets.

Detailed classification

Foundation models used in robotics

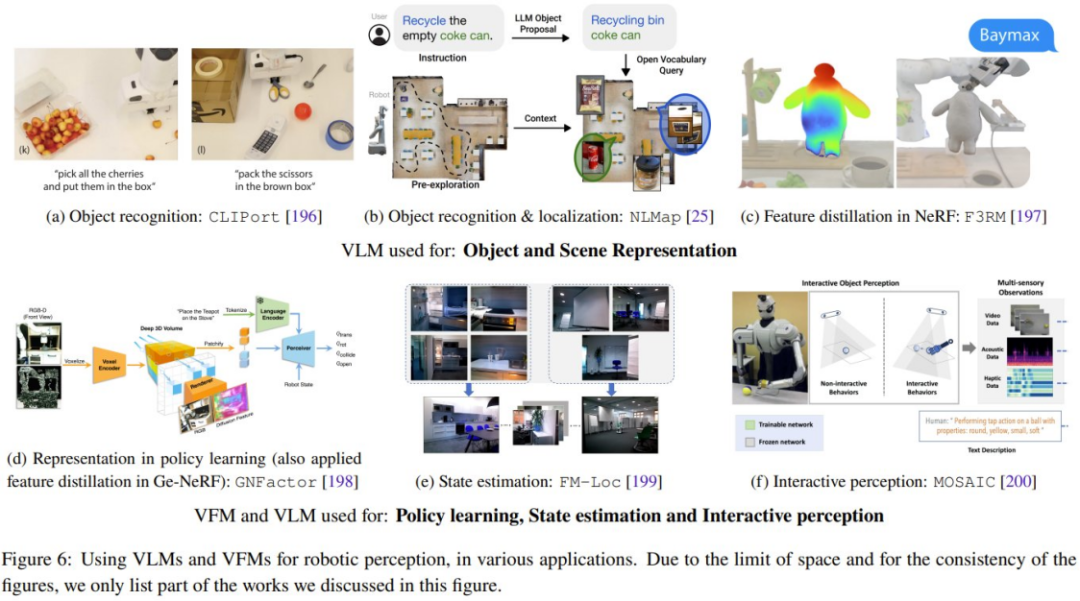

This part focuses on applying vision and language foundation models to robotics in a zero-shot manner. It mainly covers deploying VLMs zero-shot in robot perception applications, and applying the in-context learning capabilities of LLMs to task-level and motion-level planning and to action generation. Figure 6 shows some representative work.
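As a rough illustration of in-context learning for task-level planning, the sketch below builds a few-shot prompt from example tasks and then filters a model's reply down to steps that use known skills. It is a sketch under stated assumptions rather than any specific system from the review: `build_prompt`, `parse_plan`, the skill names, and `fake_llm` are all hypothetical, and `fake_llm` is a hard-coded stand-in where a real LLM call would go.

```python
from typing import List, Sequence, Tuple

Example = Tuple[str, List[str]]  # (task description, ordered plan steps)


def build_prompt(skills: Sequence[str], examples: Sequence[Example], instruction: str) -> str:
    # Few-shot prompt: list the skills, show solved examples, end with the new task.
    lines = ["Available skills: " + ", ".join(skills), ""]
    for task, steps in examples:
        lines.append(f"Task: {task}")
        lines.extend(f"{i}. {step}" for i, step in enumerate(steps, start=1))
        lines.append("")
    lines.append(f"Task: {instruction}")
    return "\n".join(lines)


def parse_plan(completion: str, skills: Sequence[str]) -> List[str]:
    # Keep only numbered steps whose skill name is one the robot actually has.
    plan = []
    for line in completion.splitlines():
        _, sep, step = line.strip().partition(". ")
        if sep and step.split("(")[0] in skills:
            plan.append(step)
    return plan


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a language model; returns a fixed reply.
    return "1. pick(apple)\n2. place(bowl)\n3. fly(moon)"


SKILLS = ["pick", "place"]
EXAMPLES = [("put the cup on the shelf", ["pick(cup)", "place(shelf)"])]
prompt = build_prompt(SKILLS, EXAMPLES, "put the apple in the bowl")
plan = parse_plan(fake_llm(prompt), SKILLS)
```

Filtering the reply against the skill list is one simple way to keep a plan within what the robot can execute; here it drops the unsupported `fly(moon)` step.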

Robotics foundation models (RFMs)

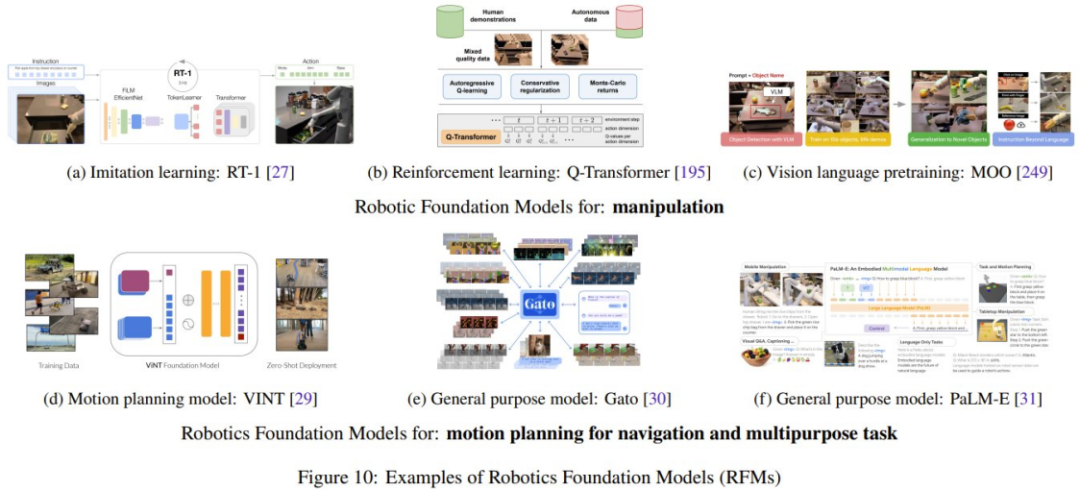

As robotics datasets containing state-action pairs from real robots grow, success for the robotics foundation model (RFM) category is also becoming more likely. These models are characterized by training on robot data to solve robot tasks.

The research team summarizes the different types of RFM. The first is RFMs that perform specific tasks within a single robot module, also known as single-purpose robotics foundation models: for example, RFMs that generate low-level actions to control a robot, or models that generate higher-level motion plans. The review also introduces RFMs that can perform tasks across multiple robot modules, that is, general-purpose models that can perform perception, control, and even non-robotic tasks.

What role do foundation models play in addressing robotics challenges?

The sections above list the five major challenges facing robotics. Here we describe how foundation models can help address them.

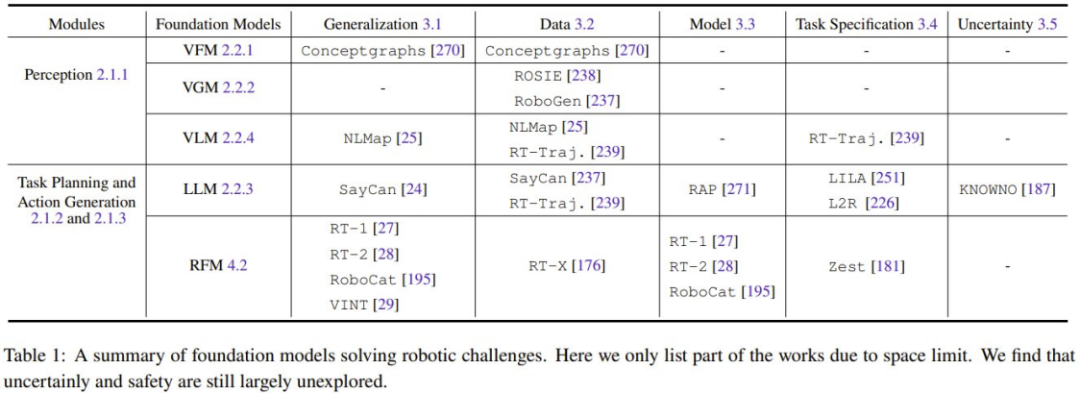

All foundation models related to visual information (such as VFM, VLM and VGM) can be used in the robot's perception module. LLMs, on the other hand, are more versatile and can be used for planning and control. Robotics foundation models (RFMs) are usually used in the planning and action generation modules. Table 1 summarizes the foundation models for addressing different robotics challenges.

As can be seen from the table, all foundation models generalize well across the tasks of different robot modules. In particular, LLMs perform well in task specification. RFMs, on the other hand, are good at addressing the challenge of dynamics models, since most RFMs are model-free methods. For robot perception, the generalization and model challenges are interrelated: if the perception model already generalizes well, there is no need to collect more data for domain adaptation or additional fine-tuning.

In addition, research on safety challenges is lacking; this will be an important future research direction.

Overview of Current Experiments and Evaluations

This section summarizes the current research results on datasets, benchmarks, and experiments.

Datasets and Benchmarks

Relying solely on knowledge learned from language and vision datasets has limitations. As some research results show, concepts such as friction and weight cannot easily be learned through these modalities alone.

Therefore, to enable robotic agents to better understand the world, the research community is not only adapting foundation models from the language and vision domains, but also developing large and diverse multimodal robot datasets for training and fine-tuning these models.

Currently these efforts fall into two main directions: collecting data from the real world, and collecting data in simulation and transferring it to the real world. Each direction has its advantages and disadvantages. Datasets collected from the real world include RoboNet, Bridge Dataset V1, Bridge-V2, Language-Table, RT-1, etc. Commonly used simulators include Habitat, AI2-THOR, MuJoCo, AirSim, Arrival Autonomous Racing Simulator, Isaac Gym, etc.

Meta-analysis of current methods

Another major contribution of this team is a meta-analysis of experiments in the papers mentioned in this review report, which can help clarify the following issues:

1. What tasks are people working on?

2. What data sets or simulators were used to train the model? What are the robot platforms used for testing?

3. What foundation models are used by the research community? How effective are they in solving the task?

4. Which foundation models are more commonly used among these methods?

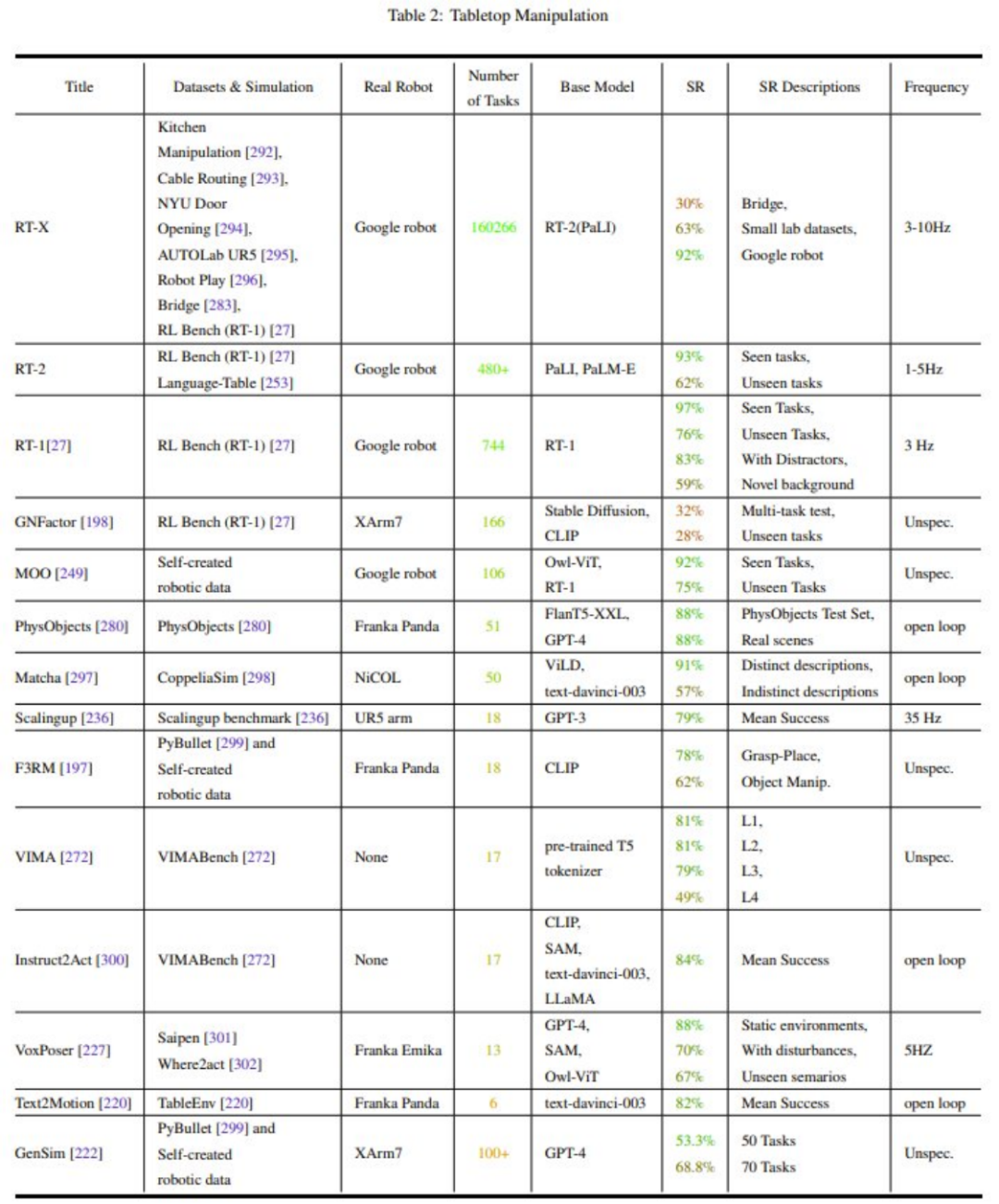

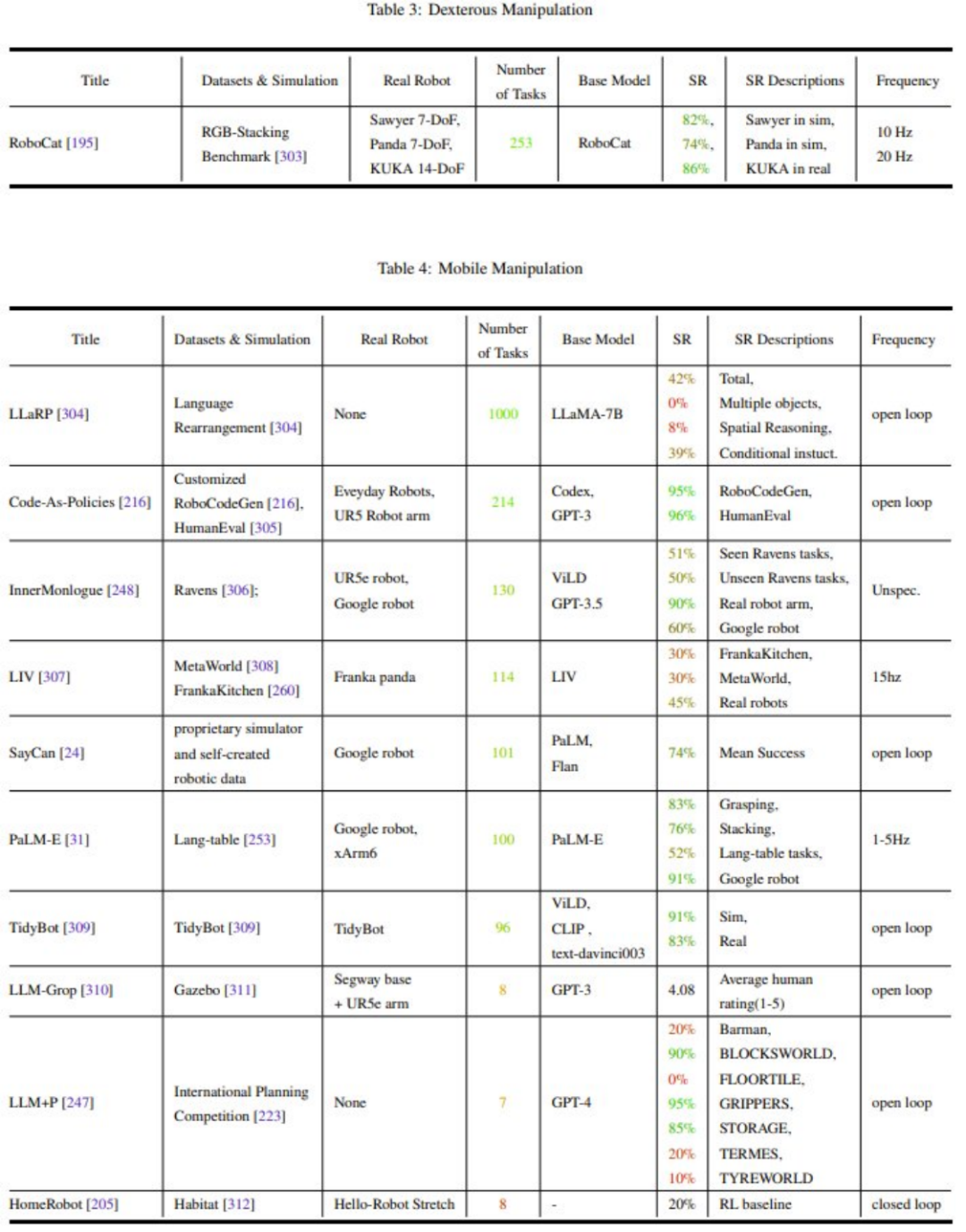

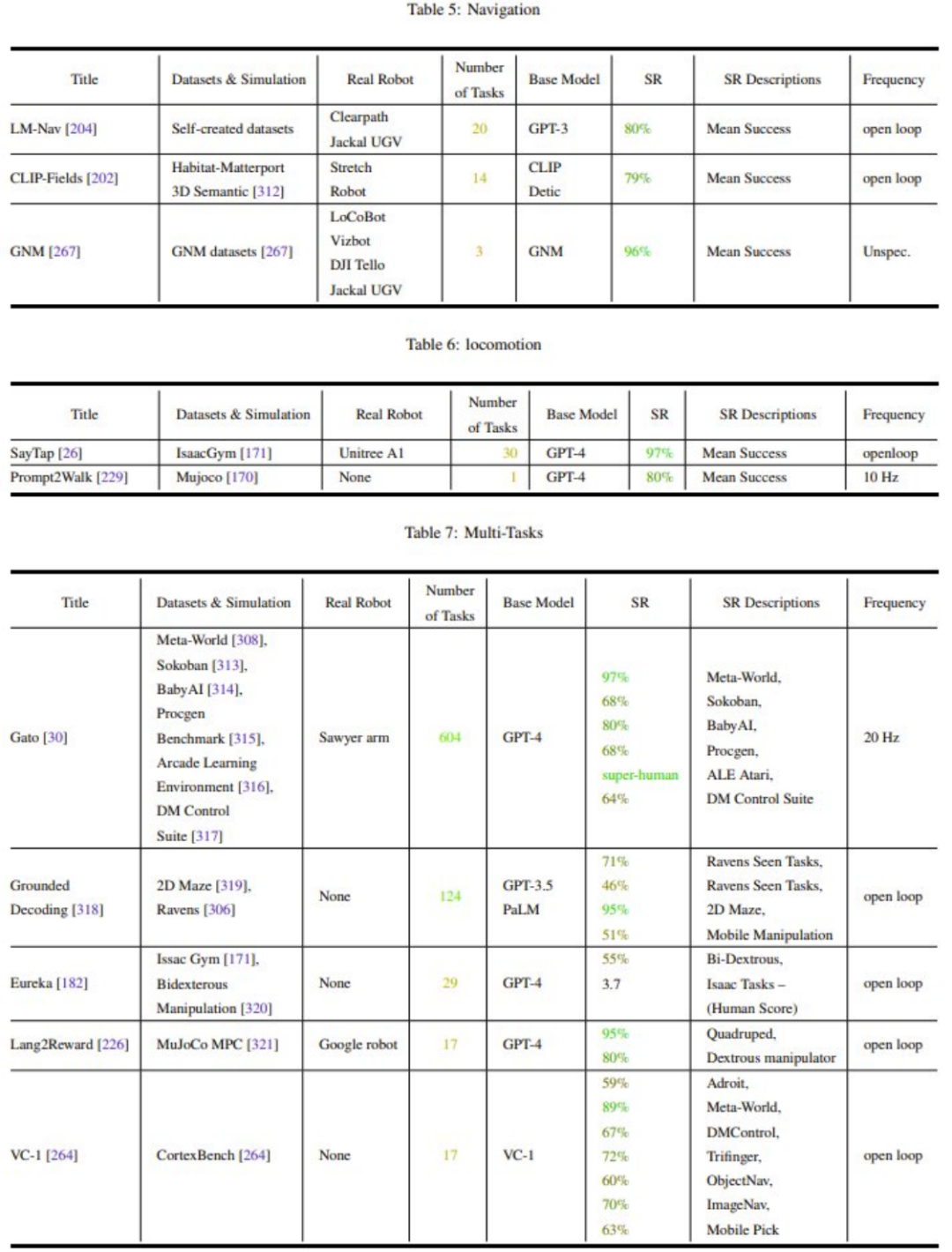

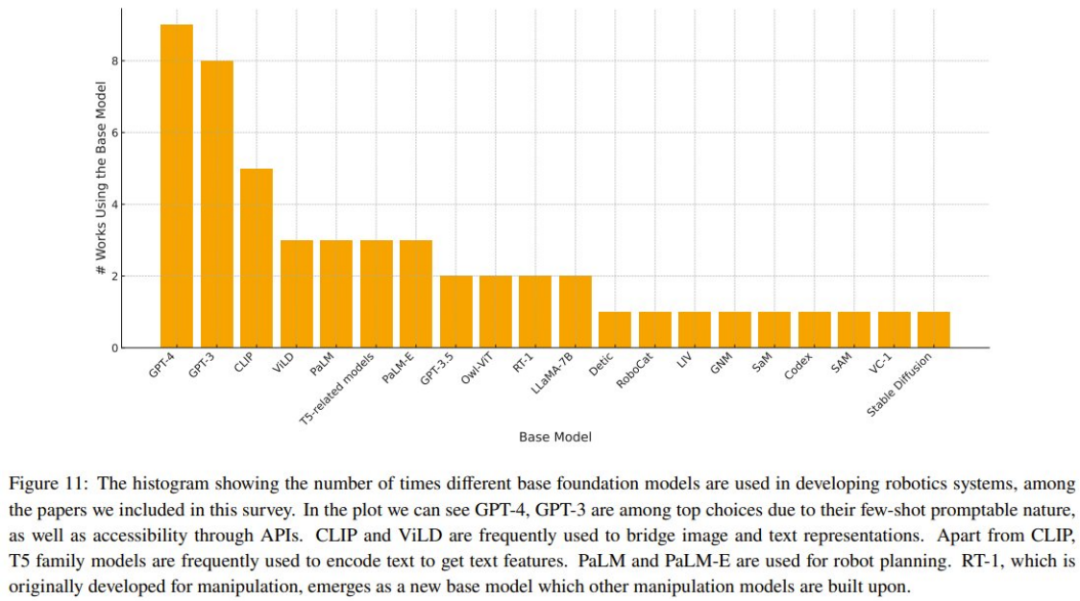

Tables 2-7 and Figure 11 show the analysis results. The main findings are:

- The research community's attention to manipulation tasks is unbalanced.
- Generalization and robustness need to be improved.
- Exploration of low-level control is very limited.
- The control frequency is too low.
- The lack of unified metrics and test platforms (simulation or hardware) makes comparison very difficult.

Discussion and future directions

The team also summarizes some challenges that still need to be solved and research directions worth discussing.
