Method for indoor frame estimation using panoramic visual self-attention model

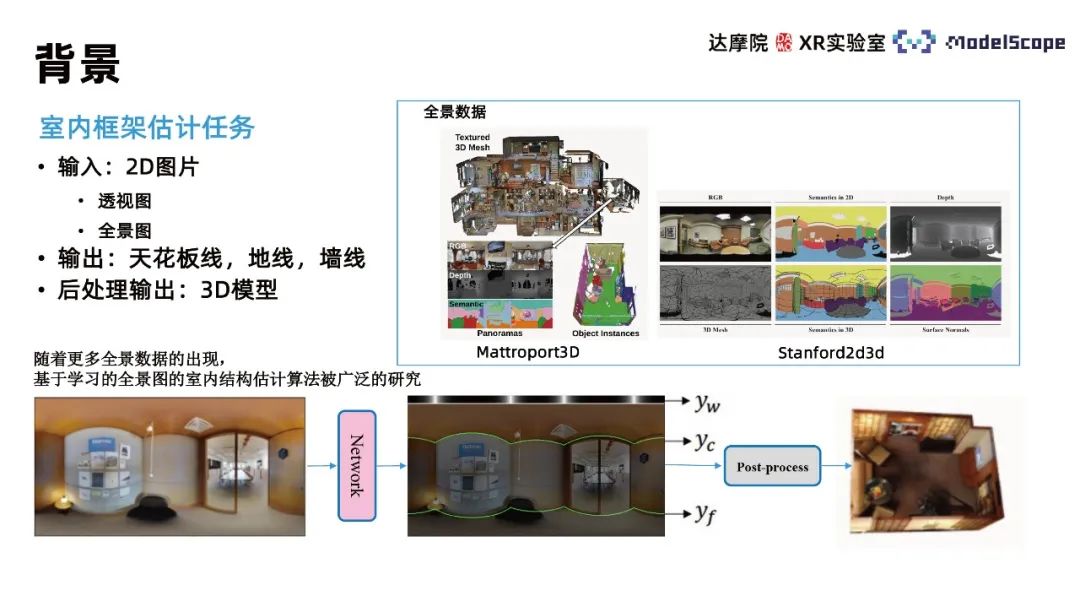

1. Research background

This method addresses indoor layout (frame) estimation: the task takes a 2D image as input and outputs a three-dimensional model of the scene the image depicts. Because directly outputting a 3D model is complex, the task is generally decomposed into predicting three kinds of lines in the 2D image (wall lines, ceiling lines, and ground lines) and then reconstructing the 3D model of the room from this line information through post-processing. The 3D model can later be used in applications such as indoor scene reproduction and VR house viewing. Unlike depth estimation, this approach recovers the spatial geometry from estimated wall lines. Its advantage is that the reconstructed walls are flatter; its disadvantage is that it cannot recover the geometry of detailed items such as sofas and chairs in indoor scenes.
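
As an illustration of the post-processing step, the sketch below projects one ground-line pixel of an equirectangular panorama onto the floor plan. It is not taken from the original method: the function name, the pixel-center convention, and the default camera height of 1.6 m are assumptions made for the example.

```python
import math

def floor_pixel_to_plan(u, v, width, height, camera_height=1.6):
    """Project a ground-line pixel (u, v) of an equirectangular panorama
    onto floor-plan coordinates, in the units of camera_height.

    Assumes the camera is level and the horizon sits at mid-height.
    """
    # Column -> longitude in [-pi, pi); row -> latitude in (-pi/2, pi/2).
    lon = ((u + 0.5) / width - 0.5) * 2.0 * math.pi
    lat = (0.5 - (v + 0.5) / height) * math.pi
    if lat >= 0:
        raise ValueError("a ground-line pixel must lie below the horizon")
    # Horizontal distance from the camera to the point on the floor.
    dist = camera_height / math.tan(-lat)
    return dist * math.sin(lon), dist * math.cos(lon)
```

Applying this to every column of a predicted ground line gives a floor-plan outline, which can then be extruded to the ceiling height to form a room model.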

Depending on the input image, methods can be divided into perspective-based and panorama-based approaches. Compared with perspective views, panoramas have a larger field of view and richer image information. As panoramic capture devices become more common, panoramic data is increasingly abundant, and many algorithms for indoor frame estimation from panoramic images have been studied.

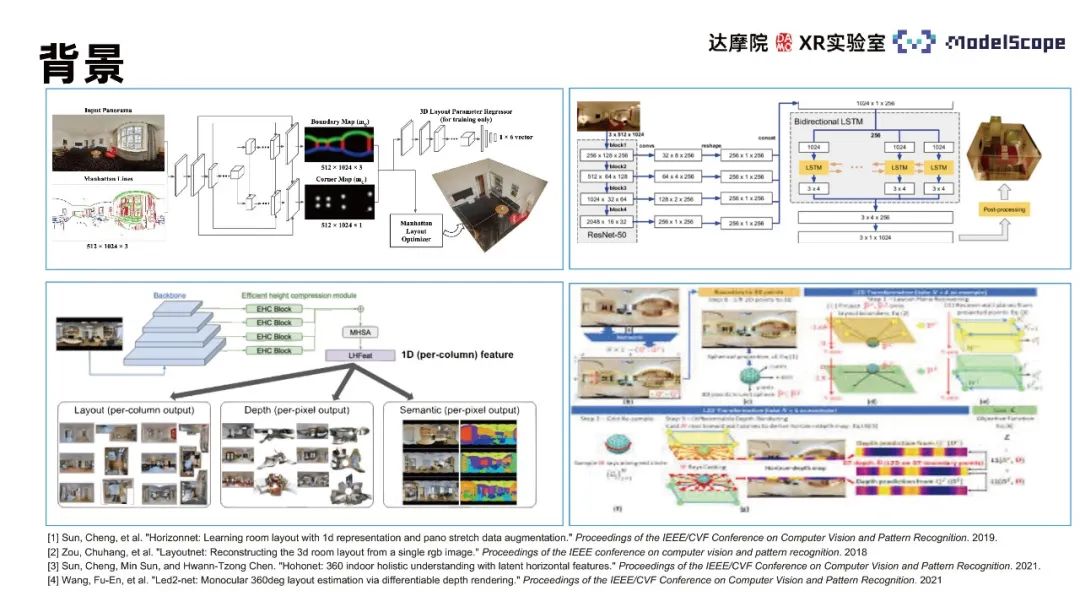

Relevant algorithms mainly include LayoutNet, HorizonNet, HohoNet, and Led2-Net. Most of these methods are based on convolutional neural networks. Their wall line predictions degrade in structurally complex locations, for example under noise interference or self-occlusion, producing discontinuous wall lines or wall lines at incorrect positions. Focusing only on local feature information leads to this type of error in wall line position estimation; the global information in the panorama is needed to take the position distribution of the entire wall line into account. CNNs perform better at extracting local features, while Transformers are better at capturing global information. The Transformer can therefore be applied to indoor frame estimation to improve task performance.

Because of its dependence on training data, applying a Transformer pre-trained on perspective images alone does not give ideal results for panoramic indoor frame estimation. The PanoViT model first maps the panorama to a feature space, uses the Transformer to learn the global information of the panorama in that feature space, and also takes the apparent structure information of the panorama into account to complete the indoor frame estimation task.

2. Method introduction and result display

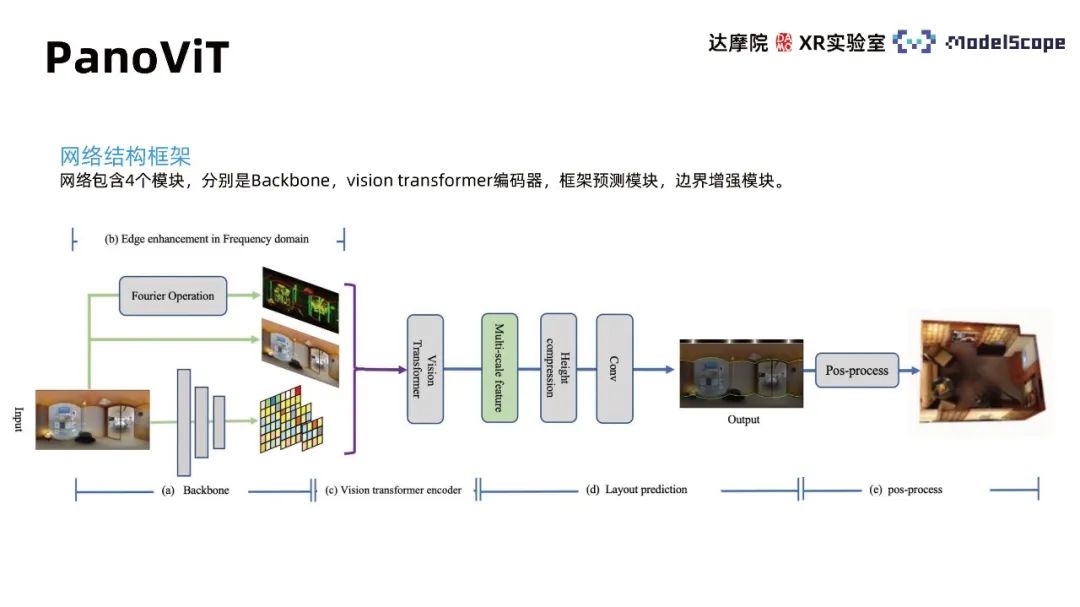

1. PanoViT

The network framework contains four modules: the Backbone, the vision transformer encoder, the frame prediction module, and the boundary enhancement module. The Backbone module maps the panorama to the feature space, the vision transformer encoder learns global correlations in the feature space, and the frame prediction module converts the features into wall line, ceiling line, and ground line information, from which post-processing can obtain the three-dimensional model of the room. The boundary enhancement module highlights the role of boundary information in the panoramic image for indoor frame estimation.

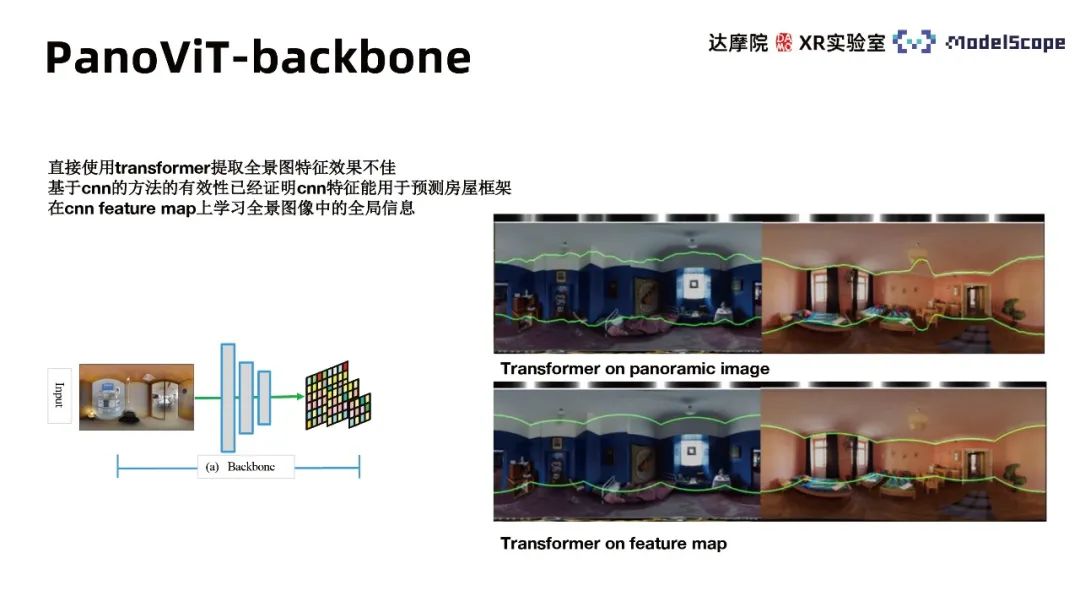

① Backbone module

Directly applying a transformer to the panorama to extract features does not work well, whereas CNN-based methods have proven effective, i.e. CNN features can be used to predict the room frame. Therefore, we use a CNN backbone to extract feature maps of the panorama at different scales and learn the global information of the panoramic image from these feature maps. Experimental results show that using the transformer in feature space is significantly better than applying it directly to the panorama.

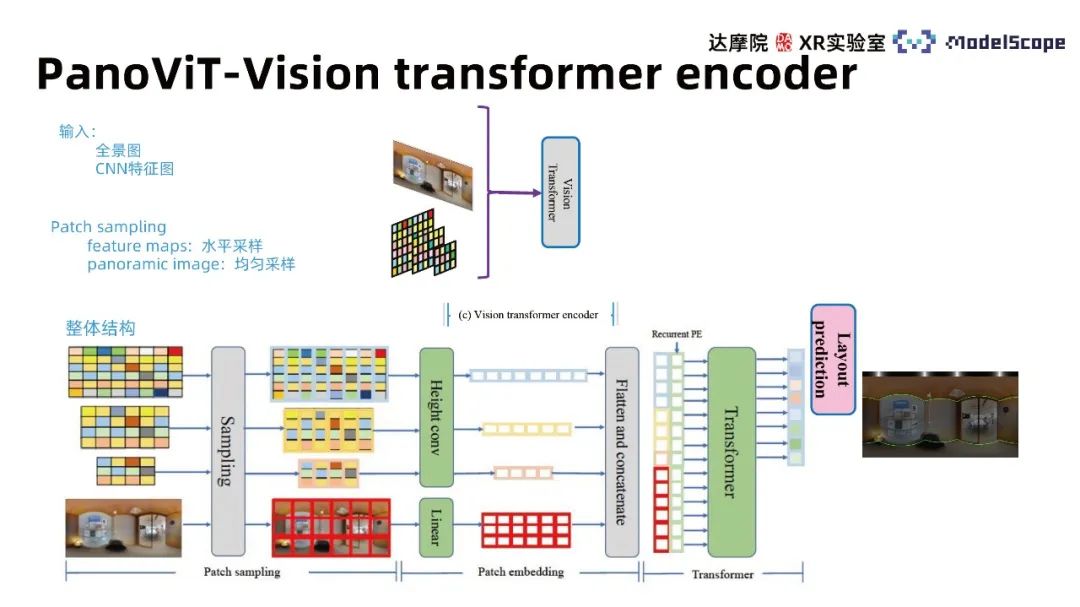

② Vision transformer encoder module

The Transformer architecture can be divided into three parts: patch sampling, patch embedding, and the transformer's multi-head attention. The input includes both the panoramic feature maps and the original image, and each uses a different patch sampling method: the original image is sampled uniformly, and the feature maps are sampled horizontally. HorizonNet concluded that horizontal features are more important in the wall line estimation task; following this conclusion, the feature maps are compressed in the vertical direction during embedding. The Recurrent PE method is used to combine features of different scales, which are then learned by the multi-head attention transformer to obtain a feature vector whose length equals the horizontal size of the original image. The corresponding wall line distributions are obtained through different decoder heads.
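
To make the horizontal sampling concrete, here is a minimal NumPy sketch that turns a feature map into one token per image column. It assumes the vertical axis is simply folded into the channel dimension; the actual compression in PanoViT may be learned (for example with convolutions), and the function name is hypothetical.

```python
import numpy as np

def columns_to_tokens(feature_map):
    """Turn a (C, H, W) feature map into W tokens of size C * H.

    Assumption for illustration: the vertical axis is folded into the
    channel axis, so each token describes one image column.
    """
    c, h, w = feature_map.shape
    # Move the width axis first, then flatten channels and rows together.
    return feature_map.transpose(2, 0, 1).reshape(w, c * h)
```

The resulting sequence has one entry per horizontal position, which matches the idea that each column of the panorama gets its own wall line prediction.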

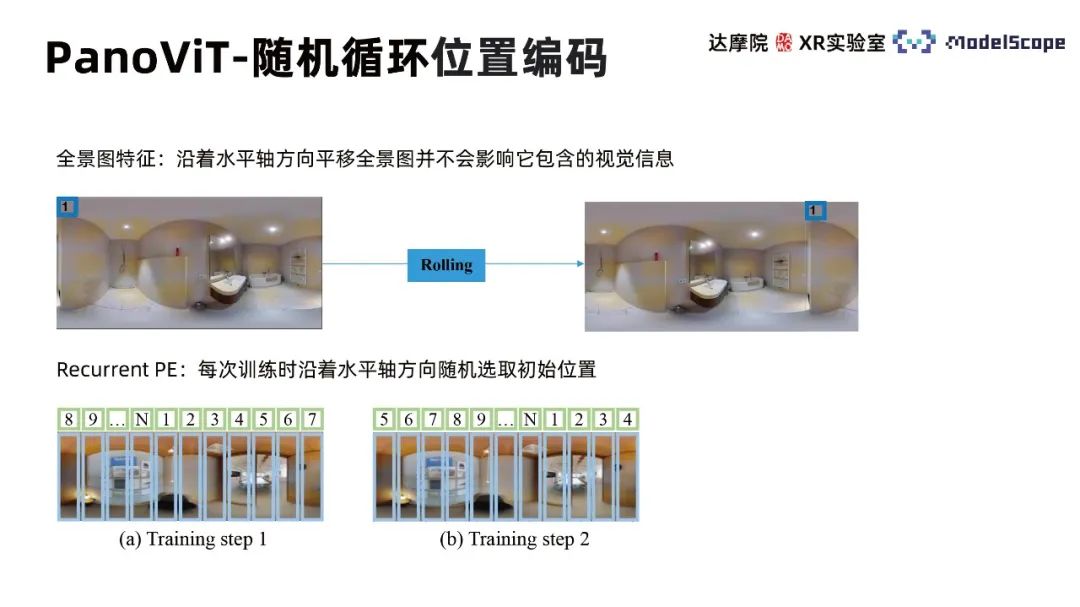

Random cyclic position encoding (Recurrent Position Embedding) exploits the fact that shifting a panorama along the horizontal direction does not change the visual information of the image. The initial position is therefore randomly selected along the horizontal axis in each training iteration, so that training pays more attention to the relative positions of patches than to their absolute positions.
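
The idea can be sketched as a random cyclic shift of a position-embedding table. This is a simplified illustration rather than the PanoViT implementation: the table layout (one row per horizontal patch) and the function name are assumptions.

```python
import numpy as np

def recurrent_position_embedding(pos_embed, rng, training=True):
    """Cyclically shift a (num_patches, dim) position table.

    During training a random start offset is drawn along the horizontal
    axis, so only the relative order of patches stays fixed.
    """
    if not training:
        return pos_embed
    offset = int(rng.integers(0, pos_embed.shape[0]))
    # np.roll wraps around, matching the 360-degree panorama.
    return np.roll(pos_embed, shift=offset, axis=0)
```

A typical call would pass `np.random.default_rng()` as `rng` once per training step; at inference time `training=False` leaves the table unchanged.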

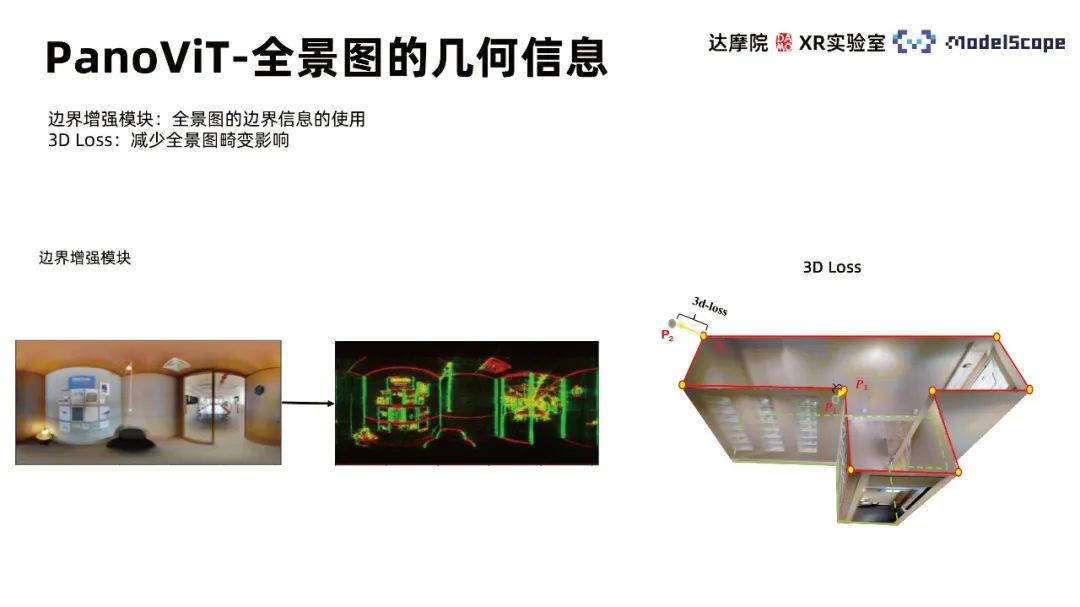

③ Geometric information of panorama

Making full use of the geometric information in the panorama helps improve indoor frame estimation performance. The boundary enhancement module in the PanoViT model emphasizes the boundary information in the panorama, and the 3D loss helps reduce the impact of panorama distortion.

The boundary enhancement module builds on the linear nature of wall lines in the wall line detection task. Line information in the image is especially important, so boundary information needs to be highlighted so that the network can understand the distribution of lines in the image. The module highlights the panorama's boundary information in the frequency domain: it obtains the frequency-domain representation of the image with the fast Fourier transform, samples the frequency domain with a mask, and transforms the result back into an image with highlighted boundaries using the inverse Fourier transform. The core of the module is the mask design. Because boundaries correspond to high-frequency information, the mask is first of all a high-pass filter; it then samples different frequency-domain directions according to the directions of the different lines. This method is simpler to implement and more efficient than the traditional LSD method.
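
The frequency-domain steps described above (FFT, mask, inverse FFT) can be sketched with NumPy as follows. This covers only the high-pass part of the mask; the direction-selective sampling is omitted, and the cutoff value and function name are assumptions chosen for illustration.

```python
import numpy as np

def highpass_enhance(image, cutoff=0.05):
    """Keep only the high-frequency content of a 2D grayscale image.

    cutoff is a radial frequency in cycles per pixel (an assumed value);
    everything at or below it, including the mean brightness, is removed.
    """
    h, w = image.shape
    # Centered spectrum and the matching frequency grid.
    spectrum = np.fft.fftshift(np.fft.fft2(image))
    fy = np.fft.fftshift(np.fft.fftfreq(h))[:, None]
    fx = np.fft.fftshift(np.fft.fftfreq(w))[None, :]
    mask = np.sqrt(fx ** 2 + fy ** 2) > cutoff
    # Back to the image domain; the symmetric mask keeps the result real.
    filtered = np.fft.ifft2(np.fft.ifftshift(spectrum * mask))
    return filtered.real
```

On an image with a single vertical step edge, the output is close to zero in flat regions and largest in magnitude next to the edge, which is the behavior the module relies on.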

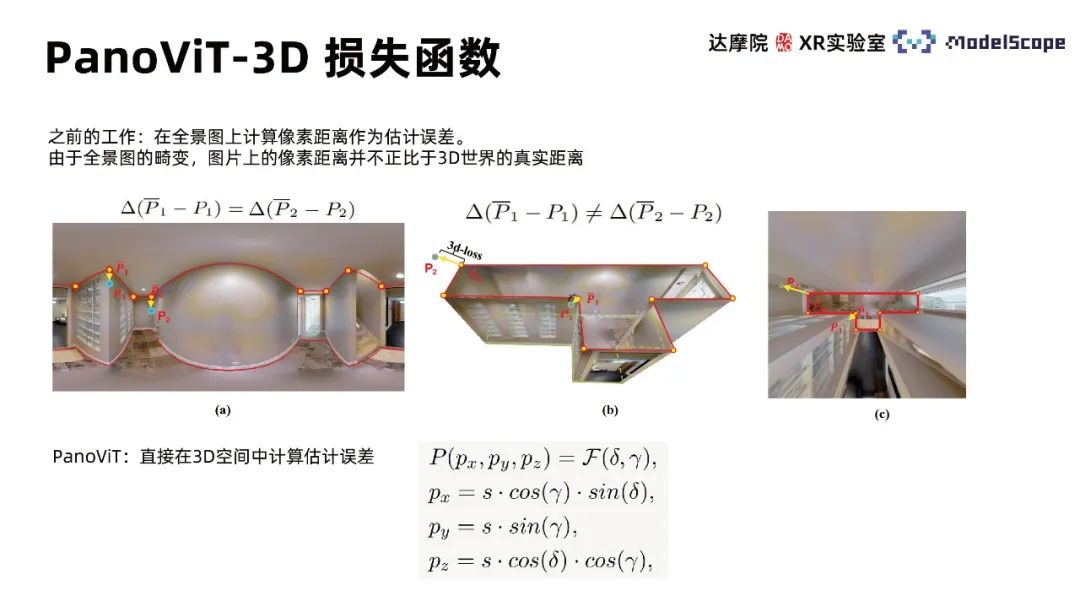

Previous work calculated the pixel distance on the panorama as an estimation error. Due to the distortion of the panorama, the pixel distance on the picture is not proportional to the real distance in the 3D world. PanoViT uses a 3D loss function to calculate the estimation error directly in 3D space.
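
The effect of distortion can be seen in a small sketch that measures ground-line error as a distance on the floor instead of in pixels. This is an assumed, simplified form of a 3D loss, not the exact PanoViT loss: the camera height, the level-camera assumption, and the function names are all illustrative.

```python
import numpy as np

def rows_to_floor_distance(rows, height, camera_height=1.6):
    """Convert ground-line pixel rows to horizontal distances on the floor.

    Assumes an equirectangular panorama with the horizon at mid-height
    and rows that lie below the horizon.
    """
    lat = (0.5 - (np.asarray(rows, dtype=float) + 0.5) / height) * np.pi
    return camera_height / np.tan(-lat)

def loss_3d(pred_rows, true_rows, height, camera_height=1.6):
    """Mean absolute error between predicted and true floor distances."""
    d_pred = rows_to_floor_distance(pred_rows, height, camera_height)
    d_true = rows_to_floor_distance(true_rows, height, camera_height)
    return float(np.mean(np.abs(d_pred - d_true)))
```

With a 512-row panorama, a 10-pixel error near the horizon (rows 270 and 280) corresponds to a much larger distance on the floor than the same 10-pixel error near the bottom of the image (rows 450 and 460), which is why a pixel-space error can be misleading.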

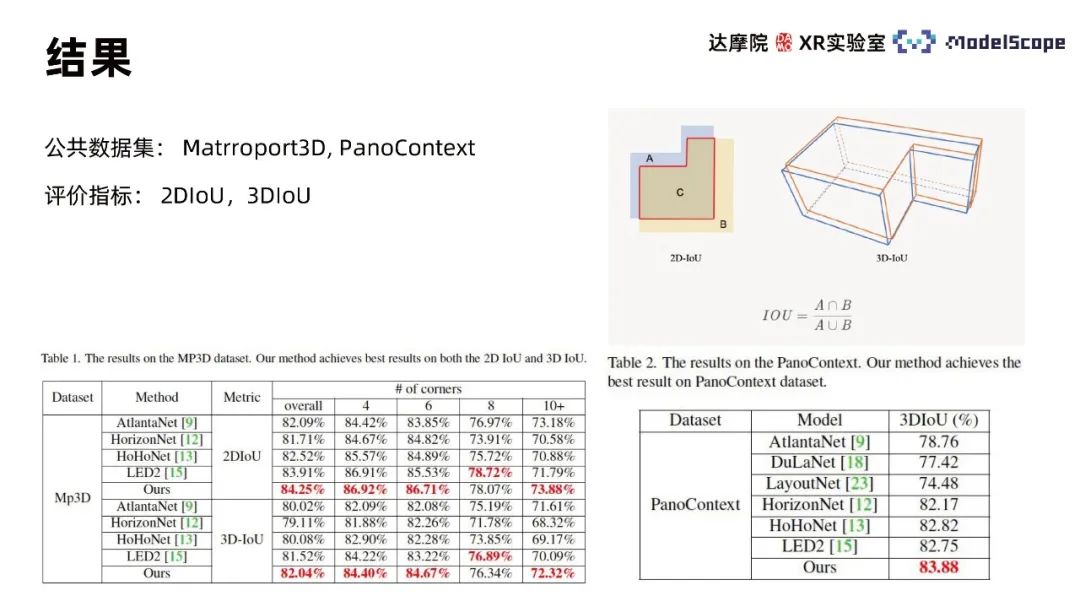

2. Model results

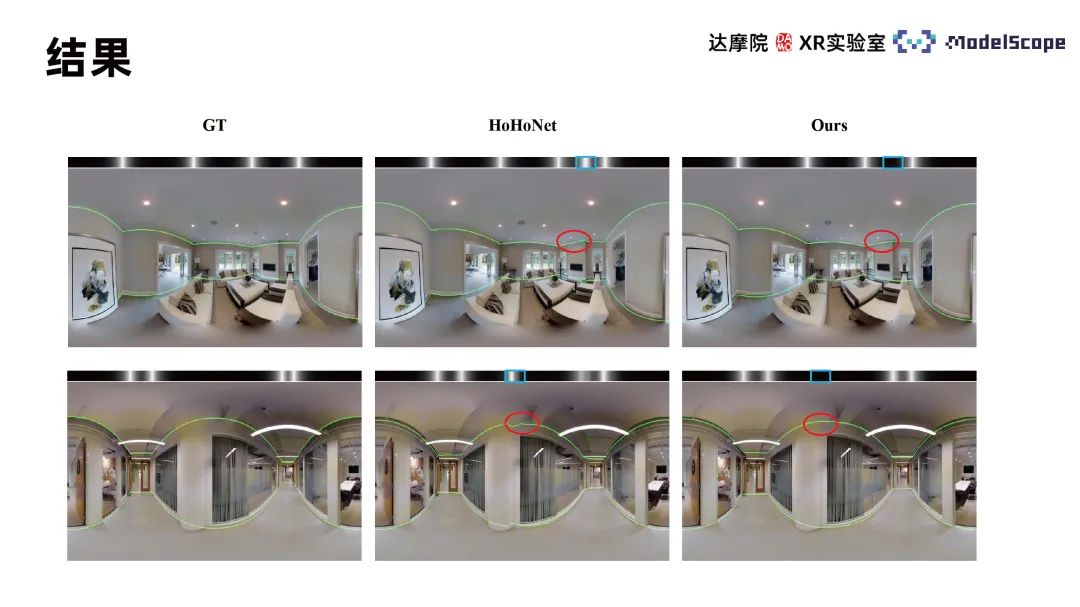

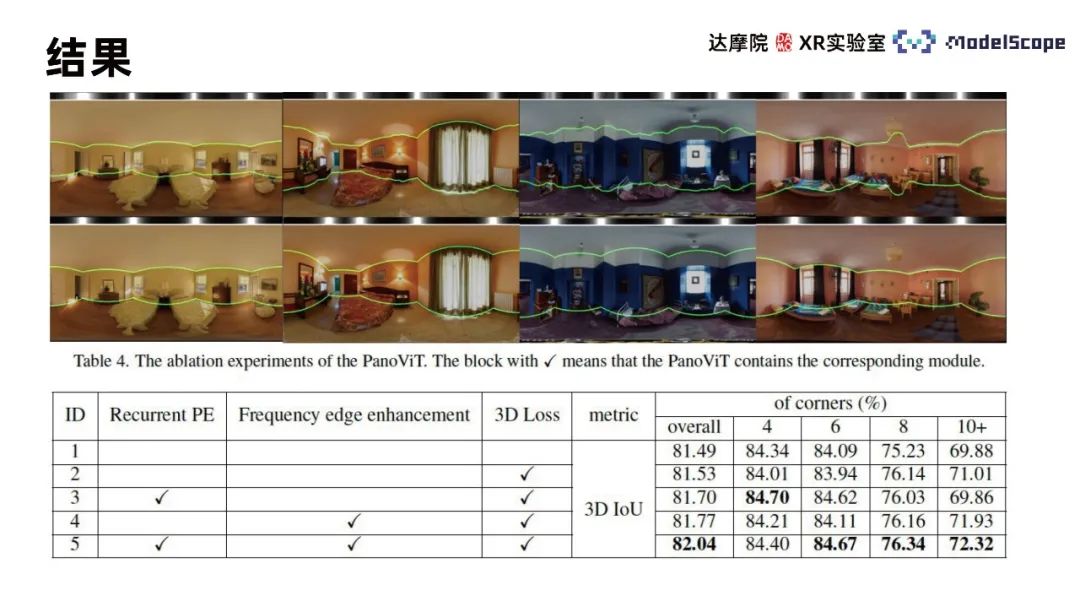

Experiments use the public Matterport3D and PanoContext datasets, with 2D IoU and 3D IoU as evaluation metrics, and compare PanoViT against SOTA methods. The results show that PanoViT's metrics on the two datasets are essentially the best, and are only slightly behind Led2-Net on specific metrics. Comparing the visualized results with those of HohoNet shows that PanoViT can accurately identify the direction of wall lines in complex scenes. Ablation experiments on the Recurrent PE, boundary enhancement, and 3D loss modules verify the effectiveness of each module.

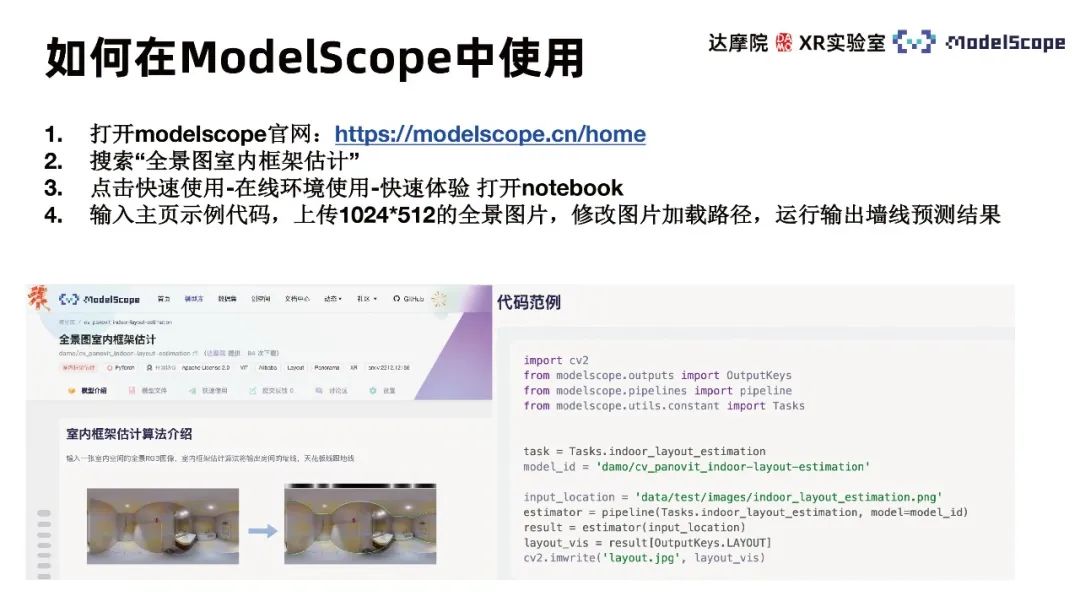

3. How to use in ModelScope

- Open the ModelScope official website: https://modelscope.cn/home.

- Search for "Panorama Indoor Frame Estimation".

- Click Quick Use > Online Environment Use > Quick Experience to open the notebook.

- Enter the sample code from the model's homepage, upload a 1024×512 panoramic image, modify the image loading path, and run the code to output the wall line prediction results.

The above is the detailed content of Method for indoor frame estimation using panoramic visual self-attention model. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

CLIP-BEVFormer: Explicitly supervise the BEVFormer structure to improve long-tail detection performance

Mar 26, 2024 pm 12:41 PM

CLIP-BEVFormer: Explicitly supervise the BEVFormer structure to improve long-tail detection performance

Mar 26, 2024 pm 12:41 PM

Written above & the author’s personal understanding: At present, in the entire autonomous driving system, the perception module plays a vital role. The autonomous vehicle driving on the road can only obtain accurate perception results through the perception module. The downstream regulation and control module in the autonomous driving system makes timely and correct judgments and behavioral decisions. Currently, cars with autonomous driving functions are usually equipped with a variety of data information sensors including surround-view camera sensors, lidar sensors, and millimeter-wave radar sensors to collect information in different modalities to achieve accurate perception tasks. The BEV perception algorithm based on pure vision is favored by the industry because of its low hardware cost and easy deployment, and its output results can be easily applied to various downstream tasks.

Implementing Machine Learning Algorithms in C++: Common Challenges and Solutions

Jun 03, 2024 pm 01:25 PM

Implementing Machine Learning Algorithms in C++: Common Challenges and Solutions

Jun 03, 2024 pm 01:25 PM

Common challenges faced by machine learning algorithms in C++ include memory management, multi-threading, performance optimization, and maintainability. Solutions include using smart pointers, modern threading libraries, SIMD instructions and third-party libraries, as well as following coding style guidelines and using automation tools. Practical cases show how to use the Eigen library to implement linear regression algorithms, effectively manage memory and use high-performance matrix operations.

Explore the underlying principles and algorithm selection of the C++sort function

Apr 02, 2024 pm 05:36 PM

Explore the underlying principles and algorithm selection of the C++sort function

Apr 02, 2024 pm 05:36 PM

The bottom layer of the C++sort function uses merge sort, its complexity is O(nlogn), and provides different sorting algorithm choices, including quick sort, heap sort and stable sort.

Can artificial intelligence predict crime? Explore CrimeGPT's capabilities

Mar 22, 2024 pm 10:10 PM

Can artificial intelligence predict crime? Explore CrimeGPT's capabilities

Mar 22, 2024 pm 10:10 PM

The convergence of artificial intelligence (AI) and law enforcement opens up new possibilities for crime prevention and detection. The predictive capabilities of artificial intelligence are widely used in systems such as CrimeGPT (Crime Prediction Technology) to predict criminal activities. This article explores the potential of artificial intelligence in crime prediction, its current applications, the challenges it faces, and the possible ethical implications of the technology. Artificial Intelligence and Crime Prediction: The Basics CrimeGPT uses machine learning algorithms to analyze large data sets, identifying patterns that can predict where and when crimes are likely to occur. These data sets include historical crime statistics, demographic information, economic indicators, weather patterns, and more. By identifying trends that human analysts might miss, artificial intelligence can empower law enforcement agencies

Improved detection algorithm: for target detection in high-resolution optical remote sensing images

Jun 06, 2024 pm 12:33 PM

Improved detection algorithm: for target detection in high-resolution optical remote sensing images

Jun 06, 2024 pm 12:33 PM

01 Outlook Summary Currently, it is difficult to achieve an appropriate balance between detection efficiency and detection results. We have developed an enhanced YOLOv5 algorithm for target detection in high-resolution optical remote sensing images, using multi-layer feature pyramids, multi-detection head strategies and hybrid attention modules to improve the effect of the target detection network in optical remote sensing images. According to the SIMD data set, the mAP of the new algorithm is 2.2% better than YOLOv5 and 8.48% better than YOLOX, achieving a better balance between detection results and speed. 02 Background & Motivation With the rapid development of remote sensing technology, high-resolution optical remote sensing images have been used to describe many objects on the earth’s surface, including aircraft, cars, buildings, etc. Object detection in the interpretation of remote sensing images

Practice and reflections on Jiuzhang Yunji DataCanvas multi-modal large model platform

Oct 20, 2023 am 08:45 AM

Practice and reflections on Jiuzhang Yunji DataCanvas multi-modal large model platform

Oct 20, 2023 am 08:45 AM

1. The historical development of multi-modal large models. The photo above is the first artificial intelligence workshop held at Dartmouth College in the United States in 1956. This conference is also considered to have kicked off the development of artificial intelligence. Participants Mainly the pioneers of symbolic logic (except for the neurobiologist Peter Milner in the middle of the front row). However, this symbolic logic theory could not be realized for a long time, and even ushered in the first AI winter in the 1980s and 1990s. It was not until the recent implementation of large language models that we discovered that neural networks really carry this logical thinking. The work of neurobiologist Peter Milner inspired the subsequent development of artificial neural networks, and it was for this reason that he was invited to participate in this project.

Application of algorithms in the construction of 58 portrait platform

May 09, 2024 am 09:01 AM

Application of algorithms in the construction of 58 portrait platform

May 09, 2024 am 09:01 AM

1. Background of the Construction of 58 Portraits Platform First of all, I would like to share with you the background of the construction of the 58 Portrait Platform. 1. The traditional thinking of the traditional profiling platform is no longer enough. Building a user profiling platform relies on data warehouse modeling capabilities to integrate data from multiple business lines to build accurate user portraits; it also requires data mining to understand user behavior, interests and needs, and provide algorithms. side capabilities; finally, it also needs to have data platform capabilities to efficiently store, query and share user profile data and provide profile services. The main difference between a self-built business profiling platform and a middle-office profiling platform is that the self-built profiling platform serves a single business line and can be customized on demand; the mid-office platform serves multiple business lines, has complex modeling, and provides more general capabilities. 2.58 User portraits of the background of Zhongtai portrait construction

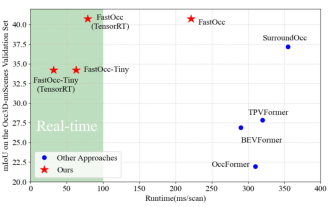

Add SOTA in real time and skyrocket! FastOcc: Faster inference and deployment-friendly Occ algorithm is here!

Mar 14, 2024 pm 11:50 PM

Add SOTA in real time and skyrocket! FastOcc: Faster inference and deployment-friendly Occ algorithm is here!

Mar 14, 2024 pm 11:50 PM

Written above & The author’s personal understanding is that in the autonomous driving system, the perception task is a crucial component of the entire autonomous driving system. The main goal of the perception task is to enable autonomous vehicles to understand and perceive surrounding environmental elements, such as vehicles driving on the road, pedestrians on the roadside, obstacles encountered during driving, traffic signs on the road, etc., thereby helping downstream modules Make correct and reasonable decisions and actions. A vehicle with self-driving capabilities is usually equipped with different types of information collection sensors, such as surround-view camera sensors, lidar sensors, millimeter-wave radar sensors, etc., to ensure that the self-driving vehicle can accurately perceive and understand surrounding environment elements. , enabling autonomous vehicles to make correct decisions during autonomous driving. Head