Meta reportedly plans to lay off members of its Reality Labs metaverse division

According to a Reuters report, Meta plans to lay off members of FAST, the chip development team within Reality Labs, the company's metaverse division.

According to two people familiar with the matter, Meta employees were notified of the layoffs through a post on Workplace, the company's internal forum. Meta is said to plan to inform employees of their final status early Wednesday morning local time. A Meta spokesman declined to comment.

According to public information, Meta's FAST department has approximately 600 employees. The division has been developing custom chips intended to give Meta devices unique features and better performance, differentiating them from other VR/AR devices.

However, despite these efforts, Meta has not been able to produce a chip that can compete with external products, so its devices still use Qualcomm chips.

Meta used the Qualcomm Snapdragon XR2 Gen 2 chip, paired with 8GB of memory, in the Quest 3 released last week. Meta said that the graphics performance of the Quest 3 is twice that of the Quest 2, and better even than that of the Quest Pro, which uses the previous-generation XR2 chip. The 128GB version of the Quest 3 is priced at US$499.99 (approximately RMB 3,655), while the 512GB version is priced at US$649.99 (approximately RMB 4,751).

Hot Topics

Is the 1nm chip made in China or the United States?

Nov 06, 2023 pm 01:30 PM

Is the 1nm chip made in China or the United States?

Nov 06, 2023 pm 01:30 PM

It is not certain who made the 1nm chip. From a research and development perspective, the 1nm chip was jointly developed by Taiwan, China and the United States. From a mass production perspective, this technology is not yet fully realized. The main person in charge of this research is Dr. Zhu Jiadi of MIT, who is a Chinese scientist. Dr. Zhu Jiadi said that the research is still in its early stages and is still a long way from mass production.

First in China: Changxin Memory launches LPDDR5 DRAM memory chip

Nov 28, 2023 pm 09:29 PM

First in China: Changxin Memory launches LPDDR5 DRAM memory chip

Nov 28, 2023 pm 09:29 PM

News from this site on November 28. According to the official website of Changxin Memory, Changxin Memory has launched the latest LPDDR5DRAM memory chip. It is the first domestic brand to launch independently developed and produced LPDDR5 products, achieving a breakthrough in the domestic market and also making Changxin Storage's product layout in the mobile terminal market is more diversified. This website noticed that Changxin Memory LPDDR5 series products include 12Gb LPDDR5 particles, POP packaged 12GBLPDDR5 chips and DSC packaged 6GBLPDDR5 chips. The 12GBLPDDR5 chip has been verified on models of mainstream domestic mobile phone manufacturers such as Xiaomi and Transsion. LPDDR5 is a product launched by Changxin Storage for the mid-to-high-end mobile device market.

New affordable Meta Quest 3S VR headset appears on FCC, suggesting imminent launch

Sep 04, 2024 am 06:51 AM

New affordable Meta Quest 3S VR headset appears on FCC, suggesting imminent launch

Sep 04, 2024 am 06:51 AM

The Meta Connect 2024event is set for September 25 to 26, and in this event, the company is expected to unveil a new affordable virtual reality headset. Rumored to be the Meta Quest 3S, the VR headset has seemingly appeared on FCC listing. This sugge

The first open source model to surpass GPT4o level! Llama 3.1 leaked: 405 billion parameters, download links and model cards are available

Jul 23, 2024 pm 08:51 PM

The first open source model to surpass GPT4o level! Llama 3.1 leaked: 405 billion parameters, download links and model cards are available

Jul 23, 2024 pm 08:51 PM

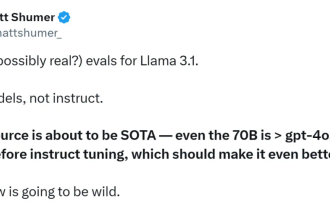

Get your GPU ready! Llama3.1 finally appeared, but the source is not Meta official. Today, the leaked news of the new Llama large model went viral on Reddit. In addition to the basic model, it also includes benchmark results of 8B, 70B and the maximum parameter of 405B. The figure below shows the comparison results of each version of Llama3.1 with OpenAIGPT-4o and Llama38B/70B. It can be seen that even the 70B version exceeds GPT-4o on multiple benchmarks. Image source: https://x.com/mattshumer_/status/1815444612414087294 Obviously, version 3.1 of 8B and 70

It is reported that TSMC's advanced packaging customers are chasing orders significantly, and monthly production capacity is planned to increase by 120% next year

Nov 13, 2023 pm 12:29 PM

It is reported that TSMC's advanced packaging customers are chasing orders significantly, and monthly production capacity is planned to increase by 120% next year

Nov 13, 2023 pm 12:29 PM

News from this site on November 13, according to Taiwan Economic Daily, TSMC’s CoWoS advanced packaging demand is about to explode. In addition to NVIDIA, which has confirmed expanded orders in October, heavyweight customers such as Apple, AMD, Broadcom, and Marvell have also recently pursued orders significantly. According to reports, TSMC is working hard to accelerate the expansion of CoWoS advanced packaging production capacity to meet the needs of the above-mentioned five major customers. Next year's monthly production capacity is expected to increase by about 20% from the original target to 35,000 pieces. Analysts said that TSMC's five major customers have placed large orders, which shows that artificial intelligence applications have become widely popular, and major manufacturers are interested in artificial intelligence chips. The demand has increased significantly. Inquiries on this site found that the current CoWoS advanced packaging technology is mainly divided into three types - CoWos-S

Six quick ways to experience the newly released Llama 3!

Apr 19, 2024 pm 12:16 PM

Six quick ways to experience the newly released Llama 3!

Apr 19, 2024 pm 12:16 PM

Last night Meta released the Llama38B and 70B models. The Llama3 instruction-tuned model is fine-tuned and optimized for dialogue/chat use cases and outperforms many existing open source chat models in common benchmarks. For example, Gemma7B and Mistral7B. The Llama+3 model improves data and scale and reaches new heights. It was trained on more than 15T tokens of data on two custom 24K GPU clusters recently released by Meta. This training dataset is 7 times larger than Llama2 and contains 4 times more code. This brings the capability of the Llama model to the current highest level, which supports text lengths of more than 8K, twice that of Llama2. under

Llama3 comes suddenly! The open source community is boiling again: the era of free access to GPT4-level models has arrived

Apr 19, 2024 pm 12:43 PM

Llama3 comes suddenly! The open source community is boiling again: the era of free access to GPT4-level models has arrived

Apr 19, 2024 pm 12:43 PM

Llama3 is here! Just now, Meta’s official website was updated and the official announced Llama 38 billion and 70 billion parameter versions. And it is an open source SOTA after its launch: Meta official data shows that the Llama38B and 70B versions surpass all opponents in their respective parameter scales. The 8B model outperforms Gemma7B and Mistral7BInstruct on many benchmarks such as MMLU, GPQA, and HumanEval. The 70B model has surpassed the popular closed-source fried chicken Claude3Sonnet, and has gone back and forth with Google's GeminiPro1.5. As soon as the Huggingface link came out, the open source community became excited again. The sharp-eyed blind students also discovered immediately

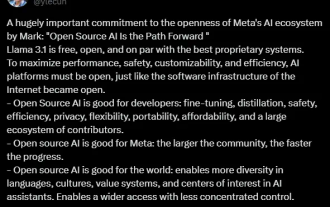

The strongest model Llama 3.1 405B is officially released, Zuckerberg: Open source leads a new era

Jul 24, 2024 pm 08:23 PM

The strongest model Llama 3.1 405B is officially released, Zuckerberg: Open source leads a new era

Jul 24, 2024 pm 08:23 PM

Just now, the long-awaited Llama 3.1 has been officially released! Meta officially issued a voice that "open source leads a new era." In the official blog, Meta said: "Until today, open source large language models have mostly lagged behind closed models in terms of functionality and performance. Now, we are ushering in a new era led by open source. We publicly released MetaLlama3.1405B, which we believe It is the largest and most powerful open source basic model in the world. To date, the total downloads of all Llama versions have exceeded 300 million times, and we have just begun.” Meta founder and CEO Zuckerberg also wrote an article. Long article "OpenSourceAIIsthePathForward",