Learn about Druid caching technology

Apache Druid is an open-source, distributed data store designed for real-time analytics, with high performance, low query latency, and horizontal scalability. To further improve query performance, Druid includes query caching. This article introduces how Druid caching works and how to configure it.

1. Druid cache overview

Druid supports two levels of query caching: a whole-query result cache on the Broker, and a per-segment cache that is typically used on Historical nodes (Brokers can use it as well). Both reduce query latency and the load on the cluster by reusing previously computed results.

- Whole-query result cache on the Broker

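The Broker's result cache stores the final, merged result of an entire query. When the same query arrives again, the Broker can return the cached result directly instead of sending the query out to the data nodes. The cache is held in memory on the Broker (by default in a Caffeine cache on the JVM heap) or in an external store such as memcached, not on local disk. Rather than relying on a fixed default lifetime, Druid invalidates cached results as soon as the underlying data changes, so stale results are not returned. Result caching is most useful when the same queries, such as dashboard queries, are issued repeatedly and fast response times matter.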
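To make the idea concrete, here is a minimal Python sketch of a whole-query result cache. It is a toy model, not Druid's implementation: the class name `ResultLevelCache`, the integer `data_version` standing in for "the underlying data changed", the `compute` callback standing in for querying the data nodes, and the `events` datasource are all hypothetical.

```python
import json
from typing import Any, Callable, Dict, Tuple


class ResultLevelCache:
    """Toy whole-query result cache (hypothetical; not Druid's implementation)."""

    def __init__(self) -> None:
        self._store: Dict[Tuple[str, int], Any] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(query: dict, data_version: int) -> Tuple[str, int]:
        # sort_keys makes logically identical queries serialize identically;
        # including data_version means a data change yields a new key, so
        # results computed against older data are never returned.
        return (json.dumps(query, sort_keys=True), data_version)

    def get_or_compute(self, query: dict, data_version: int,
                       compute: Callable[[dict], Any]) -> Any:
        key = self._key(query, data_version)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        result = compute(query)    # stands in for querying data nodes and merging
        self._store[key] = result  # old-version entries are left for eviction
        return result


cache = ResultLevelCache()
q = {"queryType": "timeseries", "dataSource": "events"}
cache.get_or_compute(q, 1, lambda _q: [42])  # miss: computed and stored
cache.get_or_compute(q, 1, lambda _q: [42])  # hit: served from the cache
cache.get_or_compute(q, 2, lambda _q: [43])  # data changed: miss again
print(cache.hits, cache.misses)  # → 1 2
```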

- Per-segment cache on Historical nodes

The per-segment cache stores partial query results for individual segments, Druid's unit of data storage; it does not hold the segment data itself. Historical nodes load the segments they serve from deep storage onto local disk in advance. When a Historical receives a query, it checks whether a result for that query and segment is already cached. If it is, the Historical returns the cached partial result; if not, it scans the locally loaded segment, computes the result, and can add it to the cache. Because results are cached per segment, a repeated query only has to be recomputed for segments that are new or have changed, while results for unchanged segments come from the cache. Results from real-time segments, which change continuously, are not cached. Per-segment caching is one of Druid's most important features and can greatly improve query performance and response time in many scenarios.
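The per-segment flow can be sketched the same way. In this hypothetical model (`PerSegmentCache`, the `scan` function, and the segment names and row counts are invented for illustration), each segment's partial result is cached under a (segment, query) key, real-time segments are always recomputed, and partial results are merged by summing, as for a count. Real Druid cache keys and merge logic are more involved.

```python
import json
from typing import Callable, Dict, List, Set, Tuple


class PerSegmentCache:
    """Toy per-segment result cache (hypothetical; not Druid's implementation)."""

    def __init__(self) -> None:
        self._store: Dict[Tuple[str, str], int] = {}
        self.computed: List[str] = []  # segments that had to be scanned

    def run(self, query: dict, segments: List[str], realtime: Set[str],
            scan: Callable[[str, dict], int]) -> int:
        query_key = json.dumps(query, sort_keys=True)
        total = 0
        for seg in segments:
            key = (seg, query_key)
            if seg not in realtime and key in self._store:
                partial = self._store[key]  # reuse the cached partial result
            else:
                partial = scan(seg, query)  # scan the locally loaded segment
                self.computed.append(seg)
                if seg not in realtime:     # real-time segments change, so skip caching
                    self._store[key] = partial
            total += partial                # merge partials (a sum, as for a count)
        return total


rows = {"seg-day1": 10, "seg-day2": 20, "seg-realtime": 5}  # made-up row counts


def scan(seg: str, query: dict) -> int:
    return rows[seg]


cache = PerSegmentCache()
q = {"queryType": "timeseries", "dataSource": "events"}
print(cache.run(q, ["seg-day1", "seg-day2"], set(), scan))  # → 30
print(cache.run(q, ["seg-day1", "seg-day2", "seg-realtime"], {"seg-realtime"}, scan))  # → 35
print(cache.computed)  # → ['seg-day1', 'seg-day2', 'seg-realtime']
```

On the second run only the real-time segment is scanned; the two historical segments are answered from the cache.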

2. How to use Druid cache

Keep the following points in mind when using caching in Druid:

- Enable caching in queries

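Whether a query uses the cache depends on two things: the cache must be enabled on the service (the Broker's caches are disabled by default and are turned on in its runtime properties), and the query itself must allow it. Per query, caching is controlled through query context parameters. These default to true, so once caching is enabled on a service, queries use it unless they explicitly opt out:

(1) useResultLevelCache and populateResultLevelCache: whether the query reads from and writes to the Broker's whole-query result cache; both default to true;

(2) useCache and populateCache: whether the query reads from and writes to the per-segment cache; both default to true.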
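As an illustration, the following Python sketch builds a request body for Druid's SQL endpoint (`POST /druid/v2/sql` on the Broker) with the four context parameters set explicitly. The helper name `sql_request` and the `wikipedia` datasource are assumptions for the example, and the flags only take effect if caching is also enabled on the services.

```python
import json


def sql_request(sql: str, use_cache: bool = True, use_result_cache: bool = True) -> str:
    """Build a JSON body for a Druid SQL query with explicit cache context flags."""
    body = {
        "query": sql,
        "context": {
            # per-segment cache: read from it / write to it
            "useCache": use_cache,
            "populateCache": use_cache,
            # Broker whole-query result cache: read from it / write to it
            "useResultLevelCache": use_result_cache,
            "populateResultLevelCache": use_result_cache,
        },
    }
    return json.dumps(body)


# Opt this one query out of both caches, e.g. while benchmarking raw query speed
print(sql_request("SELECT COUNT(*) FROM wikipedia", use_cache=False, use_result_cache=False))
```

Sending the body to a Broker with an HTTP client (as `Content-Type: application/json`) is left out so the sketch stays self-contained.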

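- Configuring the cache

Druid's cache is configured through runtime properties, so users can set the cache size, expiry, and other parameters according to their actual needs. The main properties are as follows:

(1) druid.broker.cache.useResultLevelCache and druid.broker.cache.populateResultLevelCache: enable the whole-query result cache on the Broker; both default to false;

(2) druid.broker.cache.useCache / druid.broker.cache.populateCache and druid.historical.cache.useCache / druid.historical.cache.populateCache: enable the per-segment cache on the Broker and on Historical nodes, respectively;

(3) druid.broker.cache.resultLevelCacheLimit: the maximum size, in bytes, of a single query result that the Broker will store in the result cache;

(4) druid.cache.type, druid.cache.sizeInBytes, and druid.cache.expireAfter: the cache implementation (caffeine by default) and, for the Caffeine cache, its maximum size in bytes and the time in milliseconds after the last access after which an entry may expire (by default there is no time-based expiry).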
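A minimal sketch that renders Broker `runtime.properties` lines for the properties above. The helper `broker_cache_properties` and the sizes passed to it are hypothetical placeholders; property names and defaults should be checked against the configuration reference for the Druid version in use.

```python
from typing import Dict, Optional


def broker_cache_properties(size_bytes: int,
                            expire_ms: Optional[int] = None,
                            result_limit_bytes: Optional[int] = None) -> str:
    """Render runtime.properties lines enabling both cache levels on a Broker."""
    props: Dict[str, str] = {
        # whole-query result cache (Broker only)
        "druid.broker.cache.useResultLevelCache": "true",
        "druid.broker.cache.populateResultLevelCache": "true",
        # per-segment cache on the Broker
        "druid.broker.cache.useCache": "true",
        "druid.broker.cache.populateCache": "true",
        # on-heap Caffeine cache and its byte budget
        "druid.cache.type": "caffeine",
        "druid.cache.sizeInBytes": str(size_bytes),
    }
    if expire_ms is not None:           # omitted = no time-based expiry
        props["druid.cache.expireAfter"] = str(expire_ms)
    if result_limit_bytes is not None:  # cap on a single cached query result
        props["druid.broker.cache.resultLevelCacheLimit"] = str(result_limit_bytes)
    return "\n".join(f"{key}={value}" for key, value in props.items())


print(broker_cache_properties(256 * 1024 * 1024, result_limit_bytes=10 * 1024 * 1024))
```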

3. Cache optimization

Optimizing the Druid cache mainly involves the following points:

- Cache eviction strategy

When the cache reaches its maximum size, some entries must be evicted to free up space. With the default Caffeine cache, entries are evicted based on size and, if druid.cache.expireAfter is set, also once they have not been accessed for that long. The cache implementation is pluggable through druid.cache.type, so a different backend, such as memcached, can be used when the built-in behavior does not fit.
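The following Python class is a simplified stand-in for the two eviction triggers described above: a byte budget (like `druid.cache.sizeInBytes`) and an optional idle timeout (like `druid.cache.expireAfter`, but in seconds here). It uses plain LRU ordering, which is an assumption for illustration; Caffeine's actual eviction policy is different. The class name is hypothetical, and the injectable `clock` makes the behavior easy to test.

```python
import time
from collections import OrderedDict
from typing import Callable, Hashable, List, Optional, Tuple


class BoundedCache:
    """Size- and idle-time-bounded cache with plain LRU eviction (illustration only)."""

    def __init__(self, max_bytes: int, expire_after: Optional[float] = None,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_bytes = max_bytes
        self.expire_after = expire_after  # seconds since last access; None = never
        self.clock = clock
        self.used = 0
        self._data: "OrderedDict[Hashable, Tuple[bytes, float]]" = OrderedDict()

    def get(self, key: Hashable) -> Optional[bytes]:
        item = self._data.get(key)
        if item is None:
            return None
        value, last_access = item
        now = self.clock()
        if self.expire_after is not None and now - last_access > self.expire_after:
            self._remove(key)             # idle too long: drop instead of returning
            return None
        self._data[key] = (value, now)    # refresh the access time
        self._data.move_to_end(key)       # mark as most recently used
        return value

    def put(self, key: Hashable, value: bytes) -> None:
        if len(value) > self.max_bytes:
            return                        # too large to cache at all
        if key in self._data:
            self._remove(key)
        self._data[key] = (value, self.clock())
        self.used += len(value)
        while self.used > self.max_bytes:  # evict least recently used entries
            self._remove(next(iter(self._data)))

    def keys(self) -> List[Hashable]:
        return list(self._data)           # least to most recently used

    def _remove(self, key: Hashable) -> None:
        value, _ = self._data.pop(key)
        self.used -= len(value)


t = [0.0]
cache = BoundedCache(max_bytes=10, expire_after=5.0, clock=lambda: t[0])
cache.put("a", b"12345")
cache.put("b", b"12345")
cache.get("a")          # touch "a" so "b" becomes least recently used
cache.put("c", b"123")  # over budget: "b" is evicted
print(cache.keys())     # → ['a', 'c']
t[0] = 10.0
print(cache.get("a"))   # → None (idle longer than expire_after)
```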

- Set an appropriate cache size

The cache size determines how many query results or per-segment results can be kept. If it is too small, entries are evicted before they can be reused, the hit rate drops, and query performance suffers; if it is too large, the cache takes memory away from query processing (and an on-heap cache such as Caffeine adds garbage-collection pressure), which can also slow queries down. Adjust it to the actual workload to achieve the best performance.
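A rough sizing calculation can help pick a starting value. The sketch below assumes the documented Caffeine default of min(1 GiB, max heap / 10) for `druid.cache.sizeInBytes` (verify this for your version) and a hypothetical rule of thumb: the cache should hold the hot set of results plus some headroom. Both helper names and the workload numbers are invented for illustration.

```python
GIB = 1024 ** 3


def default_caffeine_size(max_heap_bytes: int) -> int:
    """Assumed default for druid.cache.sizeInBytes: min(1 GiB, max heap / 10)."""
    return min(GIB, max_heap_bytes // 10)


def required_cache_bytes(hot_entries: int, avg_entry_bytes: int,
                         headroom_pct: int = 20) -> int:
    """Hypothetical rule of thumb: hold the hot set of results plus some headroom."""
    return hot_entries * avg_entry_bytes * (100 + headroom_pct) // 100


heap = 8 * GIB                              # assumed Broker heap (-Xmx8g)
need = required_cache_bytes(50_000, 4_096)  # assumed: 50k hot entries, ~4 KiB each
print(need <= default_caffeine_size(heap))  # → True (245760000 <= 858993459)
```

If the estimate exceeds the default, the choice is between raising `druid.cache.sizeInBytes` (and usually the heap) or accepting a lower hit rate, since memory given to the cache is not available for query processing.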

- Set an appropriate cache lifetime

If cached entries live too long, the memory they occupy is not released for a long time, which crowds out other entries and can affect query performance; if they expire too quickly, the cache hit rate drops, which also hurts query performance. The cache lifetime therefore needs to be tuned to the actual workload.
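The lifetime trade-off can be illustrated with a small simulation. This hypothetical helper replays a trace of (timestamp in seconds, query) pairs against an unbounded cache whose entries expire a fixed number of seconds after their last access, matching the expire-after-access behavior described for `druid.cache.expireAfter`, and reports the hit rate. The trace is made up for illustration.

```python
from typing import Dict, Iterable, Tuple


def hit_rate(trace: Iterable[Tuple[float, str]], ttl: float) -> float:
    """Hit rate of an unbounded cache whose entries expire `ttl` seconds after last access."""
    last_access: Dict[str, float] = {}
    hits = total = 0
    for t, key in trace:
        total += 1
        prev = last_access.get(key)
        if prev is not None and t - prev <= ttl:
            hits += 1
        last_access[key] = t  # a miss re-populates the entry, a hit refreshes it
    return hits / total if total else 0.0


# A dashboard query refreshed every 60 s, plus one ad hoc query
trace = [(0, "dash"), (60, "dash"), (120, "dash"), (130, "adhoc"), (180, "dash")]
for ttl in (30, 90):
    print(ttl, hit_rate(trace, ttl))  # prints "30 0.0", then "90 0.6"
```

A longer lifetime turns the periodic dashboard queries into hits, but it also keeps entries such as the one-off ad hoc query in memory longer, a cost this model does not measure.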

Summary:

Caching is an important way to improve Druid query performance. Whole-query result caching and per-segment caching each have their own advantages and drawbacks, so choose the approach that fits your workload. When using the Druid cache, pay attention to how it is enabled and configured, and tune it for the actual workload.

The above is the detailed content of Learn about Druid caching technology. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

StableDiffusion3’s paper is finally here! This model was released two weeks ago and uses the same DiT (DiffusionTransformer) architecture as Sora. It caused quite a stir once it was released. Compared with the previous version, the quality of the images generated by StableDiffusion3 has been significantly improved. It now supports multi-theme prompts, and the text writing effect has also been improved, and garbled characters no longer appear. StabilityAI pointed out that StableDiffusion3 is a series of models with parameter sizes ranging from 800M to 8B. This parameter range means that the model can be run directly on many portable devices, significantly reducing the use of AI

This article is enough for you to read about autonomous driving and trajectory prediction!

Feb 28, 2024 pm 07:20 PM

This article is enough for you to read about autonomous driving and trajectory prediction!

Feb 28, 2024 pm 07:20 PM

Trajectory prediction plays an important role in autonomous driving. Autonomous driving trajectory prediction refers to predicting the future driving trajectory of the vehicle by analyzing various data during the vehicle's driving process. As the core module of autonomous driving, the quality of trajectory prediction is crucial to downstream planning control. The trajectory prediction task has a rich technology stack and requires familiarity with autonomous driving dynamic/static perception, high-precision maps, lane lines, neural network architecture (CNN&GNN&Transformer) skills, etc. It is very difficult to get started! Many fans hope to get started with trajectory prediction as soon as possible and avoid pitfalls. Today I will take stock of some common problems and introductory learning methods for trajectory prediction! Introductory related knowledge 1. Are the preview papers in order? A: Look at the survey first, p

How to view and refresh dns cache in Linux

Mar 07, 2024 am 08:43 AM

How to view and refresh dns cache in Linux

Mar 07, 2024 am 08:43 AM

DNS (DomainNameSystem) is a system used on the Internet to convert domain names into corresponding IP addresses. In Linux systems, DNS caching is a mechanism that stores the mapping relationship between domain names and IP addresses locally, which can increase the speed of domain name resolution and reduce the burden on the DNS server. DNS caching allows the system to quickly retrieve the IP address when subsequently accessing the same domain name without having to issue a query request to the DNS server each time, thereby improving network performance and efficiency. This article will discuss with you how to view and refresh the DNS cache on Linux, as well as related details and sample code. Importance of DNS Caching In Linux systems, DNS caching plays a key role. its existence

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

This paper explores the problem of accurately detecting objects from different viewing angles (such as perspective and bird's-eye view) in autonomous driving, especially how to effectively transform features from perspective (PV) to bird's-eye view (BEV) space. Transformation is implemented via the Visual Transformation (VT) module. Existing methods are broadly divided into two strategies: 2D to 3D and 3D to 2D conversion. 2D-to-3D methods improve dense 2D features by predicting depth probabilities, but the inherent uncertainty of depth predictions, especially in distant regions, may introduce inaccuracies. While 3D to 2D methods usually use 3D queries to sample 2D features and learn the attention weights of the correspondence between 3D and 2D features through a Transformer, which increases the computational and deployment time.

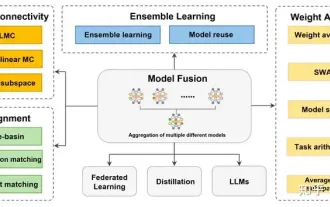

Review! Deep model fusion (LLM/basic model/federated learning/fine-tuning, etc.)

Apr 18, 2024 pm 09:43 PM

Review! Deep model fusion (LLM/basic model/federated learning/fine-tuning, etc.)

Apr 18, 2024 pm 09:43 PM

In September 23, the paper "DeepModelFusion:ASurvey" was published by the National University of Defense Technology, JD.com and Beijing Institute of Technology. Deep model fusion/merging is an emerging technology that combines the parameters or predictions of multiple deep learning models into a single model. It combines the capabilities of different models to compensate for the biases and errors of individual models for better performance. Deep model fusion on large-scale deep learning models (such as LLM and basic models) faces some challenges, including high computational cost, high-dimensional parameter space, interference between different heterogeneous models, etc. This article divides existing deep model fusion methods into four categories: (1) "Pattern connection", which connects solutions in the weight space through a loss-reducing path to obtain a better initial model fusion

Advanced Usage of PHP APCu: Unlocking the Hidden Power

Mar 01, 2024 pm 09:10 PM

Advanced Usage of PHP APCu: Unlocking the Hidden Power

Mar 01, 2024 pm 09:10 PM

PHPAPCu (replacement of php cache) is an opcode cache and data cache module that accelerates PHP applications. Understanding its advanced features is crucial to utilizing its full potential. 1. Batch operation: APCu provides a batch operation method that can process a large number of key-value pairs at the same time. This is useful for large-scale cache clearing or updates. //Get cache keys in batches $values=apcu_fetch(["key1","key2","key3"]); //Clear cache keys in batches apcu_delete(["key1","key2","key3"]);2 .Set cache expiration time: APCu allows you to set an expiration time for cache items so that they automatically expire after a specified time.

The relationship between CPU, memory and cache is explained in detail!

Mar 07, 2024 am 08:30 AM

The relationship between CPU, memory and cache is explained in detail!

Mar 07, 2024 am 08:30 AM

There is a close interaction between the CPU (central processing unit), memory (random access memory), and cache, which together form a critical component of a computer system. The coordination between them ensures the normal operation and efficient performance of the computer. As the brain of the computer, the CPU is responsible for executing various instructions and data processing; the memory is used to temporarily store data and programs, providing fast read and write access speeds; and the cache plays a buffering role, speeding up data access speed and improving The computer's CPU is the core component of the computer and is responsible for executing various instructions, arithmetic operations, and logical operations. It is called the "brain" of the computer and plays an important role in processing data and performing tasks. Memory is an important storage device in a computer.

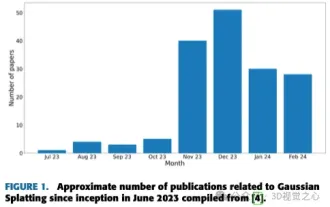

More than just 3D Gaussian! Latest overview of state-of-the-art 3D reconstruction techniques

Jun 02, 2024 pm 06:57 PM

More than just 3D Gaussian! Latest overview of state-of-the-art 3D reconstruction techniques

Jun 02, 2024 pm 06:57 PM

Written above & The author’s personal understanding is that image-based 3D reconstruction is a challenging task that involves inferring the 3D shape of an object or scene from a set of input images. Learning-based methods have attracted attention for their ability to directly estimate 3D shapes. This review paper focuses on state-of-the-art 3D reconstruction techniques, including generating novel, unseen views. An overview of recent developments in Gaussian splash methods is provided, including input types, model structures, output representations, and training strategies. Unresolved challenges and future directions are also discussed. Given the rapid progress in this field and the numerous opportunities to enhance 3D reconstruction methods, a thorough examination of the algorithm seems crucial. Therefore, this study provides a comprehensive overview of recent advances in Gaussian scattering. (Swipe your thumb up