The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

The paper for Stable Diffusion 3 is finally here!

This model was released two weeks ago and uses the same DiT (Diffusion Transformer) architecture as Sora. It caused quite a stir upon release.

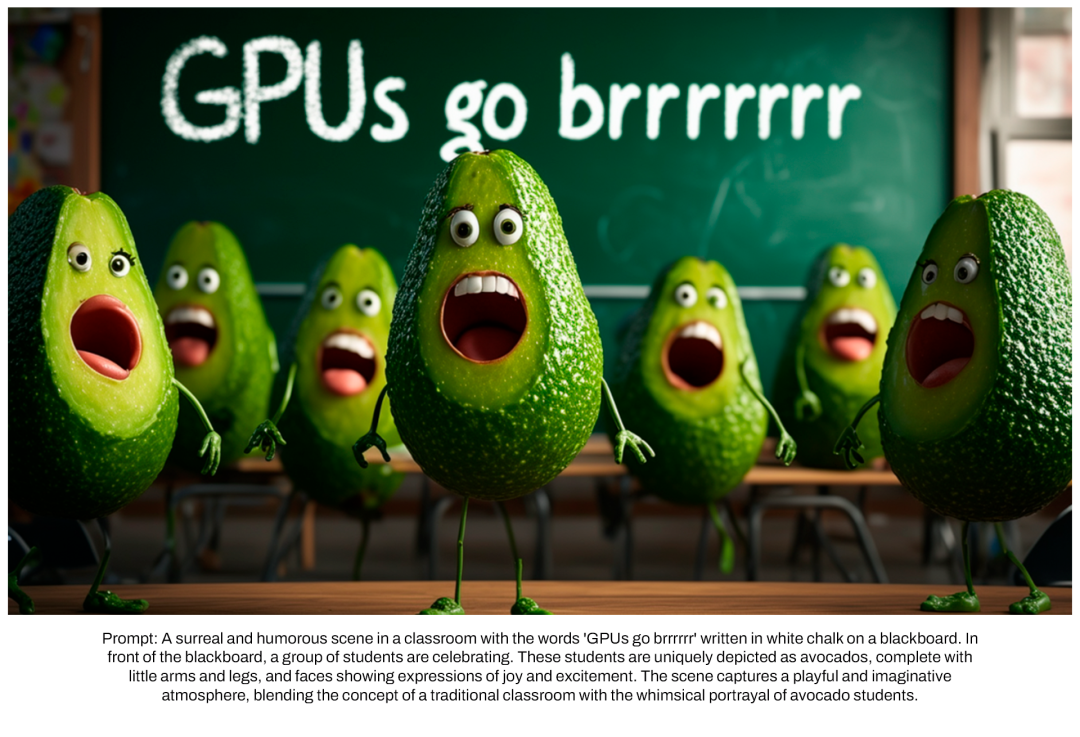

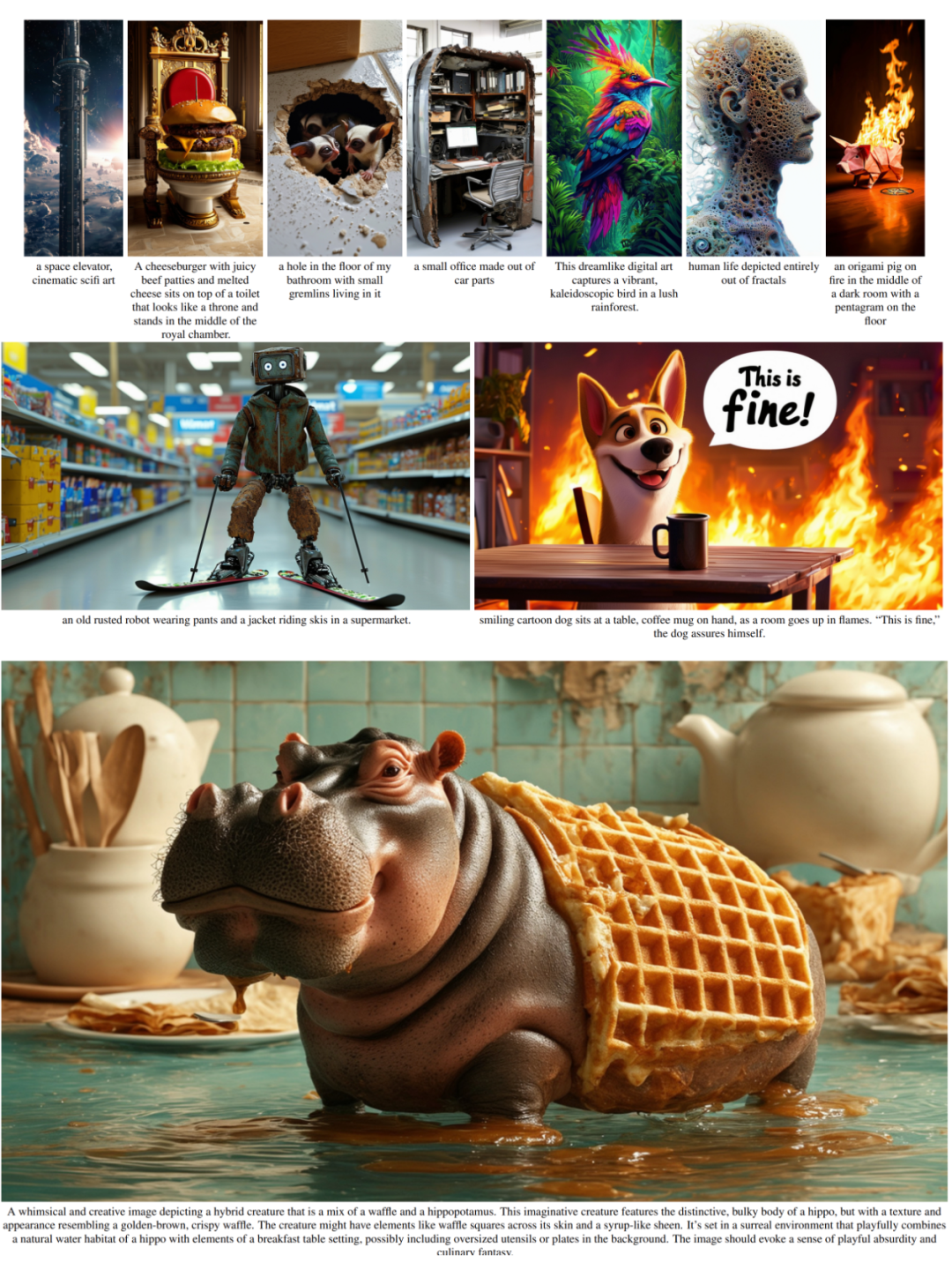

Compared with the previous version, Stable Diffusion 3 generates images of significantly higher quality. It supports multi-subject prompts, and its text rendering has improved as well: garbled characters no longer appear.

Stability AI pointed out that Stable Diffusion 3 is a series of models with parameter sizes ranging from 800M to 8B. This parameter range means that the model can be run directly on many portable devices, significantly lowering the threshold for using large AI models.

In the newly released paper, Stability AI said that in human-preference evaluations, Stable Diffusion 3 outperformed current state-of-the-art text-to-image generation systems such as DALL·E 3, Midjourney v6, and Ideogram v1. The company said it will soon make the study's experimental data, code, and model weights publicly available.

In the paper, Stability AI revealed more details about Stable Diffusion 3.

- Paper title: Scaling Rectified Flow Transformers for High-Resolution Image Synthesis

- Paper link: https://stabilityai-public-packages.s3.us-west-2.amazonaws.com/Stable Diffusion 3 Paper.pdf

Architectural details

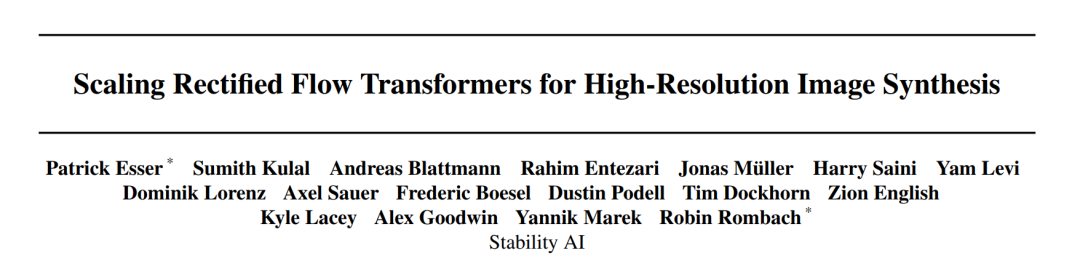

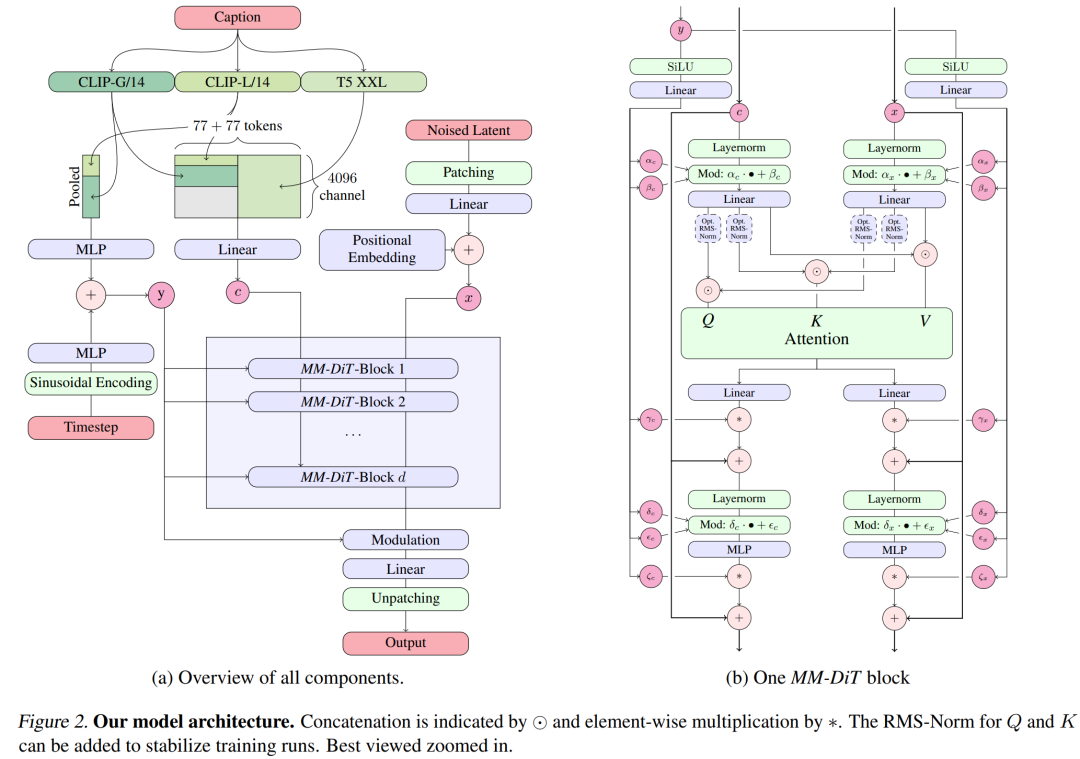

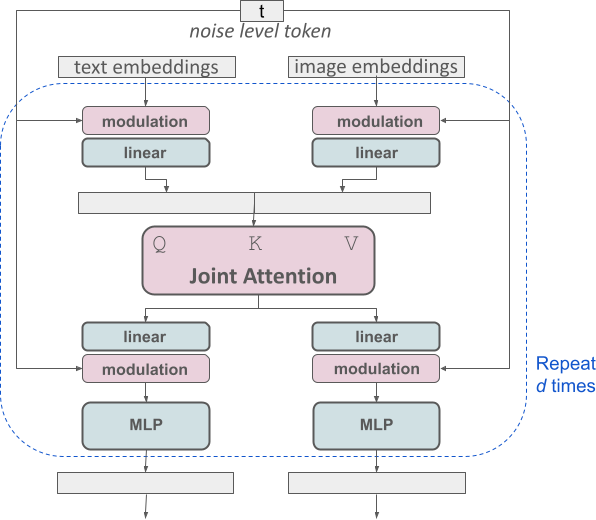

For text-to-image generation, the Stable Diffusion 3 model must take both the text and image modalities into account. The authors therefore call the new architecture MMDiT, a reference to its ability to handle multiple modalities. As with previous versions of Stable Diffusion, the authors use pre-trained models to derive suitable text and image representations. Specifically, they use three different text embedding models (two CLIP models and T5) to encode text representations, and an improved autoencoding model to encode image tokens.

Stable Diffusion 3 model architecture.

Improved multimodal diffusion transformer: MMDiT block.

The SD3 architecture builds on DiT, proposed by William Peebles, a core member of the Sora R&D team, and Saining Xie, assistant professor of computer science at New York University. Because text embeddings and image embeddings are conceptually very different, the SD3 authors use two separate sets of weights for the two modalities. As shown in the figure above, this is equivalent to having two independent transformers, one per modality, while joining the sequences of the two modalities for the attention operation, so that each representation can work in its own space while still taking the other into account.

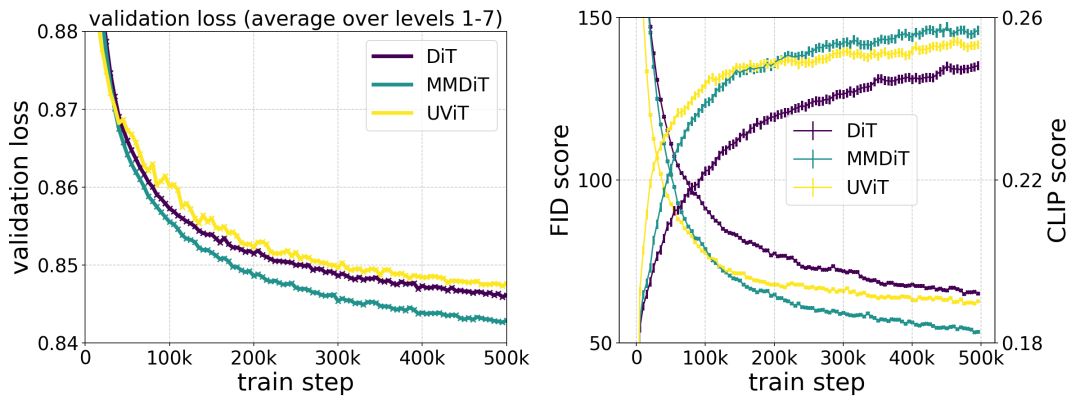

The authors' MMDiT architecture outperforms established text-to-image backbones such as UViT and DiT when visual fidelity and text alignment are measured over the course of training.

In this way, information can flow between image and text tokens, thereby improving the overall understanding of the model and improving the typography of the generated output. As discussed in the paper, this architecture is also easily extensible to multiple modalities such as video.
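The idea of separate per-modality weights with a shared attention step can be sketched as follows. This is a minimal single-head illustration using only the Python standard library, not the authors' implementation: a real MMDiT block also contains components that are omitted here (for example multiple heads and feed-forward layers), and all function names, dimensions, and random weights are assumptions made for the example.

```python
import math
import random

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Numerically stable softmax over one row of attention scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def random_matrix(rng, rows, cols):
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]

def make_weights(rng, dim):
    """One set of query/key/value projections; each modality gets its own."""
    return {name: random_matrix(rng, dim, dim) for name in ("q", "k", "v")}

def joint_attention(text_tokens, image_tokens, text_w, image_w):
    # Project each modality with its own weights, then join the sequences.
    q = matmul(text_tokens, text_w["q"]) + matmul(image_tokens, image_w["q"])
    k = matmul(text_tokens, text_w["k"]) + matmul(image_tokens, image_w["k"])
    v = matmul(text_tokens, text_w["v"]) + matmul(image_tokens, image_w["v"])
    scale = math.sqrt(len(q[0]))
    # Every token attends over the combined text + image sequence.
    scores = [[sum(a * b for a, b in zip(qi, kj)) / scale for kj in k] for qi in q]
    out = matmul([softmax(row) for row in scores], v)
    n_text = len(text_tokens)
    return out[:n_text], out[n_text:]  # split back into the two streams

rng = random.Random(0)
dim = 4
text_tokens = random_matrix(rng, 2, dim)   # 2 hypothetical text tokens
image_tokens = random_matrix(rng, 3, dim)  # 3 hypothetical image tokens
text_w, image_w = make_weights(rng, dim), make_weights(rng, dim)
text_out, image_out = joint_attention(text_tokens, image_tokens, text_w, image_w)
```

Because the attention scores are computed over the joined sequence, changing the text tokens changes the image outputs as well, which is the information flow between modalities described above.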

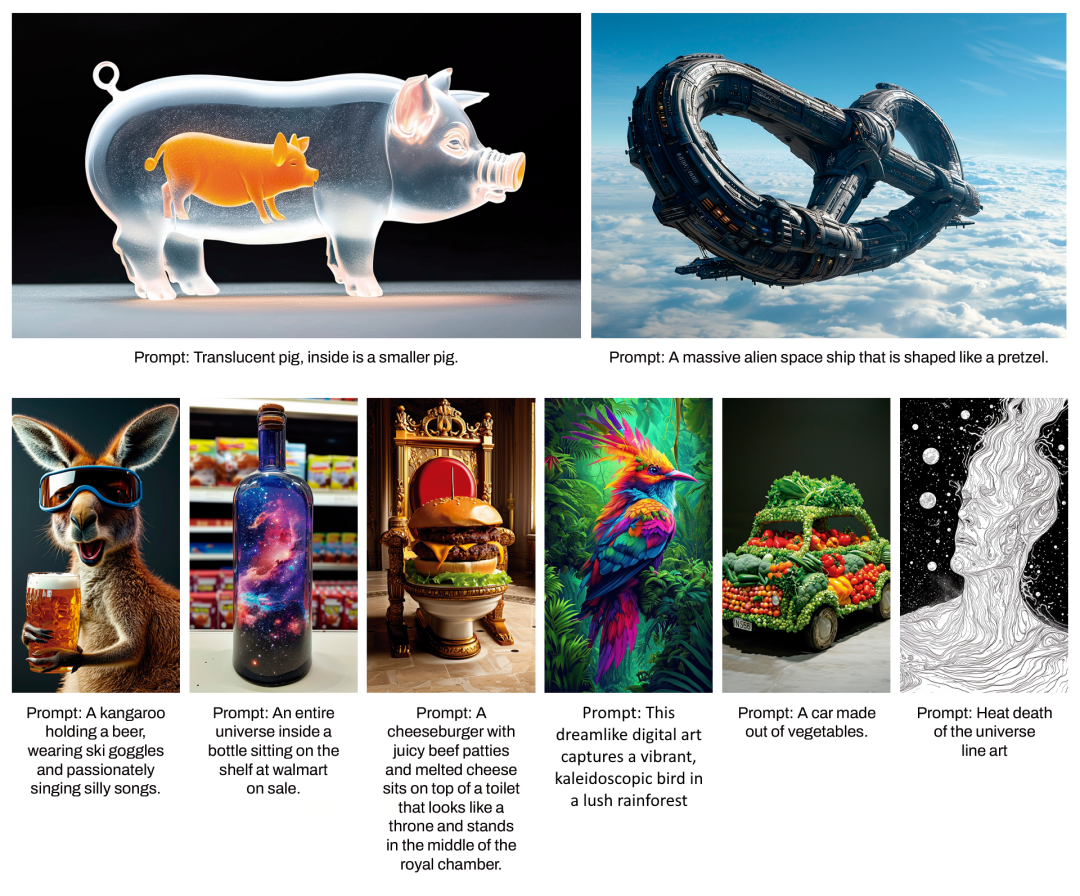

Thanks to Stable Diffusion 3's improved prompt following, the new model can produce images that focus on a variety of different subjects and qualities while also handling the style of the image itself with a high degree of flexibility.

Improving Rectified Flow through reweighting

Stable Diffusion 3 uses the Rectified Flow (RF) formulation, in which data and noise are connected on a linear trajectory during training. This makes the inference path straighter, which reduces the number of sampling steps needed. The authors also introduce a new trajectory sampling schedule for training. They hypothesized that the middle of the trajectory poses the more challenging prediction task, so the schedule gives more weight to that part. They tested their approach against 60 other diffusion trajectories, such as LDM, EDM, and ADM, using multiple datasets, metrics, and sampler settings. The results show that previous RF formulations perform better when few sampling steps are used, but their relative performance declines as the number of steps increases. In contrast, the reweighted RF variant proposed by the authors consistently improves performance.
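The training setup described above can be sketched in a few lines. The snippet below is a minimal illustration, not the authors' code: it builds the straight-line interpolation between a data sample and noise, and draws timesteps from a logit-normal density as one possible way to favor the middle of the trajectory. The function names, the choice of density, and its `mean` and `std` values are assumptions made for illustration.

```python
import math
import random

def sample_timestep_logit_normal(rng, mean=0.0, std=1.0):
    """Draw t in (0, 1) by passing a Gaussian sample through a sigmoid.

    With mean=0 the density peaks at t=0.5, so mid-trajectory
    timesteps are drawn more often than with uniform sampling.
    """
    u = rng.gauss(mean, std)
    return 1.0 / (1.0 + math.exp(-u))

def rectified_flow_pair(x0, noise, t):
    """Return the point on the straight data-to-noise line and its velocity."""
    z_t = [(1.0 - t) * a + t * b for a, b in zip(x0, noise)]
    velocity = [b - a for a, b in zip(x0, noise)]  # constant along the line
    return z_t, velocity

rng = random.Random(0)
x0 = [0.5, -1.0, 2.0]                      # hypothetical data sample
noise = [rng.gauss(0.0, 1.0) for _ in x0]  # Gaussian noise sample
t = sample_timestep_logit_normal(rng)
z_t, v = rectified_flow_pair(x0, noise, t)  # network input and regression target

# Fraction of sampled timesteps in the middle half of the trajectory
# (uniform sampling would give about 0.5).
ts = [sample_timestep_logit_normal(rng) for _ in range(10000)]
middle_fraction = sum(0.25 <= s <= 0.75 for s in ts) / len(ts)
```

In a training loop under these assumptions, a network would receive `z_t` and `t` and be trained to predict `v`; sampling `t` non-uniformly is one way to put more training weight on the middle of the trajectory.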

Scaling Rectified Flow Transformer models

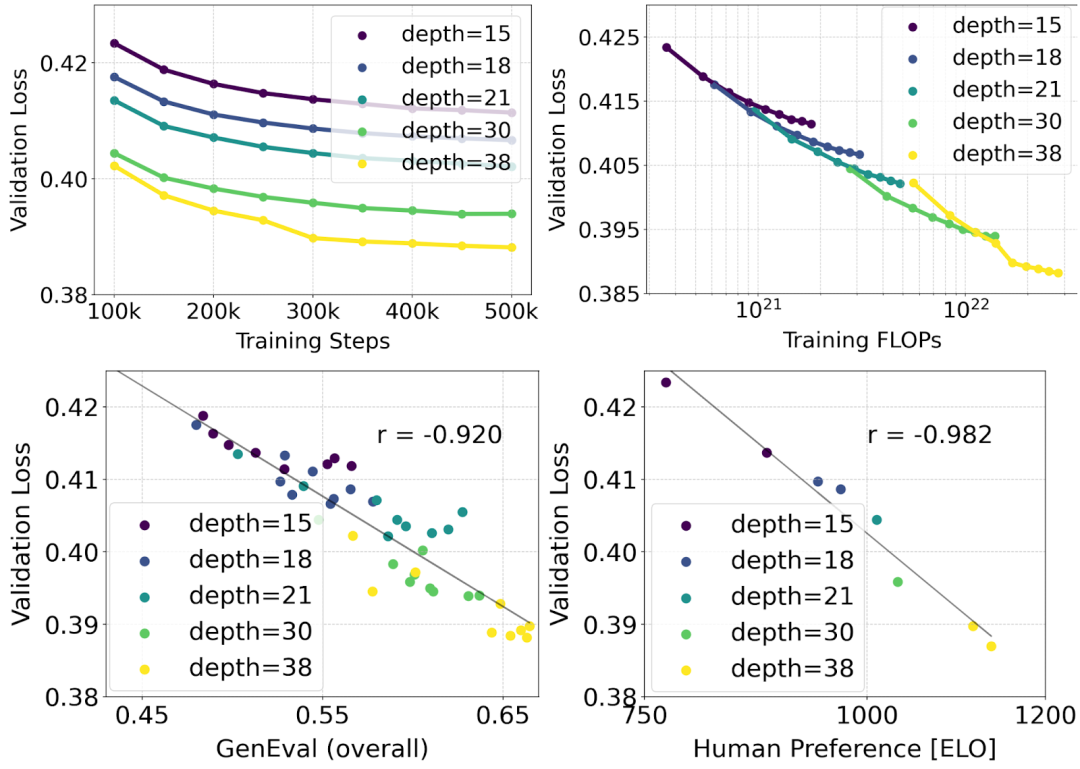

Using the reweighted Rectified Flow formulation and the MMDiT backbone, the authors conducted a scaling study of text-to-image synthesis. They trained models ranging from 15 blocks with 450M parameters to 38 blocks with 8B parameters and observed that the validation loss decreased smoothly as both model size and training steps increased (top row of the figure above). To examine whether this translated into meaningful improvements in model output, the authors also evaluated an automatic image-alignment metric (GenEval) and human preference scores (ELO) (second row of the figure above). The results show a strong correlation between these metrics and the validation loss, suggesting that the latter is a good predictor of overall model performance. Furthermore, the scaling trend shows no signs of saturation, which makes the authors optimistic that model performance can continue to improve.
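For readers unfamiliar with ELO-style scores, the sketch below shows the standard Elo rating update applied to one pairwise comparison. The article does not say how the paper's human preference scores were computed, so treat this only as background on the general technique; the `k` factor and starting ratings are hypothetical.

```python
def expected_score(rating_a, rating_b):
    """Probability that A is preferred over B under the standard Elo model."""
    return 1.0 / (1.0 + 10.0 ** ((rating_b - rating_a) / 400.0))

def elo_update(rating_a, rating_b, a_won, k=32.0):
    """Update both ratings after one pairwise preference judgment."""
    expected_a = expected_score(rating_a, rating_b)
    score_a = 1.0 if a_won else 0.0
    delta = k * (score_a - expected_a)
    return rating_a + delta, rating_b - delta

# Two hypothetical models start level; a rater prefers model A's image.
new_a, new_b = elo_update(1000.0, 1000.0, a_won=True)
print(new_a, new_b)  # 1016.0 984.0
```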

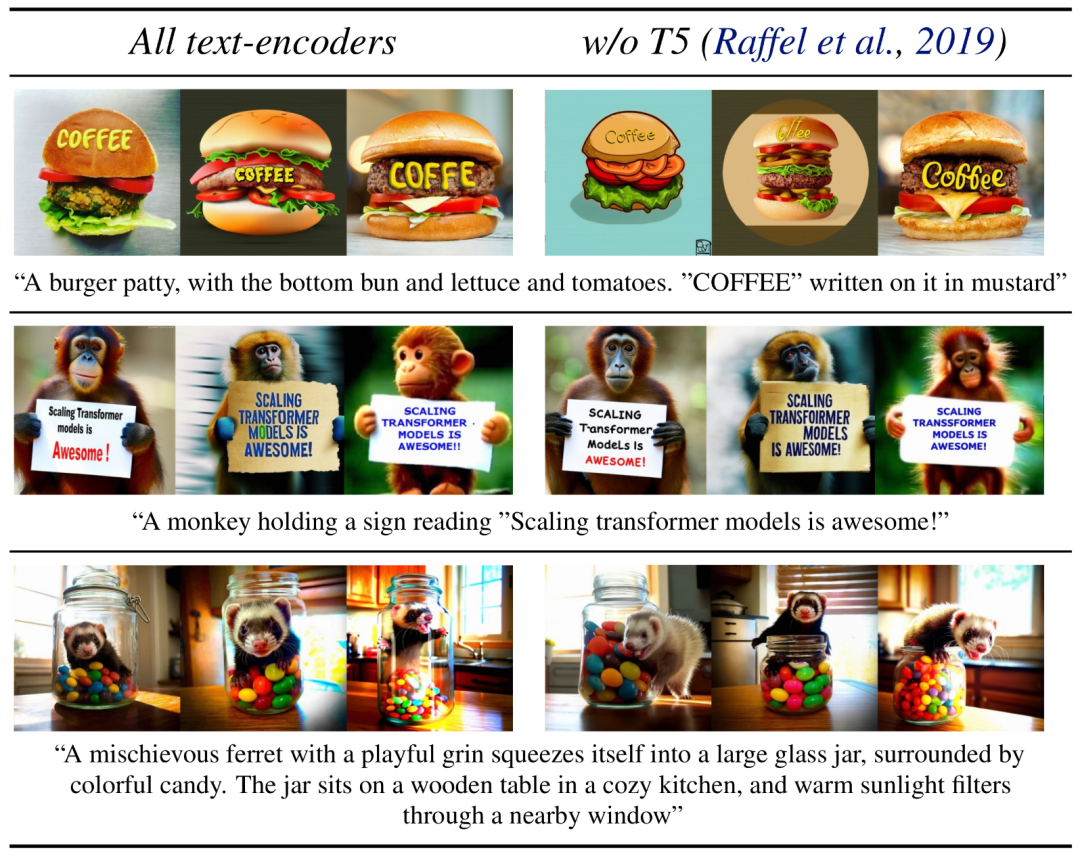

Flexible text encoder

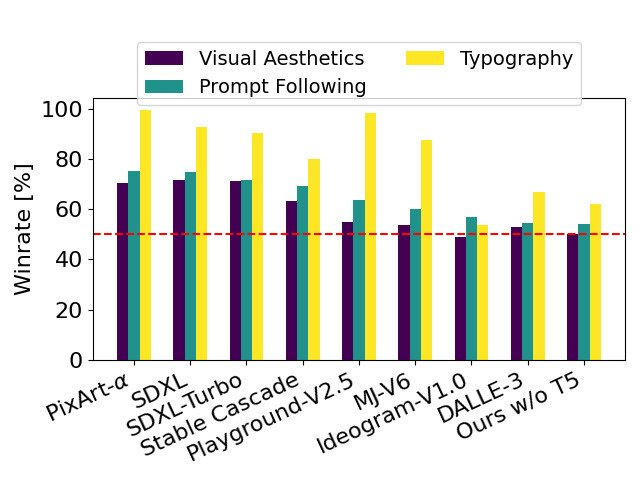

Removing the memory-intensive 4.7B-parameter T5 text encoder at inference significantly reduces SD3's memory requirements with minimal loss of performance. As shown in the figure, removing this text encoder has no impact on visual aesthetics (50% win rate without T5) and only slightly reduces text adherence (46% win rate). However, the authors recommend including T5 when generating written text in order to make full use of SD3, because they observed a larger drop in typography generation without it (38% win rate).

Removing T5 for inference results in a significant drop in performance only when rendering very complex prompts that involve many details or large amounts of written text. The image above shows three random samples for each example.
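A rough back-of-the-envelope calculation shows why dropping the T5 encoder matters for memory. The snippet below estimates the size of the weights alone, assuming 16-bit precision (2 bytes per parameter); the article does not state the precision used, so that figure and the helper name are assumptions.

```python
def weight_memory_gb(num_params, bytes_per_param=2):
    """Approximate memory for model weights only, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9

# 4.7B-parameter T5 text encoder, assuming 16-bit weights.
t5_gb = weight_memory_gb(4.7e9)
print(f"T5 encoder weights at 16-bit precision: {t5_gb:.1f} GB")
```

Activations and other buffers add to this at inference time, so the real saving from removing the encoder may differ from the weights-only estimate.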

Model performance

The authors compared output images from Stable Diffusion 3 with those of various open models (including SDXL, SDXL Turbo, Stable Cascade, Playground v2.5, and PixArt-α) and closed-source systems such as DALL·E 3, Midjourney v6, and Ideogram v1, evaluating performance on the basis of human feedback. In these tests, human evaluators were shown example outputs from each model and asked to pick the best result according to how closely the output follows the context of the given prompt (prompt following), how well text is rendered according to the prompt (typography), and which image has the higher visual aesthetics.

Using SD3 as the baseline, this chart outlines its win rate based on human evaluation of visual aesthetics, prompt following, and typography.

From the test results, the authors found that Stable Diffusion 3 equals or outperforms current state-of-the-art text-to-image generation systems in all of the above areas.

In early, unoptimized inference tests on consumer hardware, the largest SD3 model, with 8B parameters, fit into the 24GB of VRAM of an RTX 4090 and took 34 seconds to generate a 1024x1024 image using 50 sampling steps.

Additionally, at initial release Stable Diffusion 3 will be available in multiple variants, ranging from 800M to 8B parameters, to further lower hardware barriers.

Please refer to the original paper for more details.

Reference link: https://stability.ai/news/stable-diffusion-3-research-paper
