Application of deep learning in Ctrip search word meaning analysis

About the author

The big data and AI R&D team of Ctrip Tourism R&D Department provides the tourism division with a wealth of AI technology products and technical capabilities.

1. Background introduction

Search is one of the most important parts of e-commerce. Most users rely on search to find the products they want, so search is both the most direct way for users to express their intent and one of the traffic sources with the highest conversion rate. The vast majority of e-commerce searches are made by entering a search term (Query) into the search box, so word meaning analysis and intent understanding of search terms have become an important part of search.

Mainstream search word meaning analysis and query understanding involve steps such as error correction, synonym replacement, word segmentation, part-of-speech tagging, entity recognition, intent recognition, term weighting, and term dropping. Take search in the tourism scenario as an example, as shown in Figure 1. When the user enters "Yunnan Xiangge Lira" as the Query in the search box, the search engine first corrects the search term to "Yunnan Shangri-La" so that the subsequent steps can parse out what the user wants to search for; if necessary, synonym replacement is also performed. Next, word segmentation and part-of-speech tagging are applied to the search term, identifying "Yunnan" as a province and "Shangri-La" as a city or hotel brand. Entity recognition then recalls the IDs of the entities corresponding to "Yunnan" and "Shangri-La" in the back-end database.

At this point an ambiguity arises: "Shangri-La" may be either a city or a hotel brand. Whether the correct category and entity can be predicted when users search matters greatly for displaying accurate results and improving the user experience. We therefore have to identify the category the user really wants and find the corresponding entity; otherwise, unwanted results may appear at the top of the search results page. Judging from prior knowledge, a user who searches for "Yunnan Shangri-La" most likely wants the city. The intent recognition step does exactly this: it identifies the user's true search intent, which here is the city "Shangri-La".

The search then enters the recall step, which is mainly responsible for finding products or content related to the intent of the search term. With the IDs of "Yunnan" and "Shangri-La" obtained in the previous steps, products or content related to both can easily be recalled. Sometimes, however, the recall results are empty or too few, which makes for a poor user experience. In that case, term dropping followed by a secondary recall is often required. Words that can be omitted, or that interfere with the search, can also be handled by term dropping.

Term dropping means discarding the relatively unimportant or loosely related words in the search term and then recalling again. So how is the importance or relatedness of each word measured? This is the job of the Term Weighting module, which treats each word as a term and computes a weight for each term through algorithms or rules. The weights directly determine the ordering of terms by importance and relatedness. For example, if the term weight of "Yunnan" is 0.2 and the term weight of "Shangri-La" is 0.8, then when a term has to be dropped, "Yunnan" should be dropped first and "Shangri-La" kept.
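As a minimal sketch of how term weights can drive the dropping of terms and a secondary recall, the Python below removes the lowest-weight term whenever recall returns too few results. The `recall` callback, the `min_results` threshold, and the toy product index are hypothetical and only illustrate the control flow; they are not Ctrip's implementation.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical toy index: product id -> set of entity tags (illustration only).
PRODUCTS = {
    "p1": {"Shangri-La", "Lijiang"},
    "p2": {"Shangri-La"},
}


def toy_recall(terms: List[str]) -> List[str]:
    """Return ids of products whose tags contain every query term."""
    return [pid for pid, tags in sorted(PRODUCTS.items())
            if all(term in tags for term in terms)]


def drop_lowest_weight_term(terms: List[str], weights: Dict[str, float]) -> List[str]:
    """Return a copy of `terms` without its single lowest-weight term."""
    if len(terms) <= 1:
        return list(terms)
    lowest = min(terms, key=lambda term: weights[term])
    remaining = list(terms)
    remaining.remove(lowest)
    return remaining


def recall_with_term_dropping(
    terms: List[str],
    weights: Dict[str, float],
    recall: Callable[[List[str]], List[str]],
    min_results: int = 1,
) -> Tuple[List[str], List[str]]:
    """Recall with all terms; while results are too few, drop the weakest term and retry."""
    current = list(terms)
    results = recall(current)
    while len(results) < min_results and len(current) > 1:
        current = drop_lowest_weight_term(current, weights)
        results = recall(current)
    return current, results


kept, hits = recall_with_term_dropping(
    ["Yunnan", "Shangri-La"],
    {"Yunnan": 0.2, "Shangri-La": 0.8},
    toy_recall,
)
print(kept, hits)
```

In this toy index no product is tagged "Yunnan", so the first recall is empty; the sketch then drops "Yunnan" (weight 0.2) and recalls again with "Shangri-La" alone.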

Figure 1 Search word meaning analysis and Query understanding steps

Traditional search intent recognition uses vocabulary matching, category probability statistics, and manually set rules. Traditional Term Weighting likewise uses vocabulary matching and statistical methods. For example, statistics such as TF-IDF, the mutual information between adjacent words, and left and right neighbor entropy are computed from the titles and content of all products and stored directly as dictionaries of scores for online use. Rules can then assist the judgment: for example, industry proper nouns are directly given higher term weights, and particles are directly given lower term weights.
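To make the traditional approach concrete, here is a small sketch, not the production method, that builds an IDF dictionary from tokenized product titles and then applies rule-based adjustments for proper nouns and particles. Only the IDF part of TF-IDF is used, on the assumption that each term occurs once in a short query. The smoothing formula, the `boost` and `damp` factors, and the toy titles are all assumptions made for illustration.

```python
import math
from collections import Counter
from typing import Dict, List, Set


def idf_table(docs: List[List[str]]) -> Dict[str, float]:
    """Smoothed inverse document frequency for every term in the tokenized docs."""
    n_docs = len(docs)
    doc_freq = Counter(term for doc in docs for term in set(doc))
    return {term: math.log((1 + n_docs) / (1 + freq)) + 1.0
            for term, freq in doc_freq.items()}


def rule_adjusted_weights(
    terms: List[str],
    idf: Dict[str, float],
    proper_nouns: Set[str],
    particles: Set[str],
    boost: float = 2.0,   # assumed multiplier for industry proper nouns
    damp: float = 0.1,    # assumed multiplier for particles
) -> List[float]:
    """Look up dictionary scores, apply the rules, and normalize to sum to 1."""
    default_idf = max(idf.values()) if idf else 1.0  # unseen terms are treated as rare
    raw = []
    for term in terms:
        score = idf.get(term, default_idf)
        if term in proper_nouns:
            score *= boost
        if term in particles:
            score *= damp
        raw.append(score)
    total = sum(raw)
    return [score / total for score in raw]


# Hypothetical tokenized product titles.
titles = [
    ["Shanghai", "Disney", "ticket"],
    ["Shanghai", "hotel"],
    ["Disney", "'s", "castle"],
]
idf = idf_table(titles)
weights = rule_adjusted_weights(
    ["Shanghai", "'s", "Disney"], idf,
    proper_nouns={"Disney"}, particles={"'s"},
)
print(weights)
```

The dictionary lookup is cheap at query time, which is why this style of scoring suits online use, but a term that never appears in the product corpus only receives a default score.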

However, traditional search intent recognition and Term Weighting algorithms cannot achieve high accuracy and recall, and in particular cannot handle rare search terms. New techniques are needed to improve the accuracy and recall of these two modules and their ability to adapt to rare search terms. In addition, because it is called so frequently, search word meaning analysis must respond very quickly. In the travel search scenario, the response time often has to be close to single-digit milliseconds, which is a big challenge for the algorithm.

2. Problem Analysis

To improve accuracy and recall, we use deep learning to improve the search intent recognition and Term Weighting algorithms. By learning from samples, deep learning can handle intent recognition and Term Weighting across a wide range of situations. In addition, introducing large-scale pre-trained language models from natural language processing further strengthens the deep learning models and reduces the amount of sample labeling required, which makes deep learning practical in search, where labeling costs were originally high.

However, deep learning models are highly complex and their neural networks have many layers, so their response speed cannot meet the strict requirements of search. We therefore use model distillation and model compression to reduce model complexity and inference time at the cost of a slight drop in accuracy and recall, which ensures a faster response and higher performance.

3. Intent recognition

Category recognition is the main component of intent recognition. After the search term (Query) is segmented, category recognition labels each segment with the category it belongs to and gives the corresponding probability. Analyzing the intent behind a user's search term helps in understanding the user's direct search needs and thereby improves the user experience. For example, when a user searches for "Yunnan Shangri-La" on the travel page, the category of "Shangri-La" is recognized as "city" rather than "hotel brand", which steers the subsequent search strategy toward the city intent.

In the travel scenario, search terms with ambiguous categories account for about 11% of all search terms entered by users, and many of them cannot be segmented further. "Cannot be segmented further" means that word segmentation yields no finer segments, and "category ambiguity" means that the search term itself has multiple possible categories. For example, when a user enters "Shangri-La", there is no finer segmentation, and the category data contains several matching categories such as "city" and "hotel brand".

If the search term is itself a combination of multiple words, its category can be clarified from the context within the search term, so the search term itself is used as the recognition target first. If the category cannot be determined from the search term alone, we first add the user's recent, mutually distinct historical search terms and recent product category click records. If that information is not available, we add the user's located station as supplementary corpus. Processing the original search term in this way yields the Query R to be recognized.
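The fallback order described above can be sketched as follows. This is an illustrative reading rather than the actual pipeline: the function name, the `max_history` cap, and the use of "more than one segment" as a stand-in for "the category can be determined from the search term itself" are all assumptions.

```python
from typing import List, Optional, Sequence


def build_query_r(
    segments: Sequence[str],
    recent_queries: Sequence[str] = (),
    recent_clicked_categories: Sequence[str] = (),
    located_station: Optional[str] = None,
    max_history: int = 3,  # assumed cap on how much history is appended
) -> List[str]:
    """Assemble the Query R that is handed to category recognition."""
    # 1. A multi-word search term carries its own context, so use it as is.
    if len(segments) > 1:
        return list(segments)

    # 2. Otherwise add distinct recent search terms and recent category clicks.
    distinct_history = list(
        dict.fromkeys(q for q in recent_queries if q not in segments)
    )[:max_history]
    context = distinct_history + list(recent_clicked_categories)

    # 3. Only when no behavioural context exists, fall back to the located station.
    if not context and located_station:
        context.append(located_station)

    return list(segments) + context


print(build_query_r(["Yunnan", "Shangri-La"], recent_queries=["hotel"]))
print(build_query_r(["Shangri-La"], ["Lijiang", "Lijiang", "Dali"], ["city"]))
print(build_query_r(["Shangri-La"], located_station="Shanghai"))
```

Keeping the original segments first means the downstream model can still tell which part of R is the user's actual search term and which part is supplementary context.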

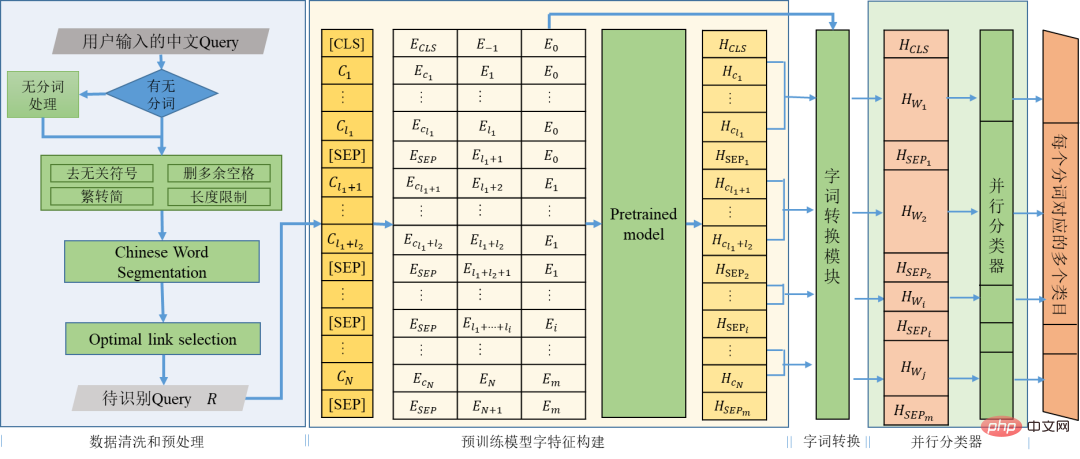

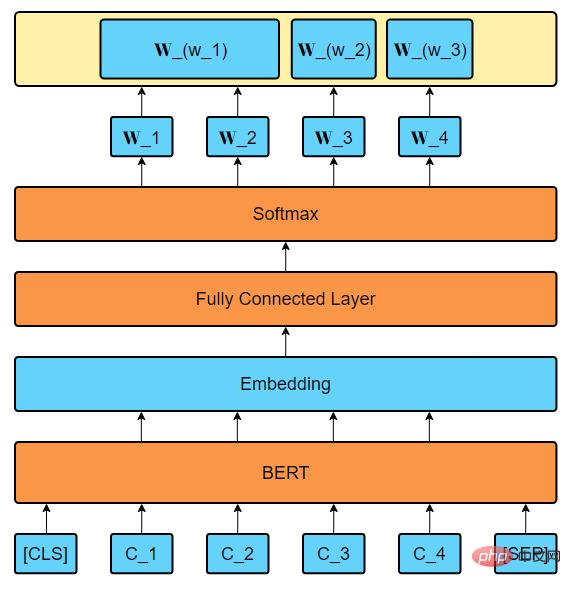

In recent years, pre-trained language models have excelled in many natural language processing tasks. In category recognition, we use the trained network parameters of the pre-trained model to obtain the character features Output_bert, which carry contextual semantics. A character-to-word conversion module then combines these character features with position encoding and obtains the character fragment corresponding to each word segment. The fragment W_i of the i-th word segment consists of l_i character features, where l_i is the length of that segment.

Based on the character fragment W_i, the conversion module aggregates the features H_wi of each word. The aggregation can be max-pooling, min-pooling, mean-pooling, and so on; experiments show that max-pooling works best. The output of the module is the word feature Output_R of the search term R. A parallel classifier then gives, for each fragment in Output_R, the matching categories covered in the category database, together with the matching probability of each category.
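The aggregation step can be illustrated with a small pure-Python sketch: character feature vectors are sliced into fragments according to the segment lengths and pooled dimension by dimension into one vector per word. The function name and the toy two-dimensional features are hypothetical; a real model would apply the same operation to BERT's hidden states with a tensor library.

```python
from typing import List, Sequence


def pool_characters_to_words(
    char_features: Sequence[Sequence[float]],
    segment_lengths: Sequence[int],
    mode: str = "max",
) -> List[List[float]]:
    """Aggregate per-character feature vectors into one vector per word segment."""
    if sum(segment_lengths) != len(char_features):
        raise ValueError("segment lengths must cover every character exactly once")
    reducers = {
        "max": max,
        "min": min,
        "mean": lambda values: sum(values) / len(values),
    }
    reduce_fn = reducers[mode]

    word_features = []
    start = 0
    for length in segment_lengths:
        fragment = char_features[start:start + length]  # the l_i vectors of segment i
        # zip(*fragment) groups the values of each feature dimension together.
        word_features.append([reduce_fn(column) for column in zip(*fragment)])
        start += length
    return word_features


# Three characters with 2-dimensional features, segmented into words of length 2 and 1.
chars = [[1.0, 4.0], [3.0, 2.0], [0.0, 5.0]]
print(pool_characters_to_words(chars, [2, 1], mode="max"))
print(pool_characters_to_words(chars, [2, 1], mode="mean"))
```

Max-pooling keeps, for every feature dimension, the strongest activation among the characters of a word, so the pooled vector has the same width regardless of word length.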

Figure 2 Schematic diagram of the overall structure of category recognition

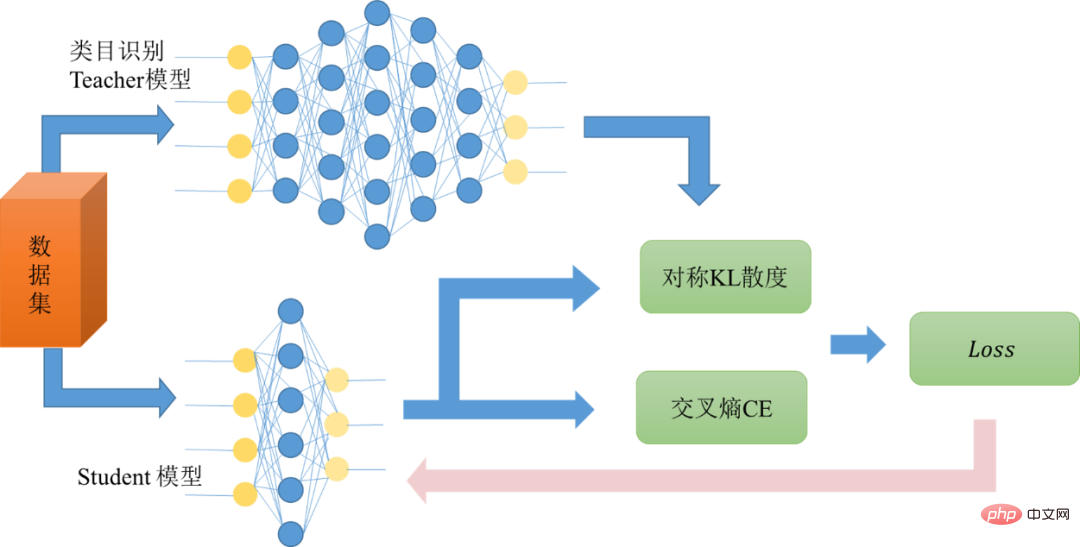

The category recognition model is based on the 12-layer BERT-base model. Because the model is too large to meet the response time requirements of online serving, we applied knowledge distillation to turn the large network into a small one that retains performance close to that of the large network while meeting the latency requirements of online serving.

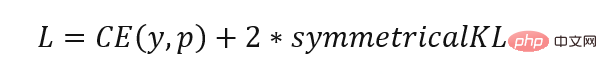

The originally trained category recognition model serves as the teacher network, and its output q serves as the target of the student network. The student network is trained so that its output p is close to q. The loss function can therefore be rewritten using a cross-entropy term and a symmetrical KL term.

Here CE is the cross entropy, symmetricalKL is the symmetrical KL (Kullback–Leibler) divergence, y is the one-hot encoding of the true label, q is the output of the teacher network, and p is the output of the student network.
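As a hedged illustration of how these quantities can fit together, the sketch below combines CE(y, p) and symmetricalKL(p, q) with a mixing weight `alpha`. The weighting scheme, the value of `alpha`, and the convention of averaging the two KL directions are assumptions; the article's exact formula may differ.

```python
import math
from typing import Sequence

EPS = 1e-12  # guards against log(0)


def cross_entropy(y: Sequence[float], p: Sequence[float]) -> float:
    """CE(y, p) for a one-hot (or soft) label y and predicted distribution p."""
    return -sum(yi * math.log(pi + EPS) for yi, pi in zip(y, p))


def kl_divergence(a: Sequence[float], b: Sequence[float]) -> float:
    """KL(a || b) for two discrete distributions."""
    return sum(ai * math.log((ai + EPS) / (bi + EPS)) for ai, bi in zip(a, b))


def symmetrical_kl(p: Sequence[float], q: Sequence[float]) -> float:
    """Average of KL(p || q) and KL(q || p); one common symmetrization."""
    return 0.5 * (kl_divergence(p, q) + kl_divergence(q, p))


def distillation_loss(
    y: Sequence[float],
    q: Sequence[float],
    p: Sequence[float],
    alpha: float = 0.5,  # assumed weight between hard-label and teacher terms
) -> float:
    """Assumed form: (1 - alpha) * CE(y, p) + alpha * symmetricalKL(p, q)."""
    return (1 - alpha) * cross_entropy(y, p) + alpha * symmetrical_kl(p, q)


y = [1.0, 0.0]             # true label: "city"
q = [0.9, 0.1]             # teacher output
p_near = [0.85, 0.15]      # student output close to the teacher
p_far = [0.4, 0.6]         # student output far from the teacher
print(distillation_loss(y, q, p_near), distillation_loss(y, q, p_far))
```

The cross-entropy term ties the student to the true labels, while the symmetrical KL term pulls the student distribution p toward the teacher distribution q, so a student that matches the teacher more closely receives a lower loss.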

Figure 3 Schematic diagram of knowledge distillation

After knowledge distillation, category recognition still achieves high accuracy and recall, while the overall 95th-percentile (P95) response time is about 5 ms.

After category recognition, entity linking and other steps are still needed to complete the intent recognition process. For details, please refer to the article "Exploration and Practice of Ctrip Entity Link Technology"; they are not elaborated here.

4. Term Weighting

For the search terms entered by the user, different terms contribute differently to the user's core semantic intent. In the secondary recall and ranking stage of search, terms of high importance deserve the focus, while terms of low importance can be ignored when dropping terms. By computing the term weight of each term in the user's search query, the products closest to the user's intent can be found in the secondary recall, improving the user experience.

First, we need real feedback data from online users as annotation data. What users type in the search box and which associated (suggested) words they click reflect, to some extent, which words in the search phrase they care about. We therefore take the input and click data on associated words, screen it manually, annotate it a second time, and use the result as the annotation data for the Term Weighting model.

For data preprocessing, the annotated data we obtain consists of phrases and their corresponding keywords. To keep the weight distribution from being too extreme, a small weight is given to non-keywords and the remaining weight is distributed over the characters of the keyword. If a phrase appears multiple times in the data with different keywords, weight is allocated to these keywords according to their frequency and then distributed over the characters of each keyword.

The model uses BERT as the feature extractor and then fits the weight of each term. A given input is converted into a form that BERT accepts; the tensor output by BERT is compressed through a fully connected layer into a one-dimensional vector, which is passed through Softmax and fitted against the target weight vector. The model framework is shown in the figure below:

Figure 4 Term Weighting model framework
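The label construction used for training can be sketched as follows, under stated assumptions: each non-keyword character gets a fixed small weight (`non_keyword_weight`, a hypothetical value), the remainder is split across keywords in proportion to how often each was annotated, and each keyword's share is spread evenly over its characters. The helper that sums character weights back into term weights reflects the character-based nature of Chinese BERT.

```python
from typing import Dict, List


def build_char_targets(
    phrase: str,
    keyword_counts: Dict[str, int],
    non_keyword_weight: float = 0.05,  # assumed small weight per non-keyword character
) -> List[float]:
    """Build character-level target weights that sum to 1 for one phrase."""
    is_keyword_char = [False] * len(phrase)
    spans = []
    for keyword in keyword_counts:
        start = phrase.find(keyword)
        if start < 0:
            raise ValueError(f"keyword {keyword!r} not found in phrase")
        spans.append((keyword, start))
        for i in range(start, start + len(keyword)):
            is_keyword_char[i] = True

    # Non-keyword characters get the small fixed weight; keyword characters start at 0.
    targets = [0.0 if flag else non_keyword_weight for flag in is_keyword_char]
    remaining = 1.0 - sum(targets)

    # Split the remaining weight across keywords by annotation frequency,
    # then evenly across the characters of each keyword.
    total_count = sum(keyword_counts.values())
    for keyword, start in spans:
        share = remaining * keyword_counts[keyword] / total_count
        for i in range(start, start + len(keyword)):
            targets[i] += share / len(keyword)
    return targets


def sum_chars_to_terms(char_weights: List[float], terms: List[str]) -> List[float]:
    """Sum character weights inside each term to obtain term weights."""
    term_weights, start = [], 0
    for term in terms:
        term_weights.append(sum(char_weights[start:start + len(term)]))
        start += len(term)
    return term_weights


# "上海的迪士尼" = "Shanghai's Disney"; "迪士尼" was annotated 3 times, "上海" once.
char_targets = build_char_targets("上海的迪士尼", {"迪士尼": 3, "上海": 1})
term_targets = sum_chars_to_terms(char_targets, ["上海", "的", "迪士尼"])
print(term_targets)
```

In this toy case the particle "的" keeps the small weight 0.05, and the remaining 0.95 is split 3:1 between "迪士尼" and "上海".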

Because Chinese BERT is character-based, the weights of all characters in each term are summed to obtain the weight of the term. Apart from the training hyperparameters, two parts of the model framework are adjustable. First, when generating the Embedding with BERT, one can use the last layer of BERT or combine the first and last layers. Second, for the loss function, besides using MSE loss to measure the gap between the predicted and actual weights, we also tried using the sum of the predicted weights of the unimportant words as the loss, but this loss is better suited to cases with only a single keyword.

The model finally outputs each term weight as a decimal. For example, the term weights for ["Shanghai", "'s", "Disney"] are [0.3433, 0.1218, 0.5349].

This model serves search and has strict response time requirements. Because the BERT model is large overall, its inference struggles to meet those requirements. So, as with the category recognition model, we further distill the trained BERT model to meet online requirements. In this project, a few transformer layers are used to fit the 12-layer BERT-base transformer. In the end, overall inference is about 10 times faster at an acceptable loss in performance, and the overall 95th-percentile (P95) response time of the Term Weighting online service is about 2 ms.

5. Future and Prospects

With deep learning, travel search has greatly improved its word meaning analysis for rare long-tail search terms. In current online search scenarios, deep learning methods are generally combined with traditional search word meaning analysis methods, which both keeps performance stable on common head search terms and strengthens generalization.

Going forward, search word meaning analysis aims to give users a better search experience. As hardware and AI technology advance, high-performance computing and intelligent computing are maturing, and intent recognition and Term Weighting in search word meaning analysis will develop toward higher performance targets. In addition, larger-scale pre-trained models and pre-trained models for the tourism domain will help further improve accuracy and recall; introducing more user information and knowledge will help improve intent recognition; and online user feedback and model iteration will help improve Term Weighting. These are the directions we will explore.

Beyond intent recognition and Term Weighting, other search functions such as part-of-speech tagging and error correction can also adopt deep learning in the future, achieving more powerful functionality and better results while still meeting response time requirements.

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

How to search for users in Xianyu

Feb 24, 2024 am 11:25 AM

How to search for users in Xianyu

Feb 24, 2024 am 11:25 AM

How does Xianyu search for users? In the software Xianyu, we can directly find the users we want to communicate with in the software. But I don’t know how to search for users. Just view it among the users after searching. Next is the introduction that the editor brings to users about how to search for users. If you are interested, come and take a look! How to search for users in Xianyu? Answer: View details among the searched users. Introduction: 1. Enter the software and click on the search box. 2. Enter the user name and click Search. 3. Select [User] under the search box to find the corresponding user.

How to use Baidu advanced search

Feb 22, 2024 am 11:09 AM

How to use Baidu advanced search

Feb 22, 2024 am 11:09 AM

How to use Baidu Advanced Search Baidu search engine is currently one of the most commonly used search engines in China. It provides a wealth of search functions, one of which is advanced search. Advanced search can help users search for the information they need more accurately and improve search efficiency. So, how to use Baidu advanced search? The first step is to open the Baidu search engine homepage. First, we need to open Baidu’s official website, which is www.baidu.com. This is the entrance to Baidu search. In the second step, click the Advanced Search button. On the right side of the Baidu search box, there is

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

StableDiffusion3’s paper is finally here! This model was released two weeks ago and uses the same DiT (DiffusionTransformer) architecture as Sora. It caused quite a stir once it was released. Compared with the previous version, the quality of the images generated by StableDiffusion3 has been significantly improved. It now supports multi-theme prompts, and the text writing effect has also been improved, and garbled characters no longer appear. StabilityAI pointed out that StableDiffusion3 is a series of models with parameter sizes ranging from 800M to 8B. This parameter range means that the model can be run directly on many portable devices, significantly reducing the use of AI

This article is enough for you to read about autonomous driving and trajectory prediction!

Feb 28, 2024 pm 07:20 PM

This article is enough for you to read about autonomous driving and trajectory prediction!

Feb 28, 2024 pm 07:20 PM

Trajectory prediction plays an important role in autonomous driving. Autonomous driving trajectory prediction refers to predicting the future driving trajectory of the vehicle by analyzing various data during the vehicle's driving process. As the core module of autonomous driving, the quality of trajectory prediction is crucial to downstream planning control. The trajectory prediction task has a rich technology stack and requires familiarity with autonomous driving dynamic/static perception, high-precision maps, lane lines, neural network architecture (CNN&GNN&Transformer) skills, etc. It is very difficult to get started! Many fans hope to get started with trajectory prediction as soon as possible and avoid pitfalls. Today I will take stock of some common problems and introductory learning methods for trajectory prediction! Introductory related knowledge 1. Are the preview papers in order? A: Look at the survey first, p

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

This paper explores the problem of accurately detecting objects from different viewing angles (such as perspective and bird's-eye view) in autonomous driving, especially how to effectively transform features from perspective (PV) to bird's-eye view (BEV) space. Transformation is implemented via the Visual Transformation (VT) module. Existing methods are broadly divided into two strategies: 2D to 3D and 3D to 2D conversion. 2D-to-3D methods improve dense 2D features by predicting depth probabilities, but the inherent uncertainty of depth predictions, especially in distant regions, may introduce inaccuracies. While 3D to 2D methods usually use 3D queries to sample 2D features and learn the attention weights of the correspondence between 3D and 2D features through a Transformer, which increases the computational and deployment time.

WPS table cannot find the data you are searching for, please check the search option location

Mar 19, 2024 pm 10:13 PM

WPS table cannot find the data you are searching for, please check the search option location

Mar 19, 2024 pm 10:13 PM

In the era dominated by intelligence, office software has also become popular, and Wps forms are adopted by the majority of office workers due to their flexibility. At work, we are required not only to learn simple form making and text entry, but also to master more operational skills in order to complete the tasks in actual work. Reports with data and using forms are more convenient, clear and accurate. The lesson we bring to you today is: The WPS table cannot find the data you are searching for. Why please check the search option location? 1. First select the Excel table and double-click to open it. Then in this interface, select all cells. 2. Then in this interface, click the "Edit" option in "File" in the top toolbar. 3. Secondly, in this interface, click "

How to search for stores on mobile Taobao How to search for store names

Mar 13, 2024 am 11:00 AM

How to search for stores on mobile Taobao How to search for store names

Mar 13, 2024 am 11:00 AM

The mobile Taobao app software provides a lot of good products. You can buy them anytime and anywhere, and everything is genuine. The price tag of each product is clear. There are no complicated operations at all, making you enjoy more convenient shopping. . You can search and purchase freely as you like. The product sections of different categories are all open. Add your personal delivery address and contact number to facilitate the courier company to contact you, and check the latest logistics trends in real time. Then some new users are using it for the first time. If you don’t know how to search for products, of course you only need to enter keywords in the search bar to find all the product results. You can’t stop shopping freely. Now the editor will provide detailed online methods for mobile Taobao users to search for store names. 1. First open the Taobao app on your mobile phone,

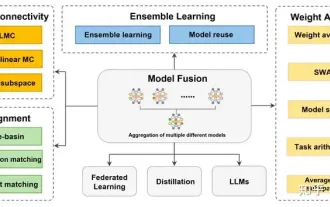

Review! Deep model fusion (LLM/basic model/federated learning/fine-tuning, etc.)

Apr 18, 2024 pm 09:43 PM

Review! Deep model fusion (LLM/basic model/federated learning/fine-tuning, etc.)

Apr 18, 2024 pm 09:43 PM

In September 23, the paper "DeepModelFusion:ASurvey" was published by the National University of Defense Technology, JD.com and Beijing Institute of Technology. Deep model fusion/merging is an emerging technology that combines the parameters or predictions of multiple deep learning models into a single model. It combines the capabilities of different models to compensate for the biases and errors of individual models for better performance. Deep model fusion on large-scale deep learning models (such as LLM and basic models) faces some challenges, including high computational cost, high-dimensional parameter space, interference between different heterogeneous models, etc. This article divides existing deep model fusion methods into four categories: (1) "Pattern connection", which connects solutions in the weight space through a loss-reducing path to obtain a better initial model fusion