Get Amazon product information using Python

Introduction

Compared with domestic shopping websites, Amazon can be requested directly with Python's basic requests library. As long as access is not too frequent, the data we want can be obtained without triggering the site's protection mechanisms. This article briefly introduces the basic crawling process in three parts:

- Use the GET request of requests to obtain the page content of the Amazon list and details pages

- Use css/xpath to parse the obtained content and extract the key data

- The role of dynamic IP and how to use it

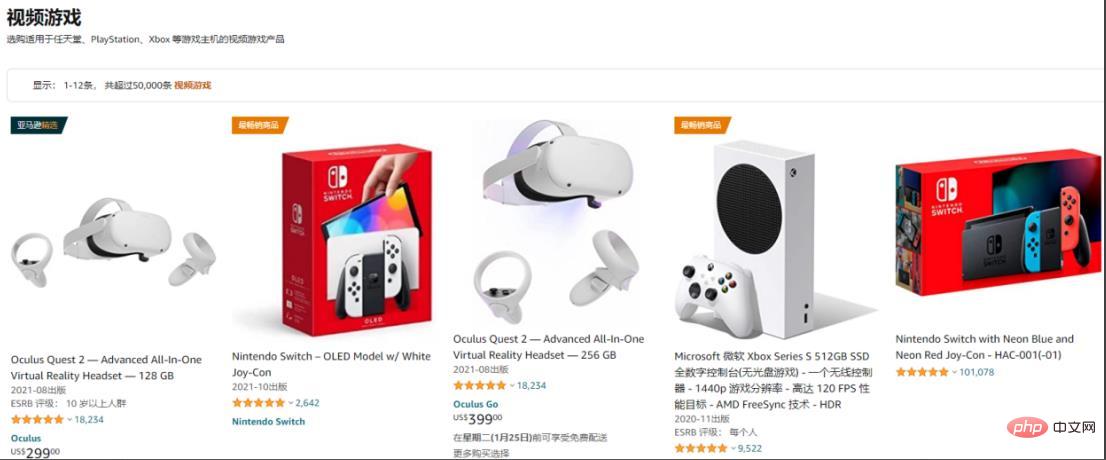

1. Obtain information from the Amazon list page

Take the video games category as an example:

Get the product information available in the list, such as the product name and details link, which can then be used to access further content.

Use requests.get() to obtain the web page content, set the headers, and use an xpath selector to select the content of the relevant tags:

import requests

from parsel import Selector

from urllib.parse import urljoin

spiderurl = 'https://www.amazon.com/s?i=videogames-intl-ship'

headers = {

"authority": "www.amazon.com",

"user-agent": "Mozilla/5.0 (iPhone; CPU iPhone OS 10_3_3 like Mac OS X) AppleWebKit/603.3.8 (KHTML, like Gecko) Mobile/14G60 MicroMessenger/6.5.19 NetType/4G Language/zh_TW",

}

resp = requests.get(spiderurl, headers=headers)

content = resp.content.decode('utf-8')

select = Selector(text=content)

nodes = select.xpath("//a[@title='product-detail']")

for node in nodes:

itemUrl = node.xpath("./@href").extract_first()

itemName = node.xpath("./div/h2/span/text()").extract_first()

if itemUrl and itemName:

itemUrl = urljoin(spiderurl,itemUrl)#用urljoin方法凑完整链接

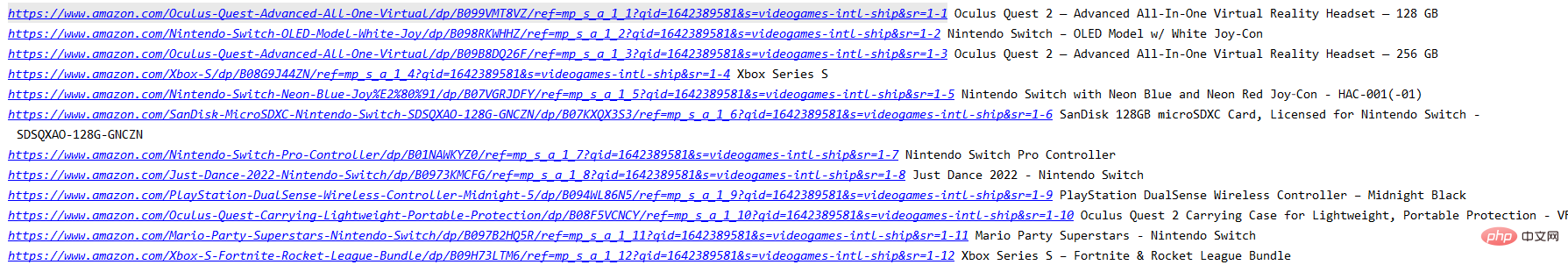

print(itemUrl,itemName)The information currently available on the current list page that has been obtained at this time :

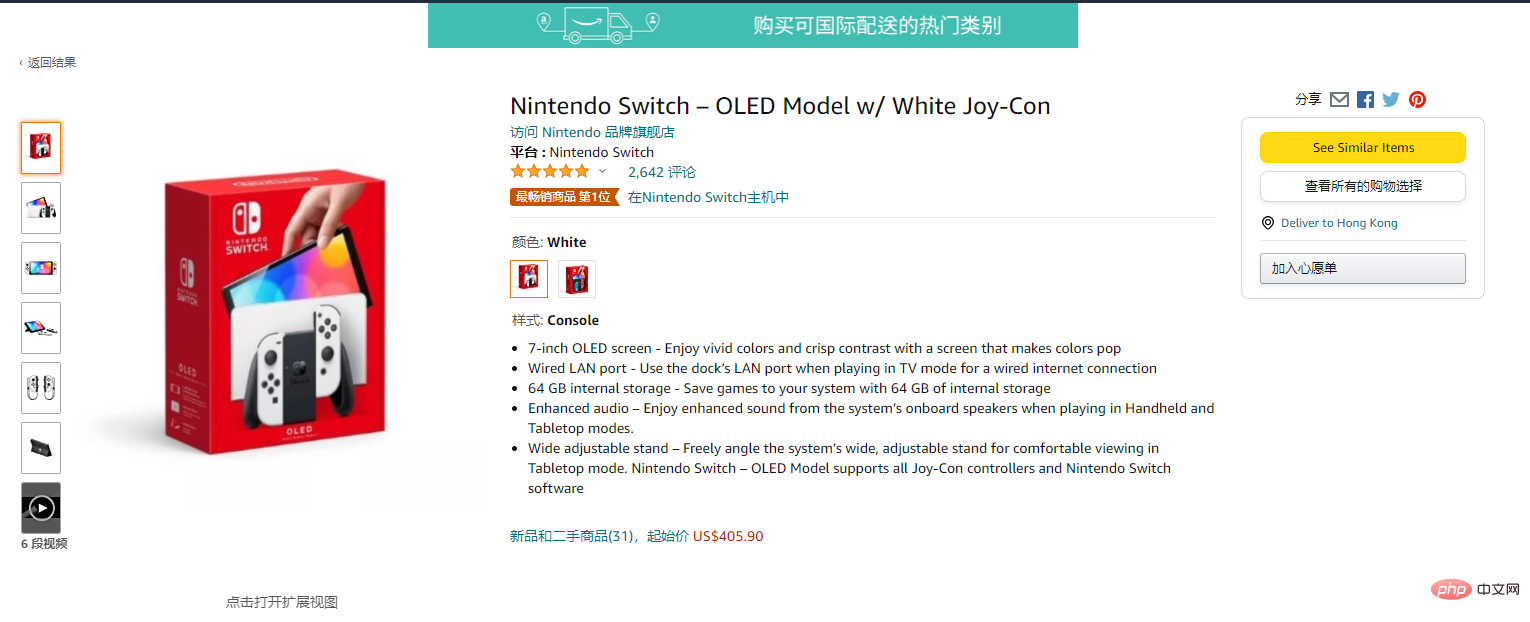
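The selection logic above can be tried offline before sending any request. The following is a minimal sketch, not the author's code: it swaps parsel for the standard library's `xml.etree.ElementTree` so it runs without extra packages, and the `SAMPLE` markup, the `extract_items` helper, and the product IDs are hypothetical stand-ins for a real list page.

```python
from urllib.parse import urljoin
import xml.etree.ElementTree as ET

BASE_URL = 'https://www.amazon.com/s?i=videogames-intl-ship'

# Hypothetical, simplified markup; a real list page is far larger
# and is not guaranteed to be well-formed XML.
SAMPLE = """
<div>
  <a title="product-detail" href="/dp/B000000001"><div><h2><span>Example Game One</span></h2></div></a>
  <a title="other" href="/help">Help</a>
  <a title="product-detail" href="https://www.amazon.com/dp/B000000002"><div><h2><span>Example Game Two</span></h2></div></a>
</div>
"""


def extract_items(markup, base_url):
    """Return (absolute_url, name) pairs for every product link in the markup."""
    root = ET.fromstring(markup.strip())
    items = []
    for node in root.findall(".//a[@title='product-detail']"):
        href = node.get("href")
        name = node.findtext("./div/h2/span")
        if href and name:
            # urljoin leaves absolute links alone and completes relative ones
            items.append((urljoin(base_url, href), name.strip()))
    return items


for url, name in extract_items(SAMPLE, BASE_URL):
    print(url, name)
```

The link with `title="other"` is skipped by the attribute filter, and the relative `href` is resolved against the host of the list page URL.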

2. Get the details page information

Request the details page and extract the main image, price, and feature list with css selectors:

res = requests.get(itemUrl, headers=headers)

content = res.content.decode('utf-8')

Select = Selector(text=content)

itemPic = Select.css('#main-image::attr(src)').extract_first()

itemPrice = Select.css('.a-offscreen::text').extract_first()

itemInfo = Select.css('#feature-bullets').extract_first()

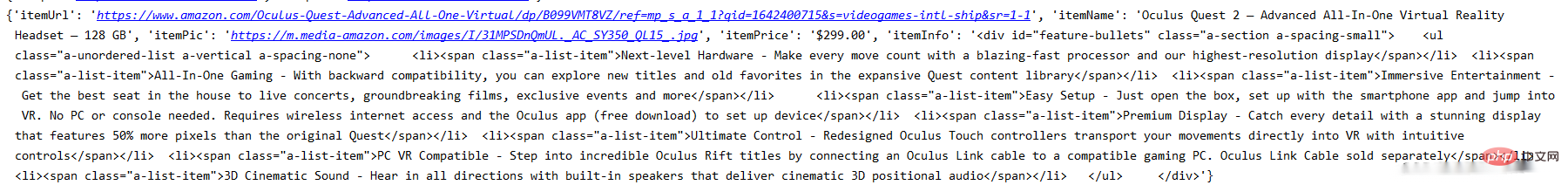

data = {}

data['itemUrl'] = itemUrl

data['itemName'] = itemName

data['itemPic'] = itemPic

data['itemPrice'] = itemPrice

data['itemInfo'] = itemInfo

print(data)

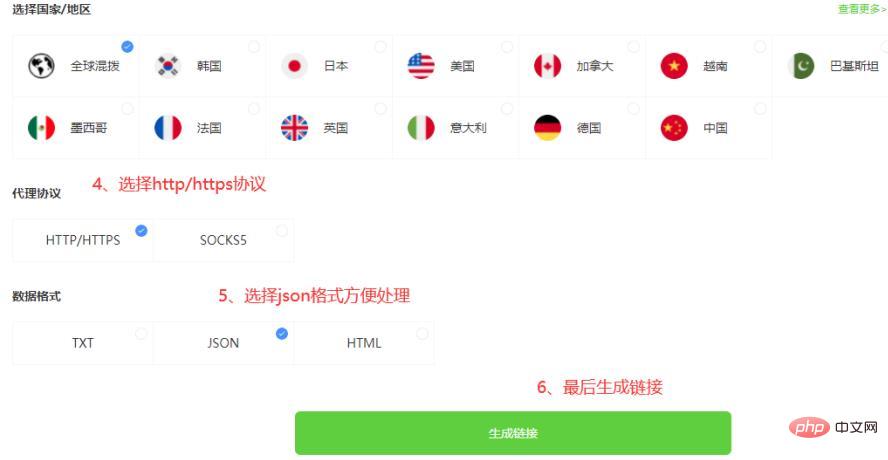
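Note that `extract_first()` returns `None` when a selector matches nothing, and the price arrives as display text rather than a number. A minimal sketch of normalizing it follows; the `parse_price` helper is hypothetical, and it assumes US-style formatting (comma as thousands separator, dot as decimal point), which may not hold for other marketplaces.

```python
import re
from decimal import Decimal, InvalidOperation


def parse_price(text):
    """Convert a price string such as '$1,299.99' to a Decimal, or None."""
    if not text:
        return None
    # Keep only digits and the decimal point; drop currency symbols and commas
    cleaned = re.sub(r"[^\d.]", "", text)
    try:
        return Decimal(cleaned)
    except InvalidOperation:
        # Nothing numeric was left, e.g. "Currently unavailable"
        return None


print(parse_price("$59.99"))
```

Storing `parse_price(itemPrice)` alongside the raw text keeps the original string available if the format turns out to differ from the assumption.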

3. Proxy Settings

Currently, domestic access to Amazon is very unstable, and connections often fail. If you really need to crawl Amazon's information, it is best to use a stable proxy. I use ipidea's proxy here, which offers 500 MB of free traffic. With a proxy, the access success rate is higher and the speed is faster. The URL is here: http://www.ipidea.net/?utm-source=PHP&utm-keyword=?PHP

There are two ways to use the proxy: obtain an IP address through the API, or authenticate with an account and password. The methods are as follows:

3.1.1 Obtaining a proxy through the API

3.1.2 Code for obtaining an IP through the API
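Before the full function, the step that turns the API response into the `proxies` dictionary expected by requests can be looked at in isolation. This is a minimal sketch: the helper name `proxies_from_api` is hypothetical, the sample address is a documentation-range placeholder, and the response shape (`data` holding a list of entries with `ip` and `port`) is taken from the code in this section rather than from the provider's documentation.

```python
def proxies_from_api(payload):
    """Build a requests-style proxies dict from a decoded API response, or None."""
    try:
        first = payload["data"][0]
        address = "http://{}:{}".format(first["ip"], first["port"])
    except (KeyError, IndexError, TypeError):
        # Missing keys, an empty list, or a non-dict payload
        return None
    # The same HTTP proxy endpoint is used for both schemes
    return {"http": address, "https": address}


sample = {"data": [{"ip": "203.0.113.7", "port": 8080}]}
print(proxies_from_api(sample))
```

Returning `None` on a malformed response lets the caller decide whether to retry or to continue without a proxy.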

def getProxies():
    # Get one and only one IP
    api_url = 'your generated API link'
    try:
        # The request itself is inside the try block so that timeouts are caught too
        res = requests.get(api_url, timeout=5)
        if res.status_code == 200:
            api_data = res.json()['data'][0]
            proxies = {
                'http': 'http://{}:{}'.format(api_data['ip'], api_data['port']),
                'https': 'http://{}:{}'.format(api_data['ip'], api_data['port']),
            }
            print(proxies)
            return proxies
        else:
            print('Failed to get proxy')
    except Exception as e:
        # Covers network errors and unexpected response formats
        print('Failed to get proxy', e)

3.2.1 Obtaining a proxy with an account and password (registration address: http://www.ipidea.net/?utm-source=PHP&utm-keyword=?PHP)

Because this method authenticates with an account and password, you first need to create a sub-account in the account center:

# Get a proxy using account/password authentication
def getAccountIp():
    # Test the proxy against an IP-echo service and return it if the test succeeds
    mainUrl = 'https://api.myip.la/en?json'
    headers = {
        "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8",
        "User-Agent": "Mozilla/5.0 (iPhone; CPU iPhone OS 10_3_3 like Mac OS X) AppleWebKit/603.3.8 (KHTML, like Gecko) Mobile/14G60 MicroMessenger/6.5.19 NetType/4G Language/zh_TW",
    }
    # Replace "account" and "password" with the sub-account credentials.
    # The user:password@host:port form is assumed here; check it against the provider's documentation.
    entry = 'http://{}-zone-custom:{}@proxy.ipidea.io:2334'.format("account", "password")
    proxy = {
        'http': entry,
        'https': entry,
    }
    try:
        res = requests.get(mainUrl, headers=headers, proxies=proxy, timeout=10)
        if res.status_code == 200:
            return proxy
    except Exception as e:
        print("Request failed", e)
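If the password contains characters such as `@` or `:`, placing it directly in the proxy URL produces an address that cannot be parsed. A minimal sketch of percent-encoding the credentials first follows; the `build_auth_proxy` helper is hypothetical, and the `user:password@host:port` layout with the `-zone-custom` suffix is an assumption based on the code in this section, so check it against the provider's documentation.

```python
from urllib.parse import quote


def build_auth_proxy(account, password, host="proxy.ipidea.io", port=2334):
    """Return a requests-style proxies dict with percent-encoded credentials."""
    user = quote("{}-zone-custom".format(account), safe="")
    secret = quote(password, safe="")
    entry = "http://{}:{}@{}:{}".format(user, secret, host, port)
    return {"http": entry, "https": entry}


print(build_auth_proxy("user", "p@ss:word"))
```

The resulting dictionary can be passed as the `proxies` argument of `requests.get()` in the same way as the one returned by `getAccountIp()`.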

4. Full code

# coding=utf-8
import requests
from parsel import Selector
from urllib.parse import urljoin


def getProxies():
    # Get one and only one IP
    api_url = 'your generated API link'
    try:
        # The request itself is inside the try block so that timeouts are caught too
        res = requests.get(api_url, timeout=5)
        if res.status_code == 200:
            api_data = res.json()['data'][0]
            proxies = {
                'http': 'http://{}:{}'.format(api_data['ip'], api_data['port']),
                'https': 'http://{}:{}'.format(api_data['ip'], api_data['port']),
            }
            print(proxies)
            return proxies
        else:
            print('Failed to get proxy')
    except Exception as e:
        # Covers network errors and unexpected response formats
        print('Failed to get proxy', e)


spiderurl = 'https://www.amazon.com/s?i=videogames-intl-ship'
headers = {
    "authority": "www.amazon.com",
    "user-agent": "Mozilla/5.0 (iPhone; CPU iPhone OS 10_3_3 like Mac OS X) AppleWebKit/603.3.8 (KHTML, like Gecko) Mobile/14G60 MicroMessenger/6.5.19 NetType/4G Language/zh_TW",
}

# Request the list page through a proxy
proxies = getProxies()
resp = requests.get(spiderurl, headers=headers, proxies=proxies)
content = resp.content.decode('utf-8')
select = Selector(text=content)
nodes = select.xpath("//a[@title='product-detail']")
for node in nodes:
    itemUrl = node.xpath("./@href").extract_first()
    itemName = node.xpath("./div/h2/span/text()").extract_first()
    if itemUrl and itemName:
        itemUrl = urljoin(spiderurl, itemUrl)
        # Request the details page through a fresh proxy
        proxies = getProxies()
        res = requests.get(itemUrl, headers=headers, proxies=proxies)
        content = res.content.decode('utf-8')
        Select = Selector(text=content)
        itemPic = Select.css('#main-image::attr(src)').extract_first()
        itemPrice = Select.css('.a-offscreen::text').extract_first()
        itemInfo = Select.css('#feature-bullets').extract_first()
        data = {}
        data['itemUrl'] = itemUrl
        data['itemName'] = itemName
        data['itemPic'] = itemPic
        data['itemPrice'] = itemPrice
        data['itemInfo'] = itemInfo
        print(data)

With the steps above, you can implement the most basic retrieval of Amazon information.

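So far only the most basic data has been obtained. To get more, you can modify the xpath/css selectors yourself to extract the content you want. A stable dynamic IP also reduces the time you spend waiting on requests, which improves efficiency both when testing during development and when crawling in small batches. That is all for this article.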
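Because any single proxy can fail partway through a crawl, one way to reduce waiting is to retry a failed request with a newly obtained proxy. The following is a minimal sketch under stated assumptions, not part of the original code: `fetch_with_retry` and its parameters are hypothetical, and the actual request and proxy lookup are passed in as callables so the helper itself needs only the standard library.

```python
import time


def fetch_with_retry(url, fetch, get_proxies, attempts=3, delay=1.0, sleep=time.sleep):
    """Call fetch(url, proxies) up to `attempts` times, asking for a new proxy each time."""
    last_error = None
    for attempt in range(1, attempts + 1):
        proxies = get_proxies()
        try:
            return fetch(url, proxies)
        except Exception as exc:
            last_error = exc
            if attempt < attempts:
                # Pause briefly so retries do not hammer the site
                sleep(delay)
    raise RuntimeError("all {} attempts failed".format(attempts)) from last_error
```

With the code in this article it could be called as `fetch_with_retry(itemUrl, lambda u, p: requests.get(u, headers=headers, proxies=p, timeout=10), getProxies)`.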
