Python crawler audio data example

1: Foreword

This time we crawled the channel information of every station under the popular column of Himalaya (ximalaya.com), along with the details of each audio track in those channels, and saved the crawled data to MongoDB for later use. The data set is around 700,000 records. Each audio record includes the audio download address, channel information, an introduction, and many other fields.

I had my first interview yesterday. The company works on artificial intelligence and big data, and I was hoping to intern there during the summer vacation of my sophomore year. They asked whether I had ever crawled audio data, so I analyzed Himalaya and crawled its audio data. At present I am still waiting for the third-round interview, or for notice of the final result. (Getting some recognition makes me very happy, regardless of success or failure.)

2: Running environment

IDE: PyCharm 2017

Python 3.6

- pymongo 3.4.0

- requests 2.14.2

- lxml 3.7.2

- BeautifulSoup 4.5.3

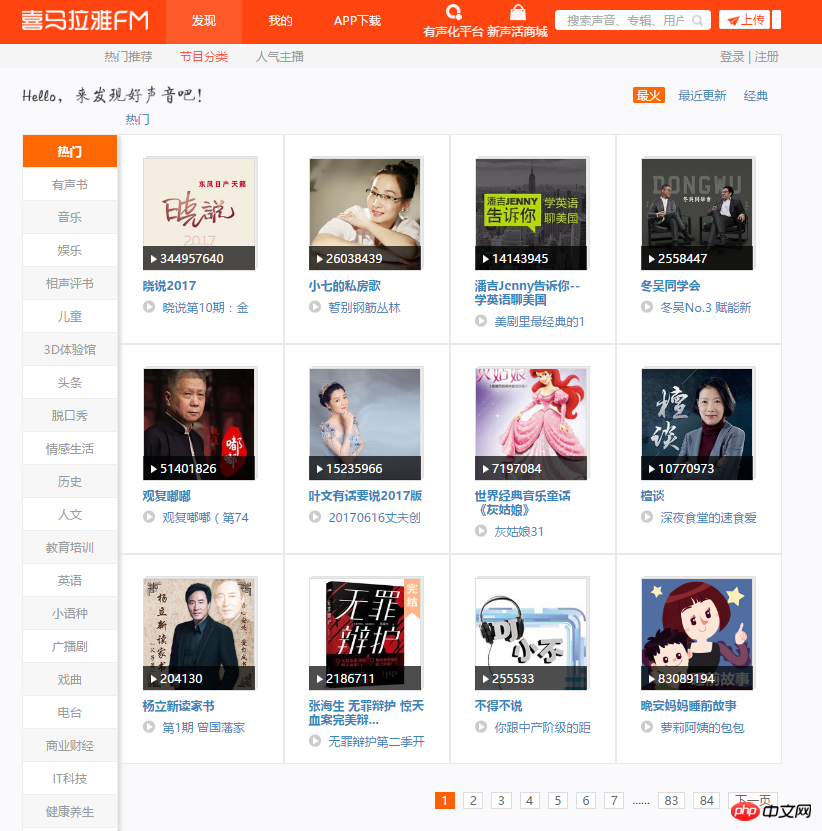

1. First open the main page for this crawl. Each page shows 12 channels, each channel contains many audio tracks, and some channels span many pages. Crawl plan: loop through the 84 listing pages, parse each page, grab each channel's name, image link, and channel link, and save them to MongoDB.

Popular Channels

2. Open the browser's developer tools, inspect the page, and locate the data you want. The following code grabs the information of all popular channels; the complete code in step 6 also saves it to MongoDB.

    start_urls = ['http://www.ximalaya.com/dq/all/{}'.format(num) for num in range(1, 85)]
    for start_url in start_urls:
        html = requests.get(start_url, headers=headers1).text
        soup = BeautifulSoup(html, 'lxml')
        for item in soup.find_all(class_="albumfaceOutter"):
            content = {
                'href': item.a['href'],
                'title': item.img['alt'],
                'img_url': item.img['src']
            }
            print(content)

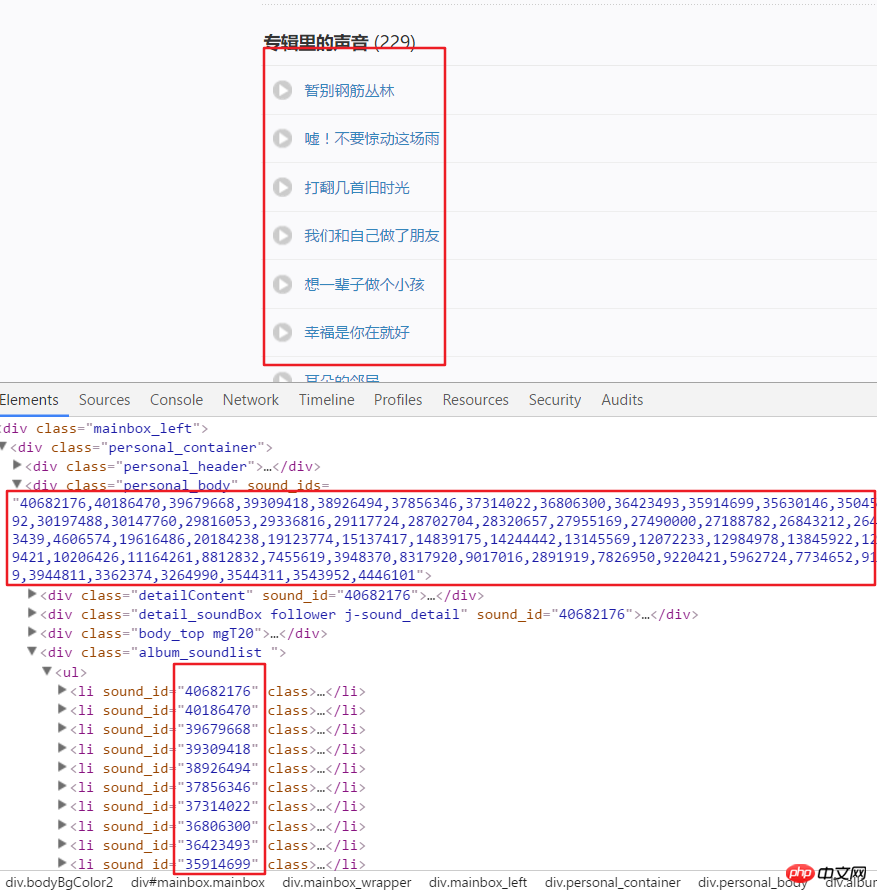

Analysis Channel

3. Next we obtain all the audio data in each channel. The link of each channel was already obtained by parsing the listing pages. For example, after entering one of these links we analyze the page structure. Each audio has a specific ID, and the IDs can be read from an attribute of a div. Use split() and int() to convert the attribute value into individual IDs.
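The split() and int() step can be sketched as follows. The attribute value is made-up sample data, and `parse_sound_ids` is a hypothetical helper name that does not appear in the original code.

```python
def parse_sound_ids(raw):
    """Turn a comma-separated attribute value into a list of integer IDs."""
    # Skip empty pieces so a trailing comma or an empty attribute does not raise.
    return [int(piece) for piece in raw.split(',') if piece.strip()]


# Sample attribute value (made up for illustration).
sample = '16960840,16960839,16960838'
ids = parse_sound_ids(sample)
print(ids)
```

Converting to int is optional when the IDs are only formatted back into a URL, but it catches malformed values early.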

Channel page analysis

4. Then click an audio link, open the developer tools, refresh the page, and select XHR in the network panel. Click one of the JSON links to see that it contains all the details of this audio.

    html = requests.get(url, headers=headers2).text
    numlist = etree.HTML(html).xpath('//div[@class="personal_body"]/@sound_ids')[0].split(',')
    for i in numlist:
        murl = 'http://www.ximalaya.com/tracks/{}.json'.format(i)
        html = requests.get(murl, headers=headers1).text
        dic = json.loads(html)
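To try the URL-building and JSON-parsing steps without touching the network, a small sketch may help. The URL pattern comes from the article; the helper names `track_urls` and `pick_fields`, the sample response body, and its field names (`title`, `play_path`) are assumptions made for illustration only.

```python
import json

TRACK_URL = 'http://www.ximalaya.com/tracks/{}.json'  # URL pattern from the article


def track_urls(sound_ids):
    """Build one JSON detail URL per audio ID."""
    return [TRACK_URL.format(i) for i in sound_ids]


def pick_fields(json_text, wanted):
    """Parse a JSON response body and keep only the wanted keys that exist."""
    dic = json.loads(json_text)
    return {key: dic[key] for key in wanted if key in dic}


# Hypothetical response body; the real payload is not shown in the article.
sample_body = '{"id": 123, "title": "demo", "play_path": "http://example.com/a.m4a"}'
print(track_urls(['123', '456']))
print(pick_fields(sample_body, ['title', 'play_path', 'missing']))
```

Keeping only the fields you need is one option; the article's own code stores the whole parsed dictionary.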

Audio page analysishtml = requests.get(url, headers=headers2).text

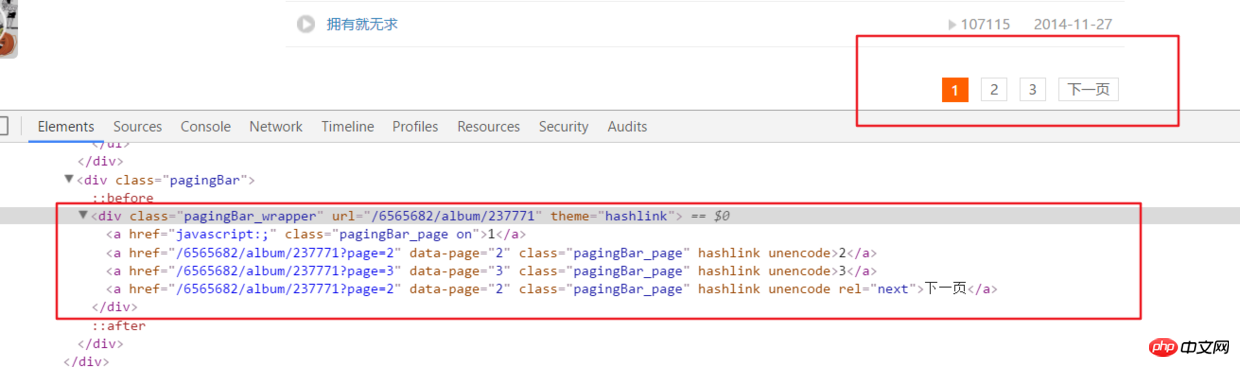

ifanother = etree.HTML(html).xpath('//div[@class="pagingBar_wrapper"]/a[last()-1]/@data-page')if len(ifanother):num = ifanother[0]

print('本频道资源存在' + num + '个页面')for n in range(1, int(num)):

print('开始解析{}个中的第{}个页面'.format(num, n))

url2 = url + '?page={}'.format(n)# 之后就接解析音频页函数就行,后面有完整代码说明 Paging
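The paging logic can be isolated into a small function that is easy to check by hand. This is a sketch under two assumptions: the `data-page` value read from the paging bar is the number of the last page, and page 1 is the channel URL itself. `page_urls` is a hypothetical helper name and the example URL is a placeholder.

```python
def page_urls(channel_url, last_page):
    """Return the URL of every page in a channel, first page included."""
    urls = [channel_url]  # page 1 is the channel URL itself
    # Start at page 2 and include the last page.
    for n in range(2, int(last_page) + 1):
        urls.append(channel_url + '?page={}'.format(n))
    return urls


# Placeholder channel URL; the last page number arrives as a string from the HTML.
for page in page_urls('http://example.com/channel/1/', '3'):
    print(page)
```

Note that `range(1, int(num))` would stop one page short of the last page, which is why the sketch uses `int(last_page) + 1` as the upper bound.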

Paging

6. All code

__author__ = '布咯咯_rieuse'import jsonimport randomimport timeimport pymongoimport requestsfrom bs4 import BeautifulSoupfrom lxml import etree

clients = pymongo.MongoClient('localhost')

db = clients["XiMaLaYa"]

col1 = db["album"]

col2 = db["detaile"]

UA_LIST = [] # 很多User-Agent用来随机使用可以防ban,显示不方便不贴出来了

headers1 = {} # 访问网页的headers,这里显示不方便我就不贴出来了

headers2 = {} # 访问网页的headers这里显示不方便我就不贴出来了def get_url():

start_urls = ['http://www.ximalaya.com/dq/all/{}'.format(num) for num in range(1, 85)]for start_url in start_urls:

html = requests.get(start_url, headers=headers1).text

soup = BeautifulSoup(html, 'lxml')for item in soup.find_all(class_="albumfaceOutter"):

content = {'href': item.a['href'],'title': item.img['alt'],'img_url': item.img['src']

}

col1.insert(content)

print('写入一个频道' + item.a['href'])

print(content)

another(item.a['href'])

time.sleep(1)def another(url):

html = requests.get(url, headers=headers2).text

ifanother = etree.HTML(html).xpath('//div[@class="pagingBar_wrapper"]/a[last()-1]/@data-page')if len(ifanother):

num = ifanother[0]

print('本频道资源存在' + num + '个页面')for n in range(1, int(num)):

print('开始解析{}个中的第{}个页面'.format(num, n))

url2 = url + '?page={}'.format(n)

get_m4a(url2)

get_m4a(url)def get_m4a(url):

time.sleep(1)

html = requests.get(url, headers=headers2).text

numlist = etree.HTML(html).xpath('//div[@class="personal_body"]/@sound_ids')[0].split(',')for i in numlist:

murl = 'http://www.ximalaya.com/tracks/{}.json'.format(i)

html = requests.get(murl, headers=headers1).text

dic = json.loads(html)

col2.insert(dic)

print(murl + '中的数据已被成功插入mongodb')if __name__ == '__main__':

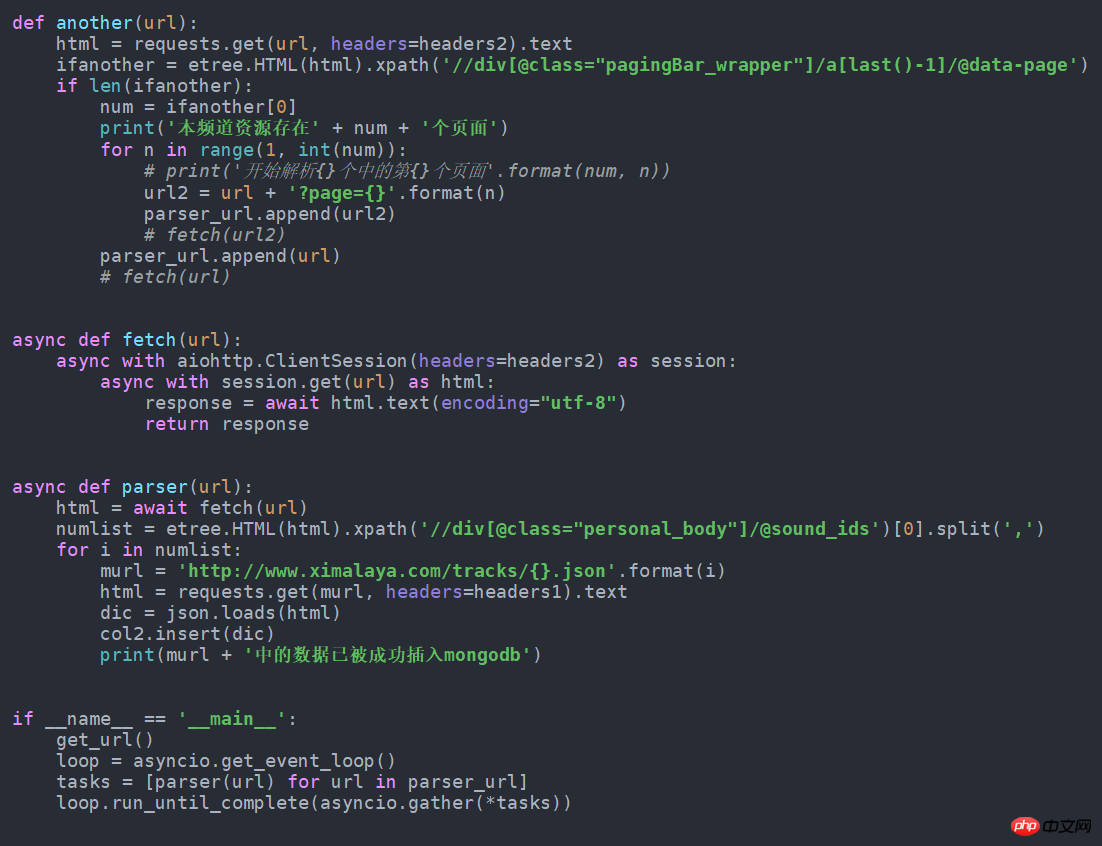

get_url()7. If it can be faster if it is changed to asynchronous form, just change it to the following. I tried to get nearly 100 more pieces of data per minute than normal. This source code is also in github.

asynchronous
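The author's asynchronous version is not reproduced in this text. As a rough illustration of the idea only, here is a minimal sketch that uses the standard-library asyncio module with a semaphore to cap concurrent requests. It assumes Python 3.7 or later (for `asyncio.run`); `crawl_all` and `fake_fetch` are hypothetical names, and `fake_fetch` stands in for a real HTTP call so the example runs without network access.

```python
import asyncio


async def crawl_all(urls, fetch, limit=10):
    """Fetch every URL concurrently, with at most `limit` requests in flight."""
    semaphore = asyncio.Semaphore(limit)

    async def bounded(url):
        async with semaphore:
            return await fetch(url)

    # gather() returns results in the same order as the input URLs.
    return await asyncio.gather(*(bounded(u) for u in urls))


async def fake_fetch(url):
    """Stand-in for a real HTTP request made with an async HTTP client."""
    await asyncio.sleep(0.01)
    return {'url': url}


urls = ['http://www.ximalaya.com/tracks/{}.json'.format(i) for i in range(5)]
results = asyncio.run(crawl_all(urls, fake_fetch, limit=2))
print(len(results))
```

The concurrency limit plays the role that `time.sleep(1)` plays in the synchronous code: it keeps the request rate bounded while still overlapping the waiting time of several requests.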
