Implementation code for Puppeteer simulated login and page capture

This article presents implementation code for simulating login and capturing pages with Puppeteer, and covers the precautions to take when doing so. Let's look at a practical case.

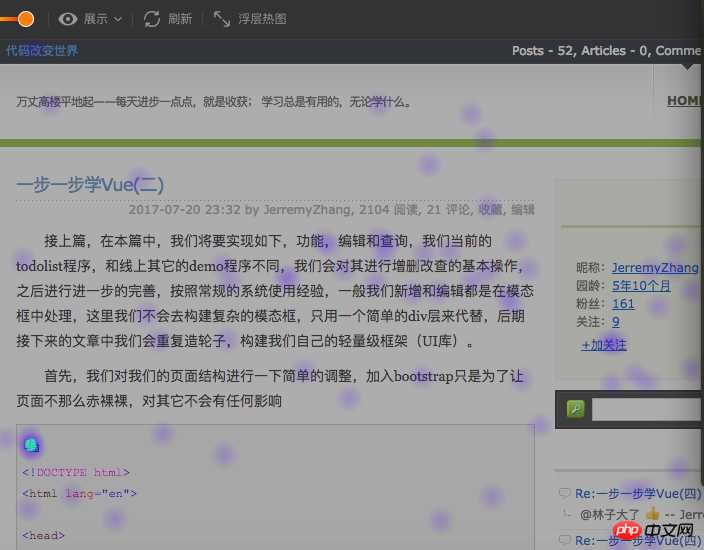

About heat maps

In the web analytics industry, a heat map is a good way to show how users behave on a website. It makes it possible to analyze user preferences and optimize the website in a targeted way. The picture above is an example of a heat map (from Ptengine).

The picture clearly shows where users focus their attention. We won't dwell on what heat maps do for the product; this article briefly analyzes and summarizes how heat maps are implemented.

Mainstream ways to implement heat maps

Displaying a heat map generally involves the following stages:

1. Obtain the website page

2. Obtain the processed user data

3. Draw the heat map

This article focuses on stage 1 and describes in detail the mainstream ways of obtaining the website page for a heat map:

1. Embed the user's website directly in an iframe

2. Capture the user's page, save it locally, and embed the local copy through an iframe ("local" here means on the analysis tool's side)

Each of the two approaches has its own advantages and disadvantages.

The first approach, embedding the user's website directly, has certain limitations. For example, to prevent iframe hijacking (clickjacking), the user's website may refuse to be embedded: it can send the X-Frame-Options HTTP header with the value SAMEORIGIN (setting X-Frame-Options in a meta tag has no effect, because browsers only honor the header), or it can break out of the frame in JavaScript with code such as if (window.top !== window.self) { window.top.location = window.location; }. In such cases the customer has to change their website before the analysis tool's iframe can load it, which is inconvenient, because not everyone who needs to analyze a website is able to manage that website.
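Before choosing the iframe approach, the analysis tool could check whether a customer's page refuses to be framed. The sketch below is a hypothetical helper (canBeFramed is not from the original article) that inspects a page's response headers, passed as a plain object with lower-cased names. Besides X-Frame-Options it also looks at the CSP frame-ancestors directive, which modern browsers apply and which takes precedence when present. It cannot detect JavaScript frame busting, which only shows up in the page's scripts.

```javascript
// Hypothetical helper: decide from response headers whether a page served from
// `pageOrigin` may be embedded in an iframe on `toolOrigin`.
// `headers` is a plain object whose header names are lower-cased.
function canBeFramed(headers, pageOrigin, toolOrigin) {
  const sameOrigin = pageOrigin === toolOrigin;

  // CSP frame-ancestors, when present, takes precedence over X-Frame-Options
  const csp = headers['content-security-policy'] || '';
  for (const rawDirective of csp.split(';')) {
    const [name, ...sources] = rawDirective.trim().split(/\s+/);
    if (name.toLowerCase() !== 'frame-ancestors') continue;
    if (sources.includes("'none'")) return false;
    if (sources.includes('*')) return true;
    if (sources.includes("'self'") && sameOrigin) return true;
    // Simplification: only exact origin matches, no wildcard hosts or bare schemes
    return sources.includes(toolOrigin);
  }

  // Fall back to X-Frame-Options: DENY blocks all framing, SAMEORIGIN blocks other origins
  const xfo = (headers['x-frame-options'] || '').trim().toUpperCase();
  if (xfo === 'DENY') return false;
  if (xfo === 'SAMEORIGIN') return sameOrigin;
  return true;
}
```

In Puppeteer, the object returned by response.headers() (for the response that page.goto resolves to) already uses lower-cased header names, so it can be passed in directly.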

The second approach captures the website page to the analysis tool's own server and then serves the captured copy from there. Because we now own the copy, we can modify it however we like. The copy is served by our own server, which does not send X-Frame-Options: SAMEORIGIN, so that restriction disappears and only the JavaScript frame-busting check remains. It can be handled by processing the captured page (for example, removing the frame-busting script or injecting our own JavaScript). However, this approach also has several shortcomings: it cannot capture SPA pages, pages that require the user to log in, pages restricted by settings the site owner has configured, and so on.
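For the capture approach, the frame-busting check can be neutralized on our side before the copy is served. Below is a naive sketch (the function name removeFrameBusting and the detection rule are assumptions, not the article's code) that drops inline script elements whose body assigns top.location or compares window.top with window.self. A regular expression is not an HTML parser, so a real implementation would be better off with one; the point is only to show where this processing step fits.

```javascript
// Naive sketch: remove inline <script> blocks that look like frame-busting code.
// A regex is not an HTML parser; this only handles plain, well-formed script tags.
function removeFrameBusting(html) {
  const scriptPattern = /<script\b[^>]*>([\s\S]*?)<\/script>/gi;
  // Heuristic: scripts that touch top.location or compare window.top with window.self
  const frameBuster = /\btop\.location\b|\bwindow\.top\s*!==?\s*window\.self\b/;
  return html.replace(scriptPattern, (whole, body) =>
    frameBuster.test(body) ? '' : whole
  );
}
```

Scripts that do not match the heuristic are left untouched, so the rest of the page keeps working.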

Both approaches also have to deal with a mix of HTTPS and HTTP resources. Browsers block an HTTPS page from loading active HTTP content such as scripts and iframes (so-called mixed content), so for the best compatibility the heat map analysis tool should be served over HTTP. Of course, this can be further optimized per site according to the protocol of each customer website.
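The HTTP/HTTPS conflict can be checked ahead of time. The sketch below (findBlockedResources is a hypothetical helper) lists which resource URLs would count as mixed content for a given hosting page. It is deliberately conservative: browsers treat some passive content such as images more leniently than scripts and iframes, and this helper does not make that distinction.

```javascript
// Hypothetical helper: given the URL of the page that hosts the content and the
// resource URLs it loads, return the ones a browser would treat as mixed content.
// Relative URLs are resolved against the hosting page's URL first.
function findBlockedResources(pageUrl, resourceUrls) {
  const page = new URL(pageUrl);
  // An HTTP page may load both HTTP and HTTPS resources
  if (page.protocol !== 'https:') return [];
  return resourceUrls.filter((src) => new URL(src, page).protocol === 'http:');
}
```

Passing the customer's page URL as one of the resources shows whether an HTTPS-hosted analysis tool could embed it in an iframe at all.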

How to improve the capture of website pages

Here we use Puppeteer to address the problems encountered when capturing website pages and to raise the success rate. The optimizations mainly target the following two kinds of pages:

1. SPA pages

SPA pages are mainstream today, but they are well known for being unfriendly to search engines. The usual page crawler is a simple one: it sends an HTTP GET request to the user's website (that is, to the user's web server). This approach has problems of its own. First, the request goes straight to the user's server, which may impose many restrictions on non-browser clients that have to be worked around. Second, the request returns only the raw content, so anything the browser renders through JavaScript is missing (once the page is embedded in an iframe, JavaScript execution makes up for this to some extent). Finally, if the page is an SPA, all we get is the template, and the heat map display is very poor. With Puppeteer, the process becomes:

Puppeteer launches a browser and opens the user's website --> the page renders --> the rendered result is returned. A simple implementation looks like this:

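const puppeteer = require('puppeteer');
// Open the page in a headless browser and return the HTML after rendering
const getHtml = async (url) => {
  const browser = await puppeteer.launch();
  try {
    const page = await browser.newPage();
    // 'networkidle0' waits until there are no network connections for 500 ms,
    // giving SPA pages time to fetch their data and render
    await page.goto(url, { waitUntil: 'networkidle0' });
    return await page.content();
  } finally {
    await browser.close(); // always release the browser process
  }
};

This way, the content we get is the rendered content, regardless of whether the page is rendered on the client or on the server.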

2. Pages that require login

Pages involving login actually come in several kinds:

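Case 1: The page can only be viewed after logging in; if you are not logged in, the site redirects to a login page (typical of admin and management systems).

For this type of page, what we need to do is simulate login, that is, have the browser log in. This requires the user to provide the username and password for the website, after which we follow this process:

Visit the user's website --> the site detects that we are not logged in and redirects to the login page --> Puppeteer drives the browser to log in automatically and is then redirected to the page that actually needs to be captured. The following code illustrates this: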

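const puppeteer = require('puppeteer');
// Log in on a site that redirects anonymous visitors to a login page,
// then return the HTML of the page that was originally requested.
// '#username', '#password' and '#btn_login' are placeholders for the site's login form.
const autoLogin = async (url, username, password) => {
  const browser = await puppeteer.launch();
  try {
    const page = await browser.newPage();
    // goto follows the redirect to the login page; wait for the form to appear
    await page.goto(url);
    await page.waitForSelector('#username');
    // Log in with the credentials provided by the user
    await page.type('#username', username);
    await page.type('#password', password);
    // Start waiting for the navigation before clicking so the redirect is not missed;
    // after a successful login the site must redirect back to the requested page
    await Promise.all([
      page.waitForNavigation(),
      page.click('#btn_login'),
    ]);
    return await page.content();
  } finally {
    await browser.close();
  }
};

Case 2: The page can be viewed whether or not you are logged in, but the content differs after login (typical of e-commerce sites and portals).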

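This case is simpler to handle and can be thought of as the following steps:

Launch a browser with Puppeteer and open the requested page --> click the login button --> enter the username and password and log in --> reload the page

The basic code is as follows: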

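const puppeteer = require('puppeteer');
// Log in on a site whose pages are public but show different content after login,
// then reload and return the logged-in version of the page.
// The selectors are placeholders for the site's login button, form fields and submit button.
const autoLoginV2 = async (url, username, password) => {
  const browser = await puppeteer.launch();
  try {
    const page = await browser.newPage();
    await page.goto(url);
    // Open the login dialog and wait for the form to become visible
    await page.click('#btn_show_login');
    await page.waitForSelector('#username', { visible: true });
    // Log in with the credentials provided by the user
    await page.type('#username', username);
    await page.type('#password', password);
    await page.click('#btn_login');
    // Assumption: the login form is hidden once login succeeds; adapt this wait to the site
    await page.waitForSelector('#username', { hidden: true });
    // Whether a reload is needed after login depends on the site
    await page.reload();
    return await page.content();
  } finally {
    await browser.close();
  }
};

Summary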

I'll write the summary tomorrow; I'm off work for today.

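Addendum (paying off yesterday's debt): although Puppeteer makes it easy to capture page content, it also has many limitations:

1. The captured content is the raw rendered HTML, so resource paths (CSS, images, JavaScript, and so on) may be relative paths that no longer resolve once the page is saved locally; they need special handling. (JavaScript needs no special handling and can even be removed, because the rendered structure is already complete.)

2. Capturing pages with Puppeteer performs somewhat worse than a plain HTTP GET, because of the extra rendering step.

3. The completeness of the page still cannot be guaranteed; Puppeteer only greatly improves the odds. The various wait methods on the page object can address this, but every website needs different handling, so the solution cannot be reused.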
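For limitation 1, one common fix is to rewrite resource paths into absolute URLs, using the captured page's URL as the base, before saving the HTML. The sketch below is a simplified, regex-based illustration (absolutizeResources and its stripScripts option are hypothetical names). It only handles quoted src and href attributes, not srcset, CSS url(...) values or a base tag, and an HTML parser would be more robust.

```javascript
// Simplified sketch: turn relative src/href values into absolute URLs so that a page
// saved on another server still loads its CSS, images and scripts from the original site.
// Optionally drop <script> elements, since the captured DOM is already rendered.
function absolutizeResources(html, pageUrl, { stripScripts = false } = {}) {
  let out = html;
  if (stripScripts) {
    out = out.replace(/<script\b[^>]*>[\s\S]*?<\/script>/gi, '');
  }
  // Matches src="..." or href='...'; the quote character is captured and reused
  return out.replace(/\b(src|href)=(["'])(.*?)\2/gi, (whole, attr, quote, value) => {
    // Leave empty values, fragments, data: URIs and javascript:/mailto: links unchanged
    if (value === '' || /^(#|data:|javascript:|mailto:)/i.test(value)) return whole;
    try {
      return `${attr}=${quote}${new URL(value, pageUrl).href}${quote}`;
    } catch {
      return whole; // not a valid URL; keep the original attribute
    }
  });
}
```

If the capture already runs inside Puppeteer, an alternative is to read the URLs in the page itself, because DOM properties such as img.src and link.href already return absolute URLs.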

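I believe that after reading this case you have mastered the method. For more, please follow other related articles on the PHP Chinese website!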
