58 lines of code extend Llama 3 to a 1-million-token context, and it works with any fine-tuned version

Llama 3, the reigning king of open source, ships with an original context window of only... 8k, which made me swallow the "really delicious" that was already on my lips.

Today, when 32k is the starting point and 100k is common, was this done deliberately to leave room for the open source community to contribute?

The open source community will certainly not miss this opportunity:

Now, with only 58 lines of code, any fine-tuned version of Llama 3 70B can be automatically extended to a 1048k (one million) token context.

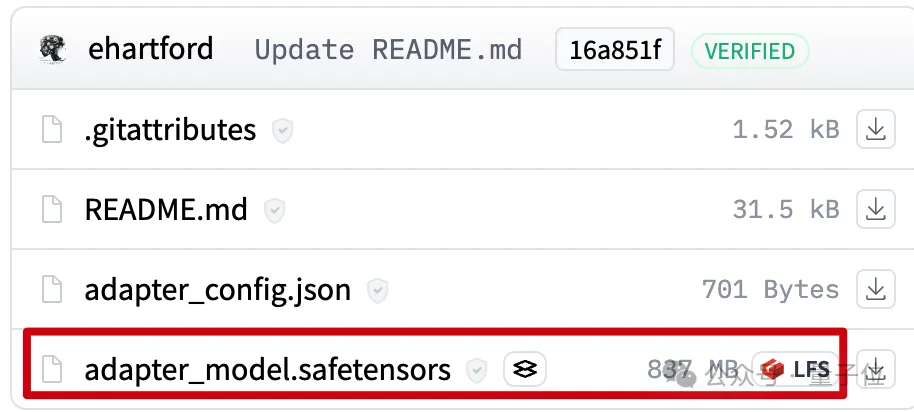

Behind the scenes is a LoRA extracted from a context-extended fine-tuned version of Llama 3 70B Instruct. The file is only 800 MB.

Next, using Mergekit, you can run it with other models of the same architecture or merge it directly into the model.

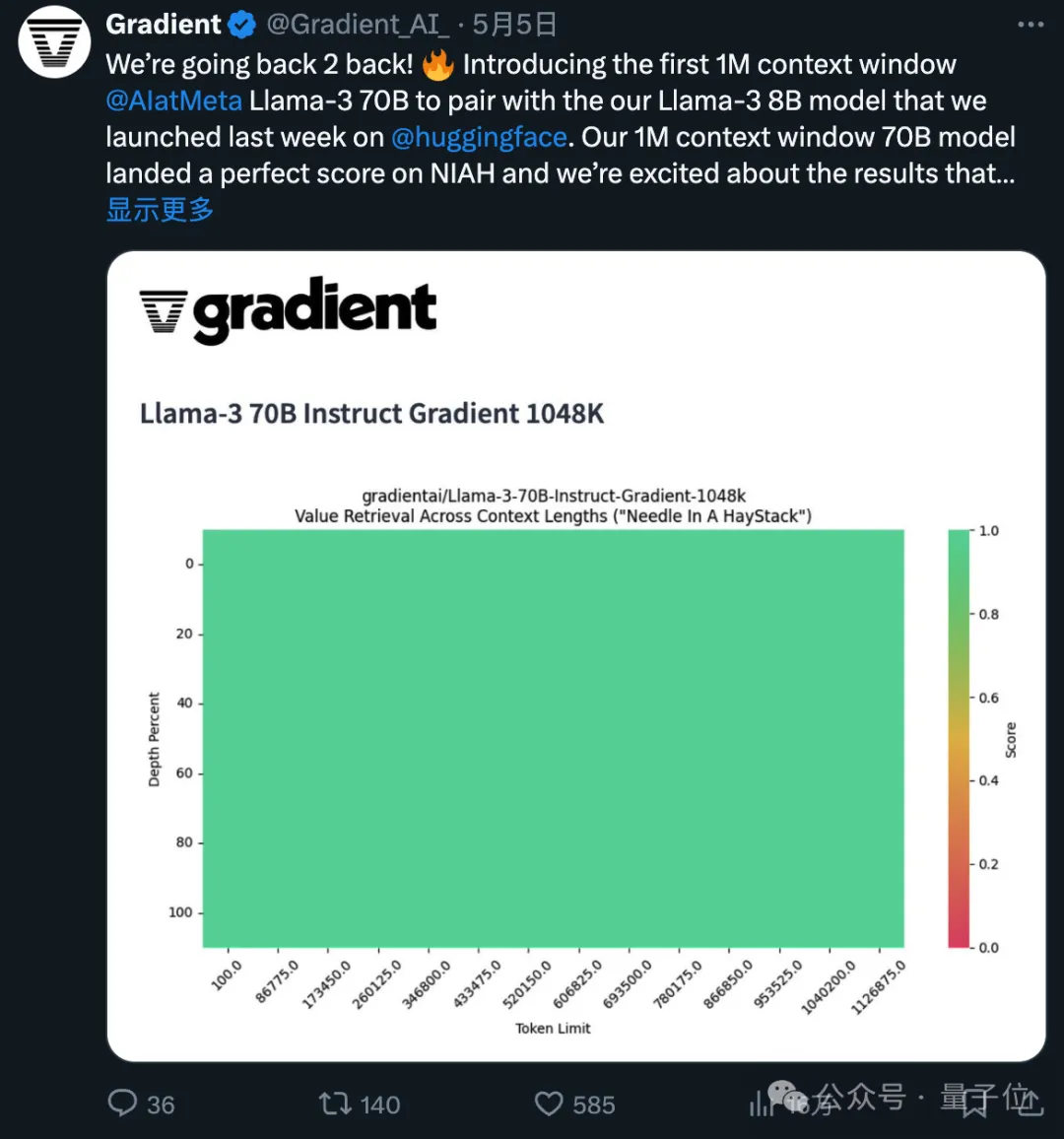

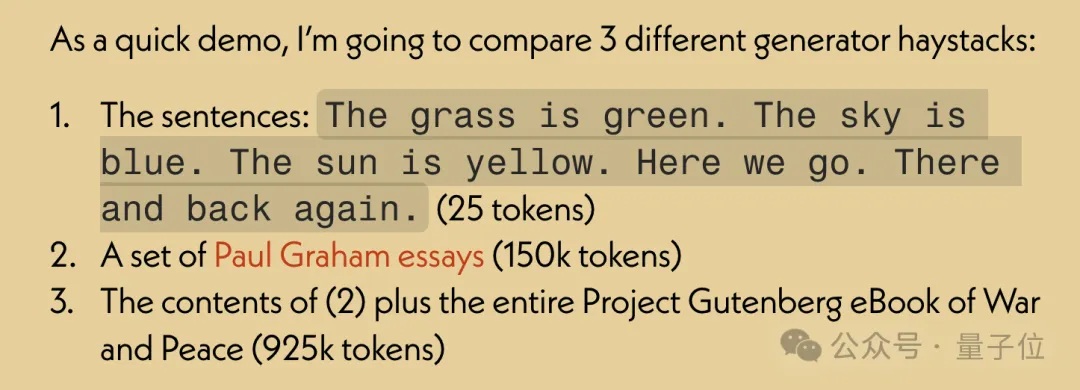

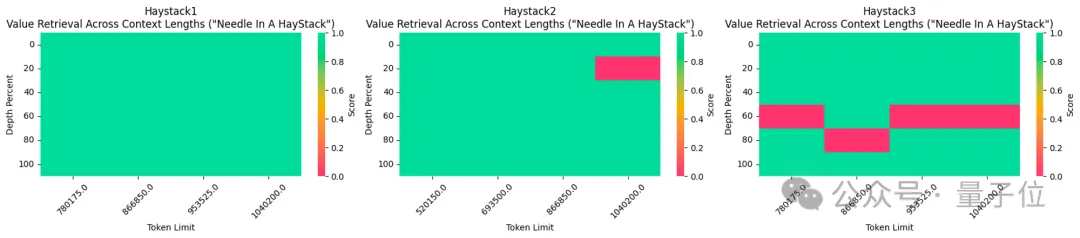

The 1048k-context fine-tuned version used here has just achieved an all-green (100% accuracy) score in the popular needle-in-a-haystack test.

It must be said that the speed of progress of open source is exponential.

How the 1048k-context LoRA was made

First, the 1048k-context fine-tuned Llama 3 model comes from Gradient AI, an enterprise AI solutions startup.

The corresponding LoRA comes from developer Eric Hartford, who extracted the parameter changes by comparing the fine-tuned model with the original version.

He first produced a 524k-context version, and then released the 1048k version.

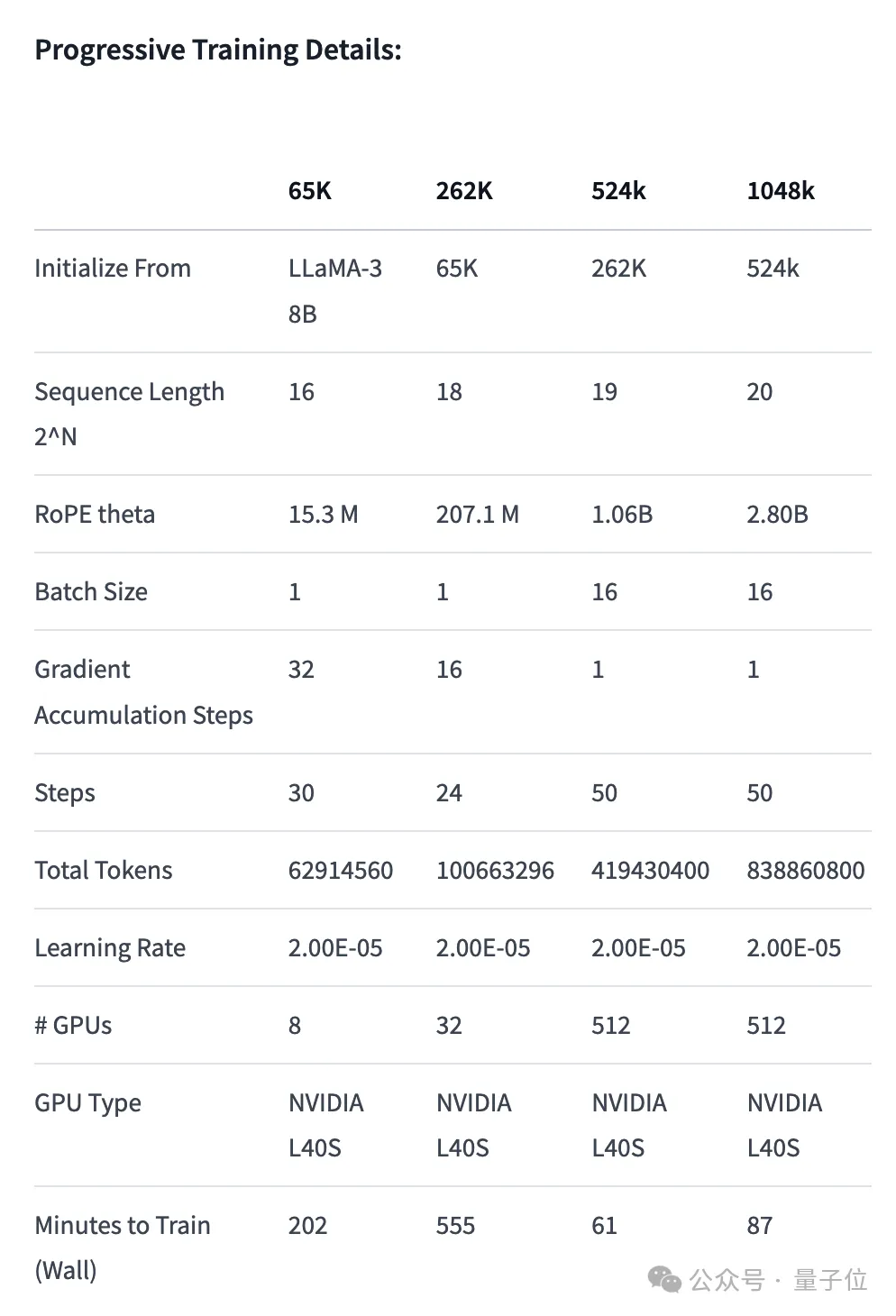

The Gradient team continued training from the original Llama 3 70B Instruct and obtained Llama-3-70B-Instruct-Gradient-1048k.

The specific method is as follows:

- Adjust position encoding: initialize an optimal schedule for RoPE theta with NTK-aware interpolation, then optimize it, to prevent the loss of high-frequency information after the length is extended

- Progressive training: extend the model's context length with the Blockwise RingAttention method proposed by Pieter Abbeel's team at UC Berkeley
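To make the position-encoding step more concrete, here is a minimal sketch of the commonly cited NTK-aware rule for rescaling the RoPE base (theta). The base of 500000, the head dimension of 128, and the 8k starting window are Llama 3 70B's published settings; the function names are made up for illustration, and the resulting theta is only an example, since the article does not state the values Gradient actually used or how they were tuned afterwards.

```python
import math


def ntk_scaled_theta(base_theta: float, scale: float, head_dim: int) -> float:
    """NTK-aware rule: grow the RoPE base so the slowest frequency stretches by `scale`."""
    return base_theta * scale ** (head_dim / (head_dim - 2))


def rope_wavelengths(theta: float, head_dim: int) -> list[float]:
    """Wavelength in tokens of each rotary frequency pair (fastest first)."""
    return [2 * math.pi * theta ** (2 * i / head_dim) for i in range(head_dim // 2)]


# Illustrative numbers only: 8k -> 1048k is a 128x extension.
scale = 1_048_576 / 8_192
new_theta = ntk_scaled_theta(500_000.0, scale, head_dim=128)

old = rope_wavelengths(500_000.0, 128)
new = rope_wavelengths(new_theta, 128)
print(f"scaled theta: {new_theta:.3e}")
print(f"fastest wavelength ratio: {new[0] / old[0]:.3f}")
print(f"slowest wavelength ratio: {new[-1] / old[-1]:.3f}")
```

The fastest rotary frequency keeps its wavelength while the slowest one is stretched by the full extension factor, which is how this kind of scaling avoids squeezing the high-frequency components.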

It is worth noting that the team layered parallelization on top of Ring Attention through a custom network topology, making better use of large GPU clusters and coping with the network bottleneck caused by transferring many KV blocks between devices.

Ultimately, the training speed of the model was increased by 33 times.
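The blockwise idea underlying Ring Attention can be sketched on a single machine. In the toy version below, one query visits key/value blocks one at a time, as if each block had just arrived from a neighbouring device, and keeps a running softmax so that the full score row never has to be held at once. This is a simplified illustration under those assumptions, not Gradient's training code; all function names are hypothetical, and the real method also distributes the queries and overlaps communication with computation.

```python
import math
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attention_reference(q, keys, values):
    """Plain softmax attention for one query over all keys at once."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [dot(q, k) * scale for k in keys]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    return [sum(w * v[j] for w, v in zip(weights, values)) / total
            for j in range(len(values[0]))]


def attention_blockwise(q, kv_blocks):
    """Same result, but visiting one (keys, values) block at a time."""
    scale = 1.0 / math.sqrt(len(q))
    running_max = -math.inf
    denom = 0.0
    numer = None
    for keys, values in kv_blocks:
        if numer is None:
            numer = [0.0] * len(values[0])
        scores = [dot(q, k) * scale for k in keys]
        new_max = max(running_max, max(scores))
        # Rescale what was accumulated under the previous maximum.
        correction = math.exp(running_max - new_max)
        weights = [math.exp(s - new_max) for s in scores]
        denom = denom * correction + sum(weights)
        numer = [n * correction + sum(w * v[j] for w, v in zip(weights, values))
                 for j, n in enumerate(numer)]
        running_max = new_max
    return [n / denom for n in numer]


rng = random.Random(0)
q = [rng.uniform(-1, 1) for _ in range(4)]
keys = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(12)]
values = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(12)]
blocks = [(keys[i:i + 4], values[i:i + 4]) for i in range(0, 12, 4)]

full = attention_reference(q, keys, values)
ring = attention_blockwise(q, blocks)
print(max(abs(a - b) for a, b in zip(full, ring)))
```

Because each block only needs the running maximum, denominator, and weighted sum, the memory per device stays bounded by the block size rather than by the full context length.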

In the long-text retrieval evaluation, errors tend to occur only in the most difficult version, when the "needle" is hidden in the middle of the text.
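For readers unfamiliar with the needle-in-a-haystack setup, the sketch below shows the general idea of placing a "needle" sentence at a chosen depth inside filler text and checking whether a reply contains the expected fact. It is a generic illustration, not the evaluation harness Gradient used; the function names, the filler text, and the needle are all made up.

```python
def build_haystack(filler_sentences, needle, depth):
    """Insert `needle` at fractional `depth` (0.0 = start, 1.0 = end) of the filler."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    position = round(depth * len(filler_sentences))
    parts = filler_sentences[:position] + [needle] + filler_sentences[position:]
    return " ".join(parts)


def contains_answer(reply, expected):
    """Very loose scoring: does the model's reply mention the expected fact?"""
    return expected.lower() in reply.lower()


filler = [f"Filler sentence number {i}." for i in range(10)]
needle = "The secret code is 7421."

# Sweep the needle from the start to the end of the context.
for depth in (0.0, 0.5, 1.0):
    prompt = build_haystack(filler, needle, depth)
    print(depth, prompt.index(needle))
```

A full test repeats this sweep over many context lengths and depths, which is what produces the familiar green/red grid.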

Once the context-extended fine-tuned model is available, the open source tool Mergekit is used to compare it with the base model and extract the parameter differences as a LoRA.

Also using Mergekit, you can merge the extracted LoRA into other models with the same architecture.
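The extract-then-merge idea can be illustrated on a single weight matrix. The sketch below approximates the difference between a fine-tuned and a base matrix with a truncated SVD, then adds that low-rank update to another matrix of the same shape. It assumes NumPy and uses toy sizes and hypothetical function names; it is not Mergekit's implementation or the 58-line script, and it leaves out details such as LoRA's scaling factor and per-layer handling.

```python
import numpy as np


def extract_lora(w_finetuned, w_base, rank):
    """Approximate (w_finetuned - w_base) as b @ a using a truncated SVD."""
    delta = w_finetuned - w_base
    u, s, vt = np.linalg.svd(delta, full_matrices=False)
    b = u[:, :rank] * s[:rank]  # shape (out_features, rank)
    a = vt[:rank, :]            # shape (rank, in_features)
    return b, a


def merge_lora(w_target, b, a):
    """Apply the low-rank update to any weight matrix of the same shape."""
    return w_target + b @ a


rng = np.random.default_rng(0)
base = rng.normal(size=(16, 12))
# Pretend fine-tuning changed the weights by an exactly rank-2 update.
true_delta = rng.normal(size=(16, 2)) @ rng.normal(size=(2, 12))
finetuned = base + true_delta

b, a = extract_lora(finetuned, base, rank=2)
print("reconstruction error:", np.abs(b @ a - true_delta).max())

# A different fine-tune of the same architecture receives the same update.
other_finetune = base + 0.01 * rng.normal(size=(16, 12))
merged = merge_lora(other_finetune, b, a)
print("merged shape:", merged.shape)
```

In a real model the weight difference is usually not exactly low rank, so the chosen rank trades adapter size against how faithfully the fine-tune is reproduced.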

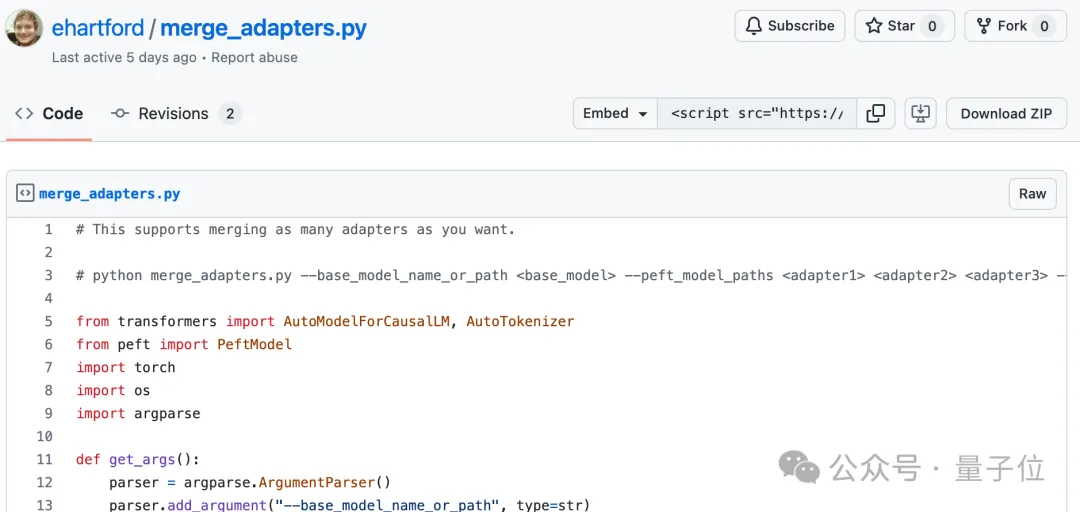

The merge code is also open sourced by Eric Hartford on GitHub, with only 58 lines.

It is unclear whether this LoRA merge will work with Llama 3 versions that have been fine-tuned on Chinese.

However, it can be seen that the Chinese developer community has paid attention to this development.

524k version LoRA: https://huggingface.co/cognitivecomputations/Llama-3-70B-Gradient-524k-adapter

1048k version LoRA: https://huggingface.co/cognitivecomputations/Llama-3-70B-Gradient-1048k-adapter

Merge code: https://gist.github.com/ehartford/731e3f7079db234fa1b79a01e09859ac
