Merge models, let them evolve, and reach SOTA directly! A Transformer author's new startup work goes viral


Can you take ready-made models from Hugging Face, "piece them together," and directly combine them into powerful new models?

The Japanese LLM company Sakana.ai, founded by one of the "Transformer Eight," has come up with a creative trick: evolving merged models.

This method can not only generate new foundation models automatically, but the resulting performance is also far from bad:

Their 7-billion-parameter Japanese math LLM achieved state-of-the-art results on relevant benchmarks, surpassing earlier models such as the 70-billion-parameter Llama-2.

The most important thing is that arriving at such a model does not require any gradient training, so the computing resources required are greatly reduced.

NVIDIA scientist Jim Fan praised after reading it:

This is one of the most imaginative papers I have read recently.

Merge and evolve: automatically generating new foundation models

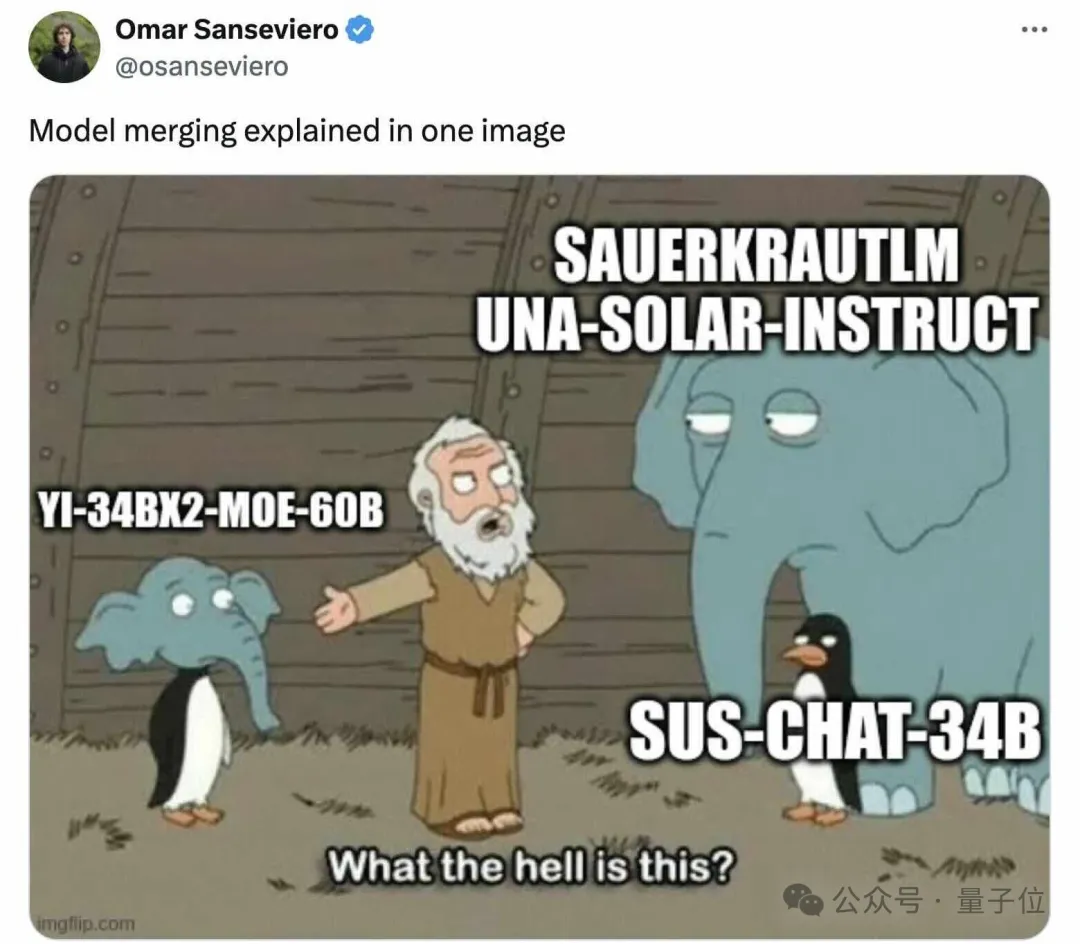

Most of the best-performing models on open-source LLM leaderboards are no longer "original" models such as LLaMA or Mistral, but models produced by fine-tuning or merging them. From this we can see:

A new trend has emerged.

Sakana.ai explains that open-source foundation models can easily be extended and fine-tuned in hundreds of different directions, producing new models that perform well in new domains.

Among these, model merging shows great prospects.

However, it may be a kind of "black magic" that relies heavily on intuition and professional knowledge.

Therefore, we need a more systematic approach.

Inspired by natural selection, Sakana.ai turned to evolutionary algorithms, introduced the concept of "Evolutionary Model Merge," and proposed a general method for discovering the best combinations of models.

This method combines two different ideas:

(1) merging models in the data flow space (layers), and (2) merging models in the parameter space (weights).

Specifically, the first data flow space method uses evolution to discover the best combination of different model layers to form a new model.

In the past, the community relied on intuition to determine how and which layers of a model can be combined with layers of another model.

In fact, Sakana.ai points out, this problem has a combinatorially huge search space, which makes it well suited to optimization algorithms such as evolutionary algorithms.

The operation examples are as follows:
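As a rough illustration of the data flow space idea, the toy sketch below treats each "layer" as a plain function and evolves a path through the pooled layers of two models. Everything in it is an assumption made for illustration: the names `model_a`, `model_b`, `run_path`, and `evolve_path` are hypothetical, the layers are integer functions rather than Transformer blocks, and the simple mutate-and-keep-the-best loop is a stand-in, not the search procedure from the paper.

```python
import random

# Two hypothetical "models", each a list of layers (plain functions here).
model_a = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3]
model_b = [lambda x: x * x, lambda x: x + 10, lambda x: x // 2]
layer_pool = model_a + model_b  # indices 0-2 come from A, 3-5 from B


def run_path(path, x):
    """Send an input through the layers named by `path`, in order."""
    for idx in path:
        x = layer_pool[idx](x)
    return x


def fitness(path, examples):
    """Negative total error on (input, target) pairs; 0 is a perfect score."""
    return -sum(abs(run_path(path, x) - y) for x, y in examples)


def mutate(path, rng):
    """Replace one randomly chosen position with a random layer from the pool."""
    child = list(path)
    child[rng.randrange(len(child))] = rng.randrange(len(layer_pool))
    return child


def evolve_path(examples, path_len=3, generations=200, seed=0):
    """Toy elitist search: keep a mutated path whenever it is not worse."""
    rng = random.Random(seed)
    best = [rng.randrange(len(layer_pool)) for _ in range(path_len)]
    best_fit = fitness(best, examples)
    for _ in range(generations):
        child = mutate(best, rng)
        child_fit = fitness(child, examples)
        if child_fit >= best_fit:
            best, best_fit = child, child_fit
    return best, best_fit


# Targets produced by a hidden mixed path: A's layer 0, then B's layers 0 and 1.
target_path = [0, 3, 4]
examples = [(x, run_path(target_path, x)) for x in range(5)]
best_path, best_fit = evolve_path(examples)
print(best_path, best_fit)
```

The point of the sketch is only that a candidate is a sequence of layer indices drawn from several models, and that a fitness score on held-out examples, not a gradient, decides which candidates survive.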

As for the second parameter space method, multiple model weights are mixed to form a new model.

There are countless ways to do this; in principle, each layer can use its own mixing ratio, or the ratios can be even more fine-grained.

And here, using evolutionary methods can effectively find more novel hybrid strategies.

The following is an example of mixing the weights of two different models to obtain a new model:
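A minimal sketch of per-layer weight mixing, assuming two models with identically shaped layers stored as NumPy arrays. The function names `merge_weights` and `evolve_ratios`, the toy weights, and the toy fitness are all hypothetical; plain linear interpolation and a simple elitist mutation loop are used here as stand-ins for brevity and should not be read as the merging or evolutionary method used in the paper.

```python
import numpy as np


def merge_weights(weights_a, weights_b, ratios):
    """Per-layer linear interpolation: ratio r keeps r of A and (1 - r) of B."""
    return [r * wa + (1.0 - r) * wb
            for wa, wb, r in zip(weights_a, weights_b, ratios)]


def evolve_ratios(weights_a, weights_b, fitness,
                  generations=50, population=16, sigma=0.2, seed=0):
    """Toy evolutionary search over one mixing ratio per layer (no gradients)."""
    rng = np.random.default_rng(seed)
    best = np.full(len(weights_a), 0.5)  # start from an even 50/50 mix
    best_score = fitness(merge_weights(weights_a, weights_b, best))
    for _ in range(generations):
        # Sample a population of perturbed ratio vectors, kept inside [0, 1].
        noise = sigma * rng.standard_normal((population, best.size))
        candidates = np.clip(best + noise, 0.0, 1.0)
        for cand in candidates:
            score = fitness(merge_weights(weights_a, weights_b, cand))
            if score > best_score:  # elitism: only strictly better survives
                best, best_score = cand, score
    return best, best_score


# Hypothetical two-layer "models": A is good at layer 0, B is good at layer 1.
weights_a = [np.ones(3), np.zeros(3)]
weights_b = [np.zeros(3), np.ones(3)]
target = [np.ones(3), np.ones(3)]


def toy_fitness(merged):
    """Higher is better: negative squared distance to the target weights."""
    return -sum(float(np.sum((m - t) ** 2)) for m, t in zip(merged, target))


ratios, score = evolve_ratios(weights_a, weights_b, toy_fitness)
print(ratios, score)
```

In a real setting the fitness would be a benchmark score of the merged model rather than a distance to known target weights; the toy target only keeps the example self-contained.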

Combining the two methods above gives the full approach:

The authors say they hope to combine models from distant domains, such as math and non-English languages or vision and non-English languages, into new combinations that have not been explored before.

The result is really a bit surprising.

New model easily wins SOTA

Using the evolutionary merging method above, the team obtained 3 foundation models:

- Large language model EvoLLM-JP

It is formed by merging the Japanese LLM Shisa-Gamma with the math LLMs WizardMath and Abel. It is good at solving math problems in Japanese and was evolved for 100-150 generations.

- Visual language model EvoVLM-JP

- Image generation model EvoSDXL-JP

1. EvoLLM-JP

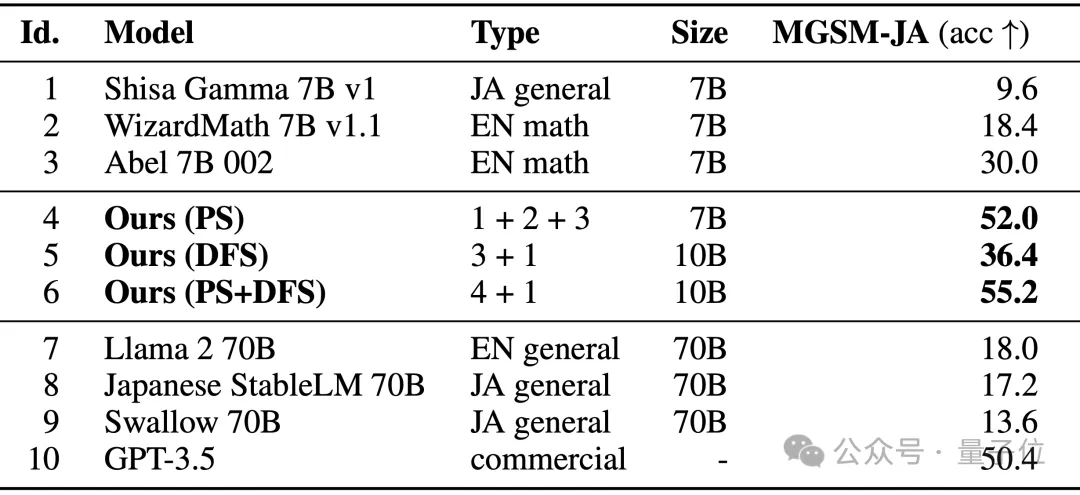

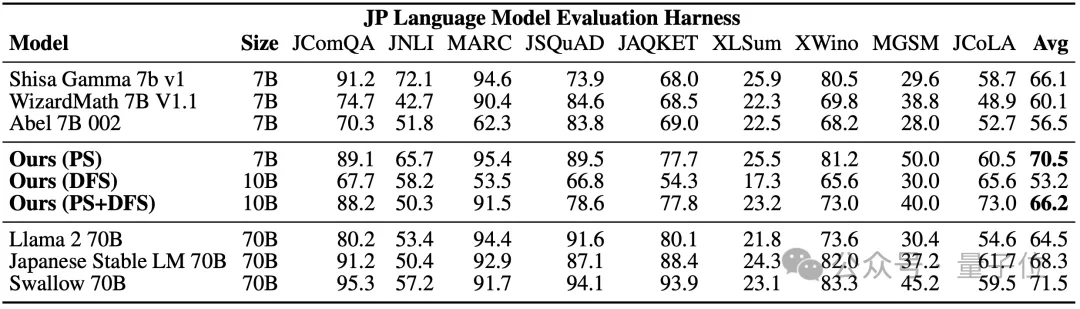

It achieved the following results on the Japanese evaluation set of MGSM, a multilingual version of the GSM8K data set:

Among them, Model 4 is optimized only in the parameter space, and Model 6 is the result of further optimizing Model 4 in the data flow space.

On the Japanese lm-evaluation-harness benchmark, which evaluates both data capabilities and general Japanese language skills, EvoLLM-JP achieved the highest average score, 70.5 across 9 tasks, with only 7 billion parameters, beating models such as the 70-billion-parameter Llama-2.

The team says EvoLLM-JP is good enough to serve as a general-purpose Japanese model and can handle some interesting examples:

For example, math problems that require knowledge of specific Japanese culture, or telling Japanese jokes in Kansai dialect.

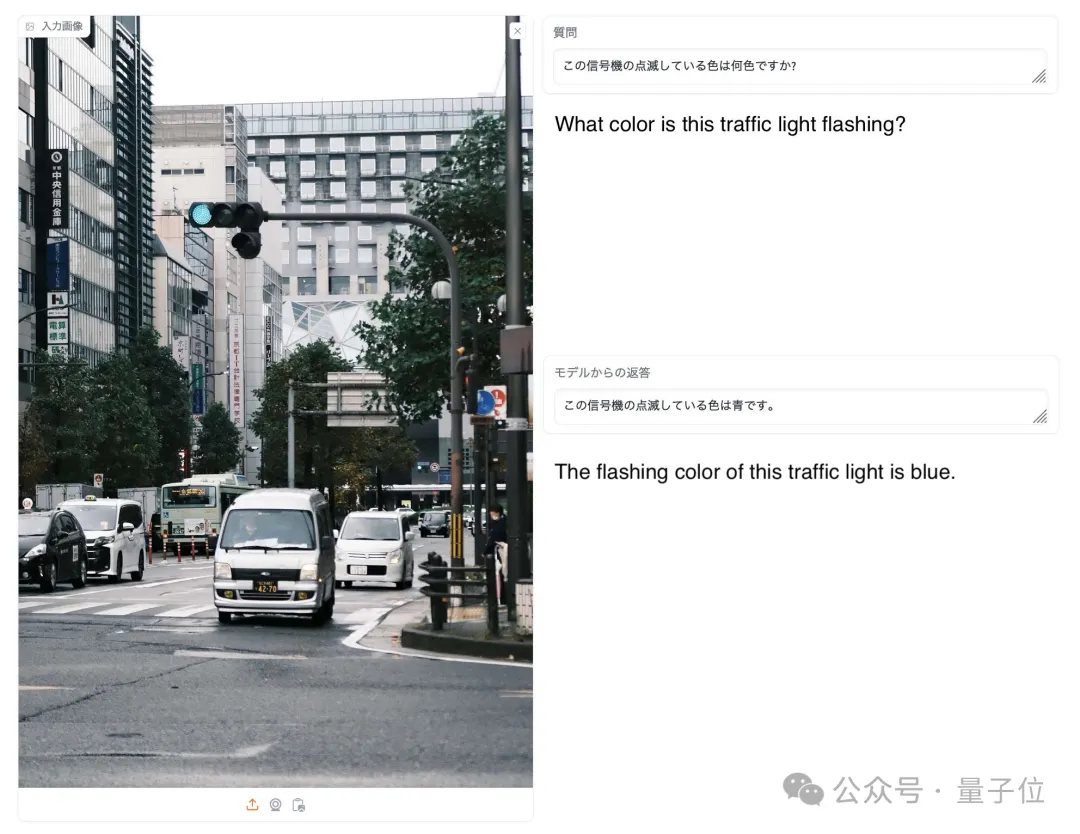

2. EvoVLM-JP

On the following two image question-answering benchmarks, a higher score means the model's Japanese answers describe the image more accurately.

The result: it is better not only than LLaVa-1.6-Mistral-7B, the English VLM it is based on, but also than existing Japanese VLMs.

As shown in the picture below, when asked what the color of the signal light in the picture is, only EvoVLM-JP answered correctly: blue.

3. EvoSDXL-JP

This Japanese-capable SDXL model needs only 4 diffusion steps for inference, so generation is quite fast.

Specific benchmark scores have not been released yet, but the team says the results are "quite promising."

Here are some examples:

The prompts include: miso ラーメン (ramen), highest-quality ukiyo-e, Katsushika Hokusai, Edo period.

For the above 3 new models, the team pointed out:

In principle, we can use gradient-based backpropagation to further improve the performance of these models.

But we do not, because the goal right now is to show that even without backpropagation, we can still obtain foundation models advanced enough to challenge the current "expensive paradigm."

Netizens have been liking the work one after another.

Jim Fan also added:

In the field of foundation models, the community is currently focused almost entirely on making models learn and pays little attention to search, yet the latter has huge potential in both the training stage (that is, the evolutionary algorithm proposed in this paper) and the inference stage.

△Liked by Musk

So, as one netizen put it:

Are we now in the Cambrian explosion era of models?

Paper address: https://arxiv.org/abs/2403.13187
