Introduction to the three core components of Hadoop

The three core components of Hadoop are the Hadoop Distributed File System (HDFS), MapReduce, and Yet Another Resource Negotiator (YARN).


Hadoop Distributed File System (HDFS):

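- HDFS is Hadoop’s distributed file system for storing large-scale data sets. It splits each large file into fixed-size blocks (128 MB by default in Hadoop 2 and later) and distributes those blocks across the nodes of the cluster. It keeps several replicas of each block (three by default) on different DataNodes, while a NameNode tracks the file-system metadata: which blocks make up each file and where they are stored. Because data is replicated and read in large sequential blocks, HDFS provides high-capacity, fault-tolerant, high-throughput storage and is the foundation of the Hadoop distributed computing framework.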
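The block-splitting and replication idea can be sketched in a few lines of Python. This is a minimal, single-process sketch under simplifying assumptions. The function `plan_blocks`, the class `BlockPlacement`, the node names `dn1` to `dn4`, and the round-robin replica placement are hypothetical illustrations, not HDFS code. Real HDFS chooses replica locations with a rack-aware policy managed by the NameNode.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class BlockPlacement:
    block_id: int
    offset: int        # byte offset of the block within the original file
    length: int        # block length in bytes (the last block may be shorter)
    nodes: List[str]   # hypothetical node names holding a replica of this block


def plan_blocks(file_size: int, block_size: int, nodes: List[str],
                replication: int) -> List[BlockPlacement]:
    """Split a file into fixed-size blocks and assign each block to replica nodes.

    Simplification (assumption): replicas are chosen round-robin; real HDFS
    uses a rack-aware placement policy decided by the NameNode.
    """
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    if not nodes or not 1 <= replication <= len(nodes):
        raise ValueError("replication must be between 1 and the number of nodes")
    placements = []
    offset, block_id = 0, 0
    while offset < file_size:
        length = min(block_size, file_size - offset)
        start = block_id % len(nodes)  # rotate the first replica across nodes
        replicas = [nodes[(start + i) % len(nodes)] for i in range(replication)]
        placements.append(BlockPlacement(block_id, offset, length, replicas))
        offset += length
        block_id += 1
    return placements


if __name__ == "__main__":
    MB = 1024 * 1024
    datanodes = ["dn1", "dn2", "dn3", "dn4"]
    # 300 MB file, 128 MB blocks, 3 replicas per block
    for b in plan_blocks(300 * MB, 128 * MB, datanodes, replication=3):
        print(b.block_id, b.offset // MB, b.length // MB, b.nodes)
```

For a 300 MB file with 128 MB blocks, the sketch produces three blocks of 128, 128, and 44 MB, each stored on three distinct nodes, so losing any single node still leaves two copies of every block.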


MapReduce:

- MapReduce is Hadoop's distributed computing framework for processing large data sets in parallel. Its programming model borrows the map and reduce operations from functional programming and splits a job into two stages. In the Map stage, the input is divided into splits, and each split is processed by an independent map task that emits intermediate key-value pairs. The framework then shuffles and sorts these pairs so that all values for the same key reach the same reduce task. In the Reduce stage, those values are combined into the final output. MapReduce provides fault tolerance (failed tasks are re-executed), scalability, and parallel processing.
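The Map, shuffle/sort, and Reduce stages can be illustrated with the classic word-count example, simulated in a single Python process. The helper names `map_phase`, `shuffle`, `reduce_phase`, and `run_job` are hypothetical. An actual Hadoop job would implement `Mapper` and `Reducer` classes against the Java `org.apache.hadoop.mapreduce` API and run distributed across the cluster; this sketch only mirrors the data flow.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(split: str) -> Iterator[Tuple[str, int]]:
    """Map: emit (word, 1) for every word in one input split."""
    for word in split.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle/sort: group intermediate values by key, with keys in sorted order."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(sorted(groups.items()))


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reduce: combine all values for one key into a final result."""
    return key, sum(values)


def run_job(splits: List[str]) -> Dict[str, int]:
    # On a cluster, each split would be handled by an independent map task
    intermediate = [pair for split in splits for pair in map_phase(split)]
    grouped = shuffle(intermediate)
    return dict(reduce_phase(k, v) for k, v in grouped.items())


if __name__ == "__main__":
    splits = ["Hadoop stores data", "Hadoop processes data in parallel"]
    print(run_job(splits))
```

Because each split is mapped independently, the map calls could run on different nodes at the same time; only the shuffle step has to move data between them.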


Yet Another Resource Negotiator (YARN):

- YARN is Hadoop's resource management layer (introduced in Hadoop 2), responsible for scheduling and managing resources across the cluster. A central ResourceManager allocates computing resources to multiple applications sharing the cluster, which improves resource utilization, while a NodeManager on each node launches and monitors the work assigned to that node. Resources are granted as containers, each a bundle of memory and CPU cores on a particular node. YARN gives each application the containers it needs and monitors the running status of every application.
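To make the container idea concrete, the toy model below has a central manager grant containers from per-node memory and vCPU capacity. Everything here is an assumption for illustration: `ToyResourceManager`, `Node`, `Container`, and the first-fit placement are hypothetical and much simpler than YARN's real schedulers, such as the CapacityScheduler and FairScheduler, which use queues and sharing policies.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Node:
    name: str
    free_mem_mb: int
    free_vcores: int


@dataclass
class Container:
    app_id: str
    node: str
    mem_mb: int
    vcores: int


@dataclass
class ToyResourceManager:
    """Hypothetical, simplified stand-in for YARN's ResourceManager."""
    nodes: List[Node]
    containers: List[Container] = field(default_factory=list)

    def allocate(self, app_id: str, mem_mb: int, vcores: int) -> Optional[Container]:
        """Grant one container on the first node with enough free resources (first-fit)."""
        for node in self.nodes:
            if node.free_mem_mb >= mem_mb and node.free_vcores >= vcores:
                node.free_mem_mb -= mem_mb
                node.free_vcores -= vcores
                container = Container(app_id, node.name, mem_mb, vcores)
                self.containers.append(container)
                return container
        return None  # no capacity left; a real scheduler would queue the request

    def release(self, container: Container) -> None:
        """Return a finished container's resources to its node."""
        for node in self.nodes:
            if node.name == container.node:
                node.free_mem_mb += container.mem_mb
                node.free_vcores += container.vcores
        self.containers.remove(container)

    def usage_by_app(self) -> Dict[str, int]:
        """Memory (MB) currently held by each application."""
        usage: Dict[str, int] = {}
        for c in self.containers:
            usage[c.app_id] = usage.get(c.app_id, 0) + c.mem_mb
        return usage


if __name__ == "__main__":
    rm = ToyResourceManager([Node("nm1", 8192, 4), Node("nm2", 4096, 2)])
    first = rm.allocate("app-1", 4096, 2)
    rm.allocate("app-2", 4096, 2)
    rm.allocate("app-2", 4096, 2)
    print(rm.usage_by_app())
    rm.release(first)  # app-1 finishes, freeing capacity on its node
    print(rm.allocate("app-3", 1024, 1))
```

Tracking capacity per node, rather than as one cluster-wide pool, reflects that each container must fit on a single machine, which is why a request can fail even when the cluster as a whole still has free memory.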

These three components together form the core of the Hadoop distributed computing framework and make Hadoop well suited to offline (batch) analysis of large data sets. In cloud computing, Hadoop is often combined with big data and virtualization technologies to provide strong support for large-scale data processing.

The above is the detailed content of Introduction to the three core components of hadoop. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1658

1658

14

14

1415

1415

52

52

1309

1309

25

25

1257

1257

29

29

1231

1231

24

24

Java Errors: Hadoop Errors, How to Handle and Avoid

Jun 24, 2023 pm 01:06 PM

Java Errors: Hadoop Errors, How to Handle and Avoid

Jun 24, 2023 pm 01:06 PM

Java Errors: Hadoop Errors, How to Handle and Avoid When using Hadoop to process big data, you often encounter some Java exception errors, which may affect the execution of tasks and cause data processing to fail. This article will introduce some common Hadoop errors and provide ways to deal with and avoid them. Java.lang.OutOfMemoryErrorOutOfMemoryError is an error caused by insufficient memory of the Java virtual machine. When Hadoop is

New work by Yan Shuicheng/Cheng Mingming! DiT training, the core component of Sora, is accelerated by 10 times, and Masked Diffusion Transformer V2 is open source

Mar 13, 2024 pm 05:58 PM

New work by Yan Shuicheng/Cheng Mingming! DiT training, the core component of Sora, is accelerated by 10 times, and Masked Diffusion Transformer V2 is open source

Mar 13, 2024 pm 05:58 PM

As one of Sora's compelling core technologies, DiT utilizes DiffusionTransformer to extend the generative model to a larger scale, thereby achieving excellent image generation effects. However, larger model sizes cause training costs to skyrocket. The research team of Yan Shuicheng and Cheng Mingming from SeaAILab, Nankai University, and Kunlun Wanwei 2050 Research Institute proposed a new model called MaskedDiffusionTransformer at the ICCV2023 conference. This model uses mask modeling technology to speed up the training of DiffusionTransfomer by learning semantic representation information, and has achieved SoTA results in the field of image generation. this one

Using Hadoop and HBase in Beego for big data storage and querying

Jun 22, 2023 am 10:21 AM

Using Hadoop and HBase in Beego for big data storage and querying

Jun 22, 2023 am 10:21 AM

With the advent of the big data era, data processing and storage have become more and more important, and how to efficiently manage and analyze large amounts of data has become a challenge for enterprises. Hadoop and HBase, two projects of the Apache Foundation, provide a solution for big data storage and analysis. This article will introduce how to use Hadoop and HBase in Beego for big data storage and query. 1. Introduction to Hadoop and HBase Hadoop is an open source distributed storage and computing system that can

How to use PHP and Hadoop for big data processing

Jun 19, 2023 pm 02:24 PM

How to use PHP and Hadoop for big data processing

Jun 19, 2023 pm 02:24 PM

As the amount of data continues to increase, traditional data processing methods can no longer handle the challenges brought by the big data era. Hadoop is an open source distributed computing framework that solves the performance bottleneck problem caused by single-node servers in big data processing through distributed storage and processing of large amounts of data. PHP is a scripting language that is widely used in web development and has the advantages of rapid development and easy maintenance. This article will introduce how to use PHP and Hadoop for big data processing. What is HadoopHadoop is

Explore the application of Java in the field of big data: understanding of Hadoop, Spark, Kafka and other technology stacks

Dec 26, 2023 pm 02:57 PM

Explore the application of Java in the field of big data: understanding of Hadoop, Spark, Kafka and other technology stacks

Dec 26, 2023 pm 02:57 PM

Java big data technology stack: Understand the application of Java in the field of big data, such as Hadoop, Spark, Kafka, etc. As the amount of data continues to increase, big data technology has become a hot topic in today's Internet era. In the field of big data, we often hear the names of Hadoop, Spark, Kafka and other technologies. These technologies play a vital role, and Java, as a widely used programming language, also plays a huge role in the field of big data. This article will focus on the application of Java in large

Comprehensive analysis of the core components and functions of the Java technology platform

Jan 09, 2024 pm 08:01 PM

Comprehensive analysis of the core components and functions of the Java technology platform

Jan 09, 2024 pm 08:01 PM

An in-depth analysis of the core components and functions of the Java technology platform. Java technology is widely used in many fields and has become a mainstream programming language and development platform. The Java technology platform consists of a series of core components and functions, which provide developers with a wealth of tools and resources, making Java development more efficient and convenient. This article will provide an in-depth analysis of the core components and functions of the Java technology platform, and explore its importance and application scenarios in software development. First, the Java Virtual Machine (JVM) is Java

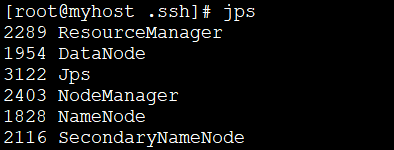

How to install Hadoop in linux

May 18, 2023 pm 08:19 PM

How to install Hadoop in linux

May 18, 2023 pm 08:19 PM

1: Install JDK1. Execute the following command to download the JDK1.8 installation package. wget--no-check-certificatehttps://repo.huaweicloud.com/java/jdk/8u151-b12/jdk-8u151-linux-x64.tar.gz2. Execute the following command to decompress the downloaded JDK1.8 installation package. tar-zxvfjdk-8u151-linux-x64.tar.gz3. Move and rename the JDK package. mvjdk1.8.0_151//usr/java84. Configure Java environment variables. echo'

Data processing engines in PHP (Spark, Hadoop, etc.)

Jun 23, 2023 am 09:43 AM

Data processing engines in PHP (Spark, Hadoop, etc.)

Jun 23, 2023 am 09:43 AM

In the current Internet era, the processing of massive data is a problem that every enterprise and institution needs to face. As a widely used programming language, PHP also needs to keep up with the times in data processing. In order to process massive data more efficiently, PHP development has introduced some big data processing tools, such as Spark and Hadoop. Spark is an open source data processing engine that can be used for distributed processing of large data sets. The biggest feature of Spark is its fast data processing speed and efficient data storage.