What to do when Chrome uses too much memory: how to fix high memory usage in Chrome

PHP editor Youzi has found that many users run into excessive memory usage in the Chrome browser. As more pages load and more tabs are opened, Chrome's memory usage tends to grow, which can slow the computer down or even cause it to crash. This article introduces a simple way to release that memory so the browser, and the computer, become responsive again.

How to fix high memory usage in Chrome

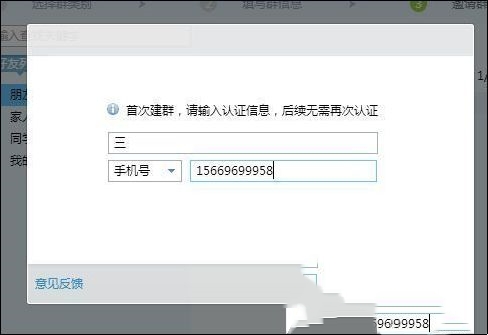

1. On any tab, press Ctrl+D (Windows) or Cmd+D (Mac) to open the bookmark dialog, then click "Modify" to edit the bookmark.

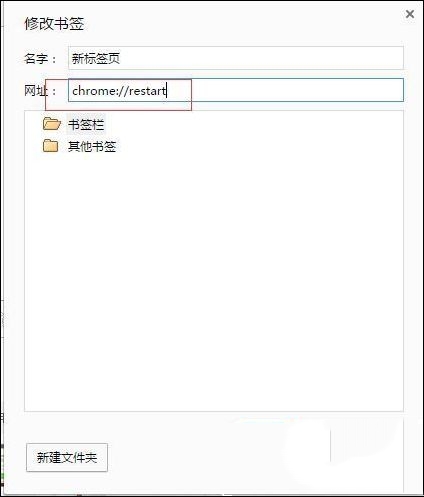

2. Enter chrome://restart in the URL field. You can give the bookmark any name you like. Then click the save button at the bottom.

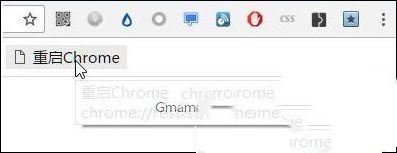

3. From then on, whenever Chrome starts to feel sluggish, click this bookmark in the bookmarks bar to restart Chrome. It usually takes only a few seconds for the memory to be released and the computer to return to normal.
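
If you would rather check a number than rely on feel before clicking the restart bookmark, the following is a minimal sketch, not part of the original method. It assumes Linux or macOS with the standard `ps` command available, and the helper names `chrome_rss_mib` and `current_chrome_rss_mib` are hypothetical. It adds up the resident memory of every process whose command name contains "chrome". Because Chrome's processes share some memory, the total is only a rough upper estimate.

```python
import subprocess


def chrome_rss_mib(ps_output: str, needle: str = "chrome") -> float:
    """Sum resident memory (MiB) of processes whose command contains `needle`.

    Expects lines of the form "<rss in KiB> <command>", as printed by
    `ps -A -o rss= -o comm=`.
    """
    total_kib = 0
    for line in ps_output.splitlines():
        parts = line.split(None, 1)
        if len(parts) != 2 or not parts[0].isdigit():
            continue  # skip blank or malformed lines
        rss_kib, command = parts
        if needle in command.lower():
            total_kib += int(rss_kib)
    return total_kib / 1024


def current_chrome_rss_mib() -> float:
    """Query `ps` (Linux/macOS only) and total Chrome's resident memory."""
    result = subprocess.run(
        ["ps", "-A", "-o", "rss=", "-o", "comm="],
        capture_output=True,
        text=True,
        check=True,
    )
    return chrome_rss_mib(result.stdout)


if __name__ == "__main__":
    print(f"Chrome is using roughly {current_chrome_rss_mib():.0f} MiB")
```

What counts as "too high" depends on how much RAM the machine has, so any threshold for deciding when to restart is a personal choice.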

Hot Topics

Large memory optimization, what should I do if the computer upgrades to 16g/32g memory speed and there is no change?

Jun 18, 2024 pm 06:51 PM

Large memory optimization, what should I do if the computer upgrades to 16g/32g memory speed and there is no change?

Jun 18, 2024 pm 06:51 PM

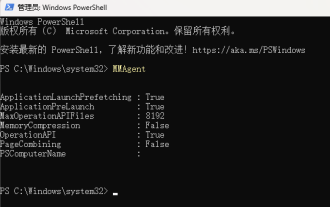

For mechanical hard drives or SATA solid-state drives, you will feel the increase in software running speed. If it is an NVME hard drive, you may not feel it. 1. Import the registry into the desktop and create a new text document, copy and paste the following content, save it as 1.reg, then right-click to merge and restart the computer. WindowsRegistryEditorVersion5.00[HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\SessionManager\MemoryManagement]"DisablePagingExecutive"=d

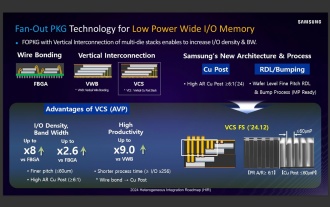

Sources say Samsung Electronics and SK Hynix will commercialize stacked mobile memory after 2026

Sep 03, 2024 pm 02:15 PM

Sources say Samsung Electronics and SK Hynix will commercialize stacked mobile memory after 2026

Sep 03, 2024 pm 02:15 PM

According to news from this website on September 3, Korean media etnews reported yesterday (local time) that Samsung Electronics and SK Hynix’s “HBM-like” stacked structure mobile memory products will be commercialized after 2026. Sources said that the two Korean memory giants regard stacked mobile memory as an important source of future revenue and plan to expand "HBM-like memory" to smartphones, tablets and laptops to provide power for end-side AI. According to previous reports on this site, Samsung Electronics’ product is called LPWide I/O memory, and SK Hynix calls this technology VFO. The two companies have used roughly the same technical route, which is to combine fan-out packaging and vertical channels. Samsung Electronics’ LPWide I/O memory has a bit width of 512

Lexar launches Ares Wings of War DDR5 7600 16GB x2 memory kit: Hynix A-die particles, 1,299 yuan

May 07, 2024 am 08:13 AM

Lexar launches Ares Wings of War DDR5 7600 16GB x2 memory kit: Hynix A-die particles, 1,299 yuan

May 07, 2024 am 08:13 AM

According to news from this website on May 6, Lexar launched the Ares Wings of War series DDR57600CL36 overclocking memory. The 16GBx2 set will be available for pre-sale at 0:00 on May 7 with a deposit of 50 yuan, and the price is 1,299 yuan. Lexar Wings of War memory uses Hynix A-die memory chips, supports Intel XMP3.0, and provides the following two overclocking presets: 7600MT/s: CL36-46-46-961.4V8000MT/s: CL38-48-49 -1001.45V In terms of heat dissipation, this memory set is equipped with a 1.8mm thick all-aluminum heat dissipation vest and is equipped with PMIC's exclusive thermal conductive silicone grease pad. The memory uses 8 high-brightness LED beads and supports 13 RGB lighting modes.

How to fine-tune deepseek locally

Feb 19, 2025 pm 05:21 PM

How to fine-tune deepseek locally

Feb 19, 2025 pm 05:21 PM

Local fine-tuning of DeepSeek class models faces the challenge of insufficient computing resources and expertise. To address these challenges, the following strategies can be adopted: Model quantization: convert model parameters into low-precision integers, reducing memory footprint. Use smaller models: Select a pretrained model with smaller parameters for easier local fine-tuning. Data selection and preprocessing: Select high-quality data and perform appropriate preprocessing to avoid poor data quality affecting model effectiveness. Batch training: For large data sets, load data in batches for training to avoid memory overflow. Acceleration with GPU: Use independent graphics cards to accelerate the training process and shorten the training time.

What to do if the Edge browser takes up too much memory What to do if the Edge browser takes up too much memory

May 09, 2024 am 11:10 AM

What to do if the Edge browser takes up too much memory What to do if the Edge browser takes up too much memory

May 09, 2024 am 11:10 AM

1. First, enter the Edge browser and click the three dots in the upper right corner. 2. Then, select [Extensions] in the taskbar. 3. Next, close or uninstall the plug-ins you do not need.

For only $250, Hugging Face's technical director teaches you how to fine-tune Llama 3 step by step

May 06, 2024 pm 03:52 PM

For only $250, Hugging Face's technical director teaches you how to fine-tune Llama 3 step by step

May 06, 2024 pm 03:52 PM

The familiar open source large language models such as Llama3 launched by Meta, Mistral and Mixtral models launched by MistralAI, and Jamba launched by AI21 Lab have become competitors of OpenAI. In most cases, users need to fine-tune these open source models based on their own data to fully unleash the model's potential. It is not difficult to fine-tune a large language model (such as Mistral) compared to a small one using Q-Learning on a single GPU, but efficient fine-tuning of a large model like Llama370b or Mixtral has remained a challenge until now. Therefore, Philipp Sch, technical director of HuggingFace

The impact of the AI wave is obvious. TrendForce has revised up its forecast for DRAM memory and NAND flash memory contract price increases this quarter.

May 07, 2024 pm 09:58 PM

The impact of the AI wave is obvious. TrendForce has revised up its forecast for DRAM memory and NAND flash memory contract price increases this quarter.

May 07, 2024 pm 09:58 PM

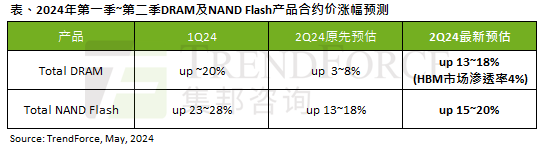

According to a TrendForce survey report, the AI wave has a significant impact on the DRAM memory and NAND flash memory markets. In this site’s news on May 7, TrendForce said in its latest research report today that the agency has increased the contract price increases for two types of storage products this quarter. Specifically, TrendForce originally estimated that the DRAM memory contract price in the second quarter of 2024 will increase by 3~8%, and now estimates it at 13~18%; in terms of NAND flash memory, the original estimate will increase by 13~18%, and the new estimate is 15%. ~20%, only eMMC/UFS has a lower increase of 10%. ▲Image source TrendForce TrendForce stated that the agency originally expected to continue to

Kingbang launches new DDR5 8600 memory, offering CAMM2, LPCAMM2 and regular models to choose from

Jun 08, 2024 pm 01:35 PM

Kingbang launches new DDR5 8600 memory, offering CAMM2, LPCAMM2 and regular models to choose from

Jun 08, 2024 pm 01:35 PM

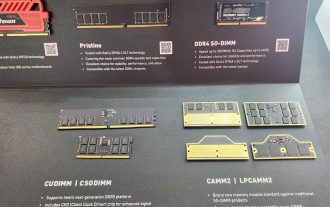

According to news from this site on June 7, GEIL launched its latest DDR5 solution at the 2024 Taipei International Computer Show, and provided SO-DIMM, CUDIMM, CSODIMM, CAMM2 and LPCAMM2 versions to choose from. ▲Picture source: Wccftech As shown in the picture, the CAMM2/LPCAMM2 memory exhibited by Jinbang adopts a very compact design, can provide a maximum capacity of 128GB, and a speed of up to 8533MT/s. Some of these products can even be stable on the AMDAM5 platform Overclocked to 9000MT/s without any auxiliary cooling. According to reports, Jinbang’s 2024 Polaris RGBDDR5 series memory can provide up to 8400