AI video blows up again! Photo + voice becomes video: Alibaba has Sora's leading lady sing and Leonardo DiCaprio rap


Right after Sora, yet another new AI video model has arrived, and it is impressive enough that people everywhere are liking and praising it!


With it, Gao Qiqiang, the villain of "The Knockout", transforms into Luo Xiang and can educate everyone (doge).

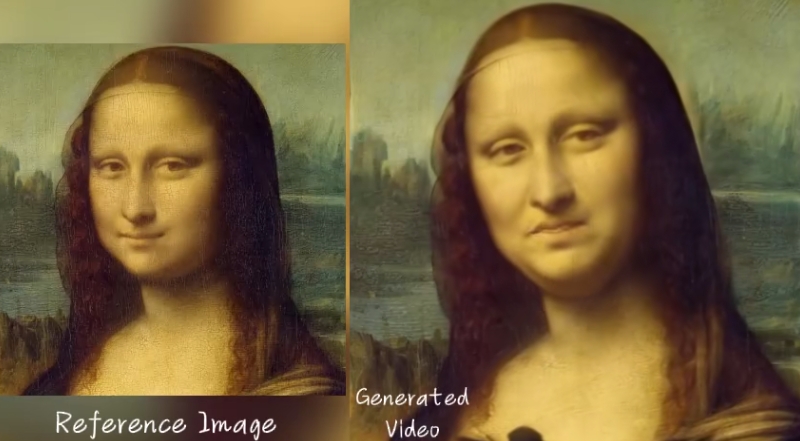

This is Alibaba’s latest audio-driven portrait video generation framework, EMO (Emote Portrait Alive).

With it, you can generate an AI video with vivid expressions by inputting a single reference image and a piece of audio (speech, singing, or rap can be used). The final length of the video depends on the length of the input audio.
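As a rough illustration of how the output length follows the audio, the sketch below computes how many frames a clip would need. The function name `frames_for_audio` and the default of 30 frames per second are assumptions made for this example; the article does not state EMO's actual frame rate.

```python
import math


def frames_for_audio(duration_seconds: float, fps: int = 30) -> int:
    """Return the number of video frames needed to cover an audio clip.

    fps=30 is an assumed value for illustration, not EMO's documented frame rate.
    """
    if duration_seconds < 0 or fps <= 0:
        raise ValueError("duration must be non-negative and fps must be positive")
    # Round up so the final partial frame interval is still covered.
    return math.ceil(duration_seconds * fps)


# A 1 minute 49 second clip (109 seconds) at the assumed 30 fps.
print(frames_for_audio(109))  # → 3270
```

Doubling the audio length doubles the frame count, which is why the audio alone determines how long the generated video is.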

You can have the Mona Lisa, a veteran subject of AI effect demos, recite a monologue:

Here comes a young and handsome Leonardo DiCaprio, whose lip movements keep up with a fast-paced rap without any trouble:

It can even handle Cantonese lip-sync, letting Leslie Cheung sing Eason Chan's "Unconditional":

In short, whether it is making portraits sing (with different portrait and song styles), making portraits speak (in different languages), or staging all kinds of gimmicky cross-actor performances, EMO's results left us stunned for a moment.

Netizens lamented: "We are entering a new reality!"

The Joker from the 2019 film "Joker" delivers lines from the 2008 film "The Dark Knight"


Some netizens have even started grabbing EMO-generated videos and analyzing the results frame by frame.

As shown in the video below, the protagonist is the AI-generated woman from Sora's demo. This time she sings "Don’t Start Now".

Commenters analyzed:

The consistency of this video is even better than before!

In the more than one minute video, the sunglasses on Ms. Sora’s face barely moved, and her ears and eyebrows moved independently.

The most exciting thing is that Ms. Sora’s throat seems to be really breathing! Her body trembled and moved slightly while singing, which shocked me!


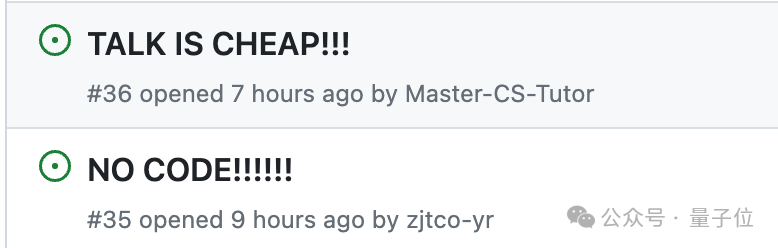

EMO is not built on a DiT-like architecture; that is, it does not replace the traditional UNet with a Transformer. Its backbone network is adapted from Stable Diffusion 1.5.

Specifically, EMO is an expressive audio-driven portrait video generation framework that can generate videos of any duration, determined by the length of the input audio.


The framework mainly consists of two stages:

- Frame encoding stage

A UNet network called ReferenceNet is deployed to extract features from the reference image and from video frames.

- Diffusion stage

First, a pre-trained audio encoder processes the audio into embeddings, and a face region mask is combined with multi-frame noise to control the generation of the face image.

The backbone network then carries out the denoising. Two types of attention are applied inside it: reference attention, which keeps the character's identity consistent, and audio attention, which modulates the character's movement.

In addition, temporal modules operate along the time dimension and adjust the speed of movement.
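To make the two attention types more concrete, here is a minimal pure-Python sketch of one backbone block. It is not EMO's implementation: the names `cross_attention`, `add_residual`, and `backbone_block` are hypothetical, there are no learned projections, and a real system would run on tensors in a deep-learning framework. It only mirrors the data flow described above, in which the noisy latent tokens first attend to reference features (identity) and then to audio features (motion), each time with a residual connection.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, context):
    """Scaled dot-product attention; context vectors serve as both keys and values.

    Simplification for illustration: no learned query/key/value projections.
    """
    dim = len(queries[0])
    attended = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in context]
        weights = softmax(scores)
        attended.append(
            [sum(w * v[i] for w, v in zip(weights, context)) for i in range(dim)]
        )
    return attended


def add_residual(tokens, update):
    """Element-wise sum of two equally shaped lists of vectors."""
    return [[t + u for t, u in zip(tok, upd)] for tok, upd in zip(tokens, update)]


def backbone_block(latent, reference_feats, audio_feats):
    """Hypothetical block: reference attention first, then audio attention."""
    # Reference attention: pull identity information from the reference features.
    latent = add_residual(latent, cross_attention(latent, reference_feats))
    # Audio attention: pull motion cues from the audio embeddings.
    latent = add_residual(latent, cross_attention(latent, audio_feats))
    return latent


noisy_latent = [[1.0, 2.0], [0.0, -1.0]]   # two latent tokens
reference_feats = [[0.5, 0.5]]             # one reference feature vector
audio_feats = [[0.0, 0.0], [0.0, 0.0]]     # silent audio: all-zero embeddings
print(backbone_block(noisy_latent, reference_feats, audio_feats))
# → [[1.5, 2.5], [0.5, -0.5]]
```

With all-zero audio features the second attention contributes nothing, so the output differs from the input only by the reference features. This shows how each conditioning signal enters through its own attention step.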

In terms of training data, the team built a large and diverse audio and video data set containing more than 250 hours of video and more than 15 million images.

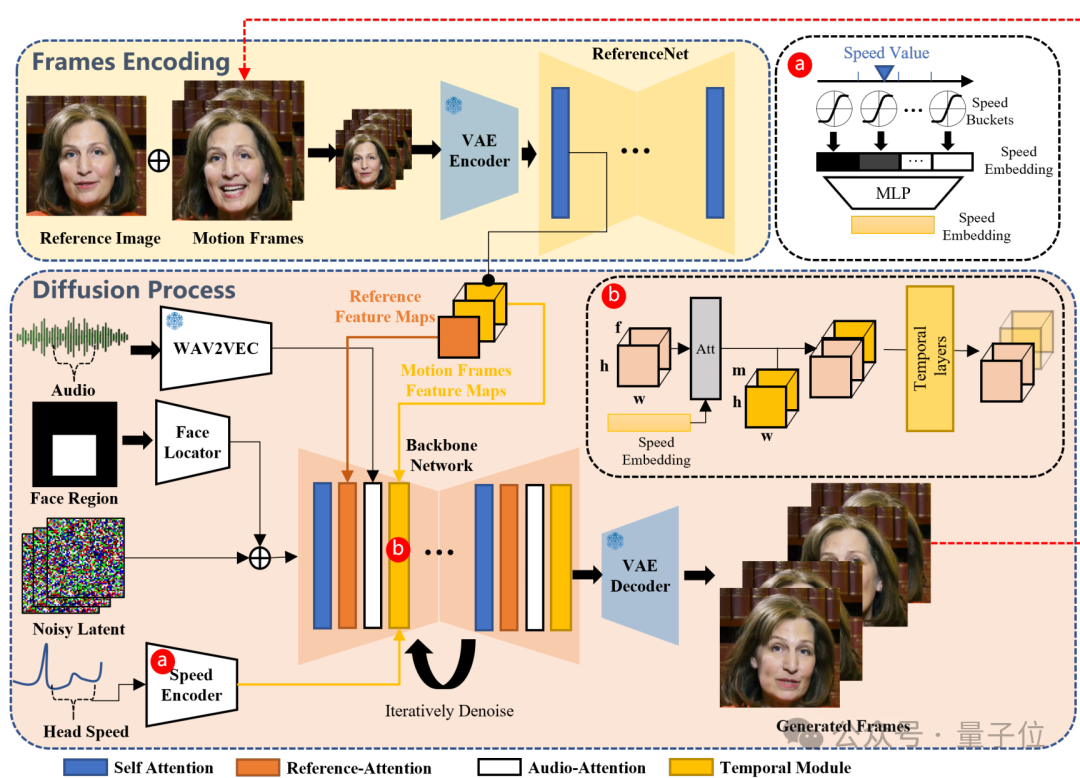

The specific features of the final implementation are as follows:

- Videos of any duration can be generated based on the input audio while ensuring character identity consistency (the longest single video given in the demonstration is 1 minute 49 seconds).

- Supports talking and singing in various languages (the demos include Mandarin, Cantonese, English, Japanese, and Korean).

- Supports different visual styles (photos, traditional paintings, comics, 3D renderings, AI digital humans).


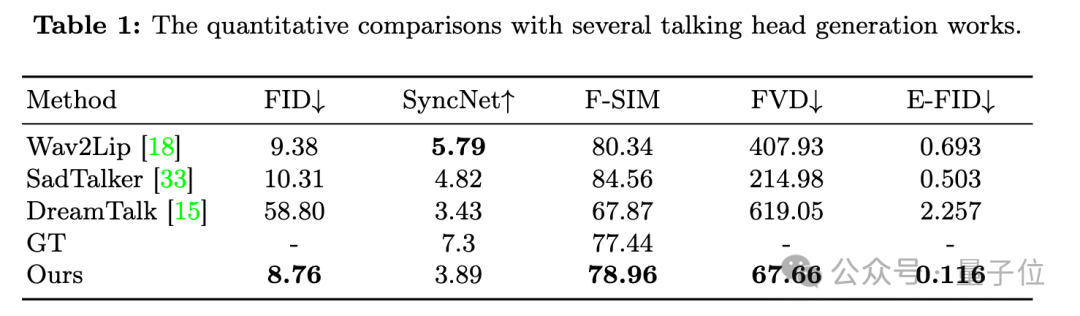

In quantitative comparisons, EMO also improves substantially on previous methods and achieves SOTA. Only on the SyncNet metric, which measures lip-sync quality, is it slightly behind.


Compared with other methods that do not rely on diffusion models, EMO is more time-consuming.

Also, because no explicit control signals are used, other body parts such as hands may be generated inadvertently. A potential solution is to add control signals dedicated to body parts.

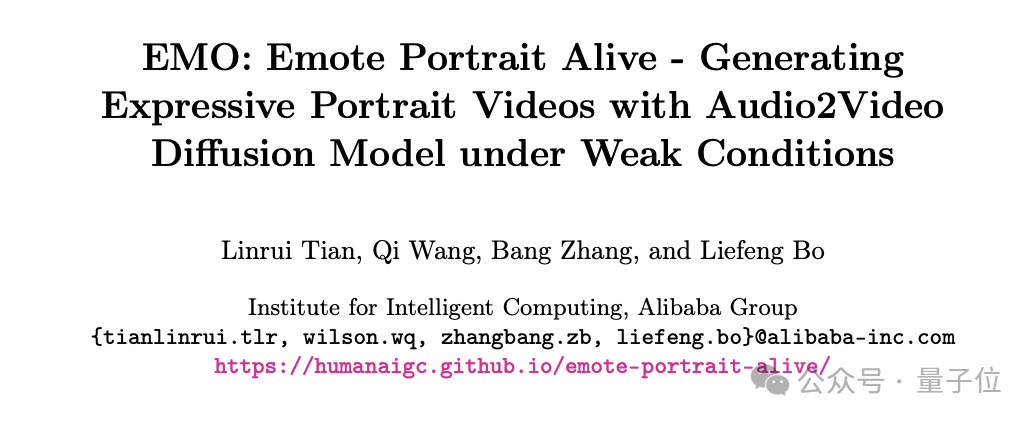

EMO’s team

Finally, let’s take a look at the people on the team behind EMO.

According to the paper, the EMO team comes from Alibaba's Institute for Intelligent Computing.

There are four authors, namely Linrui Tian, Qi Wang, Bang Zhang and Liefeng Bo.


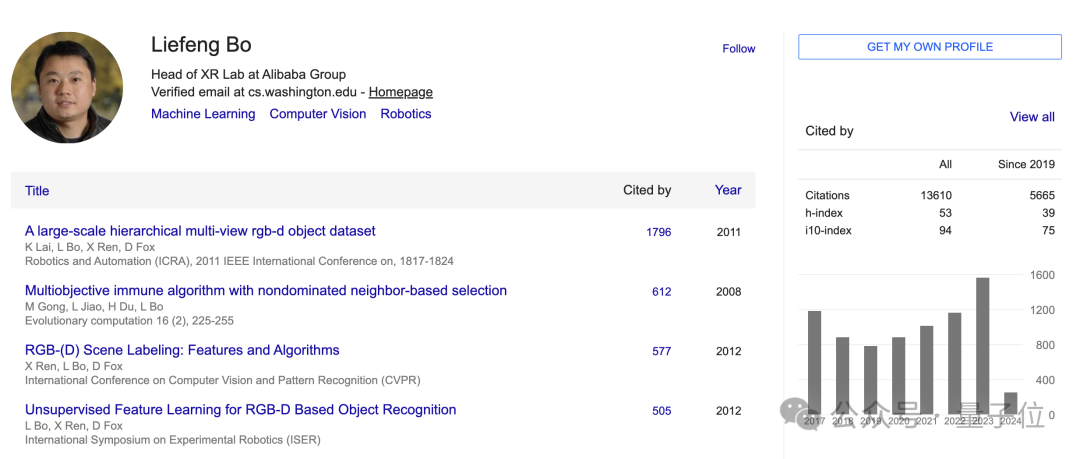

Among them, Liefeng Bo is the current head of the XR laboratory of Alibaba Tongyi Laboratory.

Dr. Liefeng Bo graduated from Xidian University. He did postdoctoral research at the Toyota Technological Institute at Chicago and at the University of Washington. His research focuses mainly on ML, CV, and robotics, and his Google Scholar citations exceed 13,000.

Before joining Alibaba, he first served as chief scientist at Amazon’s Seattle headquarters, and then joined JD Digital Technology Group’s AI laboratory as chief scientist.

In September 2022, Liefeng Bo joined Alibaba.


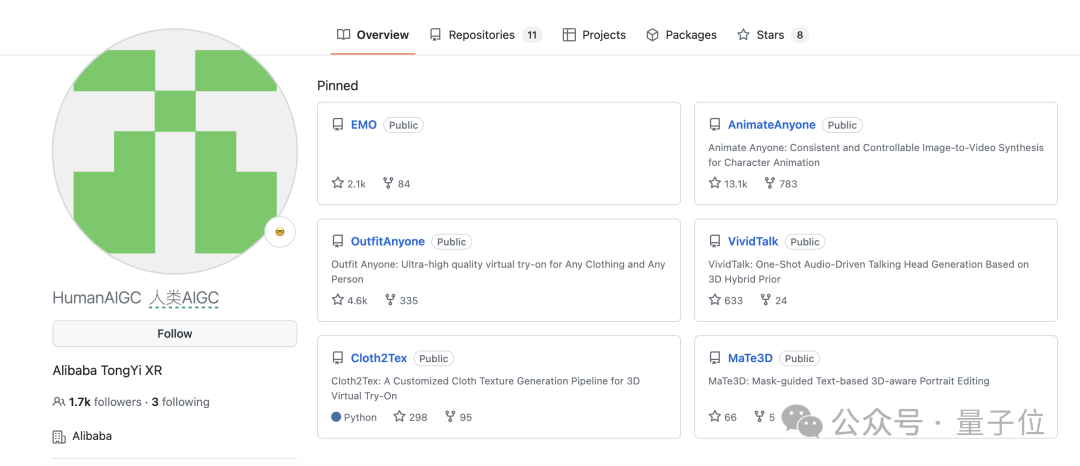

EMO is not the first time Alibaba has achieved success in the AIGC field.


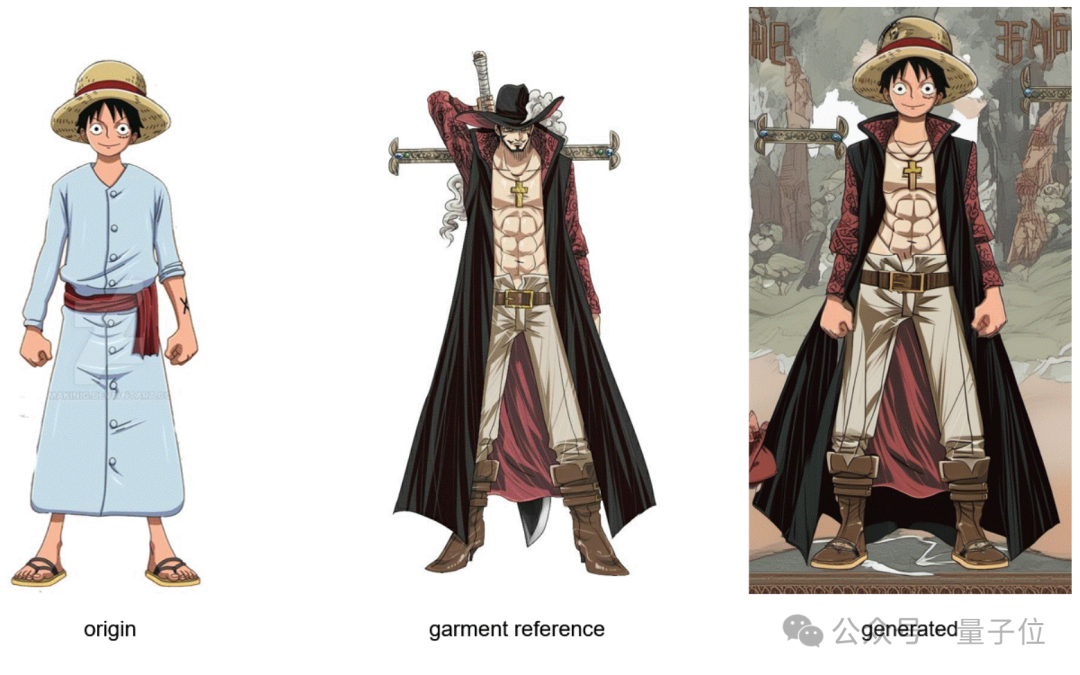

Before it came OutfitAnyone, which uses AI to change outfits with one click.


There is also AnimateAnyone, which had cats and dogs all over the world doing the "bath dance".


Now that EMO has launched, many netizens are remarking that Alibaba really has built up some technical depth here.


If all these technologies are combined now, the effect will be...

I don’t dare to think about it, but I’m really looking forward to it.


In short, we are getting closer and closer to "hand the AI a script and get an entire movie back".


One More Thing

Sora represents a step-change breakthrough in text-driven video synthesis.

EMO also represents a new level of audio-driven video synthesis.

Although the two tasks are different and the specific architecture is different, they still have one important thing in common:

Neither has an explicit physical model inside, yet both simulate physical laws to a certain extent.

Therefore, some people believe this contradicts LeCun's insistence that "modeling the world for actions by generating pixels is wasteful and doomed to failure" and supports Jim Fan's idea of a "data-driven world model".


Many approaches have failed in the past, and the current success may really come down to "The Bitter Lesson" by Sutton, the father of reinforcement learning: brute force works miracles.

Let AI discover the way people do, rather than contain what people have discovered

Breakthrough progress is ultimately achieved by scaling up computation

Paper: https://www.php.cn/link/a717f41c203cb970f96f706e4b12617b

GitHub: https://www.php.cn/link/e43a09ffc30b44cb1f0db46f87836f40

