AI mind-reading technology has been upgraded! A pair of glasses directly controls the Boston robot dog, making brain-controlled robots a reality

Remember the earlier AI "mind-reading" demos? The technology has now taken another step forward: humans can control a robot directly with their thoughts!

MIT researchers have released the Ddog project, which uses a brain-computer interface (BCI) device they developed in-house to control Spot, Boston Dynamics' robot dog.

The robot dog can move to specific areas, fetch objects, or take photos in response to the user's thoughts.

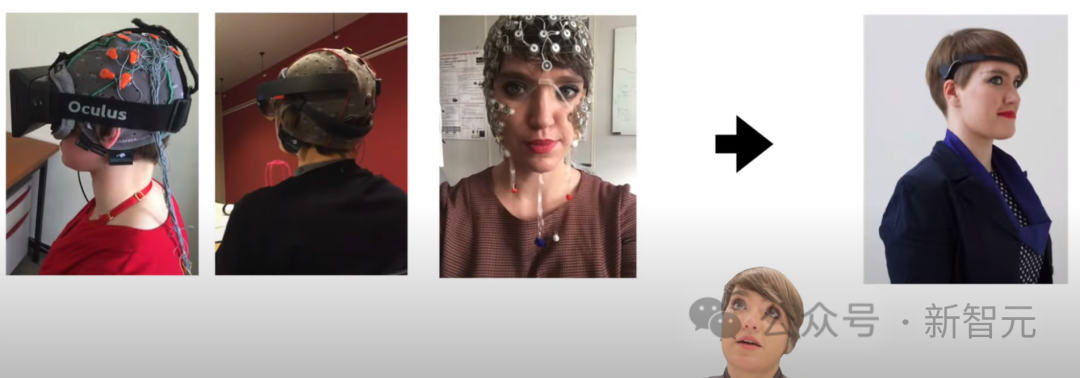

Earlier "mind-reading" setups required headgear packed with sensors; this time, the brain-computer interface takes the form of a pair of wireless glasses (AttentivU).

Although the behavior shown in the video is simple, the purpose of the system is to turn Spot into a basic communication tool for people with conditions such as ALS, cerebral palsy, or spinal cord injury.

All it takes is two iPhones and a pair of glasses to bring practical help and care to people in desperate need.

And, as the accompanying paper shows, the system is built on a considerable amount of complex engineering.

Paper address: https://doi.org/10.3390/s24010080

Using the Ddog system

AttentivU is a brain-computer interface system with sensors embedded in the glasses frame that measure a person's electroencephalogram (EEG), i.e. brain activity, and electrooculogram (EOG), i.e. eye movement.

The foundation for this research is MIT’s Brain Switch, a real-time, closed-loop BCI that allows users to communicate nonverbally and in real time with caregivers.

The Ddog system achieved an 83.4% success rate, and it marks the first time a wireless, non-visual BCI system has been integrated with Spot in a personal assistant use case.

The video shows the evolution of brain interface devices and some of the developers' thinking.

The research team had previously integrated the brain-computer interface with a smart home, and has now achieved control of a robot that can move and manipulate objects.

These studies offer a glimmer of hope to people with special needs, giving them the prospect of survival and even a better life in the future.

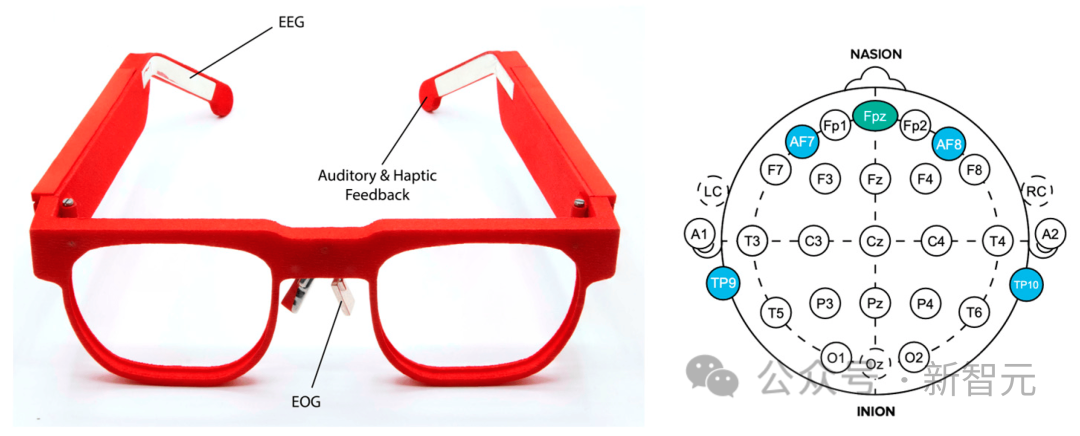

Compared to the octopus-like sensor headgear, the glasses are indeed much sleeker.

According to the National Organization for Rare Disorders, there are currently 30,000 ALS patients in the United States, and an estimated 5,000 new cases are diagnosed each year. Additionally, approximately 1 million Americans have cerebral palsy, according to the Cerebral Palsy Guide.

Many of these people have lost or will eventually lose the ability to walk, dress, talk, write, and even breathe.

While communication aids do exist, most are eye-gaze devices that let users communicate through a computer. Few systems allow users to interact with the world around them.

This BCI quadruped robotic system serves as an early prototype, paving the way for the future development of modern personal assistant robots.

Hopefully, we can see even more amazing capabilities in future iterations.

Brain-controlled quadruped robot

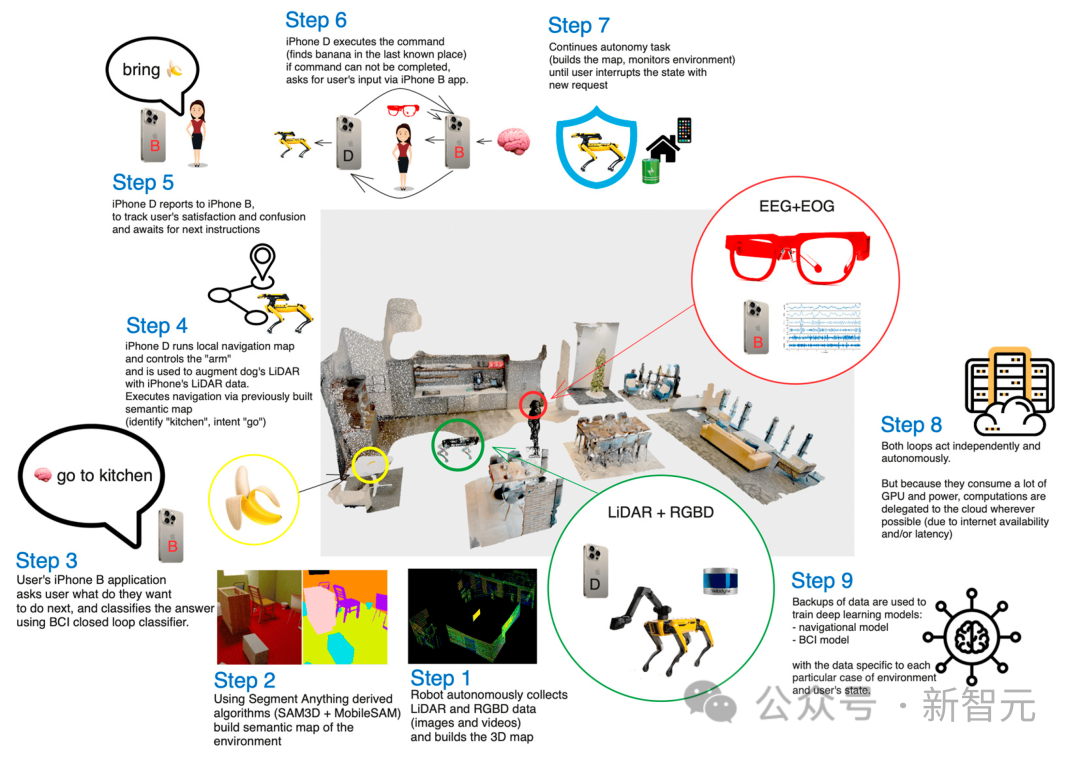

In this work, the researchers explore how a wireless, wearable BCI device can control a quadruped robot: Boston Dynamics' Spot.

The device developed by the researchers measures the user's electroencephalogram (EEG) and electrooculogram (EOG) activity through electrodes embedded in the frame of the glasses.

Users mentally answer a series of yes/no questions, and each question corresponds to a preset set of Spot operations.

For example, a sequence of answers can prompt Spot to walk across a room, pick up an object (such as a bottle of water), and bring it back to the user.
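
The mapping from yes/no answers to preset operations can be sketched roughly as below. This is only an illustration under stated assumptions: the question prompts, the action names, and the rule of stopping at the first "no" are hypothetical and are not taken from the paper.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Question:
    """One yes/no prompt and the preset robot actions tied to a 'yes'."""
    prompt: str
    actions_if_yes: list[str] = field(default_factory=list)


# Hypothetical question list; prompts and action names are illustrative only.
QUESTIONS = [
    Question("Do you want something from the kitchen?", ["walk_to:kitchen"]),
    Question("Do you want the water bottle?",
             ["pick_up:water_bottle", "return_to_user"]),
]


def plan_actions(answers: list[bool]) -> list[str]:
    """Collect the preset actions for each 'yes'; stop at the first 'no'."""
    plan: list[str] = []
    for question, answer in zip(QUESTIONS, answers):
        if not answer:
            break
        plan.extend(question.actions_if_yes)
    return plan


print(plan_actions([True, True]))
print(plan_actions([True, False]))
```

In a real system each boolean would come from the BCI classifier rather than from a hard-coded list.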

Robots and BCI

To this day, EEG remains one of the most practical and applicable non-invasive brain-computer interface methods.

BCI systems can be controlled using endogenous (spontaneous) or exogenous (evoked) signals.

In exogenous brain-computer interfaces, evoked signals occur when a person pays attention to external stimuli, such as visual or auditory cues.

The advantages of this approach include minimal training and high bit rates of up to 60 bits/min, but it requires the user to focus on the stimulus at all times, which limits its applicability in real-life situations. Furthermore, users tire quickly when using exogenous BCIs.

In endogenous brain-computer interfaces, control signals are generated independently of any external stimulus and can be fully executed by the user on demand. For those users with sensory impairments, this provides a more natural and intuitive way of interacting, allowing users to spontaneously issue commands to the system.

However, this method usually requires longer training time and has a lower bit rate.
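
To make the notion of bit rate concrete, the sketch below uses the Wolpaw information transfer rate, a common way of reporting BCI throughput. The article does not say which definition lies behind its 60 bits/min figure, so treat the choice of formula and the function names as assumptions.

```python
import math


def bits_per_selection(n_classes: int, accuracy: float) -> float:
    """Wolpaw ITR: log2(N) + P*log2(P) + (1-P)*log2((1-P)/(N-1))."""
    if n_classes < 2:
        raise ValueError("need at least two classes")
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be in [0, 1]")
    bits = math.log2(n_classes)
    if accuracy > 0.0:
        bits += accuracy * math.log2(accuracy)
    if accuracy < 1.0:
        bits += (1.0 - accuracy) * math.log2((1.0 - accuracy) / (n_classes - 1))
    return bits


def bits_per_minute(n_classes: int, accuracy: float,
                    selections_per_minute: float) -> float:
    """Scale the per-selection information by the selection rate."""
    return bits_per_selection(n_classes, accuracy) * selections_per_minute


# A binary (yes/no) interface at chance level carries no information.
print(bits_per_selection(2, 0.5))
print(bits_per_minute(2, 0.9, 10))
```

The formula shows why a slow, binary endogenous interface has a low bit rate: each selection carries at most one bit, and less when accuracy drops.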

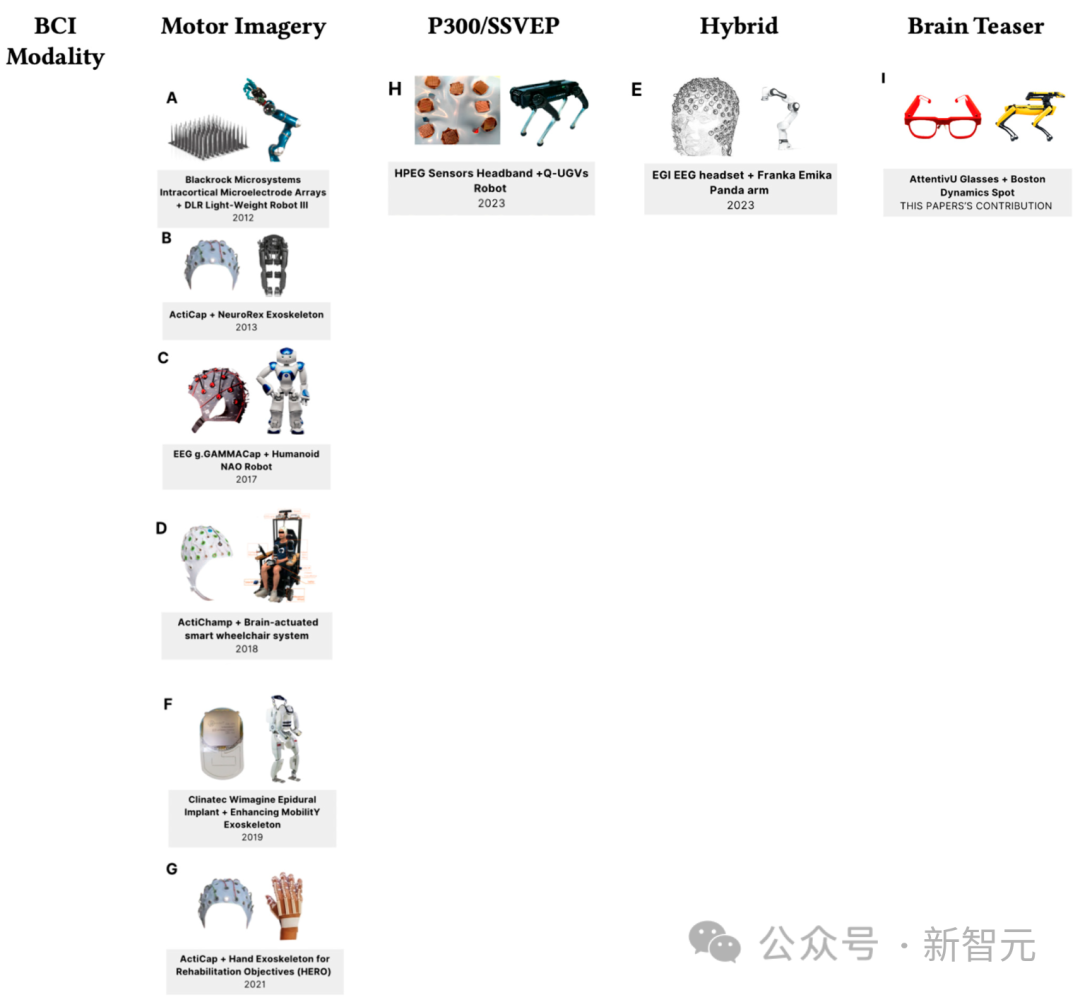

Robotic applications of brain-computer interfaces are usually aimed at people who need assistance, and they often involve wheelchairs and exoskeletons.

The figure below shows the latest progress in brain-computer interface and robotics technology as of 2023.

Quadruped robots are often used to support users in complex work environments or defense applications.

One of the most famous quadruped robots is Boston Dynamics’ Spot, which can carry up to 15 kilograms of payload and iteratively map maintenance sites such as tunnels. The real estate and mining industries are also adopting quadruped robots like Spot to help monitor job sites with complex logistics.

This work controls the Spot robot with a mobile BCI solution based on mental arithmetic tasks. The overall architecture is named Ddog.

Ddog architecture

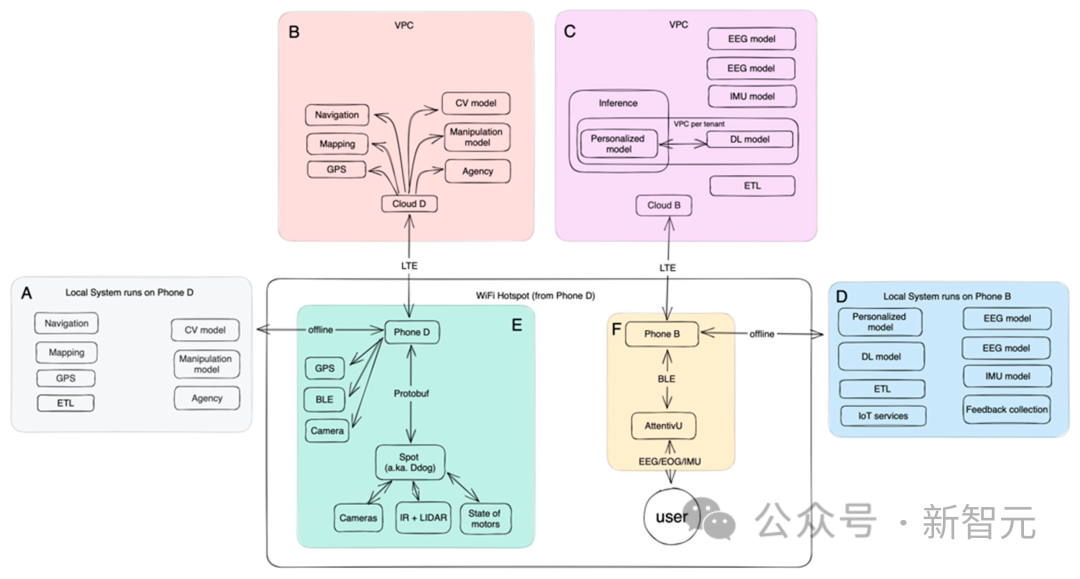

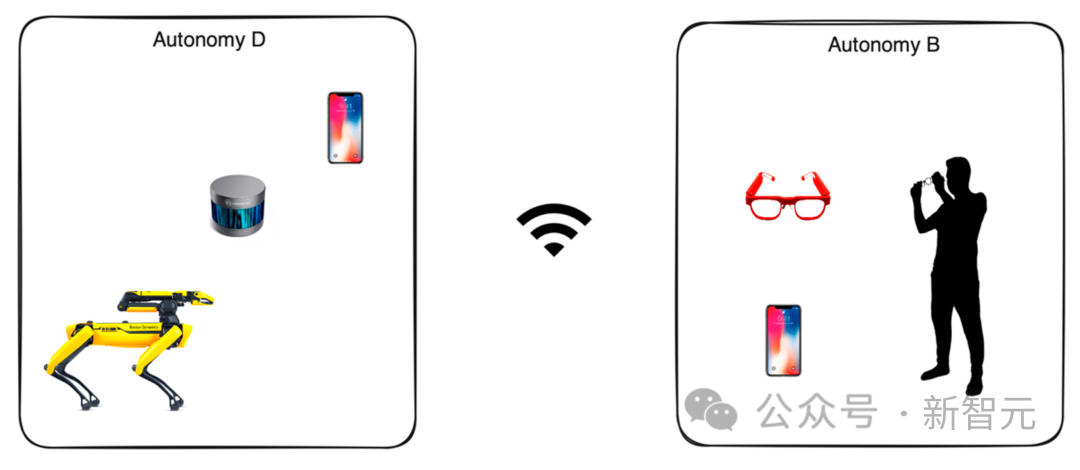

The following figure shows the overall structure of Ddog:

Ddog is an autonomous application that enables users to control the Spot robot through input from the BCI, while the application uses voice to provide feedback to the user and their caregivers.

The system is designed to work completely offline or completely online. The online version has a more advanced set of machine learning models, as well as better fine-tuned models, and is more power efficient for local devices.

The entire system is designed for real-life scenarios and allows for rapid iteration on most parts.

On the client side, the user interacts with the brain-computer interface device (AttentivU) through a mobile application that communicates with the device over the Bluetooth Low Energy (BLE) protocol.

The user's mobile device communicates with a second phone that controls the Spot robot, enabling agency, manipulation, navigation, and ultimately assistance to the user.

The phones can communicate over Wi-Fi or a mobile network. The controlling phone sets up a Wi-Fi hotspot, and both Ddog and the user's phone connect to it. In online mode, the system can also connect to models running in the cloud.

Server side

The server side uses Kubernetes (K8s) clusters, each deployed in its own Virtual Private Cloud (VPC).

The cloud works within a dedicated VPC, typically deployed in the same Availability Zone, close to end users, to minimize response latency for each service.

Each container in the cluster is designed for a single purpose (a microservice architecture), and each service runs an AI model. Their tasks include navigation, mapping, computer vision, manipulation, localization, and agency.

Mapping: A service that collects information about the robot's surroundings from different sources. It maps static, immovable data (a tree, a building, a wall) but also collects dynamic data that changes over time (a car, a person).

Navigation: Based on map data collected and augmented in previous services, the navigation service is responsible for constructing a path between point A and point B in space and time. It is also responsible for constructing alternative routes, as well as estimating the time required.

Computer Vision: Collects visual data from the robot's cameras and augments it with data from the phone to generate spatial and temporal representations. This service also attempts to segment each visual point and identify objects.

The cloud is also responsible for training the BCI-related models, which use electroencephalogram (EEG), electrooculogram (EOG), and inertial measurement unit (IMU) data.

The offline model deployed on the phone handles data collection and aggregation, and uses TensorFlow's mobile models (optimized for limited RAM and ARM-based CPUs) for real-time inference.

Vision and manipulation

The segmentation model was originally deployed as a single TensorFlow 3D model leveraging LIDAR data. The authors then extended it to a few-shot model and enhanced it by running complementary models on Neural Radiance Field (NeRF) and RGBD data.

The raw data collected by Ddog is aggregated from five cameras. Each camera can provide grayscale, fisheye, depth and infrared data. There is also a sixth camera inside the arm's gripper, with 4K resolution and LED capabilities, that works with a pre-trained TensorFlow model to detect objects.

The point cloud is generated from LIDAR data and RGBD data from Ddog and the mobile phone. After data acquisition is complete, the data is normalized into a single coordinate system and matched to a global state that brings together all imaging and 3D positioning data.
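
Normalizing several point clouds into one coordinate system usually amounts to applying a rigid transform per sensor. The sketch below assumes each sensor's pose in the global frame is already known; the helper names and the example poses are hypothetical, and the article does not describe how the authors estimate or apply these transforms.

```python
import numpy as np


def make_transform(yaw_rad: float, translation) -> np.ndarray:
    """4x4 homogeneous transform: rotate about z by yaw, then translate."""
    c, s = np.cos(yaw_rad), np.sin(yaw_rad)
    transform = np.eye(4)
    transform[:3, :3] = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    transform[:3, 3] = translation
    return transform


def to_global(points: np.ndarray, sensor_to_global: np.ndarray) -> np.ndarray:
    """Map an (N, 3) array of sensor-frame points into the global frame."""
    homogeneous = np.hstack([points, np.ones((len(points), 1))])
    return (sensor_to_global @ homogeneous.T).T[:, :3]


def merge_clouds(clouds) -> np.ndarray:
    """Stack (points, sensor_to_global) pairs into one global point cloud."""
    return np.vstack([to_global(points, pose) for points, pose in clouds])


# Assumed poses: robot sensor at the global origin, phone 2 m away, turned 90 degrees.
robot_points = np.array([[1.0, 0.0, 0.0]])
phone_points = np.array([[1.0, 0.0, 0.0]])
merged = merge_clouds([
    (robot_points, make_transform(0.0, [0.0, 0.0, 0.0])),
    (phone_points, make_transform(np.pi / 2, [2.0, 0.0, 0.0])),
])
print(merged)
```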

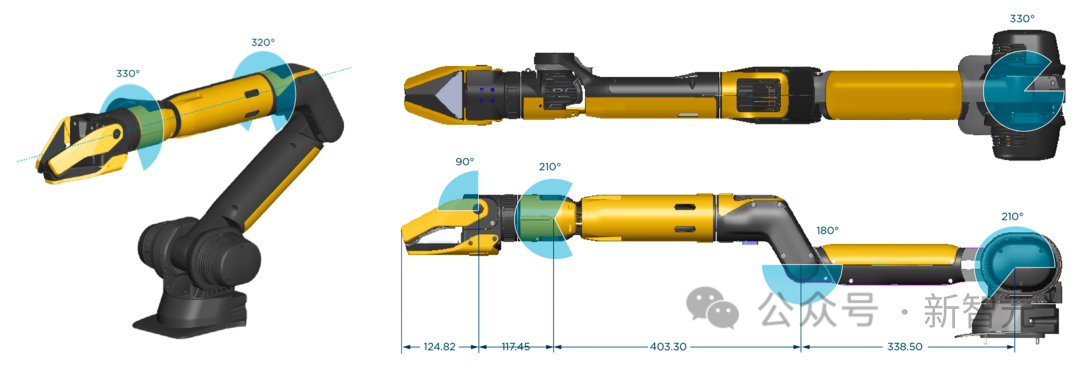

Manipulation depends entirely on the quality of the robotic arm gripper mounted on Ddog; the one pictured is manufactured by Boston Dynamics.

The use cases in the experiment were limited to basic interactions with objects in predefined locations.

The authors mapped out a large laboratory space and set it up as an "apartment" containing a "kitchen" area (a tray with different cups and bottles), a "living room" area (a small sofa with pillows and a small coffee table), and a "window lounge" area.

The number of use cases is constantly growing, so the only way to cover most of them is to deploy the system to run continuously for a period of time and use the collected data to optimize such sequences and experiences.

AttentivU

EEG data is collected from the AttentivU device. The electrodes of AttentivU glasses are made of natural silver and are located at TP9 and TP10 according to the international 10-20 electrode placement system. The glasses also include two EOG electrodes located on the nose pads and an EEG reference electrode located at the Fpz position.

These sensors can provide the information needed and enable real-time, closed-loop intervention when needed.

The device has two modes, EEG and EOG, which can be used to capture signals of attention, engagement, fatigue, and cognitive load in real time. EEG has been used as a neurophysiological indicator of the transition between wakefulness and sleep, while EOG measures the bioelectrical signals induced during eye movements by the corneo-retinal dipole. Research shows that eye movements correlate with the type of memory access needed to perform certain tasks and are a good measure of visual engagement, attention, and drowsiness.

Experiment

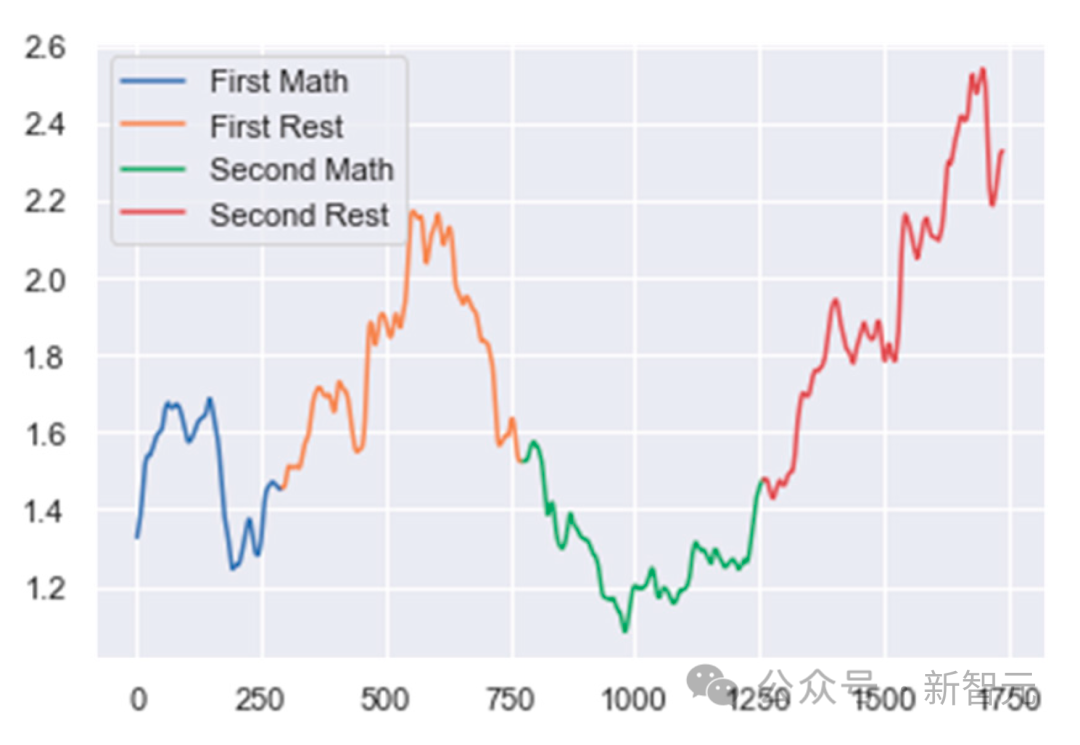

The EEG data is first divided into windows. Each window is a 1-second segment of EEG data with 75% overlap with the previous window.
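
The windowing step can be sketched as follows. This is a minimal illustration rather than the authors' code: the 256 Hz sampling rate and the function name `sliding_windows` are assumptions, since the article does not state the device's sampling rate.

```python
import numpy as np

FS = 256  # assumed sampling rate in Hz (not given in the article)


def sliding_windows(signal: np.ndarray, fs: int = FS,
                    window_s: float = 1.0, overlap: float = 0.75) -> np.ndarray:
    """Split a 1-D signal into fixed-length windows with fractional overlap."""
    window = int(round(window_s * fs))
    step = int(round(window * (1.0 - overlap)))
    if step < 1:
        raise ValueError("overlap too large for this window length")
    if len(signal) < window:
        return np.empty((0, window))
    # Every possible window start, then keep one start per step.
    view = np.lib.stride_tricks.sliding_window_view(signal, window)
    return view[::step]


signal = np.arange(4 * FS, dtype=float)  # 4 seconds of placeholder samples
windows = sliding_windows(signal)
print(windows.shape)
```

With 75% overlap, each new window starts a quarter of a second after the previous one, so a new classification can be produced four times per second.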

Then comes data preprocessing and cleaning. Data were filtered using a combination of a 50 Hz notch filter and a bandpass filter with a passband of 0.5 Hz to 40 Hz to ensure removal of power line noise and unwanted high frequencies.
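
A filter chain of this kind could be built with SciPy as sketched below. The 256 Hz sampling rate, the notch quality factor, the fourth-order Butterworth design, and the zero-phase filtering are all assumptions made for illustration; the article specifies only the 50 Hz notch and the 0.5 Hz to 40 Hz passband.

```python
import numpy as np
from scipy.signal import butter, filtfilt, iirnotch, sosfiltfilt

FS = 256.0  # assumed sampling rate in Hz (not given in the article)


def clean_eeg(signal, fs=FS, notch_hz=50.0, band=(0.5, 40.0)):
    """Apply a 50 Hz notch, then a 0.5-40 Hz band-pass, both zero-phase."""
    b, a = iirnotch(notch_hz, Q=30.0, fs=fs)  # Q=30 is an assumed value
    notched = filtfilt(b, a, signal)
    # Second-order sections keep the narrow low-frequency edge numerically stable.
    sos = butter(4, band, btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, notched)


def amplitude_at(signal, freq_hz, fs=FS):
    """Amplitude of the FFT bin closest to freq_hz."""
    spectrum = np.abs(np.fft.rfft(signal)) * 2.0 / len(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    return spectrum[np.argmin(np.abs(freqs - freq_hz))]


t = np.arange(0, 4.0, 1.0 / FS)
raw = np.sin(2 * np.pi * 10 * t) + np.sin(2 * np.pi * 50 * t)  # 10 Hz + mains hum
cleaned = clean_eeg(raw)
print(amplitude_at(raw, 50.0), amplitude_at(cleaned, 50.0))
```

The synthetic signal mixes a 10 Hz component, which should survive, with 50 Hz interference, which should be strongly attenuated.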

Next, the authors created an artifact rejection algorithm: an epoch is rejected if the absolute power difference between two consecutive epochs is greater than a predefined threshold.
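
Read literally, the rejection rule might look like the sketch below. Defining power as the mean squared amplitude of an epoch, rejecting the later epoch of each offending pair, and the threshold value are all assumptions; the article gives none of these details.

```python
import numpy as np


def keep_mask(epochs: np.ndarray, threshold: float) -> np.ndarray:
    """Return a boolean mask over epochs; False marks a rejected epoch.

    epochs has shape (n_epochs, n_samples). An epoch is rejected when its
    power differs from the previous epoch's power by more than threshold.
    """
    power = np.mean(epochs ** 2, axis=1)       # mean squared amplitude
    keep = np.ones(len(epochs), dtype=bool)    # first epoch has no predecessor
    keep[1:] = np.abs(np.diff(power)) <= threshold
    return keep


# Four placeholder epochs; the third has a large amplitude jump.
epochs = np.ones((4, 256))
epochs[2] *= 10.0
mask = keep_mask(epochs, threshold=5.0)
print(mask)
```

Under this literal reading, the clean epoch right after an artifact is also rejected, because its power differs sharply from the artifact's. A variant that compares against the last accepted epoch would avoid that.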

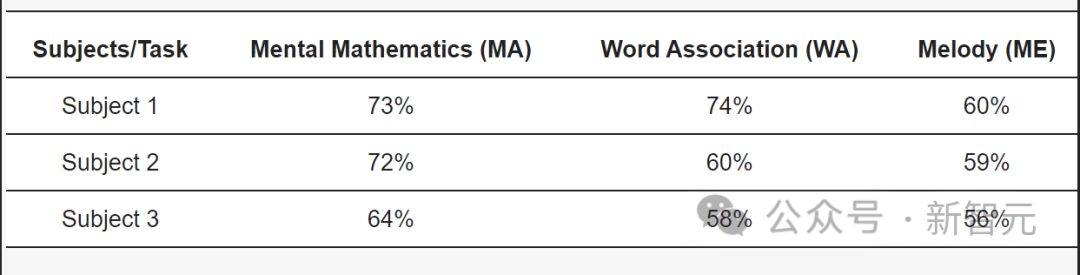

In the final step of classification, the authors mixed different spectral band power ratios to track each subject’s task-based mental activity. For MA, the ratio is (alpha/delta). For WA, the ratio is (delta/low beta) and for ME, the ratio is (delta/alpha).
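
A band power ratio for one window can be computed roughly as below. The band edges and the 256 Hz sampling rate are assumptions (the article names the bands but not their limits), and a plain FFT periodogram stands in for whatever spectral estimator the authors used.

```python
import numpy as np

FS = 256  # assumed sampling rate in Hz

# Assumed band edges in Hz; conventions vary between studies.
BANDS = {"delta": (0.5, 4.0), "alpha": (8.0, 13.0), "low_beta": (13.0, 20.0)}

# Ratios per task as listed in the article: (numerator, denominator).
TASK_RATIOS = {"MA": ("alpha", "delta"),
               "WA": ("delta", "low_beta"),
               "ME": ("delta", "alpha")}


def band_power(window: np.ndarray, band, fs: int = FS) -> float:
    """Sum of periodogram power inside [low, high) Hz."""
    freqs = np.fft.rfftfreq(len(window), d=1.0 / fs)
    power = np.abs(np.fft.rfft(window)) ** 2
    low, high = band
    return float(power[(freqs >= low) & (freqs < high)].sum())


def task_ratio(window: np.ndarray, task: str, fs: int = FS) -> float:
    """Band power ratio associated with a task label."""
    numerator, denominator = TASK_RATIOS[task]
    return band_power(window, BANDS[numerator], fs) / band_power(
        window, BANDS[denominator], fs)


t = np.arange(FS) / FS  # one 1-second window
window = 2.0 * np.sin(2 * np.pi * 10 * t) + np.sin(2 * np.pi * 2 * t)
print(task_ratio(window, "MA"))
```

Because the ratio divides one band by another, it is insensitive to the overall scale of the signal, which makes it comparable across windows.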

Then, change point detection algorithms are used to track changes in these ratios. Sudden increases or decreases in these ratios indicate a change in the user's mental state.
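
The article does not name the change point detection algorithm, so the sketch below substitutes a very simple mean-shift detector: it compares the mean of the ratio just before and just after each sample and reports local peaks of that difference. The function name, window size, and threshold are hypothetical.

```python
import numpy as np


def detect_change_points(values, half_window: int = 5,
                         threshold: float = 1.0) -> list:
    """Indices where the mean shifts by more than threshold (local peaks only)."""
    values = np.asarray(values, dtype=float)
    n = len(values)
    scores = np.zeros(n)
    for i in range(half_window, n - half_window + 1):
        before = values[i - half_window:i].mean()
        after = values[i:i + half_window].mean()
        scores[i] = abs(after - before)
    # Keep only local maxima so that each shift is reported once.
    return [i for i in range(1, n - 1)
            if scores[i] > threshold
            and scores[i] >= scores[i - 1]
            and scores[i] > scores[i + 1]]


ratios = [1.0] * 20 + [3.0] * 20  # placeholder ratio series with one jump
print(detect_change_points(ratios))
```

A reported index marks the first sample of the new regime, which in the described pipeline would be read as a change in the user's mental state.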

For subjects with ALS, the model achieved an accuracy of 73% in the MA task, 74% in the WA task, and 60% in the ME task.
