Why didn't ICLR accept Mamba's paper? The AI community has sparked a big discussion

In 2023, the dominance of the Transformer in large AI models began to be challenged by a new architecture called "Mamba". Mamba is a selective state space model that matches the Transformer on language modeling and may even surpass it. It also scales linearly as the context length increases, which lets it handle million-length sequences and deliver 5 times higher inference throughput on real data. These gains drew wide attention and opened up new possibilities for the field.
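To make "selective" and "linear scaling" concrete, here is a toy sketch of a one-dimensional state space recurrence whose step size and gates depend on the current input. It is not the Mamba implementation: the function names and the scalar parameters `a`, `w_delta`, `w_b`, and `w_c` are hypothetical, chosen only for illustration, whereas the real model uses learned projections and a multi-dimensional state. The point is only that one pass with a fixed-size state costs a number of steps proportional to the sequence length, while a naive attention layer scores every pair of positions.

```python
import math


def toy_selective_scan(xs, a=-1.0, w_delta=0.5, w_b=1.0, w_c=1.0):
    """Toy scalar selective state space recurrence (illustrative only).

    All parameters are hypothetical stand-ins for learned weights.
    One loop over the sequence with a single float of state: O(L) time.
    """
    h = 0.0
    ys = []
    for x in xs:
        delta = math.log1p(math.exp(w_delta * x))  # softplus: positive, input-dependent step size
        a_bar = math.exp(delta * a)                # decay factor in (0, 1) because a < 0
        b_t = w_b * x                              # input-dependent input gate
        c_t = w_c * x                              # input-dependent output gate
        h = a_bar * h + delta * b_t * x            # state update
        ys.append(c_t * h)                         # readout
    return ys


def attention_score_count(length):
    """Number of pairwise scores a naive (non-causal) attention layer computes."""
    return length * length


if __name__ == "__main__":
    print(toy_selective_scan([0.5, -1.0, 2.0]))
    for n in (1_000, 2_000):
        print(n, "scan steps:", n, "attention scores:", attention_score_count(n))
```

Doubling the sequence length doubles the number of scan steps but quadruples the number of attention scores, which is the scaling gap the article refers to.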

Within little more than a month of its release, Mamba had already spawned many follow-up projects, such as MoE-Mamba, Vision Mamba, VMamba, U-Mamba, and MambaByte, and had shown great potential for overcoming the Transformer's shortcomings.

However, this rising "star" hit a setback at ICLR 2024. The latest public results show that Mamba's paper is still listed under "pending decision", so it is unclear whether the decision was merely delayed or the paper was rejected.

Overall, Mamba received scores of 8/8/6/3 from four reviewers. Some people found it puzzling that a paper could still be rejected with such scores.

To understand the reason, we have to look at what the reviewers who gave low scores said.

Paper review page: https://openreview.net/forum?id=AL1fq05o7H

Why is it “not good enough”?

In the review feedback, the reviewer who gave a score of "3: reject, not good enough" raised several concerns about Mamba:

Thoughts on model design:

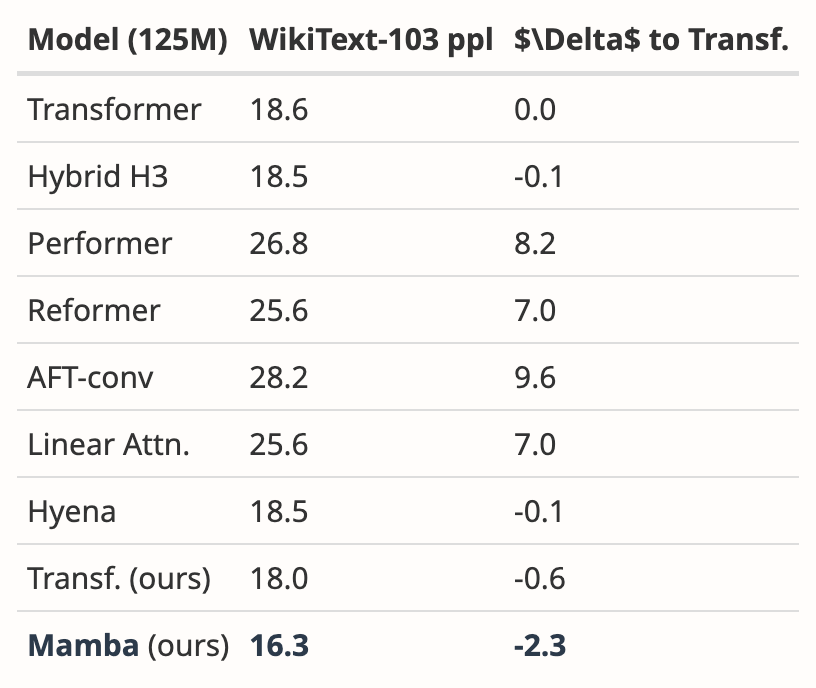

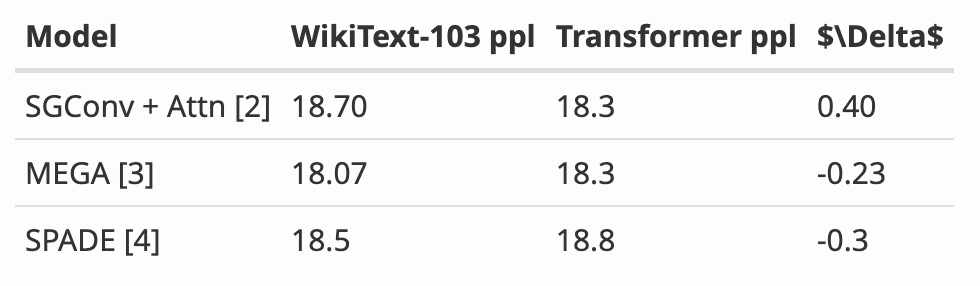

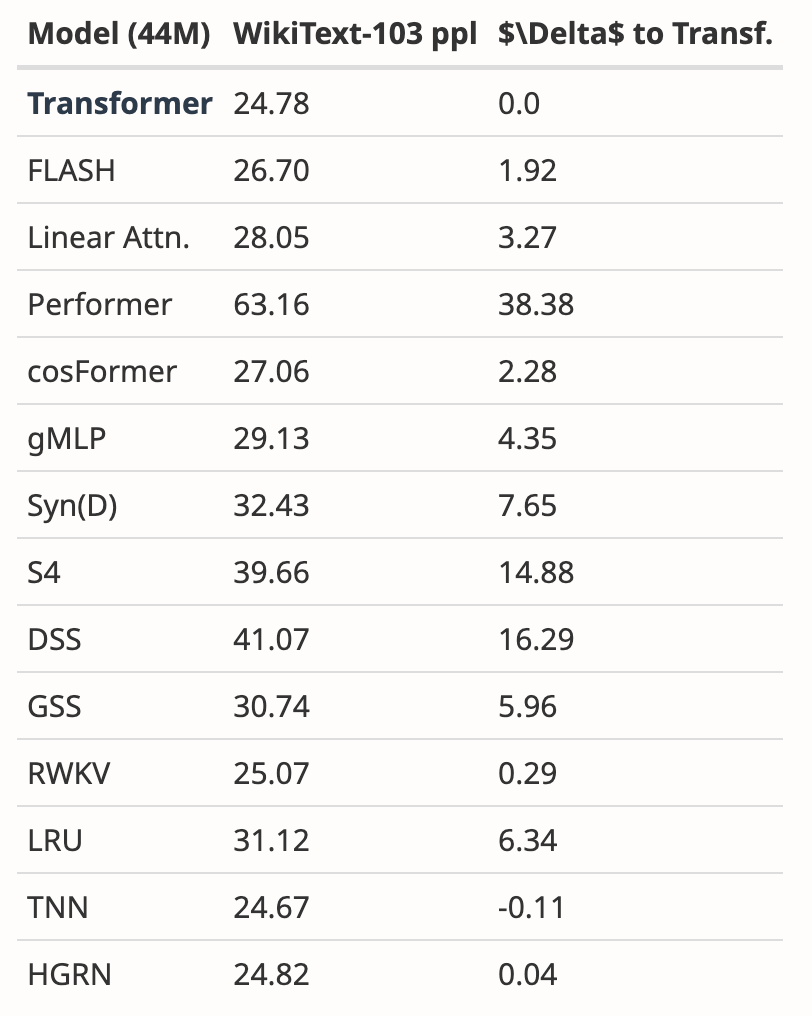

- Mamba's motivation is to address the shortcomings of recurrent models while improving on the efficiency of attention-based models. There are many studies in this direction: S4-diagonal [1], SGConv [2], MEGA [3], SPADE [4], and many efficient Transformer models (e.g. [5]). All of these models achieve near-linear complexity, and the authors need to compare Mamba with them in terms of model performance and efficiency. For model performance, some simple experiments (such as language modeling on WikiText-103) are enough.

- Many attention-based Transformer models show length generalization ability, that is, the model can be trained on shorter sequences and tested on longer ones. Examples include relative position encoding (T5) and Alibi [6]. Since SSMs are generally continuous, does Mamba have this length generalization ability?

Thoughts on the experiment:

- The authors need to compare against a stronger baseline. The authors state that H3 motivated the model architecture, yet they did not compare with H3 in the experiments. According to Table 4 in [7], on the Pile dataset, the perplexities of H3 are 8.8 (125M), 7.1 (355M), and 6.0 (1.3B), which are significantly better than Mamba's. The authors need to show a comparison with H3.

- For the pretrained models, the authors only show zero-shot inference results. This setup is rather limited, and the results do not support Mamba's effectiveness well. I recommend that the authors conduct more experiments with long sequences, such as document summarization, where the input sequences are naturally very long (e.g., the average sequence length of the arXiv dataset is >8k).

- The authors claim that one of their main contributions is long sequence modeling. They should compare with more baselines on LRA (Long Range Arena), which is basically the standard benchmark for long sequence understanding.

- Missing memory benchmark. Although Section 4.5 is titled “Speed and Memory Benchmarks,” only speed comparisons are presented. In addition, the authors should provide more detailed settings on the left side of Figure 8, such as model layers, model size, convolution details, etc. Can the authors provide some intuition as to why FlashAttention is slowest when the sequence length is very large (Figure 8 left)?

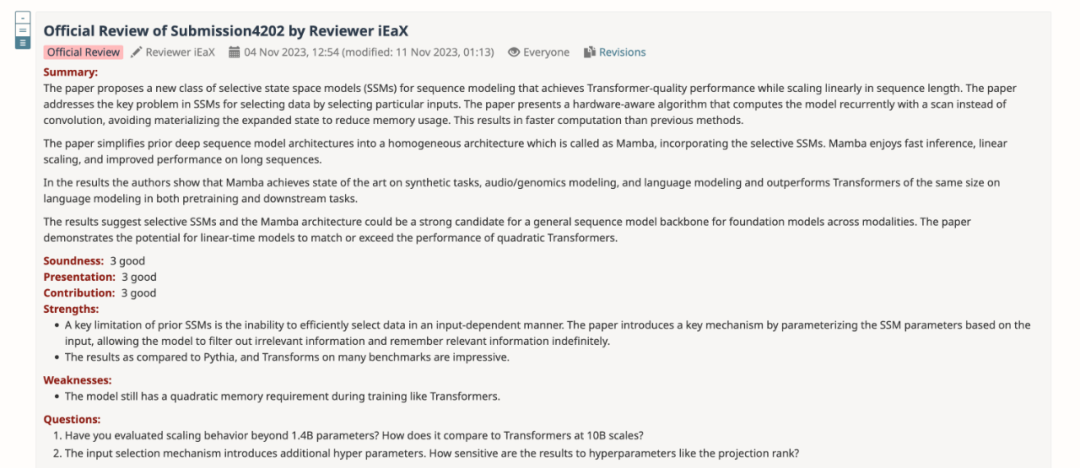

Another reviewer also pointed out a shortcoming of Mamba: like Transformers, the model still has quadratic memory requirements during training.

Author: Revised, please review

After summarizing the opinions of all reviewers, the author team revised the paper and added new experimental results and analysis:

- Added the evaluation results of the H3 model

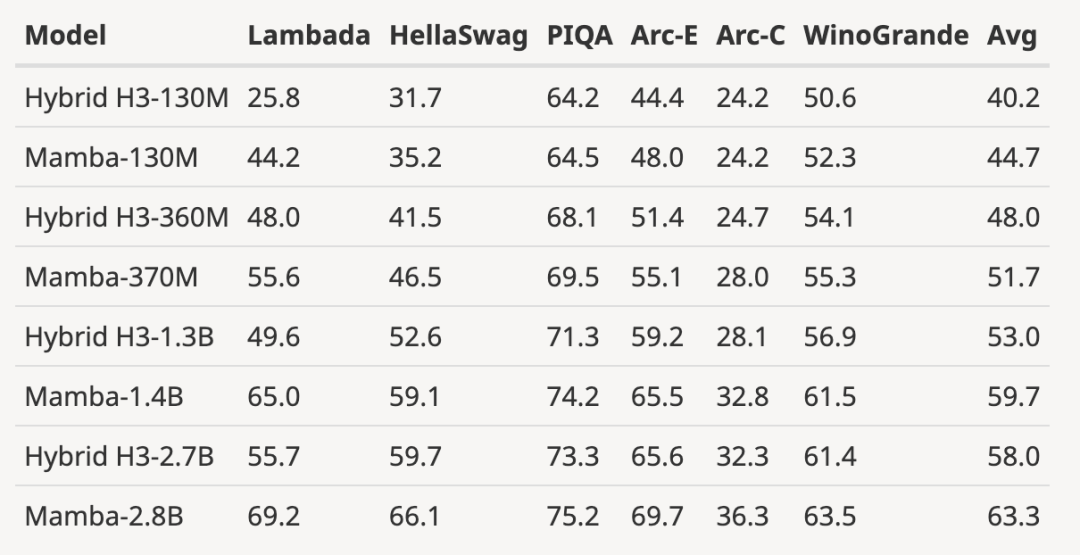

The authors downloaded pretrained H3 models ranging from 125M to 2.7B parameters and ran a series of evaluations. Mamba is significantly better on all language evaluations. Notably, these H3 models are hybrids that use quadratic attention, while the authors' pure model, which uses only linear-time Mamba layers, is significantly better on every metric.

The evaluation comparison with the pre-trained H3 model is as follows:

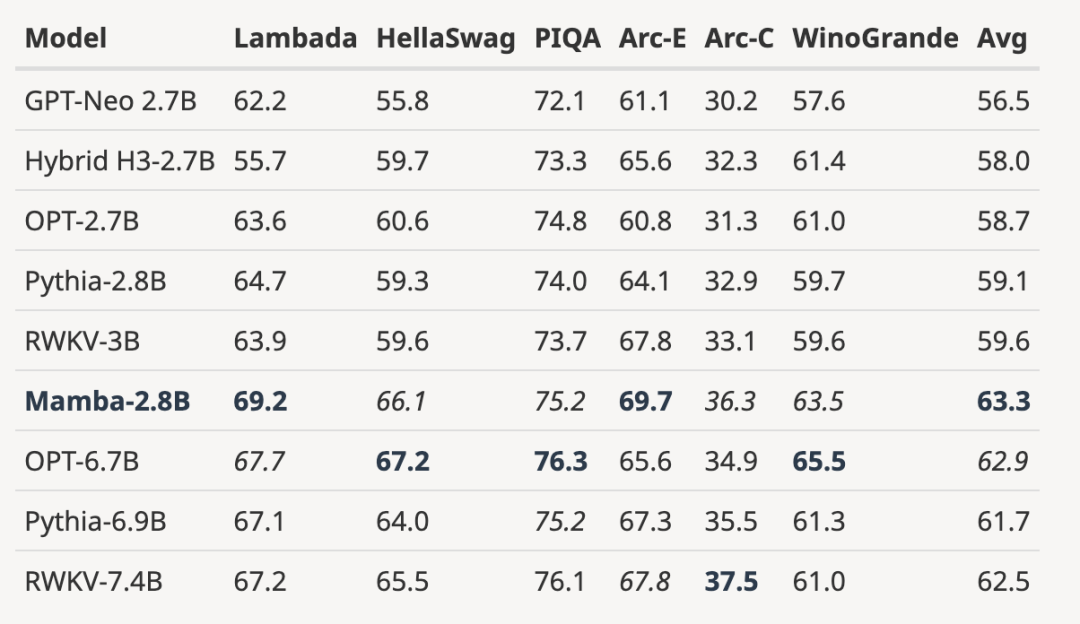

- Scaled the fully trained models up to larger sizes

As shown in the figure, Mamba is superior on every evaluation to open-source 3B models trained on the same number of tokens (300B). It is even comparable to 7B-scale models: when Mamba (2.8B) is compared with OPT, Pythia, and RWKV (7B), it achieves the best average score and the best or second-best score on every benchmark.

- Showed length extrapolation results beyond the training length

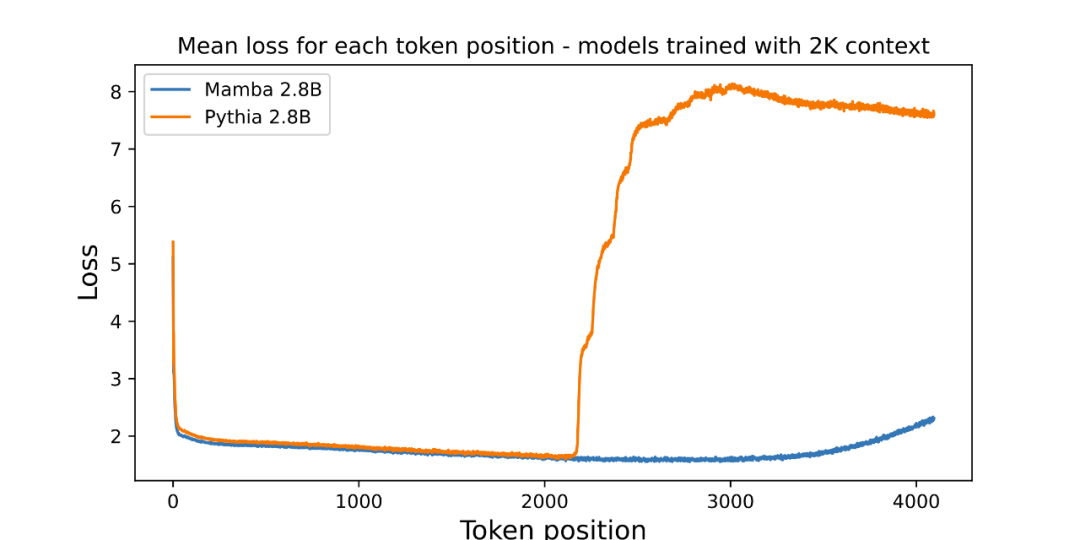

The authors attached a figure evaluating the length extrapolation of pretrained 3B-parameter language models:

The figure plots the average loss (log perplexity) per position. The perplexity of the first token is high because it has no context, and the perplexity of both Mamba and the baseline Transformer (Pythia) improves up to the training context length (2048). Interestingly, Mamba's perplexity keeps improving significantly beyond its training context, up to a length of around 3000.
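For readers unfamiliar with this kind of plot, the sketch below shows one way the per-position curve could be computed, assuming the per-token negative log-likelihoods have already been collected for a set of evaluation sequences. The function names and the toy numbers are hypothetical and are not taken from the paper or the rebuttal. Perplexity is simply the exponential of the average loss, which is why the two terms are used interchangeably here.

```python
import math


def per_position_loss(nll_by_sequence):
    """Average negative log-likelihood at each position across sequences.

    nll_by_sequence: list of lists, one inner list of per-token losses per
    sequence. Sequences are truncated to the shortest one so every position
    is averaged over the same number of sequences.
    """
    length = min(len(seq) for seq in nll_by_sequence)
    count = len(nll_by_sequence)
    return [sum(seq[t] for seq in nll_by_sequence) / count for t in range(length)]


def perplexity(loss):
    """Perplexity is the exponential of the average loss (in nats)."""
    return math.exp(loss)


if __name__ == "__main__":
    # Made-up losses for two short sequences, purely for illustration.
    toy_losses = [[6.0, 3.0, 2.5], [7.0, 3.5, 2.0]]
    curve = per_position_loss(toy_losses)
    for position, loss in enumerate(curve):
        print(position, loss, perplexity(loss))
```

In a plot like the one described, a curve that keeps falling past position 2048 would indicate that the model still benefits from context longer than anything it saw in training.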

The author emphasizes that length extrapolation is not a direct motivation for the model in this article, but treats it as an additional feature:

- The baseline model here (Pythia) was not trained with length extrapolation in mind, and there may be other Transformer variants that generalize better (such as those using T5 or Alibi relative position encodings).

- No open-source 3B models trained on the Pile with relative position encoding were found, so this comparison could not be made.

- Mamba, like Pythia, was not trained with length extrapolation in mind, so the two are comparable. Just as Transformers have many techniques (such as different positional embeddings) to improve capabilities like length generalization, it would be interesting for future work to derive SSM-specific techniques for similar capabilities.

- Added new results from WikiText-103

The authors collected results from multiple papers, which show that Mamba performs significantly better on WikiText-103 than more than 20 other recent sub-quadratic sequence models.

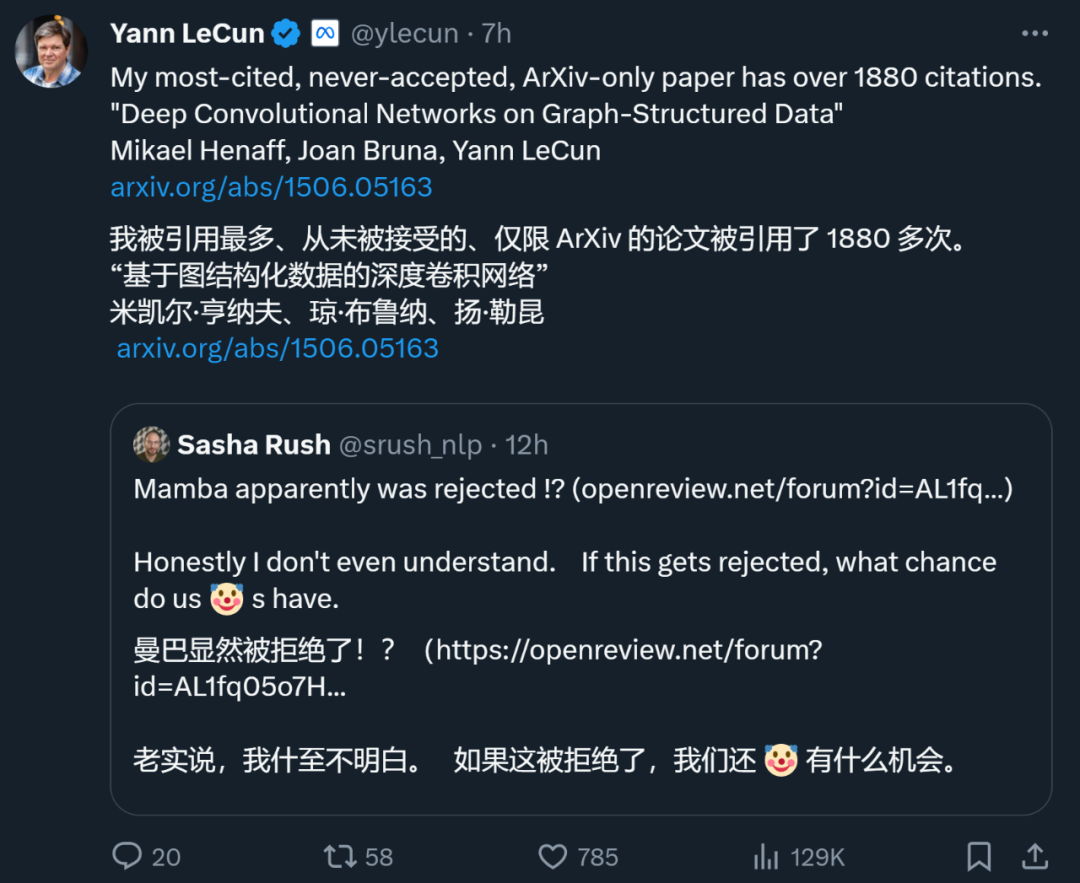

At major AI conferences, the explosion in the number of submissions is a headache, and reviewers with limited time and energy will inevitably make mistakes. This has led to the rejection of many now-famous papers, including YOLO, Transformer-XL, Dropout, support vector machines (SVM), knowledge distillation, SIFT, and PageRank, the webpage ranking algorithm behind Google's search engine (see: "The famous YOLO and PageRank influential research was rejected by the top CS conference").

Even Yann LeCun, one of the three giants of deep learning, is a prolific author whose papers are often rejected. He recently tweeted that his paper "Deep Convolutional Networks on Graph-Structured Data", which has been cited 1887 times, was also rejected by a top conference. At ICML 2022, he even "submitted three papers and had all three rejected".

So a rejection from a top conference does not mean a paper has no value. Many of the rejected papers mentioned above were submitted to other conferences and eventually accepted. Netizens have therefore suggested that Mamba switch to COLM, which was established by young scholars such as Chen Danqi. COLM is an academic venue dedicated to language modeling research, focused on understanding, improving, and commenting on the development of language model technology, and may be a better fit for papers like Mamba's.

Regardless of whether Mamba is ultimately accepted by ICLR, it has become an influential work. It has given the community hope of breaking free of the Transformer's shackles and injected new vitality into the exploration of architectures beyond the traditional Transformer.
