Example analysis of Redis caching problem

1. Application of Redis cache

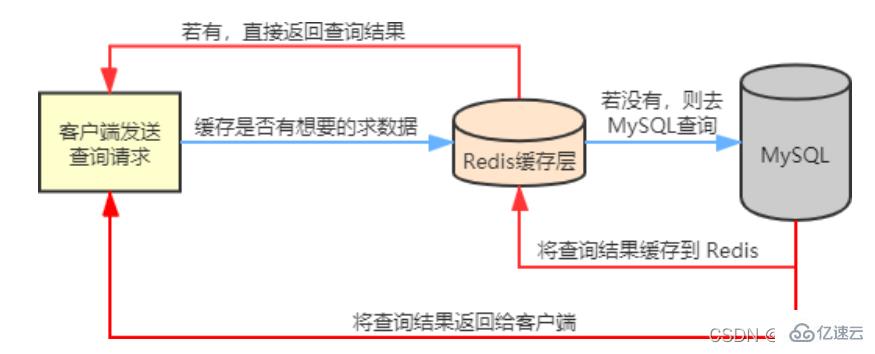

In real business scenarios, Redis is generally used together with another database, such as a relational database like MySQL, to reduce the pressure on the back-end database.

Redis caches data that is frequently queried in MySQL, such as hotspot data, so that user requests do not need to go to MySQL. Instead, the cached data is read directly from Redis, which reduces the read pressure on the back-end database.

If the data a user queries is not in Redis, the request is forwarded to the MySQL database. When MySQL returns the data to the client, the data is also cached in Redis so that the next read can be served directly from Redis. This flow is illustrated in the figure above.

When using Redis as a cache database, we will inevitably face three common caching problems:

Cache Penetration

Cache Breakdown

Cache Avalanche

2. Cache Penetration

2.1 Introduction

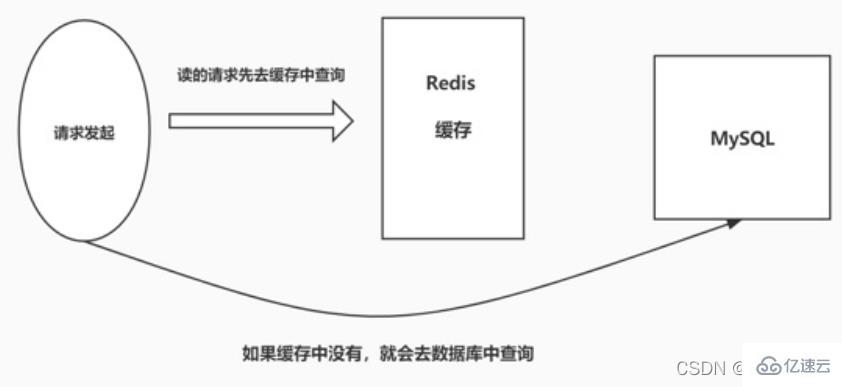

Cache penetration means that when a user queries some data, the data does not exist in Redis, that is, the cache misses. The query is then forwarded to the persistence-layer database, MySQL, which does not have the data either, so MySQL can only return an empty object, indicating that the query failed. If there are many such requests, or if users send them deliberately as a malicious attack, they put great pressure on the MySQL database and may even bring it down. This phenomenon is called cache penetration.

2.2 Solution

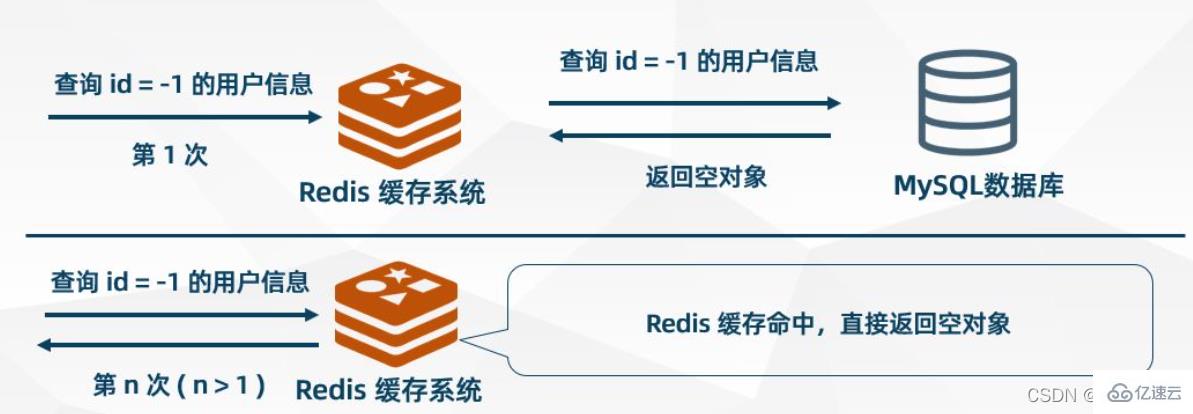

Cache empty objects

When MySQL returns an empty object, Redis caches that object and sets an expiration time for it. When the user sends the same request again, the empty object is returned from the cache. The request is stopped at the cache layer, which protects the back-end database. This approach has a drawback, however: although the request never reaches MySQL, the cached empty objects occupy Redis cache space.

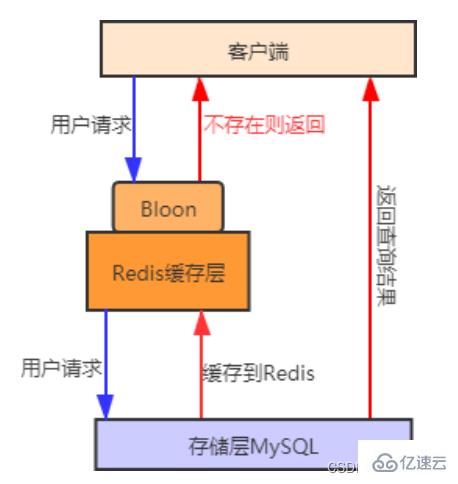

Bloom filter

First, store the keys of all hotspot data that users may access in the Bloom filter (this is also called cache preheating). When a user makes a request, it first passes through the Bloom filter, which determines whether the requested key exists. If the key does not exist, the request is rejected directly. Otherwise the query continues: it goes to the cache first and, if the cache does not have the data, then to the database. Compared with the first method, the Bloom filter is more efficient and practical. The process is illustrated in the figure above.

Cache preheating is the process of loading relevant data into the Redis cache system in advance, before the system starts. This avoids loading the data only when a user requests it.

2.3 Comparison of solutions

Both solutions can solve the problem of cache penetration, but their usage scenarios are different:

Cache empty objects: suitable for scenarios where the number of keys for empty data is limited and the probability of repeated key requests is high.

Bloom filter: suitable for scenarios where the keys for empty data vary widely and the probability of repeated key requests is low.

3. Cache breakdown

3.1 Introduction

Cache breakdown means that the data queried by the user does not exist in the cache but does exist in the back-end database. This is generally caused by the key expiring in the cache. For example, a hot key receives a large number of concurrent accesses all the time. If the key suddenly expires at some moment, all of those concurrent requests go to the back-end database, and its load spikes instantly. This phenomenon is called cache breakdown.

3.2 Solution

Change the expiration time

Set hotspot data to never expire.

Distributed lock

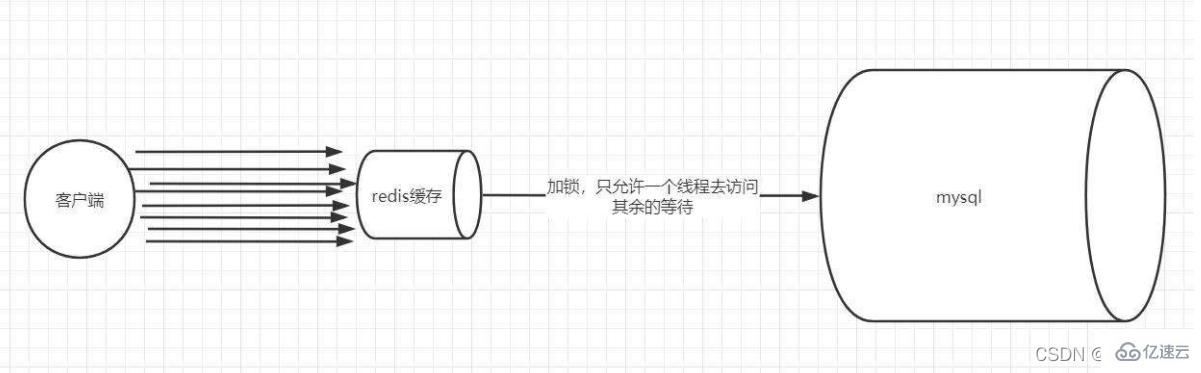

Use a distributed lock to redesign how the cache is used. The process is as follows:

Locking: When we query data by key, we first query the cache. On a miss, we acquire a distributed lock. The first process to obtain the lock queries the back-end database and caches the result in Redis.

Unlocking: When other processes find that the lock is held by another process, they wait. After the lock is released, they read the cached key in turn.

Never expires: Because no real expiration time is set, this solution avoids the hazards caused by hot keys expiring, but the cached data can become inconsistent with the database, and the code complexity increases.

Mutex lock: This solution is relatively simple, but it has hidden dangers. If rebuilding the cache fails or takes a long time, there is a risk of deadlock and thread pool blocking. However, this method reduces the back-end storage load well and achieves better consistency.
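The locking and unlocking steps can be sketched with threads in a single process. This is only an approximation: `threading.Lock` is a stand-in for a distributed lock, the dictionaries stand in for Redis and MySQL, and the 50 ms sleep is an assumed database latency. The key idea is that a waiting request re-checks the cache after acquiring the lock, so only the first one queries the database.

```python
import threading
import time
from typing import Optional

# Hypothetical stand-ins for Redis and MySQL.
cache: dict[str, str] = {}
database = {"hot:item": "payload"}
db_reads = 0
rebuild_lock = threading.Lock()  # stand-in for a distributed lock


def query_database(key: str) -> Optional[str]:
    global db_reads
    db_reads += 1        # only ever called while holding rebuild_lock
    time.sleep(0.05)     # simulate a slow back-end query
    return database.get(key)


def get(key: str) -> Optional[str]:
    value = cache.get(key)
    if value is not None:
        return value                 # cache hit: no lock needed
    with rebuild_lock:               # other requests wait here
        value = cache.get(key)       # re-check: another request may have filled it
        if value is None:
            value = query_database(key)
            if value is not None:
                cache[key] = value
    return value


results: list = []


def worker() -> None:
    results.append(get("hot:item"))


# Twenty concurrent requests arrive while the hot key is absent from the cache.
threads = [threading.Thread(target=worker) for _ in range(20)]
for t in threads:
    t.start()
for t in threads:
    t.join()
```

All twenty requests receive the value, but the database is queried once. A real distributed lock would additionally need an expiry so that a crashed lock holder cannot block the others indefinitely, which is the deadlock risk noted above.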

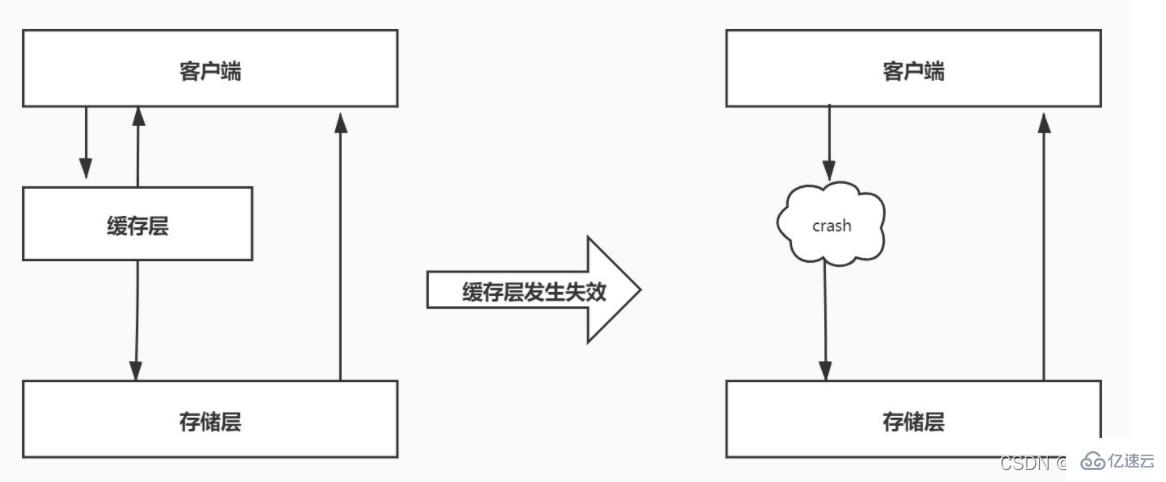

4. Cache avalanche

4.1 Introduction

Cache avalanche means that a large number of keys in the cache expire at the same time while the volume of requests for that data is very large. This causes a sudden increase in pressure on the back-end database and may even make it crash. This phenomenon is called a cache avalanche. It differs from cache breakdown: cache breakdown occurs when a single hot key suddenly expires under particularly high concurrency, while a cache avalanche occurs when a large number of keys expire at the same time, so the two are not of the same order of magnitude at all.

4.2 Solution

Handling expiration

To reduce the breakdown and avalanche problems caused by a large number of keys expiring at the same time, hotspot data can be set to never expire, as in the cache breakdown solution. In addition, to prevent keys from expiring at the same moment, you can set a random expiration time for each of them.
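Randomizing expiration times can be as simple as adding a random offset to a base lifetime. The sketch below assumes a one-hour base and up to five minutes of jitter; both values and the function name `ttl_with_jitter` are illustrative.

```python
import random

BASE_TTL_SECONDS = 3600    # assumed base lifetime for a cached key
MAX_JITTER_SECONDS = 300   # assumed maximum random offset added per key


def ttl_with_jitter(rng: random.Random) -> int:
    """Return a lifetime spread over [base, base + jitter] seconds."""
    return BASE_TTL_SECONDS + rng.randint(0, MAX_JITTER_SECONDS)


rng = random.Random(42)  # seeded only to make the example repeatable
ttls = [ttl_with_jitter(rng) for _ in range(1000)]
```

Keys written at the same moment then expire at different times within a five-minute window instead of all at once, which spreads the reload traffic on the database.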

Redis high availability

A single Redis instance may go down during an avalanche, so you can add more Redis instances and build a cluster. If one instance goes down, the others continue to work.

