A review of visual methods for trajectory prediction

A recent review paper, "Trajectory-Prediction With Vision: A Survey", comes from Motional, the joint venture between Hyundai and Aptiv. It references the earlier review article "Vision-based Intention and Trajectory Prediction in Autonomous Vehicles: A Survey" from the University of Oxford.

The prediction task divides into two parts. 1) Intention: a classification task over a predefined set of intention classes for the agent. It is usually treated as a supervised learning problem, so each agent's intention class must be labeled. 2) Trajectory: predicting a set of possible positions of the agent over the following future frames, called waypoints. This requires modeling the interactions between agents, and between agents and the road.

Previous behavior prediction models fall into three categories: physics-based, maneuver-based and interaction-aware models. Physics-based models use the dynamic equations of a physical model to hand-design controllable motion for each type of agent. They cannot model the latent state of the whole scene and usually focus on a single agent. Even so, before the deep learning era this approach was state of the art (SOTA). Maneuver-based models predict from the type of maneuver the agent is expected to perform. Interaction-aware models are typically machine-learning-based systems that reason pairwise over every agent in the scene and generate interaction-aware predictions for all dynamic agents. Because the targets of nearby agents in a scene are highly correlated, attention modules that model complex agent trajectories can generalize better.
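The article does not name a specific dynamic equation for physics-based models. As one common illustration (an assumption, not the method of the surveyed papers), the sketch below rolls out a constant turn rate and velocity (CTRV) model for a single agent; the function name `ctrv_rollout` and its parameters are hypothetical.

```python
import math


def ctrv_rollout(x, y, yaw, v, yaw_rate, dt, steps):
    """Propagate one agent with a constant turn rate and velocity (CTRV) model.

    Returns a list of (x, y) waypoints, one per time step of length dt.
    """
    waypoints = []
    for _ in range(steps):
        if abs(yaw_rate) < 1e-6:
            # Near-zero turn rate: CTRV degenerates to constant-velocity motion.
            x += v * math.cos(yaw) * dt
            y += v * math.sin(yaw) * dt
        else:
            # Exact integration along a circular arc of radius v / yaw_rate.
            new_yaw = yaw + yaw_rate * dt
            x += v / yaw_rate * (math.sin(new_yaw) - math.sin(yaw))
            y += v / yaw_rate * (math.cos(yaw) - math.cos(new_yaw))
            yaw = new_yaw
        waypoints.append((x, y))
    return waypoints
```

The rollout depends only on the agent's own state, which illustrates why such models cannot capture interactions with other agents.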

Future behavior can be predicted implicitly, as a future action or event, or explicitly, as a future trajectory. An agent's intentions may be influenced by: a) the agent's own beliefs or desires, which are usually unobserved and therefore hard to model; b) social interactions, which can be modeled in different ways, e.g. pooling, graph neural networks or attention; c) environmental constraints such as the road layout, which can be encoded through high-definition (HD) maps; d) contextual information in the form of RGB image frames, lidar point clouds, optical flow, segmentation maps, etc. Trajectory prediction, by contrast, is the harder problem: unlike intent recognition, which is a classification problem, it is a regression problem over continuous values.
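This minimal sketch makes the classification-versus-regression distinction concrete. It assumes a softmax cross-entropy loss for intention and average/final displacement error (ADE/FDE) for trajectories. These are common choices, not ones the article prescribes, and the function names are illustrative.

```python
import math


def intention_cross_entropy(logits, label):
    """Classification: negative log-likelihood of the true intention class."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


def average_displacement_error(pred, gt):
    """Regression: mean Euclidean distance between predicted and true waypoints."""
    assert len(pred) == len(gt)
    return sum(math.dist(p, g) for p, g in zip(pred, gt)) / len(gt)


def final_displacement_error(pred, gt):
    """Regression: Euclidean distance at the last predicted waypoint."""
    return math.dist(pred[-1], gt[-1])
```

For example, with hypothetical classes (go straight, turn left, turn right), logits `[2.0, 0.5, -1.0]` and true class 0, the cross-entropy is about 0.241. A trajectory error instead grows continuously with how far each waypoint misses.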

Both trajectory and intention prediction must be interaction-aware. For example, when a vehicle tries to merge aggressively onto a highway with heavy traffic, it is reasonable to assume that a passing vehicle may brake hard. Trajectory prediction is best modeled in BEV space, although it can also be done in the image view (also called the perspective view). In BEV, regions of interest (RoIs) can be assigned as a grid covering a dedicated distance range, whereas in the image view the vanishing line of perspective means the RoI can, in theory, extend infinitely. BEV space is also better suited to modeling occlusion because motion in it is closer to linear. Given pose estimation (the translation and rotation of the ego vehicle), ego-motion compensation is simple. In addition, BEV preserves the motion and scale of agents: a surrounding vehicle occupies the same number of BEV pixels no matter how far it is from the ego vehicle, which is not true in the image view. To predict the future, a model needs an understanding of the past. This usually comes from tracking, or from aggregated historical BEV features.
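In BEV, ego-motion compensation amounts to a 2D rigid transform. This minimal sketch assumes each ego pose is given as world-frame (x, y, yaw). It also assumes each past detection is expressed in the ego frame of its own timestamp. The helper names are hypothetical.

```python
import math


def to_world(point, pose):
    """Map a point from an ego frame with world pose (x, y, yaw) into the world frame."""
    px, py = point
    x, y, yaw = pose
    c, s = math.cos(yaw), math.sin(yaw)
    return (x + c * px - s * py, y + s * px + c * py)


def to_ego(point, pose):
    """Inverse of to_world: express a world-frame point in the ego frame."""
    wx, wy = point
    x, y, yaw = pose
    dx, dy = wx - x, wy - y
    c, s = math.cos(yaw), math.sin(yaw)
    return (c * dx + s * dy, -s * dx + c * dy)


def compensate_ego_motion(history, poses, current_pose):
    """Re-express each past detection (in its own frame's ego coordinates)
    in the current ego frame, removing the ego vehicle's own motion."""
    return [to_ego(to_world(p, pose), current_pose) for p, pose in zip(history, poses)]
```

For example, if the ego vehicle drives 5 m forward, a static object seen 10 m ahead in the previous frame should appear 5 m ahead after compensation.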

The following figure is a block diagram of some of the components and the data flow of a prediction model:

The following table summarizes the prediction models:

The discussion below covers prediction models in terms of their inputs and outputs:

1) Tracklets: The perception module predicts the current state of all dynamic agents, including the 3D center, dimensions, velocity, acceleration and other attributes. A tracker uses this data to establish temporal associations and preserve the state history of every agent, so each tracklet represents an agent's past motion. This form of prediction model is the simplest, since its input consists only of sparse trajectories. A good tracker can keep tracking an agent even when it is occluded in the current frame. Because traditional trackers are not machine-learning-based, building an end-to-end model on top of them is very difficult.
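This minimal sketch shows how a tracker turns per-frame detections into tracklets. It assumes 2D center points and greedy nearest-neighbor association with a distance gate. Real trackers typically use motion models (e.g. Kalman filters) with optimal assignment, and can coast tracks through occlusion. `GreedyTracker` and its `gate` parameter are hypothetical.

```python
import math


class GreedyTracker:
    """Toy tracker: greedy nearest-neighbor association with a distance gate."""

    def __init__(self, gate=2.0):
        self.gate = gate      # max center distance (m) for a match; assumed value
        self.tracklets = {}   # track id -> list of (x, y) centers, oldest first
        self._next_id = 0

    def update(self, detections):
        unmatched = list(range(len(detections)))
        # Collect all (distance, track id, detection index) pairs inside the gate.
        pairs = []
        for tid, history in self.tracklets.items():
            for di, det in enumerate(detections):
                d = math.dist(history[-1], det)
                if d <= self.gate:
                    pairs.append((d, tid, di))
        # Greedily accept the closest pairs first; each track/detection used once.
        used_tracks = set()
        for _, tid, di in sorted(pairs):
            if tid in used_tracks or di not in unmatched:
                continue
            self.tracklets[tid].append(detections[di])
            used_tracks.add(tid)
            unmatched.remove(di)
        # Unmatched detections start new tracklets. Unmatched tracks are kept
        # unchanged here (a real tracker would coast them through occlusion).
        for di in unmatched:
            self.tracklets[self._next_id] = [detections[di]]
            self._next_id += 1
        return self.tracklets
```

Each list in `tracklets` is the sparse past trajectory that a tracklet-based predictor consumes.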

2) Raw sensor data: This is an end-to-end approach. The model takes raw sensor data as input and directly predicts the trajectory of each agent in the scene. It may or may not use auxiliary outputs, with their own losses, to supervise the complex training. The drawback of this approach is that the input is information-dense and computationally expensive. Because it merges three problems (perception, tracking and prediction), such a model is hard to develop and even harder to make converge.

3) Camera vs. BEV: BEV methods process data from a top-view map, while camera-based prediction algorithms perceive the world from the ego vehicle's viewpoint. The latter is usually more challenging, for several reasons. First, BEV perception offers a wider field of view and richer information for prediction. The camera's field of view is shorter, which limits the prediction range because the car cannot plan beyond what it can see. Cameras are also more prone to occlusion, so BEV methods face fewer "partial observability" challenges than camera-based methods. Second, unless lidar data is available, monocular vision makes it hard for the algorithm to infer the depth of the agent in question, which is an important cue for predicting its behavior. Finally, the camera itself moves, so the model must handle both the agent's motion and the ego vehicle's motion, unlike a static BEV. A word of caution: BEV representations still suffer from accumulated errors. And although the camera view poses inherent challenges, it is still more practical than BEV, because cars rarely have access to a camera that shows a bird's-eye view of the road and the positions of the agents of interest.
The conclusion is that a prediction system should be able to see the world from the ego vehicle's perspective, including lidar and/or stereo cameras, whose data can help it perceive the world in 3D. Another important point: whenever the position of an agent of interest must be predicted, it is better to use its bounding-box position rather than only its center point. Bounding-box coordinates implicitly capture changes in the relative distance between the vehicle and the pedestrian, as well as the camera's ego-motion. In other words, as the agent approaches the ego vehicle, its bounding box grows, providing an additional (albeit rough) estimate of depth.
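The depth cue from a growing bounding box follows from the pinhole camera model. An object of real height H at depth Z projects to roughly h = f * H / Z pixels, so Z is approximately f * H / h. This minimal sketch assumes a known focal length in pixels and an assumed real-world object height; the function name and inputs are hypothetical.

```python
def depth_from_bbox(bbox, focal_px, object_height_m):
    """Rough depth (m) from bounding-box height under a pinhole model: Z = f * H / h.

    bbox is (x1, y1, x2, y2) in pixels; object_height_m is an assumed prior
    (e.g. a typical pedestrian height), which is why the estimate is only rough.
    """
    x1, y1, x2, y2 = bbox
    h_px = y2 - y1
    if h_px <= 0:
        raise ValueError("bounding box must have positive height")
    return focal_px * object_height_m / h_px
```

With f = 1000 px and an assumed 1.7 m pedestrian, a 100 px box gives about 17 m, and the same pedestrian at 200 px gives about 8.5 m. The box doubling in height signals that the pedestrian has halved its distance.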

4) Ego-motion prediction: Some methods model the ego vehicle's own motion to produce more accurate trajectories. Other approaches use deep networks or dynamical models to model the motion of the agent of interest. They exploit additional quantities computed from the dataset inputs, such as poses, optical flow, semantic maps and heat maps.
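A kinematic bicycle model is one example of a dynamical model for the ego vehicle. It rolls the ego state forward from acceleration and steering inputs. This is an assumption: the article does not say which model is used, and the function and parameter names below are illustrative.

```python
import math


def bicycle_rollout(x, y, yaw, v, accel, steer, wheelbase, dt, steps):
    """Kinematic bicycle model (rear-axle reference), forward-Euler integrated.

    Returns a list of (x, y, yaw, v) ego states, one per step.
    """
    states = []
    for _ in range(steps):
        x += v * math.cos(yaw) * dt
        y += v * math.sin(yaw) * dt
        yaw += v / wheelbase * math.tan(steer) * dt
        v = max(0.0, v + accel * dt)  # this sketch does not allow reversing
        states.append((x, y, yaw, v))
    return states
```

Feeding the predicted ego states into the agent predictor is one way to separate ego motion from agent motion in a moving camera view.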

5) Temporal encoding: The driving environment is dynamic and contains many active agents. A better prediction system must therefore encode each agent's temporal dimension, connecting what happened in the past with what will happen in the future through what is happening now. Knowing where an agent came from helps guess where it might go next. Most camera-based models handle shorter time horizons; predicting over longer horizons requires a more complex model structure.
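This minimal sketch illustrates temporal encoding. It assumes past positions are converted to per-step displacements and fed through a plain recurrent cell, h_t = tanh(W_x x_t + W_h h_{t-1} + b). The weights are random and untrained, and `TinyRNNEncoder` is hypothetical. Real systems typically use trained LSTMs, GRUs, temporal convolutions or Transformers.

```python
import math
import random


def displacements(track):
    """Convert absolute positions into per-step offsets, a common temporal input."""
    return [(x1 - x0, y1 - y0) for (x0, y0), (x1, y1) in zip(track, track[1:])]


class TinyRNNEncoder:
    """Elman RNN: h_t = tanh(W_x x_t + W_h h_{t-1} + b), with random untrained weights."""

    def __init__(self, input_dim=2, hidden_dim=8, seed=0):
        rng = random.Random(seed)
        self.hidden_dim = hidden_dim
        self.W_x = [[rng.uniform(-0.5, 0.5) for _ in range(input_dim)] for _ in range(hidden_dim)]
        self.W_h = [[rng.uniform(-0.5, 0.5) for _ in range(hidden_dim)] for _ in range(hidden_dim)]
        self.b = [0.0] * hidden_dim

    def encode(self, sequence):
        """Fold the whole history into one fixed-size hidden state."""
        h = [0.0] * self.hidden_dim
        for x in sequence:
            # The comprehension reads the previous h; it is replaced only afterwards.
            h = [
                math.tanh(
                    sum(w * xi for w, xi in zip(self.W_x[i], x))
                    + sum(w * hj for w, hj in zip(self.W_h[i], h))
                    + self.b[i]
                )
                for i in range(self.hidden_dim)
            ]
        return h
```

The final hidden state summarizes "where the agent came from" and would be passed on to a decoder or to the social encoding stage.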

6) Social encoding: To address the "multi-agent" challenge, most of the best-performing algorithms use some type of graph neural network (GNN) to encode social interactions between agents. Most methods encode the temporal and social dimensions separately, either temporal first and then social, or in the reverse order; one Transformer-based model encodes both dimensions simultaneously.
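This simplified sketch shows one round of GNN-style message passing over a radius graph. It assumes mean aggregation and a fixed neighborhood radius. A real GNN would apply learned weights and a nonlinearity after aggregation; both are omitted here, and the function name is hypothetical.

```python
import math


def social_message_passing(features, positions, radius=10.0):
    """One round of mean-aggregation message passing over a radius graph.

    Each agent's output is its own feature vector concatenated with the
    mean feature vector of all other agents within `radius` meters.
    """
    updated = []
    for i, (fi, pi) in enumerate(zip(features, positions)):
        neighbors = [
            features[j]
            for j, pj in enumerate(positions)
            if j != i and math.dist(pi, pj) <= radius
        ]
        if neighbors:
            agg = [sum(col) / len(neighbors) for col in zip(*neighbors)]
        else:
            agg = [0.0] * len(fi)  # isolated agent: empty message
        updated.append(list(fi) + agg)
    return updated
```

The per-agent features would typically be the temporal encodings from the previous step, which corresponds to the "temporal first, then social" ordering.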

7) Goal-conditioned prediction: Like scene context, behavioral intention is usually influenced by different intended goals, which should be inferred with an explanation. For future predictions conditioned on intended goals, the goal is modeled either as a future state (defined as destination coordinates) or as the type of motion the agent intends. Research in neuroscience and computer vision shows that people are usually goal-oriented agents. Moreover, when making decisions, people follow a series of successive levels of reasoning that ultimately produce a short- or long-term plan. On this basis, the problem can be split into two categories. The first is epistemic, answering where the agent is going; the second is aleatoric, answering how the agent will reach its intended goal.
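The two-stage decomposition (first where, then how) can be sketched as goal selection followed by path generation. Assumptions: the candidate goals are given, and they are scored by a hypothetical heading-alignment heuristic standing in for a learned goal predictor. The path is a simple straight-line interpolation.

```python
import math


def heading_score(start, heading, goal):
    """Hypothetical goal score: cosine between the agent's heading and the goal direction."""
    dx, dy = goal[0] - start[0], goal[1] - start[1]
    norm = math.hypot(dx, dy)
    if norm == 0:
        return 1.0
    return (dx * math.cos(heading) + dy * math.sin(heading)) / norm


def pick_goal(start, heading, candidates):
    """Stage 1 (where): choose the highest-scoring candidate destination."""
    return max(candidates, key=lambda g: heading_score(start, heading, g))


def path_to_goal(start, goal, steps):
    """Stage 2 (how): evenly spaced straight-line waypoints ending at the goal."""
    (x0, y0), (xg, yg) = start, goal
    return [(x0 + (xg - x0) * k / steps, y0 + (yg - y0) * k / steps) for k in range(1, steps + 1)]
```

In learned systems both stages are typically networks, and the second stage often produces several paths per goal, which is where the aleatoric uncertainty lives.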

8) Multimodal prediction: Because the road environment is stochastic, one past trajectory can unfold into several different future trajectories. A practical prediction system that addresses this "stochasticity" challenge must therefore model uncertainty. Although there are methods that model discrete variables in a latent space, multimodality has so far been applied only to trajectories, and its potential in intention prediction has yet to be fully shown. Attention mechanisms can be used to compute the weights.
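Multimodal predictors usually output K candidate trajectories, each with a probability, and are commonly evaluated with minADE_K/minFDE_K, the error of the best of the K modes. This minimal sketch assumes mode probabilities come from a softmax over per-mode logits; the function names are illustrative.

```python
import math


def softmax(logits):
    """Turn per-mode scores into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def ade(pred, gt):
    """Average displacement error of one predicted trajectory."""
    return sum(math.dist(p, g) for p, g in zip(pred, gt)) / len(gt)


def min_ade_fde(modes, gt):
    """minADE_K / minFDE_K: error of the best of the K predicted modes."""
    min_ade = min(ade(m, gt) for m in modes)
    min_fde = min(math.dist(m[-1], gt[-1]) for m in modes)
    return min_ade, min_fde


def most_likely_mode(modes, logits):
    """Return the single trajectory with the highest predicted probability."""
    probs = softmax(logits)
    return modes[max(range(len(modes)), key=lambda k: probs[k])]
```

Scoring only the best mode rewards covering several plausible futures rather than averaging them into one implausible path.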

The above is the detailed content of A review of visual methods for trajectory prediction. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

Smart App Control on Windows 11: How to turn it on or off

Jun 06, 2023 pm 11:10 PM

Smart App Control on Windows 11: How to turn it on or off

Jun 06, 2023 pm 11:10 PM

Intelligent App Control is a very useful tool in Windows 11 that helps protect your PC from unauthorized apps that can damage your data, such as ransomware or spyware. This article explains what Smart App Control is, how it works, and how to turn it on or off in Windows 11. What is Smart App Control in Windows 11? Smart App Control (SAC) is a new security feature introduced in the Windows 1122H2 update. It works with Microsoft Defender or third-party antivirus software to block potentially unnecessary apps that can slow down your device, display unexpected ads, or perform other unexpected actions. Smart application

Beyond ORB-SLAM3! SL-SLAM: Low light, severe jitter and weak texture scenes are all handled

May 30, 2024 am 09:35 AM

Beyond ORB-SLAM3! SL-SLAM: Low light, severe jitter and weak texture scenes are all handled

May 30, 2024 am 09:35 AM

Written previously, today we discuss how deep learning technology can improve the performance of vision-based SLAM (simultaneous localization and mapping) in complex environments. By combining deep feature extraction and depth matching methods, here we introduce a versatile hybrid visual SLAM system designed to improve adaptation in challenging scenarios such as low-light conditions, dynamic lighting, weakly textured areas, and severe jitter. sex. Our system supports multiple modes, including extended monocular, stereo, monocular-inertial, and stereo-inertial configurations. In addition, it also analyzes how to combine visual SLAM with deep learning methods to inspire other research. Through extensive experiments on public datasets and self-sampled data, we demonstrate the superiority of SL-SLAM in terms of positioning accuracy and tracking robustness.

The facial features are flying around, opening the mouth, staring, and raising eyebrows, AI can imitate them perfectly, making it impossible to prevent video scams

Dec 14, 2023 pm 11:30 PM

The facial features are flying around, opening the mouth, staring, and raising eyebrows, AI can imitate them perfectly, making it impossible to prevent video scams

Dec 14, 2023 pm 11:30 PM

With such a powerful AI imitation ability, it is really impossible to prevent it. It is completely impossible to prevent it. Has the development of AI reached this level now? Your front foot makes your facial features fly, and on your back foot, the exact same expression is reproduced. Staring, raising eyebrows, pouting, no matter how exaggerated the expression is, it is all imitated perfectly. Increase the difficulty, raise the eyebrows higher, open the eyes wider, and even the mouth shape is crooked, and the virtual character avatar can perfectly reproduce the expression. When you adjust the parameters on the left, the virtual avatar on the right will also change its movements accordingly to give a close-up of the mouth and eyes. The imitation cannot be said to be exactly the same, but the expression is exactly the same (far right). The research comes from institutions such as the Technical University of Munich, which proposes GaussianAvatars, which

What is NeRF? Is NeRF-based 3D reconstruction voxel-based?

Oct 16, 2023 am 11:33 AM

What is NeRF? Is NeRF-based 3D reconstruction voxel-based?

Oct 16, 2023 am 11:33 AM

1 Introduction Neural Radiation Fields (NeRF) are a fairly new paradigm in the field of deep learning and computer vision. This technology was introduced in the ECCV2020 paper "NeRF: Representing Scenes as Neural Radiation Fields for View Synthesis" (which won the Best Paper Award) and has since become extremely popular, with nearly 800 citations to date [1 ]. The approach marks a sea change in the traditional way machine learning processes 3D data. Neural radiation field scene representation and differentiable rendering process: composite images by sampling 5D coordinates (position and viewing direction) along camera rays; feed these positions into an MLP to produce color and volumetric densities; and composite these values using volumetric rendering techniques image; the rendering function is differentiable, so it can be passed

MotionLM: Language modeling technology for multi-agent motion prediction

Oct 13, 2023 pm 12:09 PM

MotionLM: Language modeling technology for multi-agent motion prediction

Oct 13, 2023 pm 12:09 PM

This article is reprinted with permission from the Autonomous Driving Heart public account. Please contact the source for reprinting. Original title: MotionLM: Multi-Agent Motion Forecasting as Language Modeling Paper link: https://arxiv.org/pdf/2309.16534.pdf Author affiliation: Waymo Conference: ICCV2023 Paper idea: For autonomous vehicle safety planning, reliably predict the future behavior of road agents is crucial. This study represents continuous trajectories as sequences of discrete motion tokens and treats multi-agent motion prediction as a language modeling task. The model we propose, MotionLM, has the following advantages: First

The first pure visual static reconstruction of autonomous driving

Jun 02, 2024 pm 03:24 PM

The first pure visual static reconstruction of autonomous driving

Jun 02, 2024 pm 03:24 PM

A purely visual annotation solution mainly uses vision plus some data from GPS, IMU and wheel speed sensors for dynamic annotation. Of course, for mass production scenarios, it doesn’t have to be pure vision. Some mass-produced vehicles will have sensors like solid-state radar (AT128). If we create a data closed loop from the perspective of mass production and use all these sensors, we can effectively solve the problem of labeling dynamic objects. But there is no solid-state radar in our plan. Therefore, we will introduce this most common mass production labeling solution. The core of a purely visual annotation solution lies in high-precision pose reconstruction. We use the pose reconstruction scheme of Structure from Motion (SFM) to ensure reconstruction accuracy. But pass

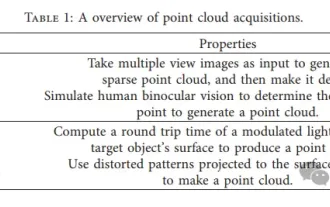

Point cloud registration is inescapable for 3D vision! Understand all mainstream solutions and challenges in one article

Apr 02, 2024 am 11:31 AM

Point cloud registration is inescapable for 3D vision! Understand all mainstream solutions and challenges in one article

Apr 02, 2024 am 11:31 AM

Point cloud, as a collection of points, is expected to bring about a change in acquiring and generating three-dimensional (3D) surface information of objects through 3D reconstruction, industrial inspection and robot operation. The most challenging but essential process is point cloud registration, i.e. obtaining a spatial transformation that aligns and matches two point clouds obtained in two different coordinates. This review introduces the overview and basic principles of point cloud registration, systematically classifies and compares various methods, and solves the technical problems existing in point cloud registration, trying to provide academic researchers outside the field and Engineers provide guidance and facilitate discussions on a unified vision for point cloud registration. The general method of point cloud acquisition is divided into active and passive methods. The point cloud actively acquired by the sensor is the active method, and the point cloud is reconstructed later.

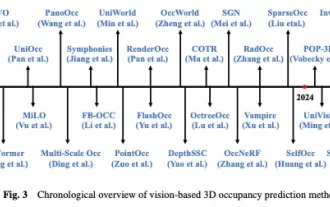

Take a look at the past and present of Occ and autonomous driving! The first review comprehensively summarizes the three major themes of feature enhancement/mass production deployment/efficient annotation.

May 08, 2024 am 11:40 AM

Take a look at the past and present of Occ and autonomous driving! The first review comprehensively summarizes the three major themes of feature enhancement/mass production deployment/efficient annotation.

May 08, 2024 am 11:40 AM

Written above & The author’s personal understanding In recent years, autonomous driving has received increasing attention due to its potential in reducing driver burden and improving driving safety. Vision-based three-dimensional occupancy prediction is an emerging perception task suitable for cost-effective and comprehensive investigation of autonomous driving safety. Although many studies have demonstrated the superiority of 3D occupancy prediction tools compared to object-centered perception tasks, there are still reviews dedicated to this rapidly developing field. This paper first introduces the background of vision-based 3D occupancy prediction and discusses the challenges encountered in this task. Next, we comprehensively discuss the current status and development trends of current 3D occupancy prediction methods from three aspects: feature enhancement, deployment friendliness, and labeling efficiency. at last