CES 2023: Sony and Qualcomm dive into carmaking as European and American mobility tech takes the stage

The annual CES (International Consumer Electronics Show) is here again. As in previous years, concept cars and automotive technologies for electric vehicles and autonomous driving remained the highlight of CES 2023.

At last year's CES, technology giants such as Sony crossing over into the automotive industry drew intense public attention. This year's show featured not only the latest achievements of these cross-industry players but also a wave of newcomers, including Tier 1 suppliers and "new Tier 1" companies that have gone on to build complete cars.

Sony and Honda launch AFEELA, with pre-orders starting in 2025

At this year's show, Sony Honda Mobility, the joint venture between Sony and Honda, announced AFEELA, a new brand of smart connected cars, and its first prototype made its debut.

The new car is reportedly positioned as a mid-to-large sedan. Pre-orders will open in the first half of 2025, and it will officially launch in the United States in spring 2026. Deliveries to the Japanese market are set to begin in the second half of that year.

Sony indicated that the new electric car will be priced to compete with premium brands such as Mercedes-Benz, BMW, Volvo and Audi. It also shared design details of the car and the design concept behind AFEELA.

According to reports, the car will carry 45 cameras and sensors inside and out. It will use a Qualcomm Snapdragon Digital Chassis system-on-chip (SoC) delivering up to 800 TOPS of computing power. Given current road conditions, Sony Honda Mobility (SHM) aims to develop Level 3 automated driving under limited conditions and Level 2 driver assistance in most situations, such as urban driving.

The car will also use Epic Games' Unreal Engine (a 3D computer graphics game engine), not only for entertainment but also for vehicle communication and safety functions. Sony CEO Kenichiro Yoshida said the company will build AFEELA into a "mobile entertainment space" with autonomous driving capabilities.

As for innovative design, the car's exterior features a “Media Bar” that uses light to share information with the people around it, enabling interaction between the vehicle and people. Details of the interior have not yet been disclosed. Sony Honda Mobility said the interior will be comfortable and simple and will reflect the three themes of autonomy, augmentation and affinity.

Some online commenters said AFEELA's design "looks too much like a Lucid" or is "simply a copy of the Lucid." Another said, "This car looks like a cross between a Porsche and a Lucid Air."

Qualcomm debuts a concept car and releases a new automotive SoC

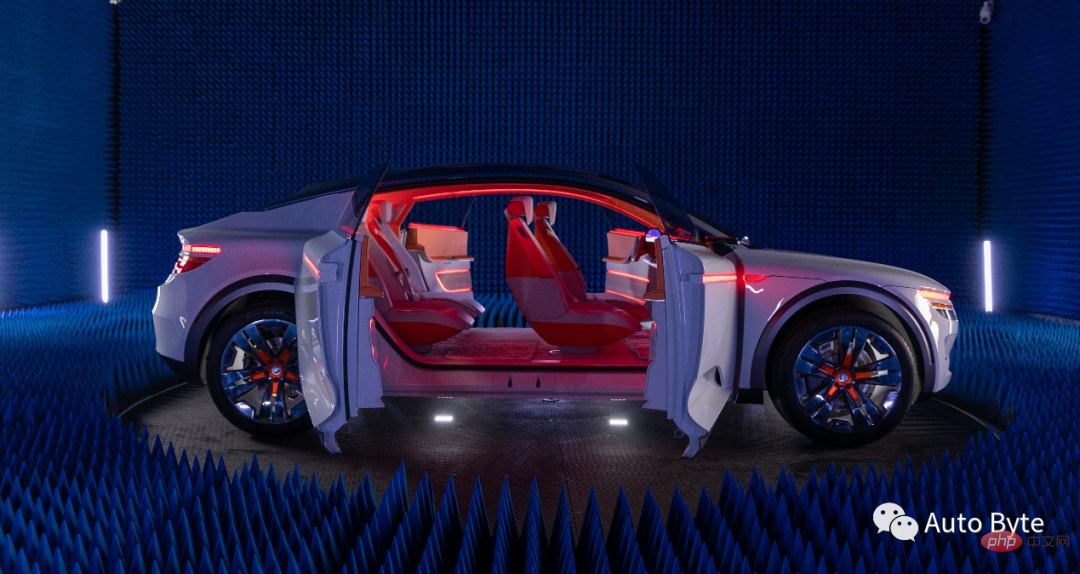

Qualcomm showed its new concept car at CES 2023. It also released the Snapdragon Ride Flex SoC, an automotive processor that can support both the digital cockpit and ADAS (advanced driver assistance systems).

The car has a futuristic look, with an overall shape resembling a coupe SUV. Both the front and rear use split light clusters, the front bumper has a three-section layout, and the hood has a recessed section. The doors also use a split design, and their light strips can switch between colors.

The concept car has a 55-inch display in the front row, 5G connectivity and a rich set of features. The rear row also has a display, with graphics and image enhancement to optimize the visual experience.

The car also offers personalized services: it can identify each driver and passenger and automatically adjust the seat and air-conditioning temperature to their personal settings. It supports real-time 3D maps and positioning services as well. If the vehicle is involved in an accident, its surroundings are recorded and saved to the cloud, which makes it easier to resolve the accident afterward.

The Snapdragon Ride Flex SoC is reportedly designed to support mixed-criticality workloads across heterogeneous compute resources. This lets a single SoC handle digital cockpit, ADAS and AD (autonomous driving) functions. The first Snapdragon Ride Flex SoC is now sampling, with mass production expected to begin in 2024.

According to Qualcomm, the Snapdragon Ride Flex SoC is optimized for scalable performance. It supports central compute systems ranging from entry-level to premium and top-tier, helping automakers choose the right performance point for each vehicle tier.

Software for the SoC can be developed using cloud-native automotive development workflows, including virtual-platform simulation. As a result, it can be integrated as part of a cloud-native development operations (DevOps) and machine learning operations (MLOps) infrastructure.

NVIDIA released a number of innovations in the automotive field

At this year's show, NVIDIA announced several automotive innovations:

- a partnership with Foxconn to develop automated and autonomous vehicle platforms;
- its cloud gaming service coming to vehicles;
- the use of its Omniverse platform for production in car factories.

Under the agreement, Foxconn will manufacture automotive electronic control units (ECUs) based on NVIDIA DRIVE Orin chips. Electric vehicles built by Foxconn will also use NVIDIA's DRIVE Orin ECU and its DRIVE Hyperion sensor architecture for highly automated driving.

NVIDIA also announced that its cloud gaming service, GeForce NOW, is coming to cars. The first automotive brands to support it are BYD, Polestar, Kia, Hyundai and Genesis. GeForce NOW uses low-latency streaming from cloud-based GeForce servers to let users play more than 1,000 games in real time, including titles from Steam.

The service reportedly combines NVIDIA's strengths in gaming and infotainment. It brings a complete, real-time PC gaming experience to software-defined vehicles and further expands NVIDIA's in-vehicle infotainment portfolio to enhance the cockpit experience.

NVIDIA also launched the latest version of its Omniverse Enterprise collaboration platform and announced that Mercedes-Benz will use Omniverse to build its next-generation factories. With Omniverse, Mercedes-Benz will take a "digital first" approach to designing and planning its manufacturing and assembly facilities.

NVIDIA said that by leveraging its AI and metaverse technologies, automakers can create feedback loops that help reduce waste, lower energy consumption and continuously improve quality.

Volkswagen ID.Aero is renamed ID.7 and will go on sale next year

At CES 2023, Volkswagen announced that its ID.Aero model will be renamed ID.7. The company said the car will offer the longest range of any model in the Volkswagen ID family.

The ID.7 will reportedly be sold in Europe, Asia and North America. The production version is scheduled to be unveiled in the second quarter of this year, and FAW-Volkswagen and SAIC Volkswagen are expected to begin volume production in the second half of the year.

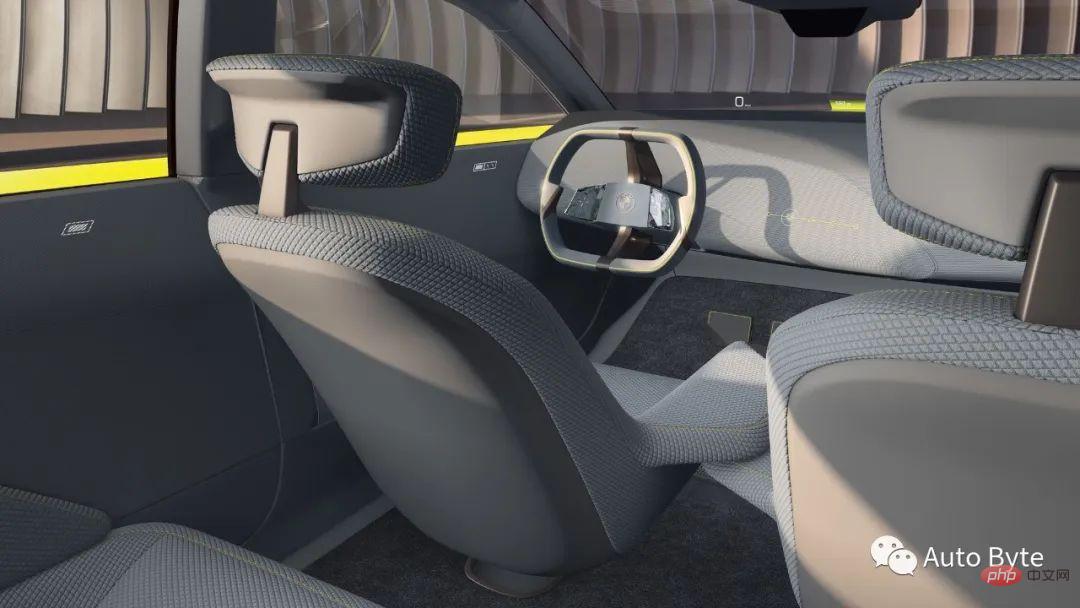

The ID.7's cockpit consists of a touchscreen, a digital instrument cluster and Volkswagen's augmented reality head-up display. Below the screen are touch sliders for controls and volume, which light up when in use. The steering wheel still has touch controls, although earlier reports suggest these may be replaced with physical buttons.

Like other Volkswagen ID models, the ID.7 uses the MEB platform, which offers longer range and faster charging. According to Volkswagen, the ID.7 can travel about 700 km under Europe's relatively lenient WLTP test cycle. The company has not yet announced the vehicle's range under U.S. test conditions.

The ID.7 also follows the design language of Volkswagen's all-electric family. The front end and roof are shaped for aerodynamics, which helps reduce energy consumption and extend range. The roof slopes down at the rear like a coupe's, effectively lowering the drag coefficient. The front overhang is short and the wheelbase is 2,970 mm, giving a relatively spacious interior.

Another major interior highlight is the digitally controlled “smart vents.” Volkswagen said the system can identify the driver via the car key and adjust the cabin temperature to the weather before the driver gets in. The air conditioning can either spread air over a wide area as quickly as possible or direct airflow toward an occupant's body. These functions can be activated individually for each passenger via the central touchscreen or by voice command.

Volvo’s “smartest” model EX90 will be delivered in 2024

Volvo showed the EX90 at this year's CES and said it will be the “smartest” model in its lineup. Deliveries are expected to start as early as the beginning of 2024. Pricing has not yet been announced, but Volvo said the starting price in North America will be under $80,000.

According to reports, the EX90 is a seven-seat all-electric SUV. Its core feature is a new computer system that understands the driver and the vehicle's surroundings in real time. The system will continue to learn from new data and receive over-the-air (OTA) updates.

The vehicle is equipped with 8 cameras and a range of other sensors, including 1 long-range lidar, 5 millimeter-wave radars and 16 ultrasonic sensors. The lidar, developed by Luminar, can sense the road and detect objects up to 180 meters away, day or night, even at high speeds. It will be integrated with Volvo's driver assistance system. That system uses a capacitive steering wheel and two camera-based in-cabin sensors to monitor the driver's state in real time.

In the cockpit, the EX90 has a 14.5-inch center display running Volvo's latest in-car system. The system supports Google apps and services, including hands-free help from Google Assistant and navigation. It is also compatible with wireless Apple CarPlay.

Range-extended hybrid Aska A5 flying car priced at US$789,000

The Aska A5 flying car made its official debut at CES 2023, with mass production expected in 2026. It is already available for pre-order overseas with a US$5,000 deposit. Its official price is expected to be US$789,000 (approximately RMB 5.434 million).

The A5 is reportedly an eVTOL (electric vertical takeoff and landing) flying car that can take off and land anywhere a helicopter can. On the road, its wings fold away, leaving it roughly the size of an SUV.

The car uses a range-extender powertrain and can fly up to 402 km. Its official road range has not yet been disclosed. However, official images show a display reading 95% battery remaining and a range of 563 km.

On land, it reportedly drives all four wheels and reaches a top speed of 113 km/h. In the air, electric power drives six propellers to lift it off the ground. After it reaches a certain altitude, the wings on both sides can change angle to complete the flight. If a mechanical failure occurs in the air, it can land safely by deploying a parachute.

Inside, the car has a four-seat layout and multiple screens that support split-screen display and live-view navigation. The steering wheel is open at the top and blends the feel of an aircraft yoke with a conventional wheel. The car also has both conventional "PRND" virtual shift buttons and "V" and "F" modes for flight.

Holon launches self-driving bus that can carry up to 15 passengers

Holon, Benteler's automotive brand, launched a self-driving bus at CES 2023, calling it "the world's first standard-compliant self-driving vehicle." Production is expected to begin in the United States in 2025. Target customers include logistics and public transport companies as well as municipalities, universities, airports and parks.

The vehicle was designed by Pininfarina and is developed and built by Holon. Mobileye supplies the autonomous driving system, and Beep provides the mobility services technology. The bus has a top speed of 60 km/h and a range of about 290 km.

According to reports, Holon's design concept for the bus is "inclusive from the start," with an emphasis on accessibility. The vehicle has ramps to assist passengers with reduced mobility, foldable seats and floor locks that secure wheelchairs.

The vehicle can carry up to 15 passengers. Its comfortable, subtly offset seating arrangement offers a sense of privacy while meeting all safety requirements. The interior also includes the necessary braille markings so that visually impaired passengers can travel easily.

HARMAN launches AR driver-assistance tools

HARMAN, an automotive technology company and Samsung Electronics subsidiary, launched a series of automotive products at CES 2023 aimed at improving the health and safety of drivers and passengers. They include the AR driver-assistance tool Ready Vision, an updated version of its Ready Care driver monitoring system (DMS), and exterior vehicle microphones.

Ready Vision's AR software integrates with vehicle sensors to deliver immersive audio and visual alerts. These give drivers key information in a timely, accurate and non-intrusive way. The tool also provides navigation by displaying intuitive directions on the windshield. It uses computer vision and machine learning for 3D object detection to give drivers high-precision, non-intrusive warnings and notifications:

- collision warnings;
- blind-spot warnings;
- lane-departure warnings;
- lane-change assist;
- low-speed-zone notifications.

Last September, HARMAN launched Ready Care, a DMS that measures the driver's eye activity and mental state. At this year's show, the company announced that Ready Care now offers contactless measurement of vital signs such as heart rate, respiration rate and inter-beat interval. HARMAN also added an in-cabin radar to the sensor set to detect the presence of children.

HARMAN also launched sound and vibration sensors and exterior vehicle microphones at CES. These are designed to recognize emergency vehicle sirens and detect glass breakage and collisions.

ZF unveils five major automotive technology upgrades

At the CES 2023 exhibition, ZF Group announced that it has made five major upgrades in automotive technology.

The new ZF ProAI onboard high-performance computer now comes in multi-domain versions. Different boards on one computing platform can support advanced driver assistance (ADAS), infotainment or chassis domain functions, including SoC configurations from multiple vendors. It can even run multiple operating systems in parallel, such as QNX for ADAS functions and Android Automotive for infotainment. Another major advantage is that a software stack already developed and implemented for a specific microprocessor can be carried over to the multi-domain architecture.

ZF's ProAI high-performance computer family has reached industrial production and is about to launch, with volume supply planned to begin in 2024. ZF expects the multi-domain version of ProAI to be installed on 30-40% of new vehicle platforms as early as 2025.

ZF also launched what it calls the world's first energy-saving contact-heated seat belt, designed to help reduce cabin heating demand. Heating wires in the webbing warm the occupant's body directly, reaching a maximum surface temperature of 40 degrees Celsius while drawing only 70 watts. The device is easy to install and requires no changes to the seat belt retractor or pretensioner.

The heated seat belt can be combined with other contact heating, such as heated seats or a heated steering wheel. This lets the driver turn down the cabin temperature setting, which can extend an electric vehicle's range by up to 15% in cold conditions.

ZF's next-generation shuttle, released at CES 2023, will have SAE Level 4 autonomous driving capabilities. Where the local legal framework allows, it can drive in mixed traffic without a safety operator on board. The new L4 shuttle for mixed traffic also has a fully modular interior that can be tailored to each customer's specific needs.

The shuttle joins ZF's growing portfolio as part of its Autonomous Transport System (ATS), which also covers driving on segregated or dedicated lanes. The latest version of ZF's shuttle recently began operating at the Rivium Business Park in Rotterdam, the Netherlands.

ZF said it has signed an agreement with US mobility service provider Beep to develop and deploy L4 ATS for US customer projects. The agreement includes plans to deploy thousands of L4 shuttles in the US.

In addition, ZF is helping customers achieve cloud-based information and data sharing through its ProConnect high-performance networking platform. As an advanced telematics module with modular hardware and customizable software, ProConnect features the latest in-vehicle and external communication technologies and is suitable for almost all types of vehicles.

ZF also provides independent software products that OEMs can integrate into their respective system architectures. This product category is complemented by smart sensors, smart actuators, telematics and computing modules, among others.

BMW's latest in-car system, iDrive 9

At CES 2023, BMW announced iDrive 9, its latest in-car system, which is based on Android. The system will support 3D navigation and offer a flexible touch layout. It may get a new name when it officially launches.

The iDrive 9 system will reportedly go into use soon. The first model to get it will be the next-generation BMW X2, and the new BMW X1 will be upgraded at the same time. The new BMW 2 Series Active Tourer will also use the system, under the name BMW OS 9. Future MINI models will adopt it as well, with a different visual UI that distinguishes them from BMW models.

Not all BMW models can currently be updated to iDrive 9. Some models whose systems run on Linux will be updated to iDrive 8.5 instead. iDrive 8.5 will launch this summer and is intended for the new BMW 5 Series and i5 as well as the new 7 Series now on sale.

However, BMW said that the interactive experience of the iDrive 9 system and the iDrive 8.5 system is very similar.

Continental released three new technologies for mobility

At CES 2023, Continental demonstrated three new mobility technologies: two sustainable tires, a long-range lidar and ADAS solutions.

Continental highlighted two sustainable tires: ContiTread EcoPlus Green and Conti Urban. Both commercial vehicle tires demonstrate Continental’s strengths in sustainability.

Continental also released the HRL131 high-performance long-range lidar, developed in cooperation with AEye. It can detect vehicles at more than 300 meters and pedestrians at more than 200 meters. Testing and validation of production samples will take place in 2023, with first series production planned for the end of 2024.

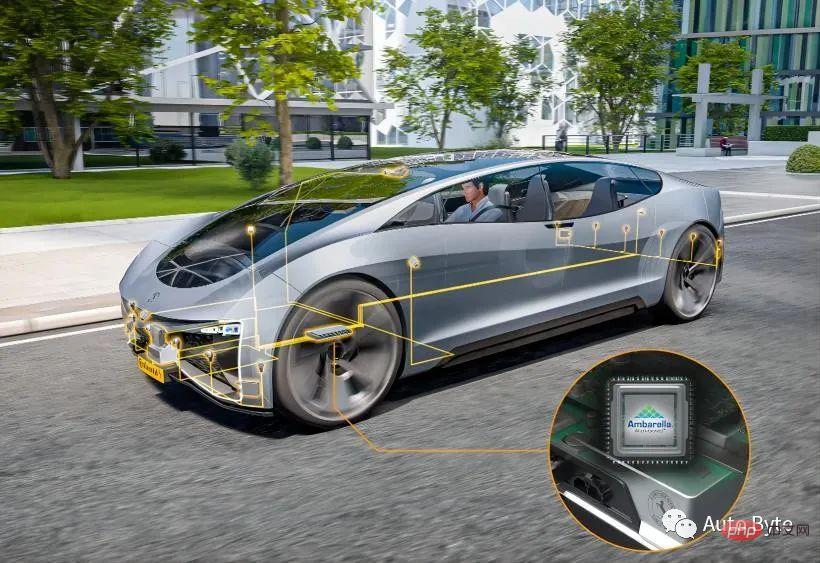

Continental is also integrating Ambarella's CV3 family of artificial intelligence chips into its advanced driver assistance system (ADAS) solutions. Ambarella's SoC portfolio is high-performance, low-power and scalable. It can reportedly acquire sensor data faster and more comprehensively at roughly half the power consumption of conventional solutions.

The boundaries between automobiles and consumer electronics are becoming blurred. In recent years, automobile manufacturers and suppliers have been frequent visitors to CES. The difference is that many of the concept cars at this year's show are not from car companies, but are developed by industry suppliers. Under this trend, the relationship between vehicle manufacturers and component suppliers may undergo some subtle changes.


