On AGI and ChatGPT: the views of Stuart Russell and Zhu Songchun

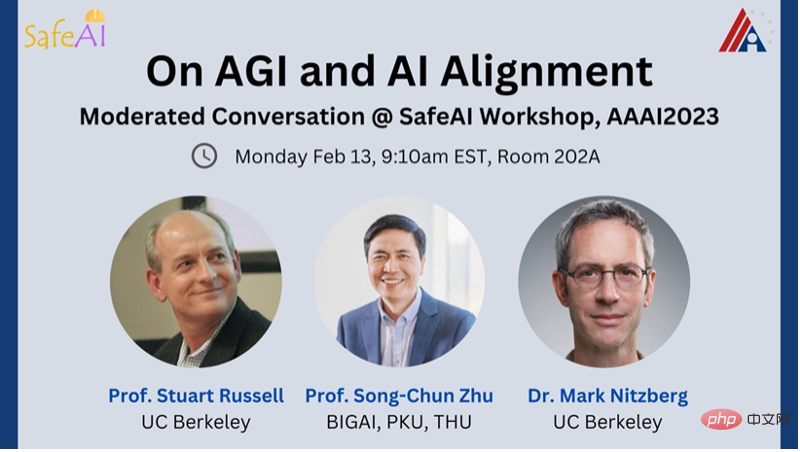

Mark Nitzberg: Today we are honored to welcome two leading experts in artificial intelligence to this SafeAI seminar.

Stuart Russell is a computer scientist at the University of California, Berkeley, director of the Center for Human-Compatible Artificial Intelligence (CHAI), and a member of the steering committee of the Berkeley Artificial Intelligence Research Lab (BAIR). He is also vice chair of the World Economic Forum's Council on AI and Robotics, an AAAS Fellow, and an AAAI Fellow.

Zhu Songchun is director of the Beijing Institute for General Artificial Intelligence (BIGAI), chair professor at Peking University, dean of Peking University's School of Intelligence and its Artificial Intelligence Research Institute, and chair professor of basic sciences at Tsinghua University.

Mark Nitzberg: What is "general artificial intelligence"? Is there a well-defined test for determining when we have created it?

Stuart Russell: General artificial intelligence has been described as the ability to complete every task that humans can complete, but that is only a rough characterization. We also hope that general artificial intelligence can do things humans cannot, such as summarizing all knowledge or simulating complex particle interactions.

To study general artificial intelligence, we can shift from benchmarks on specific tasks to general properties of task environments, such as partial observability, long time horizons, and unpredictability, and ask whether we have a complete solution for environments with these properties. If we do, general artificial intelligence should be able to complete automatically the tasks that humans can complete, and more besides. Although some tests (such as BigBench) claim to measure generality, they do not cover tasks that remain out of reach for artificial intelligence systems, such as "Can you invent a gravitational wave detector?"

Zhu Songchun: A few years ago, many people thought general artificial intelligence was an unreachable goal. The recent popularity of ChatGPT, however, has raised everyone's expectations, and general artificial intelligence now seems to be within reach. When I established the Beijing Institute for General Artificial Intelligence (BIGAI), a new research and development institution in China, I deliberately made AGI part of its name to distinguish it from specialized artificial intelligence. The character "Tong" in "Tongyuan" is composed of the three letters "A", "G" and "I". Following the pronunciation of "tong", we also call general artificial intelligence TongAI.

General artificial intelligence is the original intention and ultimate goal of artificial intelligence research. The goal is to realize a general intelligent agent that can perceive, reason, decide, learn, act, and collaborate socially on its own, and that is in line with human emotions, ethics, and moral concepts. Over the past 20 to 30 years, however, people have used massive amounts of labeled data to solve tasks such as face recognition, object detection, and text translation one at a time. This raises a question: how many tasks must a system handle before it counts as general?

I believe there are three key requirements for achieving general artificial intelligence. 1) A general agent can handle an unlimited number of tasks, including tasks that are not predefined, in complex and dynamic physical and social environments. 2) A general agent should be autonomous, that is, it should be able to generate and complete tasks on its own, as humans do. 3) A general agent should have a value system, because its goals are defined by values. Intelligent systems are driven by cognitive architectures with value systems.

Mark Nitzberg: Do you think large language models (LLMs) and other foundation models can achieve general artificial intelligence? A recent paper by a Stanford University professor claims that language models may have mental states comparable to those of a 9-year-old child. What do you make of this claim?

Zhu Songchun: Large language models have made some amazing progress, but measured against the three criteria above, they do not yet meet the requirements for general artificial intelligence.

1) Large language models are limited in the tasks they can handle. They can only handle tasks in the text domain and cannot interact with the physical and social environment. This means that models like ChatGPT cannot truly "understand" the meaning of language, because they have no body with which to experience physical space. Chinese philosophers have long recognized the concept of the "unity of knowledge and action": people's "knowledge" of the world is based on "action". This is also the key to whether general intelligence can truly enter physical scenes and human society. Only when artificial agents are placed in the real physical world and in human society can they truly understand and learn the physical relationships between things and the social relationships between different intelligent agents, and thereby achieve the "unity of knowledge and action".

2) Large language models are not autonomous. They require humans to define each task specifically, like a "giant parrot" that can only imitate the utterances it was trained on. Truly autonomous intelligence should resemble "crow intelligence": crows can autonomously complete tasks that require more intelligence than today's AI shows. Current AI systems do not yet have this potential.

3) Although ChatGPT has been trained at scale on many text corpora, including texts that carry implicit human values, it lacks the ability to understand human values or to stay consistent with them. In other words, it lacks what is known as a moral compass.

Regarding the paper's finding that language models may have a mental state equivalent to that of a 9-year-old child, I think it is somewhat exaggerated. Through experimental tests, the paper found that GPT-3.5 can answer 93% of the questions correctly, which matches the level of a 9-year-old child. But if some rule-based machine could pass similar tests, would we say that machine has a theory of mind? Even if GPT passes this test, that only shows its ability to pass this particular theory-of-mind test; it does not mean it has a theory of mind. We should also reflect on the practice of using these traditional test tasks to verify whether a machine has developed a theory of mind. Is it rigorous and valid? Why can machines complete these tasks without a theory of mind?

Stuart Russell: In a 1948 paper, Turing posed the problem of generating intelligent actions from a giant lookup table. This is not practical: to generate 2,000 words, which is roughly the window size of a transformer-based large language model, you would need a table with about 10^10000 entries to store all possible sequences. Such a system might appear very intelligent, but it would in fact lack mental states and reasoning processes (the basic ingredients of classical artificial intelligence systems).
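
The order of magnitude quoted for the lookup table is easy to check. The sketch below assumes a vocabulary of 100,000 distinct words; that figure is not given in the interview and is chosen only because it reproduces the quoted estimate. The function name is illustrative.

```python
import math


def log10_table_entries(vocab_size: int, sequence_length: int) -> float:
    """Return log10 of the number of distinct word sequences of the given length.

    A lookup table needs one entry per possible sequence, i.e.
    vocab_size ** sequence_length entries. Working in log10 avoids
    building an enormous integer.
    """
    return sequence_length * math.log10(vocab_size)


# Assumed vocabulary size (hypothetical): 100,000 words.
# Sequence length from the interview: 2,000 words.
exponent = log10_table_entries(vocab_size=100_000, sequence_length=2_000)
print(f"about 10^{exponent:.0f} entries")
```

Under this assumption each word position contributes a factor of 10^5, so 2,000 positions give 10^10000 entries, far beyond anything that could be stored.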

In fact, there is no evidence that ChatGPT possesses any mental state, let alone one similar to that of a 9-year-old child. LLMs lack the ability to learn and express complex generalizations, which is why they need vast amounts of text data, far more than any 9-year-old has ever encountered, and they still make errors. It is like a chess program that recognizes sequences of moves similar to those in earlier grandmaster games (such as d4, c6, Nc3, etc.) and then outputs the next move in that sequence. Most of the time you would take it for a master chess player, but it will occasionally make illegal moves, because it knows nothing about the board, the pieces, or the fact that the goal is to checkmate the opponent.

To some extent, ChatGPT is like this in every field. We are not sure there is any area it truly understands. Some errors may be fixed, but this is like fixing errors in a table of values for the logarithm function. If a person only understands "log" to mean "the value in the table on page 17", then fixing a typo will not solve the problem. The table does not contain the meaning or definition of "logarithm", so nothing can be inferred from it. Expanding the table with more data does not address the root of the problem.

Mark Nitzberg: Stuart, you were one of the first to warn us that general artificial intelligence, like nuclear energy, poses existential risks. Why do you think so? How can we prevent this? Songchun, which risks from artificial intelligence concern you most?

Stuart Russell: In fact, Turing was one of the first to give this warning. He said in 1951: "It seems probable that once the machine thinking method had started, it would not take long to outstrip our feeble powers. ... At some stage therefore we should have to expect the machines to take control." Once an intelligence more powerful than humans emerges, humans will find it hard to retain power, especially if these agents have incorrect or incomplete goals.

If anyone thinks it’s alarmist to consider these risks now, you can ask them directly: How do you maintain power forever in the face of an intelligence more powerful than humans? I'd love to hear their answers. Furthermore, in the field of artificial intelligence, there are still some people who try to avoid the issue and deny the achievability of AGI without providing any evidence.

The EU Artificial Intelligence Act gives a standard definition of an artificial intelligence system as one that can achieve goals defined by humans. I was told this definition came from the OECD, and the OECD people told me it came from an earlier edition of my textbook. I now think this standard definition is fundamentally flawed, because we cannot specify completely and correctly what we want AI to do in the real world, nor can we describe exactly what we want the future to look like. An AI system that pursues the wrong goal will bring about a future we do not want.

Recommender systems in social media provide an example: they try to maximize click-through rates or engagement, and they have learned to do so by manipulating humans, using a series of nudges to turn people into more predictable, and apparently more extreme, versions of themselves. Making such AI "better" only makes outcomes worse for humans.

Instead, we need to build AI systems that 1) aim only to benefit humans and 2) know that they do not know what that means. Because the AI does not know what is truly in humans' interest, it must remain uncertain about human preferences, and this is what ensures that we keep control over it. A machine that is unsure of human preferences should allow itself to be shut down. Once the machine no longer has any uncertainty about its goal, it will regard the humans as the ones making a mistake, and it can no longer be switched off.
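
The link between uncertainty and willingness to be switched off can be illustrated with a toy expected-utility calculation. This is only a sketch under stated assumptions, not a method described in the interview: the belief values are invented, switching off is worth 0, and the human is assumed to know the true value of the action and to allow it only when that value is positive.

```python
def expected_value(belief, transform=lambda u: u):
    """Expected value of transform(u) under a discrete belief [(u, probability), ...]."""
    return sum(p * transform(u) for u, p in belief)


def value_of_deferring(belief):
    """Gain from letting the human decide, compared with the machine's best unilateral choice."""
    act_now = expected_value(belief)   # act without asking
    switch_off = 0.0                   # assumed value of doing nothing
    # Assumption: the human vetoes the action exactly when its true value is negative.
    defer = expected_value(belief, lambda u: max(u, 0.0))
    return defer - max(act_now, switch_off)


# Hypothetical beliefs about the true value u of a proposed action.
uncertain = [(-2.0, 0.5), (4.0, 0.5)]   # the action might be harmful
certain = [(4.0, 1.0)]                  # no uncertainty left

print(value_of_deferring(uncertain))  # 1.0
print(value_of_deferring(certain))    # 0.0
```

With the uncertain belief the machine gains by leaving the off switch in human hands; once the belief is certain, that gain vanishes, which mirrors the point that a fully certain machine has no incentive to accept being switched off.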

Zhu Songchun: If general artificial intelligence becomes a reality, it may pose a threat to human existence in the long run. Looking back at the long history of the evolution of intelligence, we can infer that the birth of general artificial intelligence is almost inevitable.

Modern scientific research shows that life on Earth has evolved continuously, from inorganic matter to organic matter, to single cells and multicellular organisms, to plants and animals, and finally to intelligent creatures such as humans. This reveals a continuous evolutionary process from the "physical" to the "intelligent". Where is the boundary between inanimate objects and living intelligence? The question matters because it bears on how we understand and define the "intelligent agents" that will coexist with us in future society. I think the answer is related to "life": from the inanimate, to simple life, to complex intelligence, the degree of "life" grows and "intelligence" becomes more and more complex. This is a continuous spectrum, and we have no reason to think humans are its end point. It also suggests that general intelligence may surpass humans in the future.

To prevent future general artificial intelligence from threatening humanity, we can open up the capability space and value space of general intelligence gradually. Just as with a robot, we first put it in a "cage" and then expand its permissions slowly. Driverless vehicles, for example, are now allowed on specific road sections. We can first restrict the settings and action space of an artificial intelligence system to specific areas. As our trust in machines grows, and once we have confirmed that AI systems are safe and controllable, we can gradually give them more room. In addition, we should promote transparency in algorithmic decision-making. If we can represent the cognitive architecture of general artificial intelligence explicitly, so that we know how it works, we can control it better.

Mark Nitzberg: Stuart, which directions in your research can be regarded as alignment research?

Stuart Russell: Our core goal at CHAI is to realize the vision described above, that is, to build artificial intelligence systems that can handle uncertainty about their goals. Existing methods, with the possible exception of imitation learning, all assume a fixed, known goal given to the system in advance, so these methods may need to be redesigned.

In short, we are trying to model systems in which multiple people and multiple machines interact. Since everyone has their own preferences, but a machine can affect many people, we define the machine's utility function as the sum of people's utility functions. However, we face three problems.

The first problem is how to aggregate the preferences of different people so that the machine can understand and serve the needs of most people. It has been suggested that addition may be a good aggregation function because everyone is weighted equally and it has good formal properties, as the work of the economist John Harsanyi and others shows. But there must be other perspectives.

The second problem is how to characterize the richness of preference structures, that is, orderings over distributions over all possible futures of the universe. These are very complex data structures that are not represented explicitly in either human brains or machines, so we need ways to order, decompose, and combine preferences efficiently.

Some AI research currently tries to use so-called "CP nets" to represent certain complex utility functions. CP nets decompose multi-attribute utility functions in much the same way that Bayesian networks decompose complex multivariate probabilistic models. However, AI does not really study the content of human well-being, which is surprising given the field's claim to help humans.
There are academic communities in economics, sociology, development studies, and other fields that do study human well-being, and they tend to compile lists of factors such as health, safety, housing, and food. These lists are designed mainly to communicate priorities to the rest of humanity and to public policy, and they may miss many "obvious" unstated preferences, such as "wanting to be able-bodied". To my knowledge, these researchers have not yet developed a scientific theory that predicts human behavior, but for AI we need the complete human preference structure, including all possible unstated preferences; if we miss something important, it may cause problems.

The third problem is how to infer preferences from human behavior and how to characterize the plasticity of human preferences. If preferences change over time, who is the AI working for: you today or you tomorrow? We do not want artificial intelligence to change our preferences to fit world states that are easy to achieve, which would cost the world its diversity.

Beyond these basic research questions, we are also thinking about how to rebuild, on a broader foundation, all the artificial intelligence techniques that assume the system is given complete knowledge of its objective up front (search algorithms, planning algorithms, dynamic programming algorithms, reinforcement learning, and so on). A key property of the new systems is that preference information flows from humans to machines while the system is running. This is entirely normal in the real world. For example, we ask a taxi driver to "take us to the airport", which is a broader goal than our true goal. When we are a mile away, the driver asks which terminal we need. As we get closer, the driver may ask which airline, in order to take us to the correct door. (But not exactly how many millimeters from the door!)
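
The taxi example can be sketched as a set of candidate goals that shrinks as preference information arrives during the trip. This is a toy illustration only: the `DropOff` class, the `refine` helper, and the airport layout are all hypothetical and do not come from the interview.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DropOff:
    """One fully specified goal: a door at a given terminal for a given airline."""
    terminal: str
    airline: str


def refine(candidates, attribute, answer):
    """Keep only the candidate goals consistent with the passenger's answer."""
    return {c for c in candidates if getattr(c, attribute) == answer}


# "Take us to the airport" leaves every drop-off point open (hypothetical layout).
candidates = {
    DropOff("T1", "AirA"),
    DropOff("T1", "AirB"),
    DropOff("T2", "AirC"),
}
history = [len(candidates)]

# About a mile out, the driver asks which terminal.
candidates = refine(candidates, "terminal", "T1")
history.append(len(candidates))

# Close to the terminal, the driver asks which airline.
candidates = refine(candidates, "airline", "AirB")
history.append(len(candidates))

goal = next(iter(candidates))
print(history)  # [3, 2, 1]
print(goal)     # DropOff(terminal='T1', airline='AirB')
```

The driver can start driving while three goals are still possible, and asks each question only when the answer starts to matter for the next action.
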
So we need to define the form of uncertainty and preference transfer best suited to each class of algorithms. For example, a search algorithm uses a cost function, so the machine can assume bounds on that function and refine the bounds by asking humans which of two action sequences is preferable. Generally speaking, machines will always have a large amount of uncertainty about human preferences. For this reason, I think the term "alignment", often used in this field, can be misleading, because people tend to take it to mean "first fully align machine and human preferences, then choose what to do". That may not be how things actually work.

Mark Nitzberg: Songchun, please tell us about the value alignment research you have done.

Zhu Songchun: When talking about value alignment, we must first discuss "value". I believe that current artificial intelligence research should shift from being data-driven to being value-driven. People's intelligent behaviors are driven by values, and people can understand and learn values quickly. For example, when you sit on a chair, we can observe the equilibrium state through a force analysis of the contact between the chair and the body, and from that implicitly infer the value of "comfort". This value may be hard to describe accurately in words, but it can be expressed through interaction with the chair. We can also learn about people's aesthetic values from the way they fold their clothes.

In addition, I believe the value system has a unified representation, and the richness of preferences we currently see comes from mapping unified values onto different conditions. Our values change with the situation. For example, when a bus arrives at the stop: if you are waiting for it, you may want it to stay longer so that you can get on; if you are already on board, you may want the doors to close immediately.
AI systems must be able to adapt quickly to changes in our preferences, so a value-driven cognitive architecture is essential for AI.

To achieve general intelligence at the human cognitive level, we have included value alignment in our research at BIGAI and built a human-machine interaction system involving four alignments. The first is a shared representation, including a common understanding of the world. The second is shared core knowledge, such as physical common sense, causal chains, and logic. The third is shared social norms: AI should follow the norms of human society and behave appropriately. The fourth is shared values: AI needs to be aligned with human ethical principles.

We published a study on real-time bidirectional human-robot value alignment. It proposes an explainable artificial intelligence system in which a group of robots infers the user's value goals through real-time interaction and user feedback, while conveying their decision-making process to the user through "explanations" so that the user understands the value basis of the robots' judgments. The system also generates explanations that are easier for users to understand by inferring the user's intrinsic value preferences and predicting the best way to explain.

Mark Nitzberg: What characteristics of current AI systems lead us to conclude that they will not lead to general artificial intelligence?

Zhu Songchun: How do we judge whether an AI is a general artificial intelligence? One important factor is how much we trust it. There are two levels of trust: trust in the AI's capabilities, and trust that the AI is beneficial with respect to people's emotions and values. For example, today's artificial intelligence systems can recognize images very well, but to build trust they must be explainable and understandable.
Some technologies are very powerful, but if they cannot be explained they will be considered untrustworthy. This is particularly important in areas such as weapons or aviation systems, where mistakes are very costly. In such cases the emotional dimension of trust matters more, and AIs need to explain how they make decisions in order to be trusted by humans. We therefore need to deepen mutual understanding through iteration, communication, and collaboration between humans and machines, and thereby reach consensus and generate "justified trust".

Stuart Russell: Current artificial intelligence systems are not general because circuits cannot capture generality well. We have already seen this in the difficulty large language models have in learning the basic rules of arithmetic. Our latest research finds that, despite millions of training examples, Go systems that have previously beaten humans still do not correctly understand the concepts of a "big dragon" (a large group of stones) and "life and death". As researchers who are only amateur Go players, we developed a strategy that defeated the Go program.

If we want to be confident that artificial intelligence will be beneficial to humanity, we need to know how it works. We need to build artificial intelligence systems on a semantically composable substrate, supported by explicit logic and probability theory, as a sound foundation for achieving general artificial intelligence in the future. One possible way to create such systems is probabilistic programming, which we at CHAI have recently been exploring. I am encouraged to see Songchun exploring a similar direction with BIGAI.
