Write a Python program to crawl the fund flow of sectors

After the previous example of crawling the fund flow of individual stocks, you should be able to write your own crawling code. To consolidate that, try a similar small exercise: write your own Python program to crawl the fund flow of sectors. The page to crawl is http://data.eastmoney.com/bkzj/hy.html, and its interface is shown in Figure 1.

Figure 1 The sector fund flow page

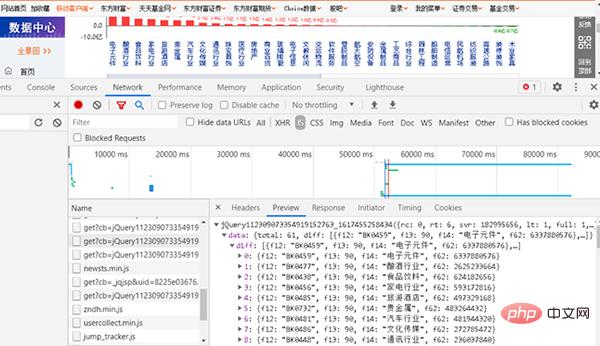

1. Find the JS request

Press the F12 key to open the browser's developer tools and find the request that returns the data. The corresponding page is shown in Figure 2.

Figure 2 Finding the page that corresponds to the JS request

Then enter the URL into the browser. The URL is relatively long:

http://push2.eastmoney.com/api/qt/clist/get?cb=jQuery112309073354919152763_1617455258434&pn=1&pz=500&po=1&np=1&fields=f12,f13,f14,f62&fid=f62&fs=m:90+t:2&ut=b2884a393a59ad64002292a3e90d46a5&_=1617455258435

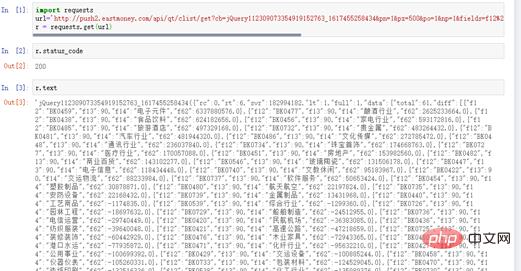

The website then returns a response, as shown in Figure 3.

Figure 3 Obtaining sectors and fund flows from the website

The content returned by this URL is what we want to crawl.

2. Send the request and check the response status

Write the crawler code as follows:

# coding=utf-8

import requests

url = "http://push2.eastmoney.com/api/qt/clist/get?cb=jQuery112309073354919152763_1617455258436&fid=f62&po=1&pz=50&pn=1&np=1&fltt=2&invt=2&ut=b2884a393a59ad64002292a3e90d46a5&fs=m%3A90+t%3A2&fields=f12%2Cf14%2Cf2%2Cf3%2Cf62%2Cf184%2Cf66%2Cf69%2Cf72%2Cf75%2Cf78%2Cf81%2Cf84%2Cf87%2Cf204%2Cf205%2Cf124"

r = requests.get(url)

r.status_code returns 200, indicating that the response status is normal. r.text also contains data, indicating that the fund flow data was crawled successfully, as shown in Figure 4.

Figure 4 Response status

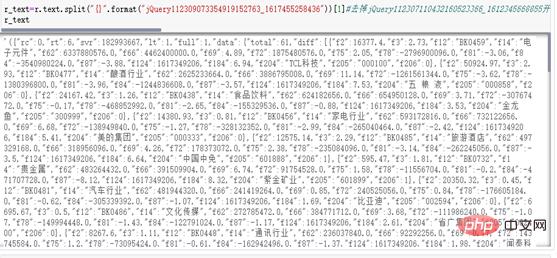
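The long request URL in step 2 is easier to read and adjust when it is assembled from named query parameters. The following is a minimal sketch using only the standard library; the function name `build_sector_flow_url` is hypothetical, and the parameter values are copied from the URL above without any claim about what each one means on the server side.

```python
from urllib.parse import urlencode

BASE_URL = "http://push2.eastmoney.com/api/qt/clist/get"


def build_sector_flow_url(callback, pz=50):
    """Assemble the request URL from named query parameters.

    All values are copied from the article's URL; this helper is
    illustrative and not part of any official API.
    """
    params = {
        "cb": callback,  # JSONP callback name; it becomes the prefix of the response
        "fid": "f62",
        "po": 1,
        "pz": pz,
        "pn": 1,
        "np": 1,
        "fltt": 2,
        "invt": 2,
        "ut": "b2884a393a59ad64002292a3e90d46a5",
        "fs": "m:90 t:2",  # the "+" in the original URL is an encoded space
        "fields": "f12,f14,f2,f3,f62,f184,f66,f69,f72,f75,f78,f81,f84,f87,f204,f205,f124",
    }
    # urlencode percent-encodes ":" and "," and turns the space into "+"
    return BASE_URL + "?" + urlencode(params)


url = build_sector_flow_url("jQuery112309073354919152763_1617455258436")
```

The resulting `url` can be passed to `requests.get(url)` exactly as before. Keeping the parameters in a dictionary makes it simple to change a single value, such as `pz`, without editing the long string by hand.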

3. Clean the string into standard JSON format

(1) Analyze the r.text data. Its body is standard JSON, but it is wrapped in a jQuery callback prefix. Use the split() function to remove that prefix, then strip the trailing semicolon. See the following code for details:

r_text = r.text.split("jQuery112309073354919152763_1617455258436")[1]  # drop the callback name in front of the payload

r_text

The result is shown in Figure 5.

r_text_qu = r_text.rstrip(';')  # drop the trailing semicolon

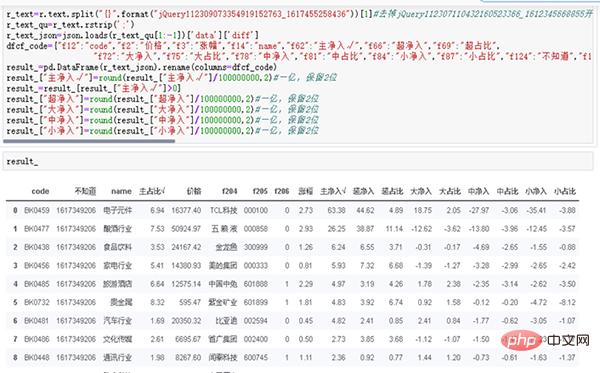

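import json

r_text_json = json.loads(r_text_qu[1:-1])['data']['diff']  # strip the surrounding parentheses, parse the JSON, and take the list of sector records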
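The cleaning step above depends on knowing the exact callback name. As an alternative, the wrapper can be removed with a regular expression that does not care what the callback is called. This is only a sketch: `strip_jsonp` is a hypothetical helper, and the sample string is made up to imitate the shape of the response (it is not real market data).

```python
import json
import re


def strip_jsonp(text):
    """Return the JSON object wrapped inside a JSONP response string."""
    # Expected shape: callbackName( ...payload... ) with an optional trailing ";"
    match = re.fullmatch(r"\s*[\w$.]+\s*\((.*)\)\s*;?\s*", text, flags=re.DOTALL)
    if match is None:
        raise ValueError("response does not look like JSONP")
    return json.loads(match.group(1))


# Made-up sample that only imitates the structure used in this article.
sample = (
    'jQuery123_456({"data": {"total": 1, "diff": '
    '[{"f12": "BK0001", "f14": "Example", "f62": 150000000.0}]}});'
)
rows = strip_jsonp(sample)["data"]["diff"]
```

With a real response, `strip_jsonp(r.text)["data"]["diff"]` would take the place of the split(), rstrip() and slicing steps, assuming the response keeps the same wrapper format.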

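import pandas as pd

dfcf_code = {"f12": "code", "f2": "price", "f3": "change", "f14": "name", "f62": "main net inflow", "f66": "super-large net inflow", "f69": "super-large share", "f72": "large net inflow", "f75": "large share", "f78": "medium net inflow", "f81": "medium share", "f84": "small net inflow", "f87": "small share", "f124": "unknown", "f184": "main share"}

result_ = pd.DataFrame(r_text_json).rename(columns=dfcf_code)

result_["main net inflow"] = round(result_["main net inflow"] / 100000000, 2)  # convert to units of 100 million, keep 2 decimals

result_ = result_[result_["main net inflow"] > 0].copy()  # keep only sectors with a positive main net inflow; copy() avoids pandas chained-assignment warnings below

result_["super-large net inflow"] = round(result_["super-large net inflow"] / 100000000, 2)  # convert to units of 100 million, keep 2 decimals

result_["large net inflow"] = round(result_["large net inflow"] / 100000000, 2)  # convert to units of 100 million, keep 2 decimals

result_["medium net inflow"] = round(result_["medium net inflow"] / 100000000, 2)  # convert to units of 100 million, keep 2 decimals

result_["small net inflow"] = round(result_["small net inflow"] / 100000000, 2)  # convert to units of 100 million, keep 2 decimals

result_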

Through the above two fund flow crawling examples, you should now understand some basic crawler methods. The core idea is:

(1) Select the fund flow data of interest, such as individual stocks or sectors;

(2) Obtain the URL and analyze it;

(3) Use a crawler to fetch the data and save it.

Figure 6 Data saving

Summary

JSON is one of the standardized data formats used by many websites. It is a lightweight data-interchange format that is easy to read and write and efficient to transmit over the network. What the crawler first obtains is a plain string (str); after cleaning, it becomes standard JSON and is then loaded into a Pandas DataFrame.

Through the case analysis and hands-on practice, you should learn to write your own code to crawl financial data and convert it into standard JSON format. Crawling and storing the data daily provides reliable data support for later backtesting and historical analysis.

Of course, capable readers can save the results to a database such as MySQL or MongoDB, or even the cloud database MongoDB Atlas. The author will not cover that here, since the focus is on quantitative learning and strategy. Saving the data in txt format is sufficient for early-stage data storage, and the data remains complete and usable.
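The txt storage mentioned above can be sketched as follows, using a tab-separated text file written with the standard csv module. The helper names `save_rows_txt` and `load_rows_txt`, the file name, the column names, and the demo records are all assumptions made for illustration; the article does not show its own saving code.

```python
import csv
import os
import tempfile


def save_rows_txt(rows, path, columns):
    """Write a list of dict records to a tab-separated text file."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(
            f, fieldnames=columns, delimiter="\t", extrasaction="ignore"
        )
        writer.writeheader()
        writer.writerows(rows)


def load_rows_txt(path):
    """Read the file back; every value is returned as a string."""
    with open(path, newline="", encoding="utf-8") as f:
        return list(csv.DictReader(f, delimiter="\t"))


# Made-up records standing in for one day's cleaned sector data.
demo_rows = [
    {"code": "BK0001", "name": "Example A", "main net inflow": 1.5},
    {"code": "BK0002", "name": "Example B", "main net inflow": 0.32},
]

# A temporary directory keeps the demo from leaving files behind.
with tempfile.TemporaryDirectory() as tmp_dir:
    demo_path = os.path.join(tmp_dir, "sector_flow_demo.txt")
    save_rows_txt(demo_rows, demo_path, ["code", "name", "main net inflow"])
    loaded = load_rows_txt(demo_path)
```

For daily collection, one option is to put the date in the file name so that each day's snapshot is kept separately. Numeric columns need to be converted back from strings when the file is read for later analysis.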
