The future of CV lies in these 68 pictures? Google Brain takes a deep look at ImageNet: top models all fail to predict them

Over the past decade, ImageNet has been the de facto "barometer" of computer vision: whenever the best accuracy on it improves, you know a new technique has arrived.

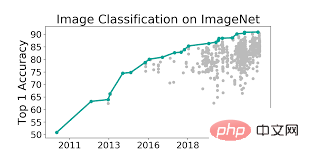

"Climbing the leaderboard" has long been the driving force behind model innovation, pushing Top-1 accuracy to 90%, which is higher than human performance.

# But is the ImageNet dataset really as useful as we think?

Many papers have questioned ImageNet on issues such as data coverage, bias, and whether the labels are complete.

Most importantly: is the models' 90% accuracy actually accurate?

Recently, researchers from the Google Brain team and the University of California, Berkeley re-examined the predictions of several SOTA models and found that the models' true accuracy may have been underestimated!

Paper link: https://arxiv.org/pdf/2205.04596.pdf

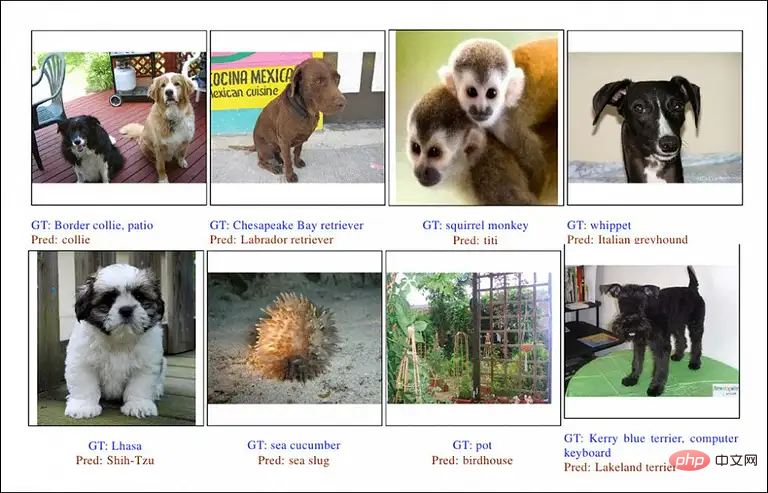

The researchers manually reviewed and categorized every mistake made by several top models, in order to gain insight into the long-tail errors that remain on the benchmark dataset.

The study focuses mainly on the multi-label evaluation subset of ImageNet, on which the best model already reaches 97% Top-1 accuracy.

The analysis shows that nearly half of the so-called prediction errors were not errors at all: the predicted classes turned out to be valid labels for the image and were added to it as new multi-labels. In other words, without manual review of the predictions, the performance of these models may be "underestimated"!
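The difference between standard and multi-label scoring can be sketched in a few lines. In this minimal sketch, each image carries a set of acceptable labels, and a Top-1 prediction counts as correct if it falls anywhere in that set. The function names and the toy labels are hypothetical illustrations, not the paper's evaluation code.

```python
from typing import Sequence, Set


def single_label_accuracy(preds: Sequence[str], labels: Sequence[str]) -> float:
    """Standard ImageNet-style Top-1: exactly one ground-truth label per image."""
    correct = sum(p == y for p, y in zip(preds, labels))
    return correct / len(preds)


def multi_label_accuracy(preds: Sequence[str], label_sets: Sequence[Set[str]]) -> float:
    """Multi-label Top-1: a prediction is correct if it is any of the valid labels."""
    correct = sum(p in valid for p, valid in zip(preds, label_sets))
    return correct / len(preds)


# Toy data (hypothetical): a "dough"/"bagel" case and two other images.
preds = ["bagel", "monitor", "African elephant"]
original = ["dough", "table", "African elephant"]
relabeled = [
    {"dough", "bagel"},
    {"table", "monitor", "coffee mug"},
    {"African elephant", "tusker"},
]

print(single_label_accuracy(preds, original))  # about 0.333
print(multi_label_accuracy(preds, relabeled))  # 1.0
```

The same three predictions score one out of three under single labels and three out of three once the extra valid labels are added, which is the kind of "underestimation" the study describes.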

Unskilled crowdsourced annotators often label data incorrectly, which seriously distorts how well the measured accuracy reflects a model's true performance.

To recalibrate the ImageNet dataset and support sound progress in the future, the researchers release an updated version of the multi-label evaluation set with the paper, and collect the 68 examples on which SOTA models make clearly wrong predictions into a new dataset, ImageNet-Major, so that future CV researchers can work on overcoming these bad cases.

# Paying off "technical debt"

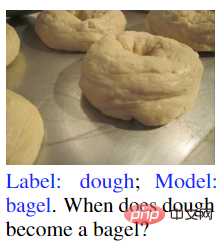

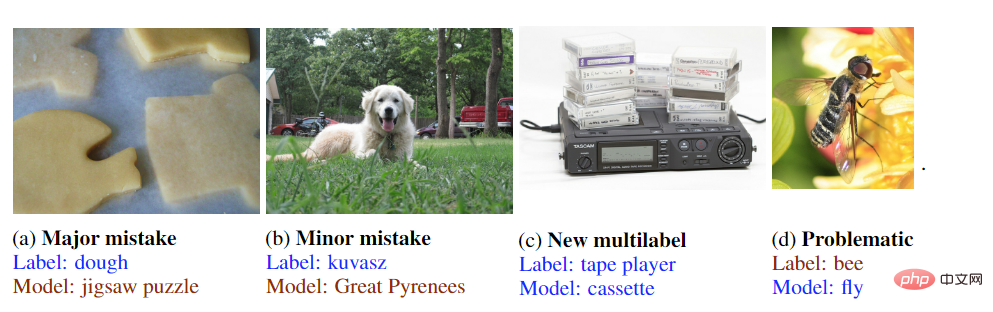

As the paper's title, "When does dough become a bagel?", suggests, the authors focus mainly on label problems in ImageNet, a long-standing historical issue.

The picture below is a classic example of label ambiguity: the label is "dough", and the model predicts "bagel". Is the model wrong?

In principle, the model's prediction is not wrong: the dough is being baked and is about to become a bagel, so it is both dough and a bagel.

In other words, the model has effectively predicted that this dough will "become" a bagel, yet it gets no credit for it in the accuracy score.

In fact, as long as the standard ImageNet classification task is used as the evaluation criterion, problems such as missing multi-labels, label noise, and underspecified categories are unavoidable.

For the crowdsourced annotators tasked with identifying such objects, this is a semantic, even philosophical, conundrum that can only be resolved through multi-labeling. Accordingly, the main improvement in datasets derived from ImageNet concerns the labeling problem.

It has been 16 years since ImageNet was established. The annotators and model developers of that time certainly did not understand the data as well as we do today, and because ImageNet was an early, large-scale, and relatively well-annotated dataset, it naturally became the standard for CV rankings.

But budgets for labeling data are clearly much smaller than budgets for developing models, so fixing labeling problems has accumulated into a kind of technical debt.

To find the remaining errors in ImageNet, the researchers used a standard ViT-3B model with 3 billion parameters (reaching 89.5% accuracy), pre-trained on JFT-3B and fine-tuned on ImageNet-1K.

Using the ImageNet2012_multilabel dataset as the test set, ViT-3B initially achieved 96.3% accuracy. The model clearly mispredicted 676 images, and the researchers then examined these examples in depth.

When relabeling the data, the authors did not use crowdsourcing; instead they formed a team of 5 expert reviewers, because this type of labeling error is hard for non-experts to spot.

For example, in picture (a), an ordinary annotator might simply write "table", but the picture actually contains many other objects, such as screens, monitors, and mugs.

The subject of picture (b) is two people, yet the label is "picket fence", which is clearly incomplete; possible labels include bow tie, uniform, and so on.

Picture (c) is another obvious example: if only "African elephant" is labeled, the ivory may be overlooked.

Picture (d) is labeled "lakeshore", but labeling it "seashore" would be equally valid.

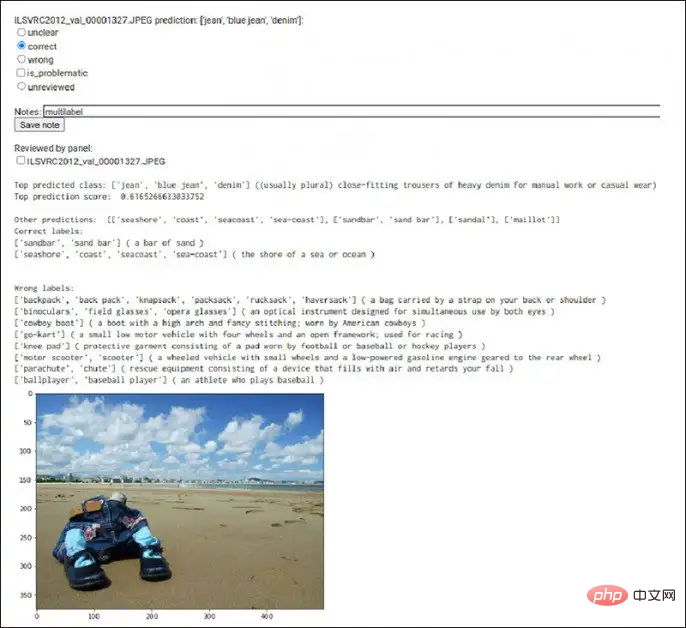

To make annotation more efficient, the researchers also built a dedicated tool that displays the model's predicted categories, the prediction scores, the labels, and the image side by side.
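The kind of side-by-side record such a tool presents can be sketched as follows, assuming the model exposes raw logits keyed by class name. The article does not describe the tool's interface, so `review_row`, its fields, and the sample values are hypothetical.

```python
import math
from typing import Dict, List, Set, Tuple


def softmax(logits: Dict[str, float]) -> Dict[str, float]:
    """Convert raw logits to probabilities (numerically stable softmax)."""
    m = max(logits.values())
    exps = {c: math.exp(v - m) for c, v in logits.items()}
    total = sum(exps.values())
    return {c: e / total for c, e in exps.items()}


def review_row(image_id: str, logits: Dict[str, float], labels: Set[str], k: int = 3) -> dict:
    """Hypothetical record for a reviewer: top-k predictions, scores, and labels."""
    probs = softmax(logits)
    top_k: List[Tuple[str, float]] = sorted(
        probs.items(), key=lambda kv: kv[1], reverse=True
    )[:k]
    return {
        "image": image_id,
        "top_k": [(c, round(p, 3)) for c, p in top_k],
        "labels": sorted(labels),
        # Flag rows where the top-1 prediction is not among the current labels.
        "needs_review": top_k[0][0] not in labels,
    }


row = review_row(
    "img_0001.jpg",  # hypothetical image id
    {"taxi": 4.0, "sports car": 2.5, "minivan": 1.0, "suspension bridge": 0.5},
    labels={"suspension bridge"},
)
print(row)
```

Showing the top few scores rather than only the arg-max lets a reviewer see when a "wrong" prediction is a close call between plausible classes.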

In some cases, the experts still disagreed about a label; such images were then run through Google search to help settle the labeling.

In one example, the model's predictions included "taxi", but apart from "a bit of yellow", nothing in the picture identified the vehicle as a taxi.

For this image, a Google image search revealed that the background showed an iconic bridge. The researchers then located the city where the picture was taken and, after retrieving images of taxis in that city, confirmed that the picture does contain a taxi rather than an ordinary car. A comparison of license plate designs further verified that the model's prediction was correct.

After a preliminary review of the errors found during several stages of the research, the authors first divided them into two categories by severity:

1. Major error (Major): a human can understand the meaning of the label, and the model's prediction has nothing to do with it;

2. Minor error (Minor): the label may be wrong or incomplete, leading to a prediction error; correcting it requires expert review of the data.

For the 155 major errors made by ViT-3B, the researchers brought in three other models to predict on the same images, increasing the diversity of the predictions.

There were 68 major errors that all four models got wrong. The researchers then analyzed every model's predictions on these examples and verified against the new multi-labels that none of them was correct; that is, each model's prediction was indeed a major error.
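The step that narrowed 155 ViT-3B major errors down to 68 amounts to an intersection: an image stays only if every model's Top-1 prediction falls outside its reviewed label set. Below is a minimal sketch with hypothetical model names and toy data; in the study, the major/minor judgment itself came from expert review, not from code.

```python
from typing import Dict, List, Set


def all_models_fail(
    candidates: List[str],
    predictions: Dict[str, Dict[str, str]],
    label_sets: Dict[str, Set[str]],
) -> List[str]:
    """Keep only images where no model's Top-1 prediction is a valid label.

    candidates:  image ids already judged major errors for the first model
    predictions: model name -> {image id -> Top-1 predicted class}
    label_sets:  image id -> set of valid (multi-)labels after expert review
    """
    return [
        img
        for img in candidates
        if all(preds[img] not in label_sets[img] for preds in predictions.values())
    ]


# Toy example (hypothetical models and labels).
candidates = ["a", "b", "c"]
predictions = {
    "vit_3b": {"a": "x", "b": "y", "c": "z"},
    "model_2": {"a": "x", "b": "cat", "c": "w"},
}
label_sets = {"a": {"dog"}, "b": {"cat"}, "c": {"fish"}}

print(all_models_fail(candidates, predictions, label_sets))  # ['a', 'c']
```

Image "b" drops out because one model gets it right, which mirrors why adding more diverse models shrinks the set of shared failures.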

These 68 examples share several characteristics. First, SOTA models trained in different ways all make mistakes on this subset, and expert reviewers also judge their predictions to be completely irrelevant.

The 68-image dataset is also small enough for future researchers to evaluate manually. If these 68 examples are conquered in the future, CV models may achieve new breakthroughs.

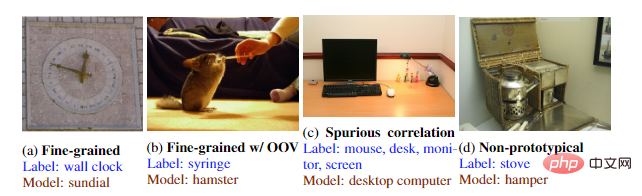

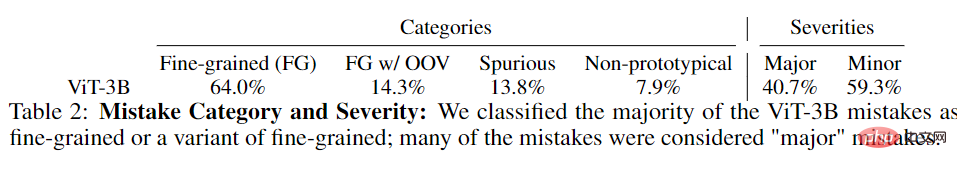

By analyzing the data, the researchers divided the prediction errors into four types:

1. Fine-grained errors, where the predicted category is similar to the true label but not exactly the same;

2. Fine-grained with out-of-vocabulary (OOV), where the model identifies an essentially correct category, but the object's true class does not exist in ImageNet;

3. Spurious correlation, where the predicted label is inferred from the context of the image rather than from the object itself;

4. Non-prototypical, where the labeled object resembles the predicted class but is not exactly that class.
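One way to record the outcome of such a review, combining the two severity levels with the four error types, is sketched below. The enum names, the `ReviewedError` record, and the toy entries are hypothetical; the article does not say how the authors stored their annotations.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum
from typing import Iterable


class Severity(Enum):
    MAJOR = "major"  # label is understandable; prediction is unrelated to it
    MINOR = "minor"  # label may be wrong or incomplete; needs expert review


class ErrorType(Enum):
    FINE_GRAINED = "fine-grained"
    FINE_GRAINED_OOV = "fine-grained, out-of-vocabulary"
    SPURIOUS_CORRELATION = "spurious correlation"
    NON_PROTOTYPICAL = "non-prototypical"


@dataclass(frozen=True)
class ReviewedError:
    image_id: str
    predicted: str
    severity: Severity
    error_type: ErrorType


def tally(errors: Iterable[ReviewedError]) -> Counter:
    """Count reviewed errors by (severity, error type)."""
    return Counter((e.severity, e.error_type) for e in errors)


# Toy records (hypothetical), not data from the paper.
errors = [
    ReviewedError("a", "bagel", Severity.MAJOR, ErrorType.FINE_GRAINED),
    ReviewedError("b", "taxi", Severity.MAJOR, ErrorType.FINE_GRAINED),
    ReviewedError("c", "seashore", Severity.MINOR, ErrorType.SPURIOUS_CORRELATION),
]
for (severity, kind), n in tally(errors).items():
    print(severity.value, kind.value, n)
```

Keeping severity and error type as separate fields lets the same records be sliced either way, for example to ask which error types dominate among major errors.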

After reviewing the original 676 errors, the researchers found that 298 of them should have counted as correct, or that the original label was wrong or problematic.
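A quick check of these two numbers shows how they line up with the "nearly half" figure quoted in the introduction. This is plain arithmetic on the article's figures; the variable names are illustrative.

```python
reviewed_errors = 676  # ViT-3B mistakes on ImageNet2012_multilabel (from the article)
actually_fine = 298    # judged correct, or the original label was wrong/problematic

share = actually_fine / reviewed_errors
remaining = reviewed_errors - actually_fine

print(f"{share:.1%} of the flagged errors were not genuine model errors")  # 44.1%
print(f"{remaining} errors remain after review")  # 378
```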

Overall, four conclusions can be drawn from the paper's results:

1. When a large, high-accuracy model makes new predictions that other models do not, about 50% of them are correct new multi-labels;

2. Higher-accuracy models show no clear correlation between category and error severity;

3. On the human-evaluated multi-label subset, today's SOTA models largely match or exceed the performance of the best expert humans;

4. Noisy training data and underspecified classes may be a factor limiting the effective measurement of improvements in image classification.

Perhaps the image-labeling problem will have to wait for natural language processing to solve it?
