A brief analysis of the development of human-computer interaction in smart cockpits

Cars today have changed not only in power source, driving mode and driving experience; the cockpit has also moved beyond the traditional, dull mechanical and electronic space. Its level of intelligence has soared, and it is becoming the "third space" in people's lives after the home and the office. Through technologies such as face and fingerprint recognition, voice and gesture interaction, and multi-screen linkage, today's smart cockpits have significantly improved their environmental perception and their information collection and processing, and have become "intelligent assistants" for human drivers.

One significant sign that the smart cockpit has moved beyond simple electronics into the intelligent-assistant stage is that interaction between people and the cockpit has changed from passive to active. "Passive" and "active" are defined here from the cockpit's point of view: in the past, information exchange was mainly initiated by people, whereas now it can be initiated by either people or the machine. The level of human-machine interaction has therefore become an important yardstick for grading smart cockpit products.

Development background of human-computer interaction

The history of computers and mobile phones shows how interaction between machines and people has developed: from complex to simple, and from abstract operations to natural interaction. The most important future trend in human-computer interaction is for machines to move from passive response to active interaction. Extending this trend, the ultimate goal of human-machine interaction is to anthropomorphize machines, so that interacting with a machine is as natural and smooth as talking with another person. In other words, the history of human-computer interaction is a shift from people adapting to machines toward machines adapting to people.

Smart cockpits are following a similar path. As electronic technology advances and car owners' expectations rise, vehicles carry more and more electronic signals and functions inside and out, and interaction must evolve so that drivers waste less attention and are less distracted. As a result, in-car interaction has gradually shifted: physical knobs and buttons → digital touch screens → voice control → natural interaction.

Natural interaction is the ideal model for the next generation of human-computer interaction

What is natural interaction?

In short, natural interaction means communicating through movement, eye gaze, speech and so on. The modalities involved closely resemble human "perception": they combine several senses and correspond to the five human senses of sight, hearing, touch, smell and taste. The corresponding information media include sound, video and text, captured by various sensors such as microphones, cameras, infrared, pressure and radar sensors. A smart car is essentially a robot that carries people, and its two most critical functions are controlling itself and interacting with people. Without either one, it cannot work efficiently with people. An intelligent human-computer interaction system is therefore essential.

How to realize natural interaction

More and more sensors are being integrated into the cockpit, and they are improving in variety of form, data richness and accuracy. On the one hand, this makes the cockpit's demand for computing power leap forward; on the other, it provides stronger perception support. This trend makes richer cockpit scenarios and better interactive experiences possible. Visual processing is the key to cockpit human-computer interaction, and multimodal fusion is the real solution. For example, microphones alone are not enough for speech recognition in noisy conditions. In that situation a person can selectively listen to one speaker not only with the ears but also with the eyes. Likewise, by visually locating the sound source and reading lips, a system can achieve better results than voice recognition alone. If the sensors are a person's five senses, then computing power is the brain that drives interaction. AI algorithms combine vision and speech and, through various recognition methods, can process signals such as faces, movements, postures and voices. This enables more intelligent interaction with the person being observed, including eye tracking, speech recognition combined with lip reading, and driver fatigue detection.
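
The article does not say how audio and lip-reading results are combined. One common option is late fusion, where each recognizer scores the candidate commands and the scores are mixed with weights that depend on cabin noise. The sketch below assumes both recognizers already output per-command confidences in 0..1; the function name and the linear SNR-to-weight mapping are hypothetical and for illustration only.

```python
from typing import Dict


def fuse_command_scores(
    audio_scores: Dict[str, float],
    visual_scores: Dict[str, float],
    snr_db: float,
) -> str:
    """Pick the most likely command by late fusion of two recognizers.

    audio_scores:  per-command confidence from speech recognition (0..1)
    visual_scores: per-command confidence from lip reading (0..1)
    snr_db:        estimated cabin signal-to-noise ratio in decibels

    The linear SNR-to-weight mapping is an illustrative assumption.
    """
    # Clamp SNR to [0, 20] dB, then map it to an audio weight in [0.2, 0.9]:
    # the noisier the cabin, the more the visual channel is trusted.
    clamped = min(max(snr_db, 0.0), 20.0)
    w_audio = 0.2 + 0.7 * clamped / 20.0
    w_visual = 1.0 - w_audio

    candidates = set(audio_scores) | set(visual_scores)
    fused = {
        cmd: w_audio * audio_scores.get(cmd, 0.0) + w_visual * visual_scores.get(cmd, 0.0)
        for cmd in candidates
    }
    return max(fused, key=fused.get)


if __name__ == "__main__":
    audio = {"open window": 0.55, "open sunroof": 0.45}
    visual = {"open window": 0.2, "open sunroof": 0.8}
    print(fuse_command_scores(audio, visual, snr_db=2.0))   # noisy cabin: open sunroof
    print(fuse_command_scores(audio, visual, snr_db=20.0))  # quiet cabin: open window
```

With the same two recognizer outputs, the decision flips depending on noise: in a quiet cabin the audio channel wins, while in a noisy one the lip-reading channel decides.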

Cockpit interaction usually needs to be implemented with edge computing rather than cloud computing, for three reasons: safety, real-time performance and privacy. Cloud computing depends on the network, and a smart car's wireless connection cannot be guaranteed to be reliable. Data transmission latency is also uncontrollable, so smooth interaction cannot be guaranteed. To deliver a complete user experience for safety-related functions, the solution lies in edge computing.

Protecting personal information is another problem. The cabin is a private space, so privacy matters especially there. Today's personalized voice recognition is mainly implemented in the cloud, and private biometric information such as voiceprints can readily reveal a person's identity. With edge AI on the vehicle side, private biometric data such as images and audio can be converted into semantic information in the car before anything is uploaded to the cloud, which effectively protects occupants' personal information.
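
A minimal sketch of the "convert to semantics, then upload" idea, assuming on-device models have already produced a result record. The field names and the allowlist are hypothetical. The design choice is an allowlist (default deny) rather than a blocklist: any new raw-sensor field added later is excluded automatically unless someone explicitly approves it.

```python
import json
from typing import Any, Dict

# Hypothetical field names. Only these semantic fields may leave the vehicle;
# anything not listed (raw images, audio, voiceprint vectors) is dropped.
UPLOAD_ALLOWLIST = frozenset({"event", "profile_id", "intent", "timestamp"})


def build_upload_payload(on_device_result: Dict[str, Any]) -> str:
    """Serialize only allow-listed semantic fields for upload to the cloud."""
    payload = {k: v for k, v in on_device_result.items() if k in UPLOAD_ALLOWLIST}
    return json.dumps(payload, sort_keys=True)


if __name__ == "__main__":
    result = {
        "event": "voice_command",
        "profile_id": "owner",            # produced by on-device voiceprint matching
        "intent": "navigate_home",
        "timestamp": 1700000000,
        "voice_audio": b"\x00\x01",       # raw audio: never uploaded
        "voiceprint_embedding": [0.12, -0.48, 0.33],  # biometric template: never uploaded
    }
    print(build_upload_payload(result))
```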

In the era of autonomous driving, interactive intelligence must match driving intelligence

In the foreseeable future, human-machine co-driving will be a long-standing reality, and in-cockpit human-machine interaction will become the primary interface through which people stay in command of driving. Intelligent driving is currently evolving unevenly: human-computer interaction lags behind advances in autonomous driving, which leads to frequent problems and holds back autonomous driving's development. Human-machine co-driving is characterized by keeping a human in the operating loop, so human-computer interaction functions must keep pace with autonomous driving functions. Failing to do so creates serious safety-of-the-intended-functionality (SOTIF) risks, which are associated with the vast majority of fatal autonomous driving incidents. Once the interface can present the driving system's own perception and decision results, drivers can better understand the system's capability boundary, which will greatly help improve acceptance of L-level autonomous driving functions.

Of course, current smart cockpit interaction is mainly an extension of the Android mobile ecosystem and relies chiefly on the head-unit screen. Screens keep getting larger, and in practice this is because low-priority functions take space from high-priority ones, adding visual interference and affecting operational safety. Physical displays will still exist, but I believe they will eventually give way to natural human-computer interaction through AR-HUD.

If intelligent driving reaches L4 or above, people will be freed from boring and tiring driving, and the car will become people's "third living space". The layout of the cabin's entertainment area and safety functional area (human-computer interaction and automated control) will then change, and the safety area will become the main control area. Autonomous driving is the interaction between the car and its environment, while human-computer interaction is the interaction between people and the car. Integrating the two brings people, car and environment into collaboration and forms a complete closed driving loop.

Second, an AR-HUD dialogue interface is safer. When the driver communicates by voice or gesture, it avoids diverting the driver's attention, thereby improving driving safety. This is simply not possible with a large cockpit screen, whereas an AR-HUD avoids the problem while also displaying the autonomous driving system's perception signals.

Third, natural conversation is implicit, concise and emotional. It does not occupy much valuable physical space in the car, yet it can accompany occupants anytime and anywhere. Therefore, integrating smart driving and the smart cockpit within one domain will be a safer path of development, ultimately leading to a central computing system for the whole vehicle.

Practical principles of human-computer interaction

Touch interaction

Early center-console screens displayed only radio information, and most of the console was taken up by a large number of physical buttons. These buttons interacted with people essentially through touch.

With the development of intelligent interaction, large central control screens appeared and physical buttons began to disappear. The central screen has grown ever larger and more important, and the physical buttons on the center console have been reduced to none. Occupants can then no longer interact with the car by feel; interaction shifts to vision, and people operate the car mainly by looking rather than touching. But relying on vision alone to interact with the smart cockpit is very inconvenient. Especially while driving, 90% of a driver's visual attention must be devoted to the road, so drivers cannot keep their eyes on the screen for long to interact with the cockpit.

Voice interaction

(1) Principle of voice interaction.

Speech recognition (converting speech to text) → natural language understanding → speech synthesis (converting the reply text back into speech).
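
The three-stage chain can be sketched as a small pipeline with pluggable stages. This is an illustration under assumptions, not a real in-car API: the class and stage names are hypothetical, a `respond` step (deciding what to do and say) is added between understanding and synthesis, and the toy stages simply treat "audio" as UTF-8 text so the example runs without speech engines.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class VoicePipeline:
    """Chains the stages; each stage is a pluggable callable."""
    asr: Callable[[bytes], str]               # speech recognition: audio -> text
    nlu: Callable[[str], Dict[str, str]]      # language understanding: text -> intent
    respond: Callable[[Dict[str, str]], str]  # act on the intent, produce reply text
    tts: Callable[[str], bytes]               # speech synthesis: reply text -> audio

    def handle(self, audio: bytes) -> bytes:
        text = self.asr(audio)
        intent = self.nlu(text)
        reply = self.respond(intent)
        return self.tts(reply)


if __name__ == "__main__":
    # Toy stand-ins for real engines: "audio" is just UTF-8 text here.
    pipeline = VoicePipeline(
        asr=lambda audio: audio.decode("utf-8"),
        nlu=lambda text: {"intent": "volume_up"} if "louder" in text else {"intent": "unknown"},
        respond=lambda i: "Turning the volume up." if i["intent"] == "volume_up" else "Sorry?",
        tts=lambda reply: reply.encode("utf-8"),
    )
    print(pipeline.handle(b"make it louder"))
```

Keeping each stage behind a plain callable makes it easy to swap a cloud engine for an on-device one, which matters given the edge-computing argument earlier in the article.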

(2) Voice interaction scenarios.

Voice control scenarios rest on two main ideas. First, voice can reach functions that have no on-screen prompt on the touch screen and allows a natural dialogue with the human-machine interface. Second, it minimizes the need to operate the interface by hand, which improves safety.

First, on the way home from work, you may want to quickly control the vehicle, look up information, and adjust the air conditioning, seats and so on while driving. On long journeys, you may want to find service areas and gas stations along the way and check your schedule. Second, voice can link everything: music and secondary-screen entertainment in the car can be summoned quickly.

The first scenario is quick vehicle control. Basic functions include adjusting the ambient lighting, volume, air-conditioning temperature, windows and rearview mirrors. The aim is to let the driver control the vehicle more quickly, reducing distraction and improving driving safety. Voice interaction is an important entry point to the whole system, because the system must understand the driver's spoken instructions to provide intelligent navigation. It should not only accept tasks passively but also offer additional services such as destination information and schedule planning.
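
To make "understand the driver's voice instructions" concrete for quick vehicle control, here is a rule-based sketch that maps a transcribed utterance to an intent with slots. The English command grammar and intent names are hypothetical; production systems generally use trained language-understanding models rather than a few regular expressions.

```python
import re
from typing import Any, Dict, Optional

# Hypothetical English command grammar covering a few quick-control functions.
_TEMP = re.compile(r"set (?:the )?temperature to (\d{2}(?:\.\d)?)")
_WINDOW = re.compile(r"(open|close) (?:the )?(driver|passenger) window")
_VOLUME = re.compile(r"turn (?:the )?volume (up|down)")


def parse_command(utterance: str) -> Optional[Dict[str, Any]]:
    """Map a transcribed utterance to an intent with slots, or None."""
    text = utterance.lower()
    if m := _TEMP.search(text):
        return {"intent": "set_cabin_temp", "celsius": float(m.group(1))}
    if m := _WINDOW.search(text):
        return {"intent": "window", "action": m.group(1), "position": m.group(2)}
    if m := _VOLUME.search(text):
        return {"intent": "volume", "direction": m.group(1)}
    return None  # not a quick-control command; hand over to other handlers


if __name__ == "__main__":
    print(parse_command("Please set the temperature to 22"))
    print(parse_command("Open the driver window"))
```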

Next comes monitoring of the vehicle and the driver. While driving, the vehicle's status, such as tire pressure, tank temperature, coolant and engine oil, can be queried at any time. Real-time queries help drivers deal with issues in advance, and the system should also alert the driver immediately when a value reaches a warning threshold. Besides the vehicle itself, the driver also needs to be monitored: combining biometric and voice monitoring can gauge the driver's emotional state, prompt the driver to stay alert at the right moment to avoid accidents, and warn of fatigue caused by long drives. Finally, for multimedia entertainment, playing music and radio are the most frequent operations and needs while driving. Beyond simple functions such as play, pause and skipping tracks, personalized functions such as favorites, account registration, play history, changing the play order and live interaction remain to be developed.
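
Alerting "when reaching the warning critical point" can be as simple as comparing each reading against a lower and upper limit. The signal names and limit values below are illustrative assumptions only; real limits come from the vehicle manufacturer, and production systems typically add hysteresis so a value hovering at the limit does not trigger repeated alerts.

```python
from typing import Dict, List, Tuple

# Illustrative (low, high) limits only; real values come from the manufacturer.
LIMITS: Dict[str, Tuple[float, float]] = {
    "tire_pressure_kpa": (180.0, 300.0),
    "coolant_temp_c": (-40.0, 110.0),
    "oil_level_pct": (20.0, 100.0),
}


def status_warnings(readings: Dict[str, float]) -> List[str]:
    """Return a warning for every reading outside its limits."""
    warnings = []
    for name in sorted(readings):
        if name not in LIMITS:
            continue  # no limit defined for this signal
        low, high = LIMITS[name]
        value = readings[name]
        if value < low:
            warnings.append(f"{name} low: {value}")
        elif value > high:
            warnings.append(f"{name} high: {value}")
    return warnings


if __name__ == "__main__":
    print(status_warnings({"tire_pressure_kpa": 165.0, "coolant_temp_c": 92.0, "oil_level_pct": 15.0}))
```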

Accommodating Errors

Voice dialogue must include fault-tolerance mechanisms, and basic fault tolerance is handled scenario by scenario. In the first case, the system does not understand the user and asks the user to say it again. In the second, the system understands the request but cannot handle it, and says so. In the third, the recognition result may be wrong, so the system asks the user to confirm.
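
The three cases can be sketched as a reply policy that runs after recognition and understanding. The supported-intent set, the confidence threshold and the reply wording are hypothetical choices for illustration.

```python
from typing import Optional

# Hypothetical intents the in-car assistant can execute.
SUPPORTED_INTENTS = frozenset({"set_cabin_temp", "window", "volume", "navigate"})


def fault_tolerant_reply(intent: Optional[str], confidence: float, confirm_below: float = 0.75) -> str:
    """Choose a reply for the three error cases in the text."""
    # Case 1: nothing was understood, so ask the user to repeat.
    if intent is None:
        return "Sorry, I didn't catch that. Could you say it again?"
    # Case 2: understood, but the assistant cannot handle this request.
    if intent not in SUPPORTED_INTENTS:
        return "Sorry, I can't help with that yet."
    # Case 3: the result may be a misrecognition, so confirm before acting.
    if confidence < confirm_below:
        return f"Did you mean '{intent}'? Please confirm."
    return f"OK, executing '{intent}'."
```

Checking "unsupported" before "low confidence" avoids asking the user to confirm a request the system could not carry out anyway.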

Face recognition

(1) Principle of face recognition.

Facial recognition in the cockpit generally involves three steps: face detection, facial feature extraction and pattern recognition (matching the features against known identities). As online identity increasingly relies on biometrics, facial information is registered on multiple platforms, and the car is a key node in the Internet of Everything. As more mobile usage scenarios move into the car, account registration and identity authentication also need to be performed in the car.
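
The matching step is often done by comparing a feature vector (embedding) of the detected face with the templates stored at enrollment. The sketch below assumes the detection and feature-extraction models already exist and output fixed-length vectors; the 3-element vectors, profile names and the 0.8 threshold are hypothetical, and real embeddings are much longer.

```python
import math
from typing import Dict, List, Optional


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two feature vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def identify(probe: List[float], enrolled: Dict[str, List[float]], threshold: float = 0.8) -> Optional[str]:
    """Return the enrolled profile most similar to `probe`, or None if no
    profile reaches `threshold` (treat the person as unregistered)."""
    best_id: Optional[str] = None
    best_score = threshold
    for profile_id, template in enrolled.items():
        score = cosine_similarity(probe, template)
        if score >= best_score:
            best_id, best_score = profile_id, score
    return best_id


if __name__ == "__main__":
    enrolled = {"owner": [1.0, 0.0, 0.0], "family_member": [0.0, 1.0, 0.0]}
    print(identify([0.9, 0.1, 0.0], enrolled))
```

Returning None below the threshold matters for the login scenario: an unknown face should fall back to another authentication method instead of being assigned to the closest profile.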

(2) Face recognition usage scenarios.

Before driving, the system verifies the owner's identity when they get in and logs in to their application account. While driving, facial recognition is mainly used to detect fatigue (eyes closed, yawning), remind the driver about phone use, and warn when the driver is not looking at the road.
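
The article does not name a fatigue-detection method. One widely used approach, swapped in here for illustration, computes the eye aspect ratio (EAR) from six eye landmarks per frame and then PERCLOS, the fraction of frames in a time window in which the eye is closed. The landmark ordering is an assumption, and the 0.2 and 0.3 thresholds are illustrative, not calibrated values.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR from six eye landmarks p1..p6.

    p1 and p4 are the eye corners; p2, p3 lie on the upper lid and
    p6, p5 on the lower lid directly below them. EAR drops toward 0
    as the eye closes.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def perclos(ear_series: Sequence[float], closed_below: float = 0.2) -> float:
    """Fraction of frames in the window in which the eye counts as closed."""
    if not ear_series:
        return 0.0
    closed = sum(1 for ear in ear_series if ear < closed_below)
    return closed / len(ear_series)


def is_drowsy(ear_series: Sequence[float], closed_below: float = 0.2, perclos_limit: float = 0.3) -> bool:
    # Both thresholds are illustrative; real systems calibrate them per driver and camera.
    return perclos(ear_series, closed_below) > perclos_limit


if __name__ == "__main__":
    open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
    print(eye_aspect_ratio(open_eye))
    print(is_drowsy([0.3, 0.1, 0.1, 0.3]))
```

Yawning could be detected in a similar way with a mouth aspect ratio over mouth landmarks; that part is not shown.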

Any single interaction method has drawbacks for the driver. For example, voice alone is prone to misrecognition, and with touch operation alone the driver cannot satisfy the 3-second principle. Only when voice, gestures, vision and other methods are integrated can the intelligent system communicate with the driver accurately, conveniently and safely across scenarios.

Challenges and future of human-computer interaction

Challenges of human-computer interaction

Ideal natural interaction starts from the user's experience and delivers a safe, smooth and predictable interactive experience. But ideals must be grounded in reality, and many challenges remain.

At present, misrecognition in natural interaction is still a serious problem, and reliability and accuracy across all operating conditions and all weather are far from sufficient. In gesture recognition, for example, vision-based recognition rates are still low, so better algorithms are needed to improve recognition accuracy and speed. Unintentional gestures may be mistaken for commands, and this is only one of countless possible misunderstandings. While the vehicle is moving, changing light and shadows, vibration and occlusion are all major technical problems. To reduce misrecognition, several technical means must be combined, such as cross-verification through multi-sensor fusion and voice confirmation, matched to the operating scenario. Second, the smoothness of natural interaction is still a difficulty to overcome, requiring more advanced sensors, more powerful capabilities and more efficient computing. Meanwhile, natural language processing and intent understanding are still in their infancy and need in-depth algorithmic research.
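
Two of the mitigation ideas above can be sketched together: a temporal filter that only accepts a gesture after it persists across several consecutive frames (so a brief, unintentional hand movement is ignored), and a voice confirmation for gestures with significant effects. The frame count, gesture labels and the set of gestures needing confirmation are hypothetical.

```python
from typing import Iterable, Optional

# Hypothetical set of gestures whose effect is significant enough to
# require an extra spoken confirmation before execution.
NEEDS_VOICE_CONFIRMATION = frozenset({"open_sunroof"})


def debounced_gesture(frames: Iterable[Optional[str]], min_frames: int = 5) -> Optional[str]:
    """Return a gesture only once it has been seen in `min_frames`
    consecutive frames, so brief unintentional hand movements are ignored.
    A frame is None when no gesture was detected."""
    current: Optional[str] = None
    run = 0
    for label in frames:
        if label is not None and label == current:
            run += 1
        else:
            current = label
            run = 0 if label is None else 1
        if current is not None and run >= min_frames:
            return current
    return None


def accept_gesture(
    frames: Iterable[Optional[str]],
    voice_reply: Optional[str] = None,
    min_frames: int = 5,
) -> Optional[str]:
    """Debounce the gesture, then require a spoken "yes" for high-impact ones."""
    gesture = debounced_gesture(frames, min_frames)
    if gesture is None:
        return None
    if gesture in NEEDS_VOICE_CONFIRMATION and voice_reply != "yes":
        return None
    return gesture
```

Raising `min_frames` trades responsiveness for fewer false triggers, which is the same accuracy-versus-smoothness tension the paragraph describes.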

In the future, cockpit human-computer interaction will move toward the virtual world and emotional connection

One reason consumers are willing to pay for intelligent functions beyond basic mobility is the interaction and experience they offer. As mentioned above, future smart cockpits will be people-centered and will evolve into the third space in people's lives.

This kind of human-computer interaction is by no means simple call-and-response but a multi-channel, multi-level, multimodal communication experience. From the occupants' perspective, future smart cockpit interaction systems will use intelligent voice as the primary communication method, with touch, gestures, body movements, facial expressions and so on as auxiliary methods, freeing occupants' hands and eyes and reducing the risks of manual operation by the driver.

As the number of cockpit sensors grows, the focus of human-computer interaction will inevitably shift from the driver to all occupants. The smart cockpit can build a virtual space, and natural interaction will bring new immersive extended-reality entertainment experiences. Powerful computing hardware combined with the cockpit's interactive devices can build an in-car metaverse offering all kinds of immersive games. The smart cockpit may be a good carrier for the metaverse.

Natural human-machine interaction also brings emotional connection. The cockpit becomes a companion, and a more intelligent one: it learns the owner's behavior, habits and preferences, senses the environment inside the cabin, and, combined with the vehicle's current location, proactively offers information and function suggestions when needed. With the development of artificial intelligence, we may see machines gradually take part in human emotional life within our lifetimes. Ensuring that technology is used for good may then be another major issue we must face. Either way, technology will develop in this direction.

Summary of human-computer interaction in intelligent cockpits

Amid fierce competition in today's automobile industry, intelligent cockpit systems have become key to differentiating automakers' products. Because cockpit human-computer interaction is closely tied to people's communication habits, language and culture, it needs to be highly localized. Human-computer interaction in intelligent vehicles is an important breakthrough point for the brand upgrading of Chinese intelligent vehicle companies and an opportunity for China's intelligent vehicle technology to lead global technology trends.

Integrating these interaction methods will provide a more comprehensive immersive experience and continue to drive new interaction methods and technologies to maturity, which are expected to evolve from today's experience-enhancing extras into must-have features of future smart cockpits. Smart cockpit interaction technology is expected to cover a wide range of travel needs, from basic safety needs to deeper psychological needs for belonging and self-fulfillment.

The above is the detailed content of A brief analysis of the development of human-computer interaction in smart cockpits. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

Smart App Control on Windows 11: How to turn it on or off

Jun 06, 2023 pm 11:10 PM

Smart App Control on Windows 11: How to turn it on or off

Jun 06, 2023 pm 11:10 PM

Intelligent App Control is a very useful tool in Windows 11 that helps protect your PC from unauthorized apps that can damage your data, such as ransomware or spyware. This article explains what Smart App Control is, how it works, and how to turn it on or off in Windows 11. What is Smart App Control in Windows 11? Smart App Control (SAC) is a new security feature introduced in the Windows 1122H2 update. It works with Microsoft Defender or third-party antivirus software to block potentially unnecessary apps that can slow down your device, display unexpected ads, or perform other unexpected actions. Smart application

The facial features are flying around, opening the mouth, staring, and raising eyebrows, AI can imitate them perfectly, making it impossible to prevent video scams

Dec 14, 2023 pm 11:30 PM

The facial features are flying around, opening the mouth, staring, and raising eyebrows, AI can imitate them perfectly, making it impossible to prevent video scams

Dec 14, 2023 pm 11:30 PM

With such a powerful AI imitation ability, it is really impossible to prevent it. It is completely impossible to prevent it. Has the development of AI reached this level now? Your front foot makes your facial features fly, and on your back foot, the exact same expression is reproduced. Staring, raising eyebrows, pouting, no matter how exaggerated the expression is, it is all imitated perfectly. Increase the difficulty, raise the eyebrows higher, open the eyes wider, and even the mouth shape is crooked, and the virtual character avatar can perfectly reproduce the expression. When you adjust the parameters on the left, the virtual avatar on the right will also change its movements accordingly to give a close-up of the mouth and eyes. The imitation cannot be said to be exactly the same, but the expression is exactly the same (far right). The research comes from institutions such as the Technical University of Munich, which proposes GaussianAvatars, which

MotionLM: Language modeling technology for multi-agent motion prediction

Oct 13, 2023 pm 12:09 PM

MotionLM: Language modeling technology for multi-agent motion prediction

Oct 13, 2023 pm 12:09 PM

This article is reprinted with permission from the Autonomous Driving Heart public account. Please contact the source for reprinting. Original title: MotionLM: Multi-Agent Motion Forecasting as Language Modeling Paper link: https://arxiv.org/pdf/2309.16534.pdf Author affiliation: Waymo Conference: ICCV2023 Paper idea: For autonomous vehicle safety planning, reliably predict the future behavior of road agents is crucial. This study represents continuous trajectories as sequences of discrete motion tokens and treats multi-agent motion prediction as a language modeling task. The model we propose, MotionLM, has the following advantages: First

Do you know that programmers will be in decline in a few years?

Nov 08, 2023 am 11:17 AM

Do you know that programmers will be in decline in a few years?

Nov 08, 2023 am 11:17 AM

"ComputerWorld" magazine once wrote an article saying that "programming will disappear by 1960" because IBM developed a new language FORTRAN, which allows engineers to write the mathematical formulas they need and then submit them. Give the computer a run, so programming ends. A few years later, we heard a new saying: any business person can use business terms to describe their problems and tell the computer what to do. Using this programming language called COBOL, companies no longer need programmers. . Later, it is said that IBM developed a new programming language called RPG that allows employees to fill in forms and generate reports, so most of the company's programming needs can be completed through it.

GR-1 Fourier Intelligent Universal Humanoid Robot is about to start pre-sale!

Sep 27, 2023 pm 08:41 PM

GR-1 Fourier Intelligent Universal Humanoid Robot is about to start pre-sale!

Sep 27, 2023 pm 08:41 PM

The humanoid robot is 1.65 meters tall, weighs 55 kilograms, and has 44 degrees of freedom in its body. It can walk quickly, avoid obstacles quickly, climb steadily up and down slopes, and resist impact interference. You can now take it home! Fourier Intelligence's universal humanoid robot GR-1 has started pre-sale. Robot Lecture Hall Fourier Intelligence's Fourier GR-1 universal humanoid robot has now opened for pre-sale. GR-1 has a highly bionic trunk configuration and anthropomorphic motion control. The whole body has 44 degrees of freedom. It has the ability to walk, avoid obstacles, cross obstacles, go up and down slopes, resist interference, and adapt to different road surfaces. It is a general artificial intelligence system. Ideal carrier. Official website pre-sale page: www.fftai.cn/order#FourierGR-1# Fourier Intelligence needs to be rewritten.

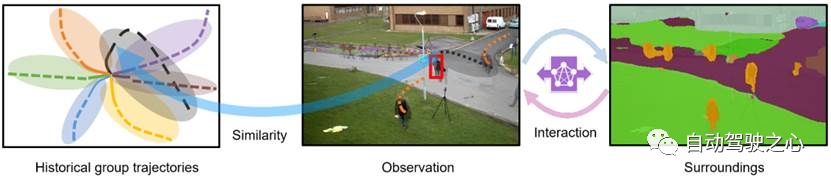

What are the effective methods and common Base methods for pedestrian trajectory prediction? Top conference papers sharing!

Oct 17, 2023 am 11:13 AM

What are the effective methods and common Base methods for pedestrian trajectory prediction? Top conference papers sharing!

Oct 17, 2023 am 11:13 AM

Trajectory prediction has been gaining momentum in the past two years, but most of it focuses on the direction of vehicle trajectory prediction. Today, Autonomous Driving Heart will share with you the algorithm for pedestrian trajectory prediction on NeurIPS - SHENet. In restricted scenes, human movement patterns are usually To a certain extent, it conforms to limited rules. Based on this assumption, SHENet predicts a person's future trajectory by learning implicit scene rules. The article has been authorized to be original by Autonomous Driving Heart! The author's personal understanding is that currently predicting a person's future trajectory is still a challenging problem due to the randomness and subjectivity of human movement. However, human movement patterns in constrained scenes often vary due to scene constraints (such as floor plans, roads, and obstacles) and human-to-human or human-to-object interactivity.

An article discussing the application of SLAM technology in autonomous driving

Apr 09, 2023 pm 01:11 PM

An article discussing the application of SLAM technology in autonomous driving

Apr 09, 2023 pm 01:11 PM

Positioning occupies an irreplaceable position in autonomous driving, and there is promising development in the future. Currently, positioning in autonomous driving relies on RTK and high-precision maps, which adds a lot of cost and difficulty to the implementation of autonomous driving. Just imagine that when humans drive, they do not need to know their own global high-precision positioning and the detailed surrounding environment. It is enough to have a global navigation path and match the vehicle's position on the path. What is involved here is the SLAM field. key technologies. What is SLAMSLAM (Simultaneous Localization and Mapping), also known as CML (Concurrent Mapping and Localiza

Huawei will launch the Xuanji sensing system in the field of smart wearables, which can assess the user's emotional state based on heart rate

Aug 29, 2024 pm 03:30 PM

Huawei will launch the Xuanji sensing system in the field of smart wearables, which can assess the user's emotional state based on heart rate

Aug 29, 2024 pm 03:30 PM

Recently, Huawei announced that it will launch a new smart wearable product equipped with Xuanji sensing system in September, which is expected to be Huawei's latest smart watch. This new product will integrate advanced emotional health monitoring functions. The Xuanji Perception System provides users with a comprehensive health assessment with its six characteristics - accuracy, comprehensiveness, speed, flexibility, openness and scalability. The system uses a super-sensing module and optimizes the multi-channel optical path architecture technology, which greatly improves the monitoring accuracy of basic indicators such as heart rate, blood oxygen and respiration rate. In addition, the Xuanji Sensing System has also expanded the research on emotional states based on heart rate data. It is not limited to physiological indicators, but can also evaluate the user's emotional state and stress level. It supports the monitoring of more than 60 sports health indicators, covering cardiovascular, respiratory, neurological, endocrine,