The latest deep architecture for object detection has half the parameters and is 3 times faster

Brief introduction

The authors propose Matrix Nets (xNets), a new deep architecture for object detection. xNets map objects of different sizes and aspect ratios into separate network layers, so that within each layer the objects are nearly uniform in size and aspect ratio. xNets therefore provide a scale- and aspect-ratio-aware architecture. The researchers use xNets to enhance keypoint-based object detection. The new architecture reaches 47.8 mAP on the MS COCO dataset, which is higher than any other single-shot detector. It also uses half the parameters of the next best framework and trains 3 times faster.

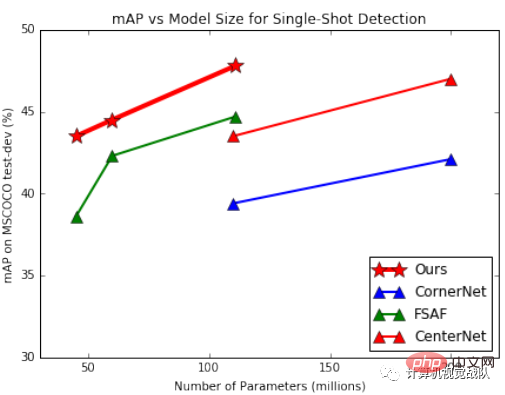

Simple result display

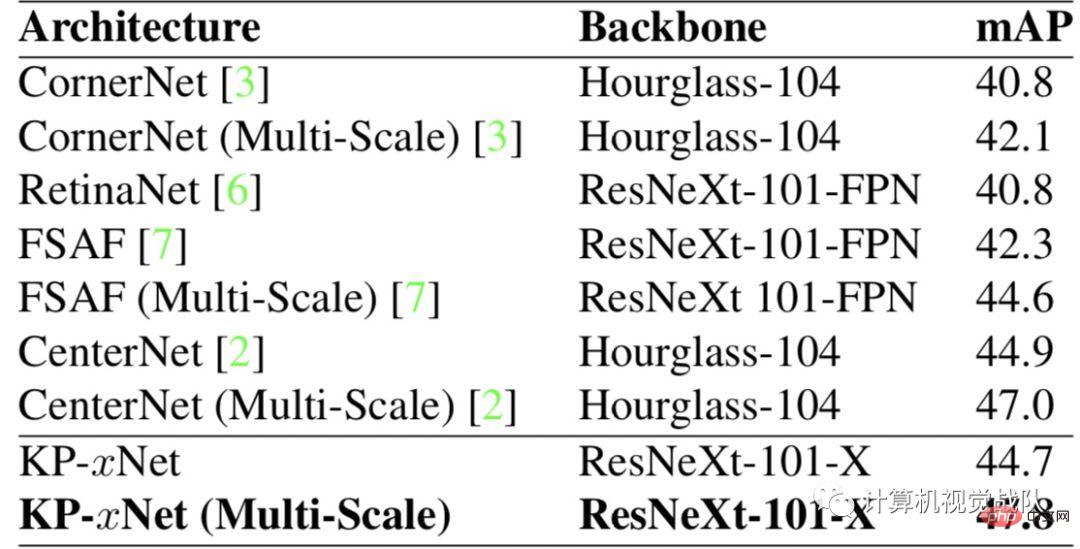

As the figure above shows, xNet achieves higher accuracy for its parameter count than the other models. Among the baselines, FSAF performs best of the anchor-based detectors, surpassing the classic RetinaNet. The researchers' model outperforms all other single-shot architectures with a similar number of parameters.

Background and current situation

Object detection is one of the most widely studied tasks in computer vision. It underpins many other vision tasks, such as object tracking, instance segmentation and image captioning. Object detection architectures fall into two categories: single-shot detectors and two-stage detectors. Two-stage detectors use a region proposal network to find a fixed number of object candidates. A second network then predicts a score for each candidate and refines its bounding box.

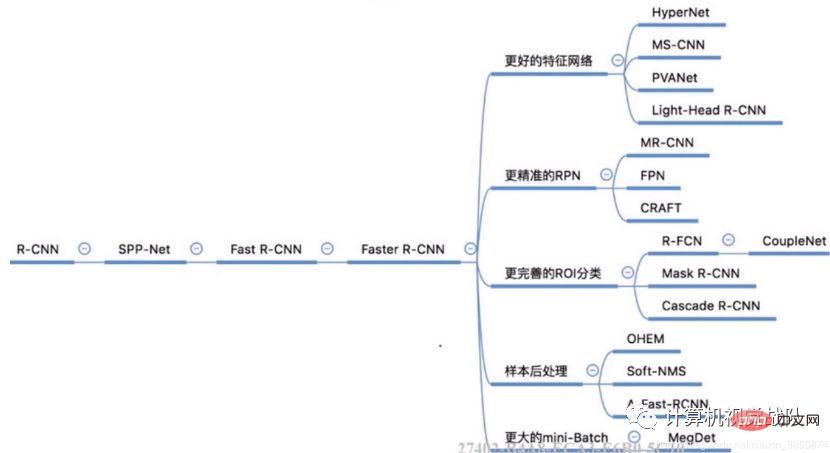

Common two-stage algorithms

Single-shot detectors can also be divided into two categories: anchor-based detectors and keypoint-based detectors. Anchor-based detectors place many anchor boxes over the image and predict an offset and a class for each anchor. The best-known anchor-based architecture is RetinaNet, which introduced the focal loss to address the class imbalance among anchor boxes. The best-performing anchor-based detector is FSAF, which combines anchor-based outputs with anchor-free output heads to further improve performance.

Keypoint-based detectors, on the other hand, predict heatmaps for the top-left and bottom-right corners and match the corners using feature embeddings. The original keypoint-based detector is CornerNet, which uses a special corner pooling layer to accurately detect objects of different sizes. Since then, CenterNet has greatly improved on the CornerNet architecture by predicting object centers in addition to corners.

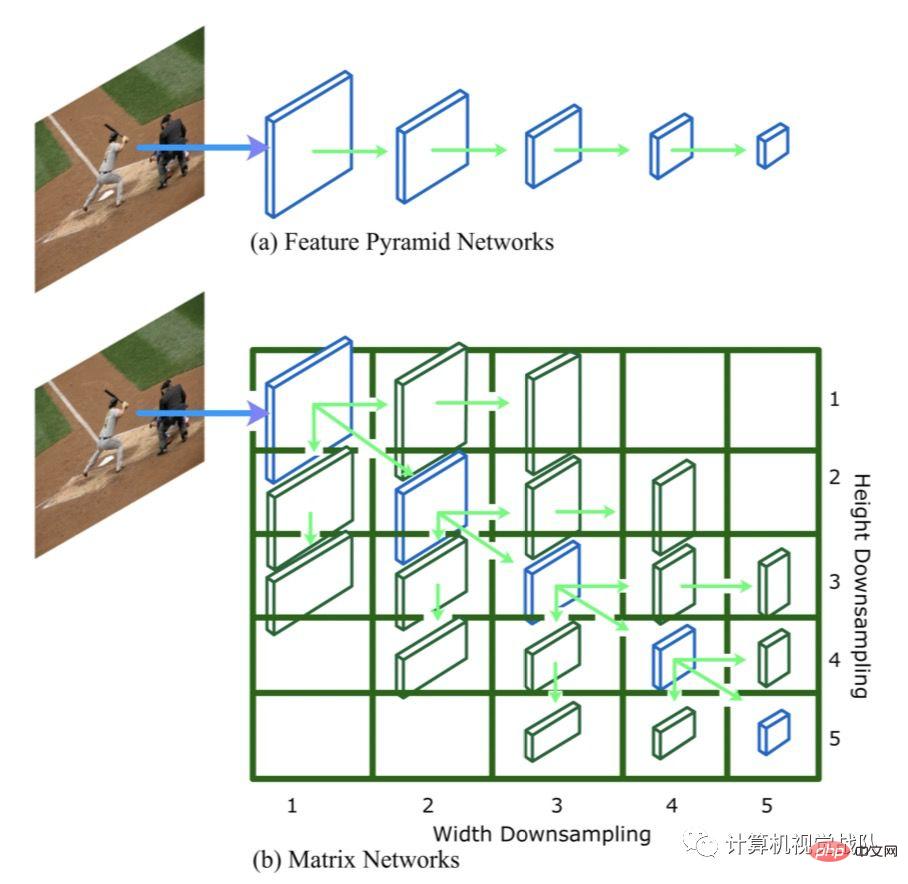

Matrix Nets

The figure below shows Matrix Nets (xNets), which use a hierarchical matrix of layers to model objects of different sizes and aspect ratios. Each entry (i, j) of the matrix represents a layer li,j, where i indexes the row and j the column. Relative to the top-left layer l1,1, layer li,j is downsampled by 2^(j-1) in width and by 2^(i-1) in height. The diagonal layers are square layers of different sizes, equivalent to an FPN, while the off-diagonal layers are rectangular (this is unique to xNets). Layer l1,1 is the largest layer; each step to the right halves the layer's width, and each step down halves its height.

For example, layer l3,4 is half the width of layer l3,3. Diagonal layers model objects whose aspect ratio is close to square, while off-diagonal layers model objects whose aspect ratio is far from square. Layers near the upper-right or lower-left corner of the matrix model objects with extremely high or low aspect ratios. Such objects are very rare, so these layers can be pruned to improve efficiency.
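The layout rules above can be made concrete with a small shape calculator. This is a minimal sketch, not the authors' code. It assumes that i is the row and j the column, that l1,1 has a known spatial size, and that every layer more than `max_offset` steps off the diagonal is pruned. The names `matrix_layer_shapes` and `max_offset` and the 128x128 / 5x5 values are hypothetical.

```python
def matrix_layer_shapes(base_w, base_h, n, max_offset):
    """Return {(i, j): (width, height)} for an n x n xNet-style layer matrix.

    Illustrative assumptions (hypothetical, not from the paper's code):
    - i is the 1-based row index, j the 1-based column index;
    - l_{1,1} has spatial size base_w x base_h;
    - each step right halves the width, each step down halves the height;
    - layers with |i - j| > max_offset (extreme aspect ratios) are pruned.
    """
    shapes = {}
    for i in range(1, n + 1):
        for j in range(1, n + 1):
            if abs(i - j) > max_offset:
                continue  # corner layers model very rare aspect ratios: prune them
            width = base_w // 2 ** (j - 1)
            height = base_h // 2 ** (i - 1)
            shapes[(i, j)] = (width, height)
    return shapes


shapes = matrix_layer_shapes(128, 128, n=5, max_offset=2)
print(shapes[(3, 3)], shapes[(3, 4)])  # (32, 32) (16, 32): l3,4 is half as wide as l3,3
print((1, 5) in shapes)                # False: far off-diagonal layer pruned
print(len(shapes))                     # 19 of the 25 layers are kept
```

The diagonal entries reproduce an FPN-like pyramid of square layers. Each off-diagonal entry shrinks only one dimension.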

1. Layer Generation

Generating the matrix layers is a critical step because it affects the number of model parameters. More parameters make the model more expressive but also make optimization harder, so the researchers chose to introduce as few new parameters as possible. The diagonal layers can be taken from different stages of the backbone or from a feature pyramid framework. The upper-triangular layers are obtained by applying a series of shared 3x3 convolutions with stride 1x2 to the diagonal layers. Similarly, the lower-triangular layers are obtained with a shared 3x3 convolution with stride 2x1. Parameters are shared between all of these downsampling convolutions to minimize the number of new parameters.
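The layer-generation step can be illustrated with plain Python on a single-channel feature map. This is only a sketch of the shapes and of the weight sharing. A real implementation would use multi-channel, learned convolutions in a deep learning framework. The zero padding of 1, the averaging kernel and the helper names `conv3x3` and `derive_layers` are assumptions made for illustration.

```python
def conv3x3(feature, kernel, stride_h, stride_w):
    """Naive single-channel 3x3 convolution with zero padding of 1."""
    h, w = len(feature), len(feature[0])
    out_h = (h - 1) // stride_h + 1  # = floor((h + 2*1 - 3) / stride_h) + 1
    out_w = (w - 1) // stride_w + 1
    out = []
    for oy in range(out_h):
        row = []
        for ox in range(out_w):
            acc = 0.0
            for ky in range(3):
                for kx in range(3):
                    y = oy * stride_h + ky - 1  # -1 accounts for the zero padding
                    x = ox * stride_w + kx - 1
                    if 0 <= y < h and 0 <= x < w:
                        acc += kernel[ky][kx] * feature[y][x]
            row.append(acc)
        out.append(row)
    return out


def derive_layers(diagonal, shared_kernel, steps, stride_h, stride_w):
    """Derive off-diagonal layers from one diagonal layer.

    Stride (1, 2) walks right along a row (width halves at each step);
    stride (2, 1) walks down a column (height halves at each step).
    The same kernel is reused at every step, so no per-layer parameters are added.
    """
    layers = [diagonal]
    for _ in range(steps):
        layers.append(conv3x3(layers[-1], shared_kernel, stride_h, stride_w))
    return layers


# Hypothetical 16x16 diagonal layer and one shared averaging kernel.
diag = [[float(x + y) for x in range(16)] for y in range(16)]
kernel = [[1.0 / 9] * 3 for _ in range(3)]
right = derive_layers(diag, kernel, 2, 1, 2)  # upper-triangular neighbours
down = derive_layers(diag, kernel, 2, 2, 1)   # lower-triangular neighbours
print([(len(l), len(l[0])) for l in right])   # [(16, 16), (16, 8), (16, 4)]
print([(len(l), len(l[0])) for l in down])    # [(16, 16), (8, 16), (4, 16)]
```

Because one kernel serves every downsampling step, adding more off-diagonal layers adds computation but no new weights. This mirrors the parameter-sharing argument above.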

2. Layer range

Each layer in the matrix models objects within a certain range of widths and heights, so we need to define the width and height ranges of the objects assigned to each layer. These ranges need to reflect the receptive field of the layer's feature vectors. Each step to the right in the matrix effectively doubles the receptive field in the horizontal dimension, and each step down doubles it in the vertical dimension. So as we move right or down in the matrix, the width or height range, respectively, needs to double. Once the range for the first layer l1,1 is defined, these rules generate the ranges for all other matrix layers.
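Below is a minimal sketch of the doubling rule and of assigning a ground-truth box to a layer. It assumes half-open, non-overlapping ranges, so each box lands in at most one layer. It also uses the same row/column convention as above. The l1,1 range of [24, 48) pixels and the helper names are hypothetical, and the paper's actual ranges may differ.

```python
def layer_ranges(base_w_range, base_h_range, n):
    """Width/height ranges for every layer l_{i,j}, doubling per step right/down."""
    (w_lo, w_hi), (h_lo, h_hi) = base_w_range, base_h_range
    ranges = {}
    for i in range(1, n + 1):
        for j in range(1, n + 1):
            wf = 2 ** (j - 1)  # each step right doubles the width range
            hf = 2 ** (i - 1)  # each step down doubles the height range
            ranges[(i, j)] = ((w_lo * wf, w_hi * wf), (h_lo * hf, h_hi * hf))
    return ranges


def assign_layer(box_w, box_h, ranges):
    """Return the (i, j) layer whose half-open ranges contain the box, else None."""
    for (i, j), ((wl, wh), (hl, hh)) in ranges.items():
        if wl <= box_w < wh and hl <= box_h < hh:
            return (i, j)
    return None


ranges = layer_ranges((24, 48), (24, 48), n=5)  # hypothetical l1,1 range
print(ranges[(2, 3)])               # ((96, 192), (48, 96))
print(assign_layer(30, 30, ranges))   # (1, 1): small, square object
print(assign_layer(100, 30, ranges))  # (1, 3): wide object goes to the upper triangle
print(assign_layer(10, 10, ranges))   # None: smaller than any layer's range
```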

3. Advantages of Matrix Nets

The main advantage of Matrix Nets is that they let square convolution kernels accurately gather information about objects of different aspect ratios. In traditional object detection models such as RetinaNet, the same square convolution kernel must output boxes of different aspect ratios and scales. This is counter-intuitive, because bounding boxes with different aspect ratios need different contexts. In Matrix Nets, each layer is downsampled differently in width and height, so the context a kernel sees changes from layer to layer. As a result, the same square convolution kernel can serve bounding boxes of different scales and aspect ratios.

Because object sizes are nearly uniform within their designated layer, the dynamic range of widths and heights is smaller than in other architectures (such as FPN). Regressing object widths and heights therefore becomes an easier optimization problem. Finally, Matrix Nets can be used in any object detection architecture: anchor-based or keypoint-based, single-shot or two-stage.
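A small worked comparison illustrates the dynamic-range argument. It assumes the doubling width ranges described earlier, with w_0 a hypothetical lower bound of the l1,1 width range. For contrast, it uses a simplified square layer that accepts any box whose geometric-mean side sqrt(wh) lies in [s, 2s). That square-layer rule is an illustrative assumption, not a claim about how FPN or RetinaNet assign boxes.

```latex
\begin{aligned}
\text{xNet layer } l_{i,j}:&\quad w \in \left[\,w_0\,2^{j-1},\; w_0\,2^{j}\right)
  \;\Rightarrow\; \frac{w}{w_0\,2^{j-1}} \in [1, 2) \\
\text{square layer},\ s = 32:&\quad (w, h) = (16, 64):\ \sqrt{16 \cdot 64} = 32, \qquad
  (w, h) = (256, 4):\ \sqrt{256 \cdot 4} = 32
\end{aligned}
```

Both boxes in the second line fall into the same square layer even though their widths differ by a factor of 16. Within a single xNet layer, the width varies by less than a factor of 2.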

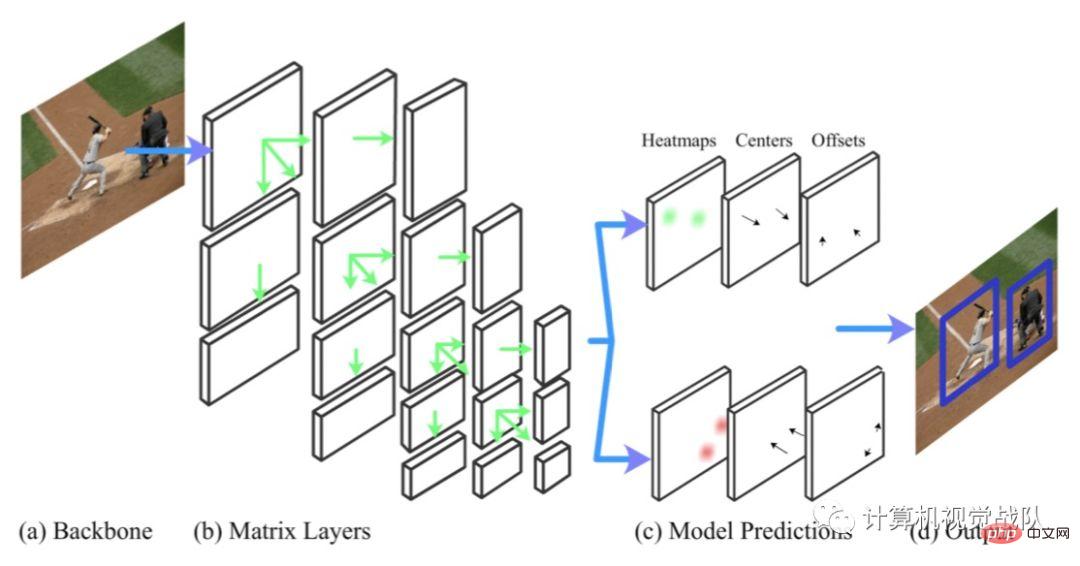

Matrix Nets for keypoint-based detection

CornerNet was proposed as an alternative to anchor-based detection: it predicts each bounding box from a pair of corners (top-left and bottom-right). For each corner, CornerNet predicts a heatmap, an offset and an embedding.
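The offset head is needed because downsampling loses sub-cell precision. Without it, a corner decoded from a heatmap peak can only be recovered as cell x stride. The sketch below uses CornerNet's offset definition, o = (x/n - floor(x/n), y/n - floor(y/n)). Allowing separate horizontal and vertical strides, as a rectangular xNet layer would have, is our own assumption, and the helper names are hypothetical.

```python
import math


def encode_corner(x, y, stride_w, stride_h):
    """Return the heatmap cell of an image-space corner and its sub-cell offset.

    Uses CornerNet's offset definition, generalised (as an assumption) to
    different horizontal and vertical strides for rectangular layers.
    """
    cell = (math.floor(x / stride_w), math.floor(y / stride_h))
    offset = (x / stride_w - cell[0], y / stride_h - cell[1])
    return cell, offset


def decode_corner(cell, offset, stride_w, stride_h):
    """Invert encode_corner: heatmap peak + predicted offset -> image coordinates."""
    return ((cell[0] + offset[0]) * stride_w, (cell[1] + offset[1]) * stride_h)


cell, offset = encode_corner(37, 50, stride_w=16, stride_h=8)
print(cell, offset)                        # (2, 6) (0.3125, 0.25)
print(decode_corner(cell, offset, 16, 8))  # (37.0, 50.0)
```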

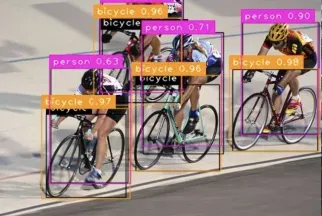

The figure above shows KP-xNet, the keypoint-based object detection framework built on xNets, which consists of four steps:

- (a-b): The xNet backbone is used;

- (c): A shared output sub-network predicts, for each matrix layer, the heatmaps and offsets of the top-left and bottom-right corners, as well as the object center points within that layer;

- (d): Corners in the same layer are matched using the center-point predictions, and the outputs of all layers are then combined with soft non-maximum suppression (Soft-NMS) to produce the final output.
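Step (d) can be sketched as follows. This is not the paper's implementation. The center check is a hypothetical rule: a corner pair is kept only if some predicted center falls inside the middle third of the candidate box. Averaging the two corner scores is also an assumption. The suppression step is standard Gaussian Soft-NMS, which decays each remaining score by exp(-IoU^2 / sigma) instead of discarding overlapping boxes.

```python
import math


def match_corners(top_lefts, bottom_rights, centers):
    """Pair corners from ONE layer into boxes supported by a predicted center.

    top_lefts / bottom_rights: lists of (x, y, score); centers: list of (x, y).
    Hypothetical rule: keep a pair if a center lies in the box's middle third.
    """
    boxes = []
    for x1, y1, s1 in top_lefts:
        for x2, y2, s2 in bottom_rights:
            if x2 <= x1 or y2 <= y1:
                continue  # bottom-right corner must be below and right of top-left
            w, h = x2 - x1, y2 - y1
            bx, by = x1 + w / 2, y1 + h / 2
            if any(abs(cx - bx) <= w / 6 and abs(cy - by) <= h / 6 for cx, cy in centers):
                boxes.append([x1, y1, x2, y2, (s1 + s2) / 2])
    return boxes


def iou(a, b):
    """Intersection over union of two [x1, y1, x2, y2, ...] boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def soft_nms(boxes, sigma=0.5, score_thresh=0.001):
    """Gaussian Soft-NMS over boxes pooled from all layers."""
    boxes = [b[:] for b in boxes]  # copy so the caller's scores are not modified
    kept = []
    while boxes:
        best = max(range(len(boxes)), key=lambda k: boxes[k][4])
        top = boxes.pop(best)
        kept.append(top)
        for b in boxes:
            b[4] *= math.exp(-iou(top, b) ** 2 / sigma)  # decay overlapping scores
        boxes = [b for b in boxes if b[4] >= score_thresh]
    return kept


# Made-up predictions for a single layer; with several layers, concatenate
# each layer's match_corners output before calling soft_nms once.
layer_boxes = match_corners([(10, 10, 0.9), (50, 50, 0.8)],
                            [(40, 40, 0.7), (90, 80, 0.6)],
                            [(25, 25), (70, 65)])
final = soft_nms(layer_boxes)
print([b[:4] for b in final])  # [[10, 10, 40, 40], [50, 50, 90, 80]]
```

Matching inside each layer keeps the candidate pairs small, because corners from layers with very different scales or aspect ratios are never paired. Soft-NMS then resolves duplicates that several layers may produce for the same object.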

Experimental results

The following table shows the results on the MS COCO data set:

The researchers also compared the new model with other models in terms of parameter count across different backbones. As the first figure shows, KP-xNet outperforms all other architectures at every parameter level. The researchers attribute this to KP-xNet's scale- and aspect-ratio-aware architecture.

Paper address: https://arxiv.org/pdf/1908.04646.pdf

The above is the detailed content of The latest deep architecture for target detection has half the parameters and is 3 times faster +. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

![How to Use Depth Effect on iPhone [2023]](https://img.php.cn/upload/article/000/465/014/169410031113297.png?x-oss-process=image/resize,m_fill,h_207,w_330) How to Use Depth Effect on iPhone [2023]

Sep 07, 2023 pm 11:25 PM

How to Use Depth Effect on iPhone [2023]

Sep 07, 2023 pm 11:25 PM

If there's one thing you can single out as different on an iPhone, it's the number of customization options you have when dealing with your iPhone's lock screen. Among the options, there is the depth effects feature, which makes your wallpaper look like it interacts with the lock screen clock widget. We'll explain the depth effect, when and where you can apply it, and how to use it on your iPhone. What is the depth effect on iPhone? When you add a wallpaper with different elements, iPhone will split it into several layers of depth. To do this, iOS utilizes a built-in neural engine to detect depth information in wallpapers, separating the subject you want to appear in focus from other elements of the selected background. This will create a cool looking effect where the main character in the wallpaper

Multi-grid redundant bounding box annotation for accurate object detection

Jun 01, 2024 pm 09:46 PM

Multi-grid redundant bounding box annotation for accurate object detection

Jun 01, 2024 pm 09:46 PM

1. Introduction Currently, the leading object detectors are two-stage or single-stage networks based on the repurposed backbone classifier network of deep CNN. YOLOv3 is one such well-known state-of-the-art single-stage detector that receives an input image and divides it into an equal-sized grid matrix. Grid cells with target centers are responsible for detecting specific targets. What I’m sharing today is a new mathematical method that allocates multiple grids to each target to achieve accurate tight-fit bounding box prediction. The researchers also proposed an effective offline copy-paste data enhancement for target detection. The newly proposed method significantly outperforms some current state-of-the-art object detectors and promises better performance. 2. The background target detection network is designed to use

New SOTA for target detection: YOLOv9 comes out, and the new architecture brings traditional convolution back to life

Feb 23, 2024 pm 12:49 PM

New SOTA for target detection: YOLOv9 comes out, and the new architecture brings traditional convolution back to life

Feb 23, 2024 pm 12:49 PM

In the field of target detection, YOLOv9 continues to make progress in the implementation process. By adopting new architecture and methods, it effectively improves the parameter utilization of traditional convolution, which makes its performance far superior to previous generation products. More than a year after YOLOv8 was officially released in January 2023, YOLOv9 is finally here! Since Joseph Redmon, Ali Farhadi and others proposed the first-generation YOLO model in 2015, researchers in the field of target detection have updated and iterated it many times. YOLO is a prediction system based on global information of images, and its model performance is continuously enhanced. By continuously improving algorithms and technologies, researchers have achieved remarkable results, making YOLO increasingly powerful in target detection tasks.

Comparative analysis of deep learning architectures

May 17, 2023 pm 04:34 PM

Comparative analysis of deep learning architectures

May 17, 2023 pm 04:34 PM

The concept of deep learning originates from the research of artificial neural networks. A multi-layer perceptron containing multiple hidden layers is a deep learning structure. Deep learning combines low-level features to form more abstract high-level representations to represent categories or characteristics of data. It is able to discover distributed feature representations of data. Deep learning is a type of machine learning, and machine learning is the only way to achieve artificial intelligence. So, what are the differences between various deep learning system architectures? 1. Fully Connected Network (FCN) A fully connected network (FCN) consists of a series of fully connected layers, with every neuron in each layer connected to every neuron in another layer. Its main advantage is that it is "structure agnostic", i.e. no special assumptions about the input are required. Although this structural agnostic makes the complete

Multi-path, multi-domain, all-inclusive! Google AI releases multi-domain learning general model MDL

May 28, 2023 pm 02:12 PM

Multi-path, multi-domain, all-inclusive! Google AI releases multi-domain learning general model MDL

May 28, 2023 pm 02:12 PM

Deep learning models for vision tasks (such as image classification) are usually trained end-to-end with data from a single visual domain (such as natural images or computer-generated images). Generally, an application that completes vision tasks for multiple domains needs to build multiple models for each separate domain and train them independently. Data is not shared between different domains. During inference, each model will handle a specific domain. input data. Even if they are oriented to different fields, some features of the early layers between these models are similar, so joint training of these models is more efficient. This reduces latency and power consumption, and reduces the memory cost of storing each model parameter. This approach is called multi-domain learning (MDL). In addition, MDL models can also outperform single

1.3ms takes 1.3ms! Tsinghua's latest open source mobile neural network architecture RepViT

Mar 11, 2024 pm 12:07 PM

1.3ms takes 1.3ms! Tsinghua's latest open source mobile neural network architecture RepViT

Mar 11, 2024 pm 12:07 PM

Paper address: https://arxiv.org/abs/2307.09283 Code address: https://github.com/THU-MIG/RepViTRepViT performs well in the mobile ViT architecture and shows significant advantages. Next, we explore the contributions of this study. It is mentioned in the article that lightweight ViTs generally perform better than lightweight CNNs on visual tasks, mainly due to their multi-head self-attention module (MSHA) that allows the model to learn global representations. However, the architectural differences between lightweight ViTs and lightweight CNNs have not been fully studied. In this study, the authors integrated lightweight ViTs into the effective

What is the architecture and working principle of Spring Data JPA?

Apr 17, 2024 pm 02:48 PM

What is the architecture and working principle of Spring Data JPA?

Apr 17, 2024 pm 02:48 PM

SpringDataJPA is based on the JPA architecture and interacts with the database through mapping, ORM and transaction management. Its repository provides CRUD operations, and derived queries simplify database access. Additionally, it uses lazy loading to only retrieve data when necessary, thus improving performance.

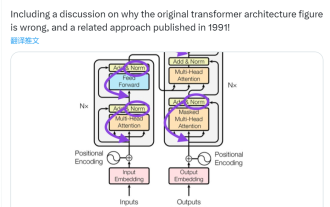

This 'mistake' is not really a mistake: start with four classic papers to understand what is 'wrong' with the Transformer architecture diagram

Jun 14, 2023 pm 01:43 PM

This 'mistake' is not really a mistake: start with four classic papers to understand what is 'wrong' with the Transformer architecture diagram

Jun 14, 2023 pm 01:43 PM

Some time ago, a tweet pointing out the inconsistency between the Transformer architecture diagram and the code in the Google Brain team's paper "AttentionIsAllYouNeed" triggered a lot of discussion. Some people think that Sebastian's discovery was an unintentional mistake, but it is also surprising. After all, considering the popularity of the Transformer paper, this inconsistency should have been mentioned a thousand times. Sebastian Raschka said in response to netizen comments that the "most original" code was indeed consistent with the architecture diagram, but the code version submitted in 2017 was modified, but the architecture diagram was not updated at the same time. This is also the root cause of "inconsistent" discussions.