PyTorch moves to the Linux Foundation, a shift with significant implications for AI research

Recently, PyTorch founder Soumith Chintala announced on the official PyTorch website that PyTorch will officially move to the Linux Foundation (LF) as a top-level project under the name PyTorch Foundation.

PyTorch was launched in January 2017 by Facebook AI Research (FAIR). It is an open source Python machine learning library based on Torch, used for natural language processing and other applications. As one of the most popular machine learning frameworks, PyTorch currently has more than 2,400 contributors, and nearly 154,000 projects are built on it.

The core mission of the Linux Foundation is the collaborative development of open source software. The governing board of the new foundation includes members from companies such as AMD, Amazon Web Services (AWS), Google Cloud, Meta, Microsoft Azure, and NVIDIA, a model consistent with PyTorch's current status and direction. The PyTorch Foundation is meant to ensure that, in the years ahead, business decisions are made transparently and openly by a diverse group of members.

Soumith Chintala said, "As PyTorch continues to grow into a multi-stakeholder project, it is time to move to a broader open source foundation. I am excited that the Linux Foundation will be our new home, as they have extensive experience supporting large open source projects like ours, such as Kubernetes and NodeJS."

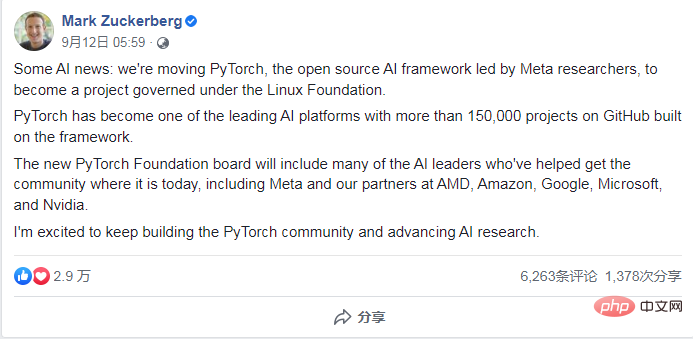

Mark Zuckerberg also wrote in a Facebook post, "The new PyTorch Foundation Board will include many of the AI leaders who have helped get the community to where it is today, including Meta and our partners at AMD, Amazon, Google, Microsoft, and NVIDIA. I'm excited to continue building the PyTorch community and advancing AI research."

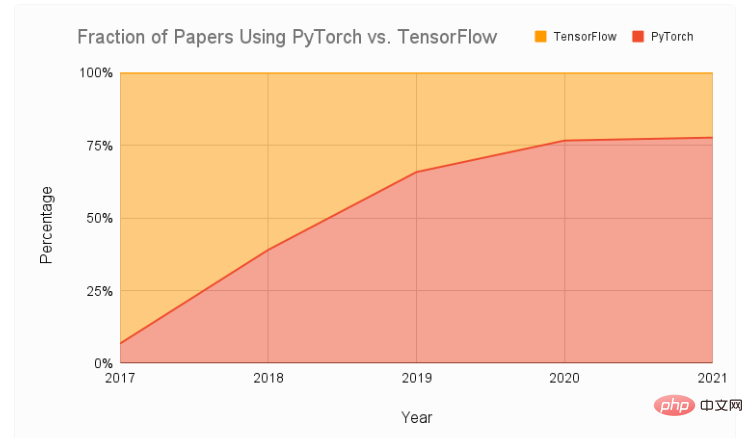

PyTorch grew out of Torch. It is a Python-based scientific computing package that provides two high-level features: tensor computation (like NumPy) with strong GPU acceleration, and deep neural networks built on an automatic differentiation (autograd) system. As one of the most popular machine learning frameworks, PyTorch quickly rose to the top of GitHub's popularity rankings after it was first open sourced there. Measured against TensorFlow, another popular framework, PyTorch's share of usage grew from only 7% to nearly 80% in just a few years.
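To make the two features above concrete, here is a minimal sketch, assuming PyTorch is installed. It uses only standard public calls (`torch.tensor`, `torch.ones`, `requires_grad`, `backward()`, `.grad`, `torch.cuda.is_available()`); the variable names are illustrative, and the GPU is used only if one is detected.

```python
import torch

# Pick a GPU if one is available; otherwise fall back to the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Feature 1: NumPy-like tensor computation, optionally GPU-accelerated.
a = torch.tensor([[1.0, 2.0], [3.0, 4.0]], device=device)
b = torch.ones(2, 2, device=device)
c = a @ b + 1  # matrix multiply, then add a scalar to every element

# Feature 2: automatic differentiation (autograd).
# requires_grad=True tells PyTorch to record operations involving x.
x = torch.tensor([2.0, 3.0], requires_grad=True, device=device)
y = (x ** 2).sum()  # y = x0^2 + x1^2
y.backward()        # computes dy/dx and stores it in x.grad

print(c)
print(x.grad)  # dy/dx = 2 * x, i.e. [4.0, 6.0]
```

The same code runs unchanged on CPU or GPU because every tensor is created on `device`; autograd derives the gradient from the recorded operations, so no derivative has to be written by hand.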

Since its creation, PyTorch has attracted more than 2,400 contributors, and nearly 154,000 projects have been built on it. Soumith Chintala said that PyTorch's business governance had long been unstructured, and that Meta team members spent a great deal of time and energy turning PyTorch into an organizationally healthier entity, introducing many structures along the way. In its next stage, PyTorch aims to serve the interests of multiple stakeholders, which is why the Linux Foundation was chosen: "It has extensive experience hosting large multi-stakeholder open source projects, and has struck the right balance between organizational structure and finding specific solutions for these projects."

Currently, the Linux Foundation has thousands of members worldwide and more than 850 open source projects, many of which contribute directly to AI/ML projects or their use cases, or integrate with their platforms, such as LF Networking, AGL, Delta Lake, and RISC-V. As part of the Linux Foundation, PyTorch and its community will benefit from the Foundation's many programs and community activities, including training and certification programs, community research, and more.

PyTorch will also have access to the Linux Foundation's LFX collaboration portal, which supports mentorship and helps the PyTorch community identify future leaders, find potential hires, and observe shared community dynamics. The Linux Foundation said, "PyTorch has reached its current state through good maintenance and open source community management. We will not change any of PyTorch's strengths."
Reference links:

- https://pytorch.org/blog/PyTorchfoundation/
- https://www.assemblyai.com/blog/pytorch-vs-tensorflow-in-2022/
- https://linuxfoundation.org/zh/blog/welcoming-pytorch-to-the-linux-foundation/

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

iFlytek: Huawei's Ascend 910B's capabilities are basically comparable to Nvidia's A100, and they are working together to create a new base for my country's general artificial intelligence

Oct 22, 2023 pm 06:13 PM

iFlytek: Huawei's Ascend 910B's capabilities are basically comparable to Nvidia's A100, and they are working together to create a new base for my country's general artificial intelligence

Oct 22, 2023 pm 06:13 PM

This site reported on October 22 that in the third quarter of this year, iFlytek achieved a net profit of 25.79 million yuan, a year-on-year decrease of 81.86%; the net profit in the first three quarters was 99.36 million yuan, a year-on-year decrease of 76.36%. Jiang Tao, Vice President of iFlytek, revealed at the Q3 performance briefing that iFlytek has launched a special research project with Huawei Shengteng in early 2023, and jointly developed a high-performance operator library with Huawei to jointly create a new base for China's general artificial intelligence, allowing domestic large-scale models to be used. The architecture is based on independently innovative software and hardware. He pointed out that the current capabilities of Huawei’s Ascend 910B are basically comparable to Nvidia’s A100. At the upcoming iFlytek 1024 Global Developer Festival, iFlytek and Huawei will make further joint announcements on the artificial intelligence computing power base. He also mentioned,

The perfect combination of PyCharm and PyTorch: detailed installation and configuration steps

Feb 21, 2024 pm 12:00 PM

The perfect combination of PyCharm and PyTorch: detailed installation and configuration steps

Feb 21, 2024 pm 12:00 PM

PyCharm is a powerful integrated development environment (IDE), and PyTorch is a popular open source framework in the field of deep learning. In the field of machine learning and deep learning, using PyCharm and PyTorch for development can greatly improve development efficiency and code quality. This article will introduce in detail how to install and configure PyTorch in PyCharm, and attach specific code examples to help readers better utilize the powerful functions of these two. Step 1: Install PyCharm and Python

Introduction to five sampling methods in natural language generation tasks and Pytorch code implementation

Feb 20, 2024 am 08:50 AM

Introduction to five sampling methods in natural language generation tasks and Pytorch code implementation

Feb 20, 2024 am 08:50 AM

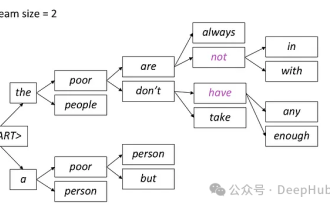

In natural language generation tasks, sampling method is a technique to obtain text output from a generative model. This article will discuss 5 common methods and implement them using PyTorch. 1. GreedyDecoding In greedy decoding, the generative model predicts the words of the output sequence based on the input sequence time step by time. At each time step, the model calculates the conditional probability distribution of each word, and then selects the word with the highest conditional probability as the output of the current time step. This word becomes the input to the next time step, and the generation process continues until some termination condition is met, such as a sequence of a specified length or a special end marker. The characteristic of GreedyDecoding is that each time the current conditional probability is the best

Implementing noise removal diffusion model using PyTorch

Jan 14, 2024 pm 10:33 PM

Implementing noise removal diffusion model using PyTorch

Jan 14, 2024 pm 10:33 PM

Before we understand the working principle of the Denoising Diffusion Probabilistic Model (DDPM) in detail, let us first understand some of the development of generative artificial intelligence, which is also one of the basic research of DDPM. VAEVAE uses an encoder, a probabilistic latent space, and a decoder. During training, the encoder predicts the mean and variance of each image and samples these values from a Gaussian distribution. The result of the sampling is passed to the decoder, which converts the input image into a form similar to the output image. KL divergence is used to calculate the loss. A significant advantage of VAE is its ability to generate diverse images. In the sampling stage, one can directly sample from the Gaussian distribution and generate new images through the decoder. GAN has made great progress in variational autoencoders (VAEs) in just one year.

Tutorial on installing PyCharm with PyTorch

Feb 24, 2024 am 10:09 AM

Tutorial on installing PyCharm with PyTorch

Feb 24, 2024 am 10:09 AM

As a powerful deep learning framework, PyTorch is widely used in various machine learning projects. As a powerful Python integrated development environment, PyCharm can also provide good support when implementing deep learning tasks. This article will introduce in detail how to install PyTorch in PyCharm and provide specific code examples to help readers quickly get started using PyTorch for deep learning tasks. Step 1: Install PyCharm First, we need to make sure we have

so fast! Recognize video speech into text in just a few minutes with less than 10 lines of code

Feb 27, 2024 pm 01:55 PM

so fast! Recognize video speech into text in just a few minutes with less than 10 lines of code

Feb 27, 2024 pm 01:55 PM

Hello everyone, I am Kite. Two years ago, the need to convert audio and video files into text content was difficult to achieve, but now it can be easily solved in just a few minutes. It is said that in order to obtain training data, some companies have fully crawled videos on short video platforms such as Douyin and Kuaishou, and then extracted the audio from the videos and converted them into text form to be used as training corpus for big data models. If you need to convert a video or audio file to text, you can try this open source solution available today. For example, you can search for the specific time points when dialogues in film and television programs appear. Without further ado, let’s get to the point. Whisper is OpenAI’s open source Whisper. Of course it is written in Python. It only requires a few simple installation packages.

Deep Learning with PHP and PyTorch

Jun 19, 2023 pm 02:43 PM

Deep Learning with PHP and PyTorch

Jun 19, 2023 pm 02:43 PM

Deep learning is an important branch in the field of artificial intelligence and has received more and more attention in recent years. In order to be able to conduct deep learning research and applications, it is often necessary to use some deep learning frameworks to help achieve it. In this article, we will introduce how to use PHP and PyTorch for deep learning. 1. What is PyTorch? PyTorch is an open source machine learning framework developed by Facebook. It can help us quickly create and train deep learning models. PyTorc

Detailed explanation of GQA, the attention mechanism commonly used in large models, and Pytorch code implementation

Apr 03, 2024 pm 05:40 PM

Detailed explanation of GQA, the attention mechanism commonly used in large models, and Pytorch code implementation

Apr 03, 2024 pm 05:40 PM

GroupedQueryAttention is a multi-query attention method in large language models. Its goal is to achieve the quality of MHA while maintaining the speed of MQA. GroupedQueryAttention groups queries, and queries within each group share the same attention weight, which helps reduce computational complexity and increase inference speed. In this article, we will explain the idea of GQA and how to translate it into code. GQA is in the paper GQA:TrainingGeneralizedMulti-QueryTransformerModelsfromMulti-HeadCheckpoint