Let's talk about processes, threads, coroutines and concurrency models in Node.js

Node.js has become a standard part of the toolbox for building high-concurrency network services. Why has it become so popular? This article starts with the basic concepts of processes, threads, coroutines, and I/O models, and then gives a comprehensive introduction to Node.js and its concurrency model.

Process

We generally call a running instance of a program a process. A process is the basic unit of resource allocation and scheduling in the operating system, and it generally consists of the following parts:

- Program: the code to be executed, used to describe the functions to be completed by the process;

- Data area: the data space the process works on, including data, dynamically allocated memory, the user stack used by function calls, modifiable program code, and other information;

- Process table entry: to implement the process model, the operating system maintains a table called the process table, in which each process occupies one process table entry (also called a process control block). The entry holds important state information such as the program counter, stack pointer, memory allocation, the status of open files, and scheduling information, so that after a process is suspended the operating system can correctly resume it.

The process has the following characteristics:

- Dynamicity: a process is essentially one execution of a program in a multiprogramming system; processes are created and destroyed dynamically;

- Concurrency: any process can execute concurrently with other processes;

- Independence: a process is a basic unit that can run independently, and it is also the independent unit to which the system allocates resources and which it schedules;

- Asynchronicity: because processes constrain one another, each process executes intermittently; that is, processes advance at independent and unpredictable speeds.

Note that if a program is run twice, the two instances are two different processes, even if the operating system lets them share code (that is, only one copy of the code is kept in memory).
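A quick way to observe this from Node.js is to launch the same one-line program twice and compare the process IDs. The sketch below assumes a standard Node.js installation and uses the built-in `child_process.spawnSync` with `process.execPath` (the path of the running node binary); the helper name `runTwice` is only illustrative.

```javascript
const { spawnSync } = require('child_process');

// Hypothetical helper: runs the same one-line program (print your own PID)
// twice, each time as a separate child process.
function runTwice() {
  const program = 'console.log(process.pid)';
  const pids = [];
  for (let i = 0; i < 2; i++) {
    // process.execPath is the node binary that is running this script.
    const result = spawnSync(process.execPath, ['-e', program], { encoding: 'utf8' });
    pids.push(Number(result.stdout.trim()));
  }
  return pids;
}

const [first, second] = runTwice();
console.log(`first run: ${first}, second run: ${second}`);
```

The two PIDs differ from each other and from the parent's `process.pid`, even though both children execute exactly the same program.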

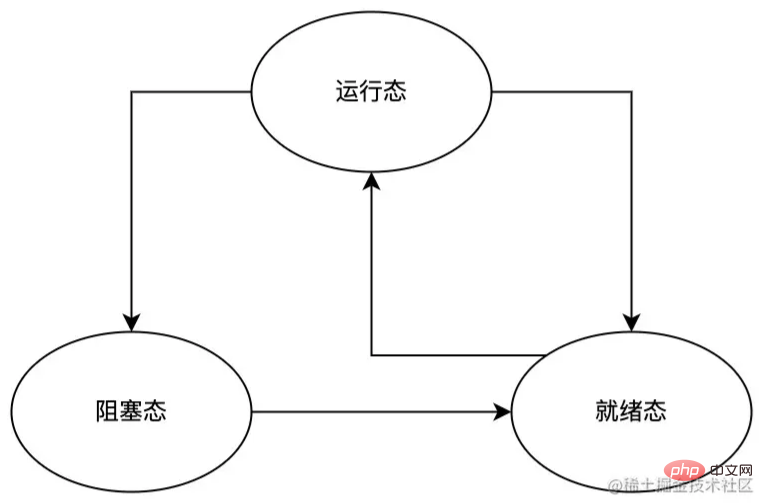

While a process is executing, interrupts, CPU scheduling, and other events cause it to switch between the following states:

- Running state: The process is running at this moment and occupying the CPU;

- Ready state: The process is ready at this moment and can be run at any time, but it is temporarily stopped because other processes are running;

- Blocked state: the process cannot run until some external event occurs (for example, keyboard input arrives).

As the state-transition diagram above shows, a running process can switch to either the ready state or the blocked state, but only a ready process can switch directly to the running state. This is because:

- The switch from running to ready is made by the process scheduler: the system decides that the current process has used enough CPU time and lets other processes use the CPU. The scheduler is part of the operating system, and the process is not even aware of its existence;

- The switch from running to blocked happens for reasons inside the process itself (such as waiting for keyboard input): the process cannot continue and can only suspend until some event occurs (such as keyboard input arriving). When that event occurs, the process first moves to the ready state. If no other process is running at that moment, it moves to the running state immediately; otherwise it stays in the ready state until the scheduler picks it.
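The transition rules above can be captured in a small lookup table. This is a hypothetical teaching model only (the `TRANSITIONS` table and the `transition` helper are invented for illustration); a real kernel tracks far more state than a single label.

```javascript
// Hypothetical model: allowed moves between the three states described above.
const TRANSITIONS = {
  running: ['ready', 'blocked'], // preempted by the scheduler, or waiting for an event
  ready: ['running'],            // chosen by the scheduler
  blocked: ['ready'],            // the awaited event has occurred
};

// Returns the new state, or throws if the diagram does not allow the move.
function transition(from, to) {
  const allowed = TRANSITIONS[from] || [];
  if (!allowed.includes(to)) {
    throw new Error(`illegal transition: ${from} -> ${to}`);
  }
  return to;
}

let state = 'running';
state = transition(state, 'blocked'); // waits for keyboard input
state = transition(state, 'ready');   // the input has arrived
state = transition(state, 'running'); // the scheduler picks it again
console.log(state); // running

try {
  transition('blocked', 'running'); // must pass through "ready" first
} catch (err) {
  console.log(err.message); // illegal transition: blocked -> running
}
```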

Threads

Sometimes, we need to use threads to solve the following problems:

- As the number of processes grows, switching between them becomes more and more expensive and effective CPU utilization keeps dropping; in severe cases the system may even freeze;

- Each process has its own memory space, isolated from that of every other process, yet some tasks need to share data, which makes synchronizing data across multiple processes cumbersome.

Regarding threads, we need to know the following points:

- A thread is a single sequential flow of control within a running program and the smallest unit the operating system can schedule for execution. It is contained in a process and is the actual unit of execution within that process;

- A process can contain multiple threads, each of which can perform a different task in parallel;

- All threads in a process share the process's memory space (including code, data, heap, etc.) and some of its resources (such as open files and signals);

- Threads in one process are not visible in other processes.
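In Node.js, the `worker_threads` module creates threads inside the current process. The sketch below, which assumes Node.js 12 or later, shows two of the points above: a worker reports the same `process.pid` as the main thread, and a write it makes to a `SharedArrayBuffer` is visible to the main thread. One difference from the general model: each Node.js worker has its own JavaScript heap, so only explicitly shared memory such as a `SharedArrayBuffer` is visible to both. The function name `runSharedMemoryDemo` is hypothetical.

```javascript
const { Worker } = require('worker_threads');

// Four bytes of memory that both the main thread and the worker can see.
const shared = new Int32Array(new SharedArrayBuffer(4));

// Worker source passed as a string (eval: true) so the example is a single file.
const workerSource = `
  const { parentPort, workerData } = require('worker_threads');
  const view = new Int32Array(workerData.buffer);
  Atomics.store(view, 0, 42);          // write into the shared memory
  parentPort.postMessage(process.pid); // report which process this thread lives in
`;

// Hypothetical helper: resolves once the worker has written and reported back.
function runSharedMemoryDemo() {
  return new Promise((resolve, reject) => {
    const worker = new Worker(workerSource, {
      eval: true,
      workerData: { buffer: shared.buffer }, // a SharedArrayBuffer is shared, not copied
    });
    worker.once('message', (workerPid) => {
      resolve({ samePid: workerPid === process.pid, value: Atomics.load(shared, 0) });
    });
    worker.once('error', reject);
  });
}

runSharedMemoryDemo().then(console.log); // { samePid: true, value: 42 }
```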

Now that we understand the basic characteristics of threads, let’s talk about some common thread types.

Kernel-mode thread

A kernel-mode thread is a thread supported directly by the operating system. Its main features are as follows:

- Thread creation, scheduling, synchronization, and destruction are handled by the kernel, which makes them relatively expensive;

- The kernel can map kernel-mode threads onto the available processors so that each processor core runs a kernel thread, which lets them fully compete for and use CPU resources;

- It can only access the kernel's code and data;

- Resource synchronization and data sharing are less efficient than resource synchronization and data sharing at the process level.

User-mode thread

A user-mode thread is built entirely in user space. Its main features are as follows:

- Thread creation, scheduling, synchronization, and destruction are handled in user space, so the overhead is very low;

- Because user-mode threads are maintained in user space, the kernel is completely unaware of them: it schedules and allocates resources only for the process they belong to, while scheduling and resource allocation among the threads within that process are handled by the program itself. As a result, if one user-mode thread blocks in a system call, the entire process is blocked;

- It can access all of the shared address space and system resources of the process it belongs to;

- Resource synchronization and data sharing are more efficient.
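To make the idea concrete, here is a minimal sketch of user-space scheduling, assuming JavaScript generators as the "threads": each one gives up control with `yield`, and a round-robin loop written in ordinary code decides who runs next, with no help from the operating system. The names `greenThread` and `runRoundRobin` are hypothetical.

```javascript
// Hypothetical "thread": a generator that logs its steps and yields between them.
function* greenThread(name, steps, log) {
  for (let i = 1; i <= steps; i++) {
    log.push(`${name}${i}`);
    yield; // voluntarily hand control back to the scheduler
  }
}

// Hypothetical round-robin scheduler living entirely in user space.
function runRoundRobin(threads) {
  const queue = [...threads];
  while (queue.length > 0) {
    const current = queue.shift();
    const { done } = current.next(); // run until its next yield
    if (!done) queue.push(current);  // not finished: back of the queue
  }
}

const log = [];
runRoundRobin([greenThread('A', 3, log), greenThread('B', 2, log)]);
console.log(log.join(' ')); // A1 B1 A2 B2 A3
```

The same structure explains the weakness described above: if one generator made a blocking call instead of yielding, the scheduler loop, and therefore every other "thread", would stall with it.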

Lightweight process (LWP)

A lightweight process (LWP) is a user thread built on top of, and supported by, kernel threads. Its main features are as follows:

- User space can use kernel threads only through lightweight processes, which can be regarded as a bridge between user-mode threads and kernel threads. Therefore, lightweight processes can exist only on systems that support kernel threads;

- Most operations on a lightweight process require a system call from user space, and system calls are relatively expensive (they require switching between user mode and kernel mode);

- Each lightweight process must be associated with a specific kernel thread, therefore:

  - Like kernel threads, it can fully compete for and use CPU resources across the whole system;

  - Each lightweight process is an independent unit of thread scheduling, so even if one lightweight process blocks in a system call, the rest of the process keeps running;

  - Lightweight processes consume kernel resources (mainly the stack space of the kernel thread), so a system cannot support a very large number of them;

- It can access all of the shared address space and system resources of the process it belongs to.

Summary

Above we have briefly introduced the common thread types (kernel-mode threads, user-mode threads, and lightweight processes). Each has its own scope of application, and in practice they can be combined freely as needed, as in the common one-to-one, many-to-one, and many-to-many models. For reasons of space this article does not cover those models further; interested readers can explore them on their own.

Coroutine

A coroutine, also called a fiber, is a unit of execution built on top of threads whose scheduling, state maintenance, and other behavior are managed by the developer. Its main features are:

- Switching between coroutines does not require an operating-system context switch, so execution is efficient;

- Because coroutines run on the same thread, they have no thread-synchronization problems when communicating;

- It makes switching control flow easy and simplifies the programming model.

In JavaScript, the familiar async/await is an implementation of coroutines, as in the following example:

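```javascript
// getUserById and user.updateName are assumed to be defined elsewhere:
// synchronous in the first version, Promise-returning in the second.
function updateUserName(id, name) {
  const user = getUserById(id);
  user.updateName(name);
  return true;
}

async function updateUserNameAsync(id, name) {
  const user = await getUserById(id); // suspends until the user is loaded
  await user.updateName(name);        // suspends until the update finishes
  return true;
}
```

In the example above, the logical execution order inside both updateUserName and updateUserNameAsync is: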

- Call the function getUserById and assign its return value to the variable user;

- Call the updateName method of user;

- Return true to the caller.

The main difference between the two lies in how program state is controlled at run time:

- updateUserName simply executes the steps above one after another;

- updateUserNameAsync also executes the steps above in order, except that whenever it reaches an await, it is suspended and the program state at that point is saved. Only when the operation after the await has completed is updateUserNameAsync woken up again; it then restores the saved state and continues with the next step.
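The suspension can be observed by recording the order in which things happen. In this sketch (the names `demoSuspension` and `task` are hypothetical), `task` runs synchronously until its first `await`, control returns to the caller, and `task` resumes later with its state intact.

```javascript
// Hypothetical demo: records the order in which the caller and the async function run.
function demoSuspension() {
  const order = [];

  async function task() {
    order.push('task: before await');
    await null; // suspend here; local state is preserved
    order.push('task: resumed');
  }

  order.push('caller: start');
  const pending = task(); // runs synchronously up to the first await
  order.push('caller: continues while task is suspended');
  return pending.then(() => order);
}

demoSuspension().then((order) => console.log(order.join('\n')));
// caller: start
// task: before await
// caller: continues while task is suspended
// task: resumed
```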

From this analysis we can infer that coroutines are not meant to solve the concurrency problems that processes and threads address, but the problems that come up when handling asynchronous tasks (such as file operations and network requests). Before async/await, asynchronous tasks were typically handled with callback functions, which easily led to callback hell and messy, hard-to-maintain code; coroutines let us write asynchronous code in a synchronous style.
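The contrast is easiest to see side by side. Below, `fetchDouble` is a hypothetical callback-style API; `sumCallbacks` chains three dependent calls with nested callbacks, while `sumAsync` does the same with async/await after converting the API with Node's built-in `util.promisify`.

```javascript
const { promisify } = require('util');

// Hypothetical callback-style API: delivers n * 2 on a later tick.
function fetchDouble(n, callback) {
  setImmediate(() => callback(null, n * 2));
}

// Callback style: every dependent step nests one level deeper.
function sumCallbacks(done) {
  fetchDouble(1, (err, a) => {
    if (err) return done(err);
    fetchDouble(a, (err2, b) => {
      if (err2) return done(err2);
      fetchDouble(b, (err3, c) => {
        if (err3) return done(err3);
        done(null, a + b + c);
      });
    });
  });
}

// Coroutine style: the same steps read top to bottom.
const fetchDoubleAsync = promisify(fetchDouble);
async function sumAsync() {
  const a = await fetchDoubleAsync(1);
  const b = await fetchDoubleAsync(a);
  const c = await fetchDoubleAsync(b);
  return a + b + c;
}

sumCallbacks((err, total) => console.log('callbacks:', total)); // callbacks: 14
sumAsync().then((total) => console.log('async/await:', total)); // async/await: 14
```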

Keep in mind that the core capability of a coroutine is to suspend a piece of code, preserve its state at the point of suspension, and later resume from that point and continue with the code that follows.
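That capability can be demonstrated directly with generators, which JavaScript also provides: a generator suspends at each `yield` and keeps its local variables until it is resumed. The sketch below drives a generator with Promises, the technique popularized by libraries such as co before async/await existed. The helper name `run` is hypothetical, and the code is a simplified illustration rather than how any engine actually implements async/await.

```javascript
// Hypothetical driver: treats a generator as a coroutine. Each yielded value
// (usually a Promise) suspends it; it is resumed with the result or the error.
function run(generatorFn, ...args) {
  return new Promise((resolve, reject) => {
    const gen = generatorFn(...args);

    function step(method, value) {
      let result;
      try {
        result = gen[method](value); // resume at the last suspension point
      } catch (err) {
        return reject(err);
      }
      if (result.done) return resolve(result.value);
      Promise.resolve(result.value).then(
        (v) => step('next', v),  // resume with the value
        (e) => step('throw', e), // resume by throwing at the yield
      );
    }

    step('next', undefined);
  });
}

// The local variables a and b survive each suspension.
run(function* () {
  const a = yield Promise.resolve(1);
  const b = yield Promise.resolve(a + 1);
  return a + b;
}).then((sum) => console.log(sum)); // 3
```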

I/O model

A complete I/O operation needs to go through the following stages:

- The user process (thread) issues an I/O request to the kernel through a system call;

- The kernel processes the I/O request (in a preparation phase and an actual execution phase) and returns the result to the user process (thread).

I/O operations can be roughly divided into four types: blocking I/O, non-blocking I/O, synchronous I/O, and asynchronous I/O. Before discussing them, let's first get familiar with two pairs of concepts (assume here that service A calls service B):

- Blocking/non-blocking:

  - If A returns only after receiving B's response, the call is a blocking call;

  - If A returns immediately after calling B (that is, without waiting for B to finish), the call is a non-blocking call.

- Synchronous/asynchronous:

  - If B notifies A only after it has finished executing, service B is synchronous;

  - If, when A calls B, B immediately tells A that the request has been received and later delivers the result to A through a callback once execution finishes, service B is asynchronous.

Blocking/non-blocking is easily confused with synchronous/asynchronous, so pay special attention:

- Blocking/non-blocking describes the caller of a service;

- Synchronous/asynchronous describes the callee of a service.

Now that we understand blocking/non-blocking and synchronous/asynchronous, let's look at the specific I/O models.
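The two pairs of concepts can be mapped onto code. In this sketch, `serviceBSync` and `serviceBAsync` are hypothetical stand-ins for service B: A's call to the synchronous one is a blocking call, while the asynchronous one acknowledges at once and reports its result later through a callback, so A's call to it is non-blocking.

```javascript
// Hypothetical synchronous service B: replies only when the work is finished.
function serviceBSync(x) {
  return x * 10;
}

// Hypothetical asynchronous service B: acknowledges now, delivers the result later.
function serviceBAsync(x, callback) {
  setImmediate(() => callback(x * 10)); // result arrives via callback
  return 'accepted';                    // immediate acknowledgement
}

// Hypothetical demo of service A calling both flavors.
function demoCalls() {
  return new Promise((resolve) => {
    const events = [];
    // Blocking call: A cannot continue until B has answered.
    events.push(`A got ${serviceBSync(1)}`);
    // Non-blocking call: A takes the acknowledgement and moves on.
    const ack = serviceBAsync(2, (result) => {
      events.push(`A notified with ${result}`);
      resolve(events);
    });
    events.push(`A received "${ack}" and keeps working`);
  });
}

demoCalls().then((events) => console.log(events.join('\n')));
// A got 10
// A received "accepted" and keeps working
// A notified with 20
```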

Blocking I/O

Definition: after a user process (thread) issues an I/O system call, it is blocked immediately; only when the entire I/O operation has been processed and the result returned to it is the process (thread) unblocked so that it can continue with subsequent operations.

- Because the user process (thread) is blocked, this model does not consume CPU resources while it waits;

- While the I/O operation is executing, the user process (thread) cannot do anything else;

- This model is only suitable for applications with low concurrency. Because an I/O request blocks the process (thread) that issued it, responding to I/O requests promptly requires allocating a separate process (thread) to each request. This consumes a large amount of resources, and for long-lived connections, whose processes (threads) cannot be released for a long time, any new requests will run into a serious performance bottleneck.
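Node.js deliberately avoids blocking I/O on its main thread, but the effect can be simulated. The sketch below uses `Atomics.wait` with a timeout as a stand-in for a blocking system call (the helper names `blockingWait` and `demoBlocking` are hypothetical): the thread sleeps without spinning the CPU, yet a timer that was due almost immediately cannot run until the wait ends.

```javascript
// Hypothetical helper standing in for a blocking I/O system call: the calling
// thread sleeps for `ms` milliseconds. Nobody calls Atomics.notify, so it times out.
function blockingWait(ms) {
  const cell = new Int32Array(new SharedArrayBuffer(4));
  Atomics.wait(cell, 0, 0, ms);
}

// Hypothetical demo: measures how late a 0 ms timer fires behind the blocked thread.
function demoBlocking() {
  return new Promise((resolve) => {
    const start = Date.now();
    // This callback is due almost immediately...
    setTimeout(() => resolve(Date.now() - start), 0);
    // ...but the thread is blocked, so nothing else can run until the wait ends.
    blockingWait(100);
  });
}

demoBlocking().then((delay) => console.log(`timer ran after about ${delay} ms`));
```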

Non-blocking I/O

Definition: after a user process (thread) issues an I/O system call, if the I/O operation is not ready, the call returns an error immediately and the process (thread) does not wait; instead, it polls to check whether the I/O operation is ready. Once it is ready, the actual I/O operation blocks the user process (thread) until the result is returned to it.

- Because this model requires the user process (thread) to keep asking whether the I/O operation is ready (usually in a while loop), it occupies the CPU and consumes CPU resources;

- The user process (thread) is not blocked before the I/O operation is ready, but once it is ready, the actual I/O operation that follows does block it;

- This model is only suitable for applications with low concurrency that do not require timely responses.
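The polling loop described above can be sketched with a simulated device. Everything here is hypothetical: `createDevice` fakes a non-blocking descriptor whose `tryRead` fails with the POSIX error code `EAGAIN` until the data is ready, and `pollRead` retries in a loop, counting how many attempts it took.

```javascript
// Hypothetical non-blocking device: reads fail with EAGAIN until data is ready.
function createDevice(readyAfterPolls, data) {
  let polls = 0;
  return {
    tryRead() {
      polls += 1;
      if (polls < readyAfterPolls) {
        const err = new Error('resource temporarily unavailable');
        err.code = 'EAGAIN'; // the errno POSIX uses for "not ready yet"
        throw err;
      }
      return data; // ready: the actual read happens now
    },
  };
}

// Hypothetical busy-polling reader: every failed attempt burns CPU time.
function pollRead(device) {
  let attempts = 0;
  for (;;) {
    attempts += 1;
    try {
      return { data: device.tryRead(), attempts };
    } catch (err) {
      if (err.code !== 'EAGAIN') throw err; // a real error, not "not ready"
    }
  }
}

console.log(pollRead(createDevice(5, 'hello'))); // { data: 'hello', attempts: 5 }
```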

Synchronous (asynchronous) I/O

After a user process (thread) issues an I/O system call, if the call blocks the process (thread), it is synchronous I/O; otherwise it is asynchronous I/O.

Whether an I/O operation is synchronous or asynchronous depends on the communication mechanism between the user process (thread) and the I/O operation:

- Synchronous: the user process (thread) interacts with the I/O operation through a kernel buffer. The kernel places the result of the I/O operation in the buffer and then copies the data from the buffer to the user process (thread), which stays blocked until the I/O operation completes;

- Asynchronous: the user process (thread) interacts with the I/O operation directly through the kernel. The kernel copies the result of the I/O operation straight to the user process (thread), and the process (thread) is not blocked.
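From JavaScript, Node.js exposes both styles for file I/O: `fs.readFileSync` blocks the caller until the data is available, while `fs.readFile` returns immediately and delivers the data to a callback. The sketch writes a small temporary file (the file name pattern and `demoFileIo` are hypothetical). Be aware that under the hood libuv serves asynchronous file operations from its thread pool rather than through kernel-level asynchronous I/O, so the call is asynchronous from the JavaScript caller's point of view.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

let demoRuns = 0; // gives every run its own temporary file

// Hypothetical demo comparing a synchronous and an asynchronous read.
function demoFileIo() {
  const file = path.join(os.tmpdir(), `io-demo-${process.pid}-${demoRuns++}.txt`);
  fs.writeFileSync(file, 'hello');
  const order = [];

  // Synchronous: the caller is blocked until the data is in hand.
  order.push(`sync read: ${fs.readFileSync(file, 'utf8')}`);

  return new Promise((resolve, reject) => {
    // Asynchronous: the call returns at once; the data arrives in a callback later.
    fs.readFile(file, 'utf8', (err, text) => {
      if (err) return reject(err);
      order.push(`async read: ${text}`);
      fs.unlinkSync(file); // clean up the temporary file
      resolve(order);
    });
    order.push('caller keeps running');
  });
}

demoFileIo().then((order) => console.log(order.join('\n')));
// sync read: hello
// caller keeps running
// async read: hello
```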

The concurrency model of Node.js

Node.js uses a single-threaded, event-driven asynchronous I/O model. In my view, it chose this model for the following reasons:

- JavaScript runs on a single thread in V8, which makes multi-threading extremely difficult to implement;

- Most network applications are I/O-intensive, and managing multi-threaded resources reasonably and efficiently while guaranteeing high concurrency is more complicated than managing the resources of a single thread.

In short, Node.js adopts a single-threaded, event-driven asynchronous I/O model and implements it with the main thread's EventLoop and auxiliary Worker threads:

- After a Node.js process starts, its main thread creates an EventLoop, whose main job is to register event callbacks and execute them in some later iteration of the loop;

- Worker threads execute the actual event tasks (synchronously, in threads other than the main thread) and return the results to the main thread's EventLoop, which then executes the callbacks for the related events.
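The helper threads Node.js itself uses (libuv's thread pool) are not directly programmable from JavaScript, so the sketch below uses the `worker_threads` module as an analogy for the pattern just described: a CPU-heavy loop runs synchronously in another thread, the main thread stays free, and the result comes back as a message whose callback the main thread's EventLoop runs. The names `jobSource` and `sumInWorker` are hypothetical.

```javascript
const { Worker } = require('worker_threads');

// Hypothetical job: sums 1..n synchronously inside a worker thread.
const jobSource = `
  const { parentPort, workerData } = require('worker_threads');
  let sum = 0;
  for (let i = 1; i <= workerData.n; i++) sum += i; // runs off the main thread
  parentPort.postMessage(sum);
`;

// Hypothetical helper: resolves with the worker's result.
function sumInWorker(n) {
  return new Promise((resolve, reject) => {
    const worker = new Worker(jobSource, { eval: true, workerData: { n } });
    worker.once('message', resolve); // handled by the main thread's event loop
    worker.once('error', reject);
  });
}

const log = [];
sumInWorker(1000000).then((sum) => {
  log.push(`callback: ${sum}`);
  console.log(log.join('\n'));
});
log.push('main thread is free'); // runs before the worker's result arrives
// main thread is free
// callback: 500000500000
```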

Summary

Node.js is a technology that front-end developers have to deal with today and will keep dealing with in the future, yet most front-end developers' understanding of it stays on the surface. To help everyone better understand the concurrency model of Node.js, this article first introduced processes, threads, and coroutines, then covered the different I/O models, and finally gave a brief introduction to the Node.js concurrency model. Although the Node.js concurrency model itself gets relatively little space here, the fundamentals stay the same no matter how things change: once you master the underlying basics and then dig into the design and implementation of Node.js, you will get twice the result with half the effort.

The above is the detailed content of Let's talk about processes, threads, coroutines and concurrency models in Node.js. For more information, please follow other related articles on the PHP Chinese website!
