Detailed explanation of MySQL8.0 InnoDB parallel execution

Overview

After years of development, MySQL has become the most popular database, widely used in the Internet industry, and gradually penetrating into various traditional industries. The reason for its popularity is, on the one hand, its excellent high-concurrency transaction processing capabilities, and on the other hand, it also benefits from the rich ecosystem of MySQL. MySQL works well in processing short queries in OLTP scenarios, but its ability to handle complex large queries is limited. The most direct point is that for a SQL statement, MySQL can only use one CPU core to process it. In this scenario, it cannot use the multi-core capabilities of the host CPU. MySQL has not stood still and has been developing. The newly launched version 8.0.14 introduces the parallel query feature for the first time, which doubles the performance of check table and select count(*) type statements. Although the current usage scenarios are relatively limited, the subsequent development is worth looking forward to.

Recommendation: "mysql video tutorial"

Usage method

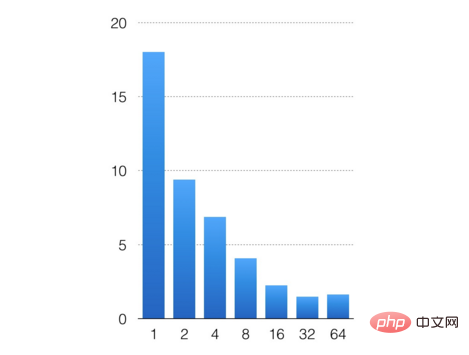

Set the number of concurrent threads by configuring the parameter innodb_parallel_read_threads. Can start the parallel scan function, the default value is 4. I will do a simple experiment here, import 200 million pieces of data through sysbench, configure innodb_parallel_read_threads to 1, 2, 4, 8, 16, 32, 64 respectively to test the effect of parallel execution. The test statement is select count(*) from sbtest1;

The horizontal axis is the number of configured concurrent threads, and the vertical axis is the statement execution time. Judging from the test results, the overall parallel performance is still good. Scanning 200 million records dropped from 18s for a single thread to 1s for 32 threads. No matter how much concurrency is developed in the future, due to the limited amount of data, the management consumption of multi-threads exceeds the performance improvement brought by concurrency, and the SQL execution time cannot be continued to be shortened.

MySQL Parallel Execution

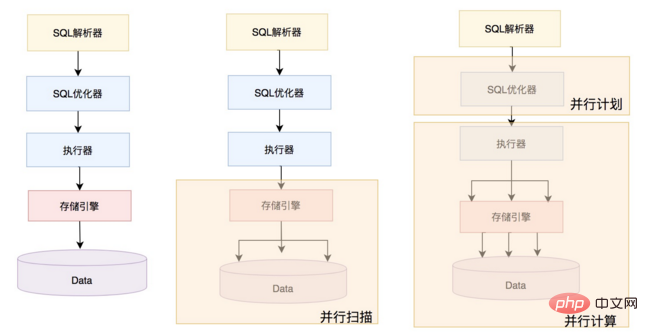

In fact, the current parallel execution of MySQL is still in a very early stage, as shown in the figure below. The left side is the previous MySQL serial processing of a single SQL form; The middle one is the parallel capability provided by the current MySQL version, the form of parallel scanning of the InnoDB engine; the far right one is the form that MySQL will develop in the future. The optimizer generates a parallel plan based on the system load and SQL, and sends the partition plan to the executor for parallelization. implement. Parallel execution is not just parallel scanning, but also includes parallel aggregation, parallel joining, parallel grouping, and parallel sorting. There are no supporting modifications to the upper-level optimizer and executor of the current version of MySQL. Therefore, the following discussion mainly focuses on how the InnoDB engine implements parallel scanning, mainly including partitioning, parallel scanning, read-ahead, and adapter classes that interact with the executor.

Partitioning

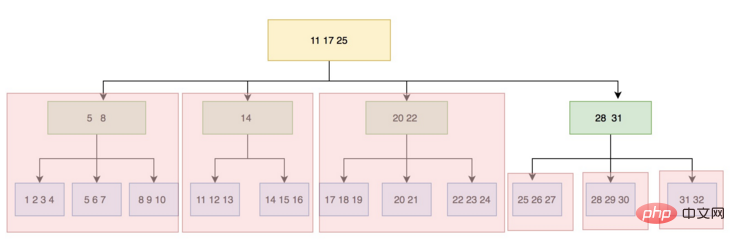

One of the core steps of parallel scanning is partitioning, which divides the scanned data into multiple parts so that multiple threads can Parallel scan. The InnoDB engine is an index-organized table. Data is stored on the disk in the form of a B tree. The unit of a node is a page (block/page). At the same time, hot pages are cached in the buffer pool and eliminated through the LRU algorithm. The logic of partitioning is to start from the root node page and scan down layer by layer. When it is judged that the number of branches on a certain layer exceeds the configured number of threads, the splitting will stop. During implementation, a total of two partitions will actually be performed. The first partition is divided according to the number of branches of the root node page. The record of the leftmost leaf node of each branch is the left lower bound, and this record is recorded as the adjacent upper bound. The upper right bound of a branch. In this way, B tree is divided into several subtrees, and each subtree is a scan partition. After the first partition, there may be a problem that the number of partitions cannot fully utilize the multi-core. For example, if the parallel scanning thread is configured as 3, and after the first partition, 4 partitions are generated, then after the first 3 partitions are completed in parallel, the fourth Each partition can only be scanned by one thread at most, and the final effect is that multi-core resources cannot be fully utilized.

Secondary partitioning

In order to solve this problem, version 8.0.17 introduced secondary partitioning. For the fourth partition, continue to explore the split, so many Sub-partitions can be scanned concurrently, and the minimum granularity of concurrent scanning by the InnoDB engine is the page level. The specific logic for judging secondary partitioning is that after one partitioning, if the number of partitions is greater than the number of threads, the partitions whose number is greater than the number of threads need to continue to be partitioned for the second time; if the number of partitions is less than the number of threads and the B tree level is very deep, then all All partitions require secondary partitioning.

The relevant code is as follows:

split_point = 0;

if (ranges.size() > max_threads()) {

//最后一批分区进行二次分区

split_point = (ranges.size() / max_threads()) * max_threads();

} else if (m_depth < SPLIT_THRESHOLD) {

/* If the tree is not very deep then don't split. For smaller tables

it is more expensive to split because we end up traversing more blocks*/

split_point = max_threads();

} else {

//如果B+tree的层次很深(层数大于或等于3,数据量很大),则所有分区都需要进行二次分区

}Whether it is a primary partition or a secondary partition, the logic of the partition boundary is the same. The record of the leftmost leaf node of each partition is the lower left boundary, and Record this record as the upper right boundary of the adjacent previous branch. This ensures that there are enough partitions, fine enough granularity, and sufficient parallelism. The figure below shows the configuration of 3 concurrent threads scanning for secondary partitioning.

The relevant code is as follows:

create_ranges(size_t depth, size_t level)

一次分区:

parallel_check_table

add_scan

partition(scan_range, level=0) /* start at root-page */

create_ranges(scan_range, depth=0, level=0)

create_contexts(range, index >= split_point)

二次分区:

split()

partition(scan_range, level=1)

create_ranges(depth=0,level)Parallel scanning

After a partition, put each partition scanning task into a lock-free queue. The parallel worker thread obtains the task from the queue and executes the scanning task. If the obtained task has the split attribute, at this time the worker The task will be split twice and put into the queue. This process mainly includes two core interfaces, one is the worker thread interface, and the other is the traversal record interface. The former obtains tasks from the queue and executes them, and maintains statistical counts; the latter obtains appropriate records based on visibility and injects them through the upper layer Callback function processing, such as counting, etc.

Parallel_reader::worker(size_t thread_id)

{

1. Extract ctx task from ctx-queue

2. According to the split attribute of ctx, Determine whether the partition needs to be further split (split())

3. Traverse all records in the partition (traverse())

4. After a partition task is completed, maintain the m_n_completed count

5. If the m_n_compeleted count reaches the ctx number, wake up all worker threads and end

6. Return err information according to the traverse interface.

}

Parallel_reader::Ctx::traverse()

{

1. Set pcursor according to range

2. Find btree, position the cursor to the starting position of the range

3. Determine visibility (check_visibility)

4. If visible, calculate according to the callback function (such as statistics)

5. Traverse backwards. If the last record of the page is reached, start the read-ahead mechanism (submit_read_ahead)

6. End after exceeding the range

}

At the same time in 8.0 Version .17 also introduces a read-ahead mechanism to avoid the problem of poor parallel performance due to IO bottlenecks. Currently, the number of threads for pre-reading cannot be configured and is hard-coded to 2 threads in the code. The unit of each pre-read is a cluster (InnoDB files are managed through a three-level structure of segments, clusters, and pages, and a cluster is a group of consecutive pages). Depending on the size of the page configuration, it may be 1M or 2M. For a common 16k page configuration, 1M is pre-read each time, which is 64 pages. When the worker thread scans, it will first determine whether the next adjacent page is the first page of the cluster. If so, it will initiate a pre-read task. Read-ahead tasks are also cached through the lock-free queue. The worker thread is the producer and the read-ahead-worker is the consumer. Since all partition pages do not overlap, read-ahead tasks are not repeated.

Executor interaction (adapter)

In fact, MySQL has encapsulated an adapter class Parallel_reader_adapter for use by the upper layer to prepare for subsequent richer parallel execution. . First of all, this class needs to solve the problem of record format and convert the records scanned by the engine layer into MySQL format. In this way, the upper and lower layers are decoupled. The executor does not need to sense the engine layer format and is processed in the MySQL format. The whole process is an assembly line. MySQL records are stored in batches through a buffer. The worker thread continuously reads the records from the engine layer. At the same time, records are continuously processed by the upper layer. The difference in reading and processing speed can be balanced through the buffer. Make sure the whole process flows. The default cache size is 2M. The number of MySQL records that the buffer can cache is determined based on the record row length of the table. The core process is mainly in the process_rows interface. The process is as follows

process_rows

{

1. Convert engine records into MySQL records

2. Get this thread Buffer information (how many mysql records were converted and how many were sent to the upper layer)

3. Fill the MySQL records into the buffer and increment the statistics m_n_read

4. Call the callback function to process (such as statistics , aggregation, sorting, etc.), auto-increment statistics m_n_send

}

For the caller, it is necessary to set the meta-information of the table and inject the processing record callback function, such as processing aggregation, sorting, Group work. The callback function is controlled by setting m_init_fn, m_load_fn and m_end_fn.

Summary

MySQL8.0 introduced parallel query. Although it is still relatively rudimentary, it has already allowed us to see the potential of MySQL parallel query. We have also seen it from the experiment. After parallel execution is turned on, SQL statement execution fully utilizes the multi-core capabilities, and the response time drops sharply. I believe that in the near future, 8.0 will support more parallel operators, including parallel aggregation, parallel connection, parallel grouping, and parallel sorting.

The above is the detailed content of Detailed explanation of MySQL8.0 InnoDB parallel execution. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1659

1659

14

14

1416

1416

52

52

1310

1310

25

25

1258

1258

29

29

1232

1232

24

24

When might a full table scan be faster than using an index in MySQL?

Apr 09, 2025 am 12:05 AM

When might a full table scan be faster than using an index in MySQL?

Apr 09, 2025 am 12:05 AM

Full table scanning may be faster in MySQL than using indexes. Specific cases include: 1) the data volume is small; 2) when the query returns a large amount of data; 3) when the index column is not highly selective; 4) when the complex query. By analyzing query plans, optimizing indexes, avoiding over-index and regularly maintaining tables, you can make the best choices in practical applications.

Can I install mysql on Windows 7

Apr 08, 2025 pm 03:21 PM

Can I install mysql on Windows 7

Apr 08, 2025 pm 03:21 PM

Yes, MySQL can be installed on Windows 7, and although Microsoft has stopped supporting Windows 7, MySQL is still compatible with it. However, the following points should be noted during the installation process: Download the MySQL installer for Windows. Select the appropriate version of MySQL (community or enterprise). Select the appropriate installation directory and character set during the installation process. Set the root user password and keep it properly. Connect to the database for testing. Note the compatibility and security issues on Windows 7, and it is recommended to upgrade to a supported operating system.

MySQL: Simple Concepts for Easy Learning

Apr 10, 2025 am 09:29 AM

MySQL: Simple Concepts for Easy Learning

Apr 10, 2025 am 09:29 AM

MySQL is an open source relational database management system. 1) Create database and tables: Use the CREATEDATABASE and CREATETABLE commands. 2) Basic operations: INSERT, UPDATE, DELETE and SELECT. 3) Advanced operations: JOIN, subquery and transaction processing. 4) Debugging skills: Check syntax, data type and permissions. 5) Optimization suggestions: Use indexes, avoid SELECT* and use transactions.

Can mysql and mariadb coexist

Apr 08, 2025 pm 02:27 PM

Can mysql and mariadb coexist

Apr 08, 2025 pm 02:27 PM

MySQL and MariaDB can coexist, but need to be configured with caution. The key is to allocate different port numbers and data directories to each database, and adjust parameters such as memory allocation and cache size. Connection pooling, application configuration, and version differences also need to be considered and need to be carefully tested and planned to avoid pitfalls. Running two databases simultaneously can cause performance problems in situations where resources are limited.

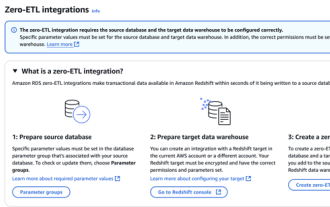

RDS MySQL integration with Redshift zero ETL

Apr 08, 2025 pm 07:06 PM

RDS MySQL integration with Redshift zero ETL

Apr 08, 2025 pm 07:06 PM

Data Integration Simplification: AmazonRDSMySQL and Redshift's zero ETL integration Efficient data integration is at the heart of a data-driven organization. Traditional ETL (extract, convert, load) processes are complex and time-consuming, especially when integrating databases (such as AmazonRDSMySQL) with data warehouses (such as Redshift). However, AWS provides zero ETL integration solutions that have completely changed this situation, providing a simplified, near-real-time solution for data migration from RDSMySQL to Redshift. This article will dive into RDSMySQL zero ETL integration with Redshift, explaining how it works and the advantages it brings to data engineers and developers.

Laravel Eloquent ORM in Bangla partial model search)

Apr 08, 2025 pm 02:06 PM

Laravel Eloquent ORM in Bangla partial model search)

Apr 08, 2025 pm 02:06 PM

LaravelEloquent Model Retrieval: Easily obtaining database data EloquentORM provides a concise and easy-to-understand way to operate the database. This article will introduce various Eloquent model search techniques in detail to help you obtain data from the database efficiently. 1. Get all records. Use the all() method to get all records in the database table: useApp\Models\Post;$posts=Post::all(); This will return a collection. You can access data using foreach loop or other collection methods: foreach($postsas$post){echo$post->

The relationship between mysql user and database

Apr 08, 2025 pm 07:15 PM

The relationship between mysql user and database

Apr 08, 2025 pm 07:15 PM

In MySQL database, the relationship between the user and the database is defined by permissions and tables. The user has a username and password to access the database. Permissions are granted through the GRANT command, while the table is created by the CREATE TABLE command. To establish a relationship between a user and a database, you need to create a database, create a user, and then grant permissions.

MySQL: The Ease of Data Management for Beginners

Apr 09, 2025 am 12:07 AM

MySQL: The Ease of Data Management for Beginners

Apr 09, 2025 am 12:07 AM

MySQL is suitable for beginners because it is simple to install, powerful and easy to manage data. 1. Simple installation and configuration, suitable for a variety of operating systems. 2. Support basic operations such as creating databases and tables, inserting, querying, updating and deleting data. 3. Provide advanced functions such as JOIN operations and subqueries. 4. Performance can be improved through indexing, query optimization and table partitioning. 5. Support backup, recovery and security measures to ensure data security and consistency.