What is Apache Kafka data collection?

Apache Kafka - Introduction

Apache Kafka originated at LinkedIn, was open-sourced as an Apache project in 2011, and became a top-level Apache project in 2012. Kafka is written in Scala and Java. It is a fault-tolerant messaging system based on the publish-subscribe model, and it is fast, scalable, and distributed by design.

This tutorial explores the principles, installation, and operation of Kafka, and then introduces the deployment of Kafka clusters. It concludes with real-time applications and integration with big data technologies.

Before proceeding with this tutorial, you must have a good understanding of Java, Scala, distributed messaging systems, and Linux environments.

Big data involves very large volumes of data, which raises two main challenges: how to collect the data, and how to analyze what has been collected. Overcoming both challenges requires a messaging system.

Kafka is designed for distributed high-throughput systems and tends to work well as a replacement for a more traditional message broker. Compared with other messaging systems, Kafka offers better throughput, built-in partitioning, replication, and inherent fault tolerance, which makes it a good fit for large-scale message processing applications.

What is a messaging system?

A messaging system is responsible for transferring data from one application to another, so applications can focus on the data rather than on how to share it. Distributed messaging is based on the concept of reliable message queuing. Messages are queued asynchronously between client applications and the messaging system. Two messaging patterns are available: point-to-point and publish-subscribe (pub-sub). Most messaging systems follow the pub-sub pattern.

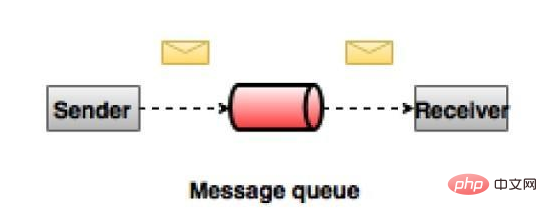

Point-to-point messaging system

In a point-to-point system, messages are held in a queue. One or more consumers can read from the queue, but any given message can be consumed by at most one consumer. Once a consumer reads a message, it is removed from the queue. A typical example is an order processing system, where each order is handled by exactly one order processor, but several order processors can work at the same time. The diagram below depicts the structure.

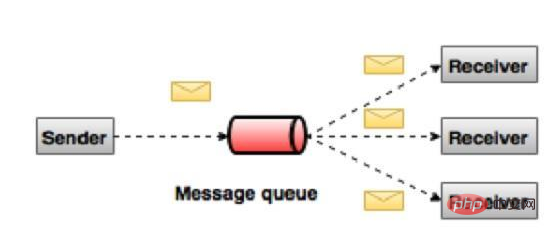
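The point-to-point behavior described above can be sketched in a few lines of Python. This is only an in-memory illustration, not Kafka code: the `PointToPointQueue` class and the processor names are hypothetical and exist purely to show that each message reaches at most one consumer.

```python
from collections import deque


class PointToPointQueue:
    """Toy in-memory queue: each message goes to at most one consumer."""

    def __init__(self):
        self._messages = deque()

    def send(self, message):
        self._messages.append(message)

    def receive(self):
        # popleft() removes the message, so no other consumer can read it.
        return self._messages.popleft() if self._messages else None


orders = PointToPointQueue()
for order_id in ("order-1", "order-2", "order-3"):
    orders.send(order_id)

# Two hypothetical order processors take turns pulling from the same queue.
processed = {"processor-a": [], "processor-b": []}
workers = list(processed)
turn = 0
while (order := orders.receive()) is not None:
    processed[workers[turn % len(workers)]].append(order)
    turn += 1

print(processed)
```

Every order ends up with exactly one processor, and the queue is empty once all orders have been read.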

Publish-Subscribe Messaging System

In a publish-subscribe system, messages are held in topics. Unlike in a point-to-point system, a consumer can subscribe to one or more topics and consume all the messages in those topics. In a publish-subscribe system, the message producer is called the publisher and the message consumer is called the subscriber. A real-life example is Dish TV, which publishes different channels such as sports, movies, and music; anyone can subscribe to the channels they want and receive them whenever those channels are available.
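For contrast with the point-to-point case, here is a minimal in-memory sketch of publish-subscribe. The `PubSubBroker` class, the topic names, and the subscriber names are assumptions made for illustration and are not part of any Kafka API.

```python
from collections import defaultdict


class PubSubBroker:
    """Toy in-memory broker: every subscriber of a topic sees every message."""

    def __init__(self):
        self._subscribers = defaultdict(list)  # topic -> list of inboxes

    def subscribe(self, topic):
        inbox = []
        self._subscribers[topic].append(inbox)
        return inbox

    def publish(self, topic, message):
        # Deliver the message to each subscriber of the topic.
        for inbox in self._subscribers.get(topic, []):
            inbox.append(message)


broker = PubSubBroker()
alice_sports = broker.subscribe("sports")
bob_sports = broker.subscribe("sports")
bob_movies = broker.subscribe("movies")

broker.publish("sports", "match highlights")
broker.publish("movies", "new release")

print(alice_sports)
print(bob_sports)
print(bob_movies)
```

Both sports subscribers receive the same message, which is the key difference from a queue where a message is handed to only one consumer.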

What is Kafka?

Apache Kafka is a distributed publish-subscribe messaging system and a robust queue that can handle large volumes of data and lets you deliver messages from one endpoint to another. Kafka is suitable for both offline and online message consumption. Kafka messages are persisted on disk and replicated within the cluster to prevent data loss. Kafka has traditionally been built on top of the ZooKeeper synchronization service, although recent releases can also run without ZooKeeper in KRaft mode. It integrates well with Apache Storm and Spark for real-time streaming data analysis.

Advantages

The following are several benefits of Kafka:

Reliability - Kafka is distributed, partitioned, replicated and fault-tolerant.

Scalability - The Kafka messaging system scales easily with no downtime.

Durability - Kafka uses a distributed commit log, which means messages are persisted to disk as quickly as possible, so they are durable.

Performance - Kafka has high throughput for both publishing and subscribing. It maintains stable performance even when many terabytes of messages are stored.

Kafka is very fast and is designed to keep downtime and data loss to a minimum; how close it comes to zero data loss depends on settings such as the replication factor and producer acknowledgements.
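To make the "replicated commit log" idea behind these benefits more concrete, the following is a simplified in-memory model. The `PartitionLog` and `ReplicatedPartition` classes are hypothetical, and the followers are updated synchronously on every write, which is a simplification of how a real Kafka cluster replicates data.

```python
class PartitionLog:
    """Toy append-only log: records get increasing offsets and reads never remove them."""

    def __init__(self):
        self._records = []

    def append(self, record):
        self._records.append(record)
        return len(self._records) - 1  # offset of the new record

    def read_from(self, offset):
        return self._records[offset:]


class ReplicatedPartition:
    """Writes go to a leader log and are copied to follower logs."""

    def __init__(self, replication_factor=3):
        self.leader = PartitionLog()
        self.followers = [PartitionLog() for _ in range(replication_factor - 1)]

    def append(self, record):
        offset = self.leader.append(record)
        for follower in self.followers:
            follower.append(record)  # simplified: copied synchronously
        return offset


partition = ReplicatedPartition(replication_factor=3)
offsets = [partition.append(f"event-{i}") for i in range(4)]

# Two independent readers track their own positions in the same log.
first_reader = partition.leader.read_from(0)
late_reader = partition.leader.read_from(2)

# If the leader is lost, a follower still holds the same records.
surviving_copy = partition.followers[0].read_from(0)

print(offsets, late_reader, surviving_copy == first_reader)
```

Because reading does not delete records, any number of consumers can replay the log from whatever offset they choose, and a follower copy can take over if the leader fails.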

Use Cases

Kafka fits many use cases. Some of them are listed below:

Metrics - Kafka is often used for operational monitoring data. This involves aggregating statistics from distributed applications to produce a centralized feed of operational data.

Log aggregation solution - Kafka can be used across an organization to collect logs from multiple services and make them available to multiple consumers in a standard format.

Stream Processing - Popular frameworks like Storm and Spark Streaming read data from a topic, process it, and write the processed data to a new topic, where it becomes available to users and applications. Kafka's strong durability is also very useful in stream processing.
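The read-process-write pattern from the stream processing use case can be sketched as follows. This is a plain Python stand-in, assuming a dictionary of lists in place of real topics; the topic names `raw-logs` and `error-events` and the log line format are invented for the example.

```python
from collections import defaultdict

# Hypothetical in-memory stand-in for topics: topic name -> list of records.
topics = defaultdict(list)
topics["raw-logs"] = [
    "INFO service=auth login ok",
    "ERROR service=billing card declined",
    "ERROR service=auth token expired",
]


def process(record):
    """Keep only error lines and normalize them into a small dict."""
    level, service, *message = record.split()
    if level != "ERROR":
        return None
    return {"service": service.split("=", 1)[1], "message": " ".join(message)}


# Read from the input topic, transform, and write to a new output topic.
for record in topics["raw-logs"]:
    result = process(record)
    if result is not None:
        topics["error-events"].append(result)

print(topics["error-events"])
```

The input topic is left untouched, so other applications could still read the raw logs while downstream consumers work from the derived topic.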

Need for Kafka

Kafka is a unified platform for handling all real-time data feeds. It supports low-latency message delivery and guarantees fault tolerance in the presence of machine failures. It can handle a large number of diverse consumers. Kafka is very fast and is cited as performing around 2 million writes per second, although actual throughput depends on hardware and configuration. Kafka persists all data to disk, which essentially means that all writes first go to the operating system's page cache (RAM). This makes it very efficient to transfer data from the page cache to a network socket.
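The efficient file-to-socket transfer mentioned above is commonly associated with the operating system's `sendfile` facility. The sketch below shows that general mechanism in Python, not Kafka's own implementation: a temporary file stands in for a log segment on disk, and a local socket pair stands in for the connection to a consumer.

```python
import os
import socket
import tempfile

payload = b"kafka-style log segment bytes\n" * 4

# Write the bytes to a regular file, standing in for a log segment on disk.
with tempfile.NamedTemporaryFile(delete=False) as segment:
    segment.write(payload)
    segment_path = segment.name

# A connected socket pair stands in for the broker-to-consumer connection.
sender, receiver = socket.socketpair()
try:
    with open(segment_path, "rb") as segment_file:
        # socket.sendfile() uses os.sendfile() where available, letting the
        # kernel copy file data to the socket, and falls back to send() otherwise.
        sent = sender.sendfile(segment_file)
    sender.close()

    # Read until the sender's close is seen as end-of-stream.
    received = b""
    while chunk := receiver.recv(4096):
        received += chunk
finally:
    sender.close()
    receiver.close()
    os.remove(segment_path)

print(sent, received == payload)
```

When the kernel path is used, the file contents do not have to pass through an application-level buffer on the way to the socket, which is the kind of saving the paragraph above refers to.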
