How to implement a simple crawler using Node.js

Why write a crawler in Node? Mainly because of the cheerio library, which implements much of jQuery's core API. If you already know jQuery, it is really pleasant to use.

Choosing dependencies

cheerio: a jQuery-style API for parsing and querying HTML in Node.js

http: Node's built-in module, providing an HTTP server and a simple HTTP client

iconv-lite: decodes gb2312 pages, which would otherwise come out as garbled characters
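iconv-lite has to be told which encoding to decode from. The target pages declare gb2312 in a `<meta>` tag, so one option is to read that declaration from the raw bytes.

The sketch below shows that idea using Node's built-in `TextDecoder` in place of iconv-lite. It rests on a few assumptions:

- The Node build has full ICU, which is the default for official builds.
- `detectCharset`, `decodeHtml` and the 1024-byte search window are illustrative names and choices, not part of the original crawler.

```javascript
// Hypothetical helper: find the charset a page declares in its <meta> tag.
function detectCharset(buffer) {
  // Declarations sit near the top of <head>; latin1 maps every byte to one
  // character, so the ASCII markup can be searched before real decoding.
  const head = buffer.subarray(0, 1024).toString('latin1');
  // Matches <meta charset="..."> and <meta ... content="text/html; charset=...">
  const match = head.match(/<meta[^>]+charset\s*=\s*["']?([\w-]+)/i);
  return match ? match[1].toLowerCase() : 'utf-8'; // fall back to UTF-8
}

// Hypothetical helper: decode the whole page with the detected charset.
// Assumes full ICU, so TextDecoder accepts labels such as 'gb2312'
// (the WHATWG Encoding Standard decodes that label as GBK).
function decodeHtml(buffer) {
  return new TextDecoder(detectCharset(buffer)).decode(buffer);
}
```

In the crawler, `decodeHtml(Buffer.concat(chunks))` could stand in for `iconv.decode(Buffer.concat(chunks), 'gb2312')`. Looking only at `<meta>` ignores the `Content-Type` response header, which a more careful crawler would check first.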

Initial implementation

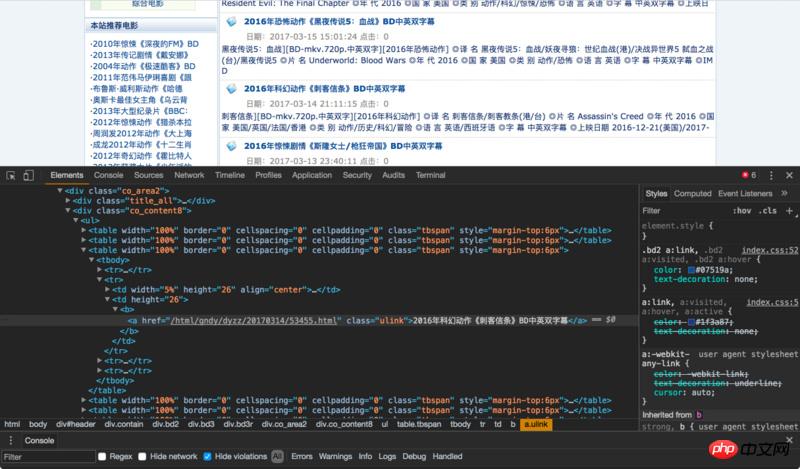

Since we want to crawl the website's content, we should first look at its basic structure.

We chose Movie Paradise (ygdy8.net) as the target site and want to crawl the download links of all the latest movies.

Analyzing the page

The page structure is as follows:

We can see that each movie title sits in an `a` tag with the class `ulink`. Moving up the tree, the outermost container has the class `co_content8`.

OK, let's get to work.

Get the movie titles on one page

First, import the dependencies and set the URL to crawl:

```javascript
var cheerio = require('cheerio');
var http = require('http');
var iconv = require('iconv-lite');

var url = 'http://www.ygdy8.net/html/gndy/dyzz/index.html';
```

Core code (index.js)

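```javascript
http.get(url, function (sres) {
  var chunks = [];

  // Collect the raw response body chunk by chunk
  sres.on('data', function (chunk) {
    chunks.push(chunk);
  });

  // chunks holds the page's HTML. After transcoding it and passing it to cheerio.load,
  // we get a variable that implements the jQuery interface; we name it `$`.
  // Everything after that is plain jQuery.
  sres.on('end', function () {
    var titles = [];

    // This page is encoded as gb2312 (declared in its <meta> tag),
    // so it must be transcoded, otherwise the text comes out garbled
    var html = iconv.decode(Buffer.concat(chunks), 'gb2312');
    var $ = cheerio.load(html, { decodeEntities: false });

    $('.co_content8 .ulink').each(function (idx, element) {
      var $element = $(element);
      titles.push({
        title: $element.text()
      });
    });

    console.log(titles);
  });
}).on('error', function (err) {
  // Without an 'error' listener, a network failure would crash the process
  console.error(err);
});
```

Run: `node index`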

The results are as follows

That gets the movie titles on one page. To get the titles from multiple pages, changing the URL by hand for every page is impractical. Fortunately there is a better way; read on!
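The crawling code does not check the HTTP status code, so an error page would be parsed as if it were a movie list. The same chunk-collecting logic is also repeated wherever a page is fetched.

Below is a minimal sketch of a shared helper. It assumes plain HTTP, with no redirects or timeouts handled. The hypothetical `fetchBuffer` wraps `http.get` in a Promise that:

- resolves with the concatenated body as a Buffer;
- rejects on connection errors or on any status other than 200.

```javascript
const http = require('http');

// Hypothetical helper: GET a URL and resolve with the raw body as a Buffer.
// Rejects on connection errors and on any status code other than 200.
function fetchBuffer(url) {
  return new Promise((resolve, reject) => {
    const req = http.get(url, (res) => {
      if (res.statusCode !== 200) {
        res.resume(); // drain the unused body so the socket is released
        reject(new Error('HTTP ' + res.statusCode + ' for ' + url));
        return;
      }
      const chunks = [];
      res.on('data', (chunk) => chunks.push(chunk));
      // Concatenate before decoding so multi-byte characters split across chunks stay intact
      res.on('end', () => resolve(Buffer.concat(chunks)));
      res.on('error', reject);
    });
    req.on('error', reject); // DNS failures, refused connections, resets
  });
}
```

The resolved Buffer can go straight to iconv-lite, for example `fetchBuffer(url).then((buf) => cheerio.load(iconv.decode(buf, 'gb2312')))`.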

Get movie titles from multiple pages

We only need to wrap the previous code in a function and call it recursively, once per page, and we are done.
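The recursive pattern can be sketched separately from the HTTP and cheerio code. In this minimal sketch, `crawlPages` and its `fetchPage(pageUrl, callback)` parameter are hypothetical:

- `fetchPage` stands for the download-and-parse step and reports the items found on one page.
- The next page is requested only after the current one has finished. This is the same one-page-at-a-time order as the recursive getTitle.
- Page URLs follow the `list_23_<n>.html` pattern of the site's list pages.

```javascript
// Hypothetical sketch: crawl list pages 1..lastPage strictly one after another.
// fetchPage(pageUrl, callback) is supplied by the caller and must call
// callback(err, items) with the items it found on that page.
function crawlPages(fetchPage, baseUrl, lastPage, done) {
  const results = [];

  function step(i) {
    const pageUrl = baseUrl + i + '.html'; // e.g. .../list_23_1.html
    fetchPage(pageUrl, (err, items) => {
      if (err) {
        done(err); // stop at the first failing page
        return;
      }
      results.push(...items);
      if (i < lastPage) {
        step(i + 1); // recurse only after the current page has finished
      } else {
        done(null, results);
      }
    });
  }

  step(1);
}
```

Passing a fake `fetchPage` makes the recursion easy to check without touching the network. In the crawler, `fetchPage` would wrap the `http.get` and cheerio code and call `callback(null, titlesOnThatPage)`.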

Core code (index.js)

```javascript
var index = 1; // page counter
var url = 'http://www.ygdy8.net/html/gndy/dyzz/list_23_';
var titles = []; // collects the titles

function getTitle(url, i) {
  console.log('Fetching page ' + i);
  http.get(url + i + '.html', function (sres) {
    var chunks = [];

    sres.on('data', function (chunk) {
      chunks.push(chunk);
    });

    sres.on('end', function () {
      var html = iconv.decode(Buffer.concat(chunks), 'gb2312');
      var $ = cheerio.load(html, { decodeEntities: false });

      $('.co_content8 .ulink').each(function (idx, element) {
        var $element = $(element);
        titles.push({
          title: $element.text()
        });
      });

      // Stop after page 3 (the article crawls three pages)
      if (i < 3) {
        getTitle(url, ++index); // recurse on the next page
      } else {
        console.log(titles);
      }
    });
  }).on('error', function (err) {
    console.error(err);
  });
}

getTitle(url, index);
```

The results are as follows:

![image 2](https://img.php.cn/upload/article/000/000/013/79a1edcfe9244c5fb6f23f007f455aaf-2.png)

Get the movie download link

Done by hand, we would click into each movie's details page to find its download address. So how do we do this with Node?

As usual, let's first analyze the page layout:

![image 3](https://img.php.cn/upload/article/000/000/013/45e6e69669b80c60f0e7eabd78b3a018-3.png)

To locate the download link precisely, we first find the element whose `id` is `Zoom`. The download links are the `a` tags inside the table cells (`td`) within it.

Then we define a function, getBtLink(), to get the download links:

```javascript
var btLink = []; // collects the download links

function getBtLink(urls, n) { // urls holds the addresses of all the detail pages
  // urls[n].title is appended to the domain, so each entry is assumed to hold a
  // site-relative detail-page path (such as a .ulink href), not the link text
  console.log('Fetching URL #' + n);
  http.get('http://www.ygdy8.net' + urls[n].title, function (sres) {
    var chunks = [];

    sres.on('data', function (chunk) {
      chunks.push(chunk);
    });

    sres.on('end', function () {
      var html = iconv.decode(Buffer.concat(chunks), 'gb2312'); // transcode
      var $ = cheerio.load(html, { decodeEntities: false });

      $('#Zoom td').children('a').each(function (idx, element) {
        var $element = $(element);
        btLink.push({
          bt: $element.attr('href')
        });
      });

      if (n < urls.length - 1) {
        getBtLink(urls, n + 1); // move on to the next detail page
      } else {
        console.log(btLink);
      }
    });
  }).on('error', function (err) {
    console.error(err);
  });
}
```

Run again: `node index`

![image 4](https://img.php.cn/upload/article/000/000/013/2816c9cbd03b1466c255e54c10156e14-4.png)

![image 5](https://img.php.cn/upload/article/000/000/013/8eb570e10f1a4e755ebffd92bd150760-5.png)

In this way we have obtained the download links for all the movies on the three pages. Isn't it simple?

Save data

Of course, once the data is crawled we need to save it. Here I chose MongoDB.

The data-saving function, save():

```javascript
// Call save() once getBtLink has collected every link.
// Uses the promise-based API of the mongodb driver (4.x and later).
async function save() {
  var MongoClient = require('mongodb').MongoClient; // import the dependency
  // mongo_url is assumed to be a connection string that names the database,
  // e.g. 'mongodb://localhost:27017/crawler' (hypothetical)
  var client = new MongoClient(mongo_url);
  try {
    await client.connect();
    console.log('Connected to the database');
    var collection = client.db().collection('node-reptitle');
    await collection.insertMany(btLink); // insert the data
    console.log('Data saved successfully');
  } catch (err) {
    console.error(err);
  } finally {
    await client.close(); // close only after the insert has completed
  }
}
```

Run again: `node index`
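getBtLink builds each detail-page address by prefixing 'http://www.ygdy8.net' to the collected path, and save() inserts btLink exactly as collected. Below is a hedged sketch of tidying the links first.

The hypothetical `normalizeLinks`:

- resolves each href with the WHATWG `URL` class, so relative and absolute hrefs are both handled;
- drops empty, unparsable and duplicate values;
- returns objects in the same `{ bt: ... }` shape that getBtLink pushes.

```javascript
// Hypothetical helper: resolve hrefs against a base URL and drop duplicates,
// returning objects in the { bt: ... } shape that getBtLink collects.
function normalizeLinks(hrefs, baseUrl) {
  const seen = new Set();
  const links = [];
  for (const href of hrefs) {
    if (!href) continue; // skip <a> tags that had no href attribute
    let absolute;
    try {
      // Relative paths are resolved; absolute URLs (ftp://, magnet:, ...) are kept as-is
      absolute = new URL(href.trim(), baseUrl).href;
    } catch (err) {
      continue; // not a parsable URL, so leave it out
    }
    if (!seen.has(absolute)) {
      seen.add(absolute);
      links.push({ bt: absolute });
    }
  }
  return links;
}
```

For example, `btLink = normalizeLinks(btLink.map((link) => link.bt), 'http://www.ygdy8.net');` just before calling save().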

The above is the detailed content of How to implement a simple crawler using Node.js. For more information, please follow other related articles on the PHP Chinese website!
