How do you limit the number of rows returned using the LIMIT clause?

The LIMIT clause restricts the number of rows a SQL query returns in its result set. It is supported by databases such as MySQL, PostgreSQL, and SQLite, and is particularly useful with large datasets where you want to control how much data comes back.

To use the LIMIT clause, you simply append it to your SELECT statement followed by the number of rows you wish to retrieve. For example, if you want to retrieve only the first 10 rows from a table named employees, your query would look like this:

SELECT * FROM employees LIMIT 10;

In this example, the query returns at most 10 rows from the employees table. Without an ORDER BY clause, the database does not guarantee which 10 rows those are. If you need a specific order, sort the data with an ORDER BY clause placed before the LIMIT, such as:

SELECT * FROM employees ORDER BY last_name LIMIT 10;

This returns the first 10 rows after sorting the table by last_name. The LIMIT clause is useful for pagination, API responses, and general performance optimization, because it reduces the amount of data returned and, in many cases, the amount the database has to process.
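The ORDER BY plus LIMIT pattern above can be tried end to end with a small sketch. It uses Python's built-in sqlite3 module and an in-memory database. The table contents and the helper name first_by_last_name are assumptions made up for illustration, not part of the original article.

```python
import sqlite3

# Hypothetical in-memory table; the names below are illustrative sample data.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE employees (employee_id INTEGER PRIMARY KEY, last_name TEXT)"
)
names = ["Young", "Adams", "Nguyen", "Baker", "Lopez", "Clark"]
conn.executemany(
    "INSERT INTO employees (last_name) VALUES (?)", [(n,) for n in names]
)


def first_by_last_name(conn, n):
    """Return at most n last names, sorted alphabetically before limiting."""
    rows = conn.execute(
        "SELECT last_name FROM employees ORDER BY last_name LIMIT ?", (n,)
    ).fetchall()
    return [row[0] for row in rows]


print(first_by_last_name(conn, 3))  # ['Adams', 'Baker', 'Clark']
```

Passing the limit as a bound parameter (the ? placeholder) rather than formatting it into the SQL string keeps the query safe when the value comes from user input. Asking for more rows than exist simply returns all of them.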

What are the best practices for using the LIMIT clause to optimize query performance?

Using the LIMIT clause effectively can significantly improve query performance, especially in large databases. Here are some best practices to consider:

- Use LIMIT Early: Apply the LIMIT clause as early as possible in the query, for example in a subquery that feeds a join, so that later steps work on fewer rows. This reduces the amount of data the database engine processes, saving resources and time.

- Combine with ORDER BY: When using LIMIT, it's often necessary to sort the data with an ORDER BY clause before limiting the output. This ensures that the limited results are meaningful and in the correct order. For example:

SELECT * FROM employees ORDER BY hire_date DESC LIMIT 5;

This query returns the 5 most recently hired employees.

- Pagination: Use LIMIT along with OFFSET for pagination. This practice is essential for applications displaying large datasets in manageable chunks. For example:

SELECT * FROM posts ORDER BY created_at DESC LIMIT 10 OFFSET 20;

This returns the next 10 posts after the first 20, useful for displaying pages of content.

- Avoid Overuse of LIMIT with Large OFFSETs: Large OFFSET values can lead to performance issues because the database must still read, and if necessary sort, every row up to the offset and then discard those rows before returning the requested ones. Consider using keyset pagination or other techniques for large datasets.

- Indexing: Ensure that the columns used in the ORDER BY clause are properly indexed. This can dramatically speed up the query execution time when combined with LIMIT.
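Keyset pagination, mentioned in the list above as an alternative to large OFFSET values, can be sketched as follows. This is a minimal illustration using Python's sqlite3 module, not a definitive implementation. The posts table, its id column, and the next_page helper are assumptions chosen for the example. The id column is the primary key, so the ORDER BY and the WHERE filter can both use its index.

```python
import sqlite3

# Hypothetical table with 25 rows, ids 1..25.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE posts (id INTEGER PRIMARY KEY, title TEXT)")
conn.executemany(
    "INSERT INTO posts (id, title) VALUES (?, ?)",
    [(i, f"post {i}") for i in range(1, 26)],
)


def next_page(conn, last_seen_id, page_size):
    """Fetch the next page of posts, newest first, without using OFFSET.

    last_seen_id is the id of the last row on the previous page,
    or None when requesting the first page.
    """
    if last_seen_id is None:
        sql = "SELECT id, title FROM posts ORDER BY id DESC LIMIT ?"
        params = (page_size,)
    else:
        # Seek directly past the previous page instead of skipping rows.
        sql = "SELECT id, title FROM posts WHERE id < ? ORDER BY id DESC LIMIT ?"
        params = (last_seen_id, page_size)
    return conn.execute(sql, params).fetchall()


page1 = next_page(conn, None, 10)           # ids 25 down to 16
page2 = next_page(conn, page1[-1][0], 10)   # ids 15 down to 6
```

The trade-off is that keyset pagination only moves from one page to the next. Jumping straight to an arbitrary page number still requires OFFSET or a different lookup.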

Can the LIMIT clause be combined with OFFSET, and how does it affect the result set?

Yes, the LIMIT clause can be combined with OFFSET to skip a specified number of rows before beginning to return rows from the result set. This combination is commonly used for pagination, allowing you to retrieve specific subsets of data from a larger result set.

The OFFSET clause specifies the number of rows to skip before starting to return rows. For example, if you want to skip the first 10 rows and return the next 5 rows, you could use the following query:

SELECT * FROM employees ORDER BY employee_id LIMIT 5 OFFSET 10;

In this example, the query skips the first 10 rows of the employees table, sorted by employee_id, and then returns the next 5 rows. The combination of LIMIT and OFFSET helps in retrieving specific "pages" of data, which is crucial for applications that need to display data in a user-friendly, paginated format.

However, using large OFFSET values can be inefficient because the database still needs to process the entire dataset up to the offset before returning the requested rows. This can lead to slower query performance and increased resource usage. To mitigate this, you can use keyset pagination or other techniques that avoid large OFFSETs.
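To make the effect of OFFSET on the result set concrete, here is a small sketch that turns a 1-based page number into a LIMIT/OFFSET pair. It uses Python's sqlite3 module. The fetch_page helper and the 23 sample rows are assumptions for illustration only.

```python
import sqlite3

# Hypothetical table with employee ids 1..23.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE employees (employee_id INTEGER PRIMARY KEY, name TEXT)"
)
conn.executemany(
    "INSERT INTO employees (employee_id, name) VALUES (?, ?)",
    [(i, f"employee {i}") for i in range(1, 24)],
)


def fetch_page(conn, page, page_size):
    """Return the employee ids on a 1-based page using LIMIT and OFFSET."""
    if page < 1 or page_size < 1:
        raise ValueError("page and page_size must be positive")
    offset = (page - 1) * page_size  # number of rows to skip
    rows = conn.execute(
        "SELECT employee_id FROM employees "
        "ORDER BY employee_id LIMIT ? OFFSET ?",
        (page_size, offset),
    ).fetchall()
    return [row[0] for row in rows]


print(fetch_page(conn, 3, 5))  # LIMIT 5 OFFSET 10 -> [11, 12, 13, 14, 15]
print(fetch_page(conn, 5, 5))  # last, partial page -> [21, 22, 23]
print(fetch_page(conn, 6, 5))  # past the end -> []
```

A page that runs past the end of the table returns fewer rows, or none, rather than raising an error, so the calling code can use a short page as its signal that there is nothing more to fetch.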

How can you ensure data consistency when using LIMIT in database queries?

Keeping results consistent when using the LIMIT clause involves several strategies for making sure the data returned is accurate and reliable. Here are some approaches to consider:

- Use Transactions: When performing operations that involve multiple queries, use transactions to ensure that all parts of the operation are completed consistently. This helps prevent partial updates that could lead to inconsistent data.

- Locking Mechanisms: Use appropriate locking mechanisms (e.g., table locks, row locks) to prevent concurrent modifications that could affect the data returned by a query with LIMIT. For example, using PostgreSQL syntax:

BEGIN TRANSACTION;
LOCK TABLE employees IN EXCLUSIVE MODE;
SELECT * FROM employees LIMIT 10;
COMMIT;

This ensures that no other operations can modify the employees table while you are retrieving the limited set of rows.

- Repeatable Read Isolation Level: Use the REPEATABLE READ or SERIALIZABLE isolation level to prevent non-repeatable reads and ensure that the data remains consistent throughout the transaction. For example, in PostgreSQL, where SET TRANSACTION must be issued inside a transaction block:

BEGIN;
SET TRANSACTION ISOLATION LEVEL REPEATABLE READ;
SELECT * FROM employees LIMIT 10;
COMMIT;

- Avoid Race Conditions: When multiple queries are running concurrently, especially those involving LIMIT and OFFSET, consider the impact of race conditions. For example, if rows are inserted or deleted while users are paging through results, the next page may repeat or skip rows. To mitigate this, use timestamp-based queries or keyset pagination instead of relying solely on LIMIT and OFFSET.

- Data Validation and Error Handling: Implement robust data validation and error handling to catch any inconsistencies. For example, if a query returns fewer rows than expected due to concurrent deletions, handle this scenario gracefully in your application logic.

By combining these strategies, you can ensure that the data returned by queries using the LIMIT clause remains consistent and reliable, even in high-concurrency environments.
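The page drift described under race conditions can be reproduced in a few lines. The sketch below uses Python's sqlite3 module with a single connection, and simulates a concurrent writer by inserting a row between two page requests. The posts table and the offset_page and keyset_page helpers are hypothetical names introduced for this illustration.

```python
import sqlite3

# Hypothetical table with ids 1..10.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE posts (id INTEGER PRIMARY KEY, title TEXT)")
conn.executemany(
    "INSERT INTO posts (id, title) VALUES (?, ?)",
    [(i, f"post {i}") for i in range(1, 11)],
)


def offset_page(conn, page_size, offset):
    """Newest-first page selected by position (LIMIT/OFFSET)."""
    rows = conn.execute(
        "SELECT id FROM posts ORDER BY id DESC LIMIT ? OFFSET ?",
        (page_size, offset),
    ).fetchall()
    return [row[0] for row in rows]


def keyset_page(conn, page_size, before_id):
    """Newest-first page selected by value (rows with id below before_id)."""
    rows = conn.execute(
        "SELECT id FROM posts WHERE id < ? ORDER BY id DESC LIMIT ?",
        (before_id, page_size),
    ).fetchall()
    return [row[0] for row in rows]


first = offset_page(conn, 3, 0)  # [10, 9, 8]

# Simulates another client adding a post between the two page requests.
conn.execute("INSERT INTO posts (id, title) VALUES (11, 'post 11')")

drifted = offset_page(conn, 3, 3)         # [8, 7, 6]: id 8 appears again
stable = keyset_page(conn, 3, first[-1])  # [7, 6, 5]: no repeat
```

OFFSET counts positions, so a new row at the top pushes every existing row down by one and the second page repeats id 8. The keyset query filters on the last id already shown, so the insert does not change what comes next.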

The above is the detailed content of How do you limit the number of rows returned using the LIMIT clause?. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1660

1660

14

14

1416

1416

52

52

1310

1310

25

25

1260

1260

29

29

1233

1233

24

24

When might a full table scan be faster than using an index in MySQL?

Apr 09, 2025 am 12:05 AM

When might a full table scan be faster than using an index in MySQL?

Apr 09, 2025 am 12:05 AM

Full table scanning may be faster in MySQL than using indexes. Specific cases include: 1) the data volume is small; 2) when the query returns a large amount of data; 3) when the index column is not highly selective; 4) when the complex query. By analyzing query plans, optimizing indexes, avoiding over-index and regularly maintaining tables, you can make the best choices in practical applications.

Can I install mysql on Windows 7

Apr 08, 2025 pm 03:21 PM

Can I install mysql on Windows 7

Apr 08, 2025 pm 03:21 PM

Yes, MySQL can be installed on Windows 7, and although Microsoft has stopped supporting Windows 7, MySQL is still compatible with it. However, the following points should be noted during the installation process: Download the MySQL installer for Windows. Select the appropriate version of MySQL (community or enterprise). Select the appropriate installation directory and character set during the installation process. Set the root user password and keep it properly. Connect to the database for testing. Note the compatibility and security issues on Windows 7, and it is recommended to upgrade to a supported operating system.

MySQL: Simple Concepts for Easy Learning

Apr 10, 2025 am 09:29 AM

MySQL: Simple Concepts for Easy Learning

Apr 10, 2025 am 09:29 AM

MySQL is an open source relational database management system. 1) Create database and tables: Use the CREATEDATABASE and CREATETABLE commands. 2) Basic operations: INSERT, UPDATE, DELETE and SELECT. 3) Advanced operations: JOIN, subquery and transaction processing. 4) Debugging skills: Check syntax, data type and permissions. 5) Optimization suggestions: Use indexes, avoid SELECT* and use transactions.

Can mysql and mariadb coexist

Apr 08, 2025 pm 02:27 PM

Can mysql and mariadb coexist

Apr 08, 2025 pm 02:27 PM

MySQL and MariaDB can coexist, but need to be configured with caution. The key is to allocate different port numbers and data directories to each database, and adjust parameters such as memory allocation and cache size. Connection pooling, application configuration, and version differences also need to be considered and need to be carefully tested and planned to avoid pitfalls. Running two databases simultaneously can cause performance problems in situations where resources are limited.

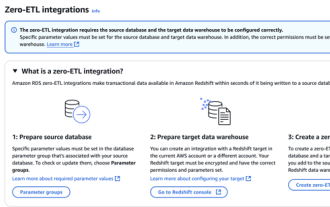

RDS MySQL integration with Redshift zero ETL

Apr 08, 2025 pm 07:06 PM

RDS MySQL integration with Redshift zero ETL

Apr 08, 2025 pm 07:06 PM

Data Integration Simplification: AmazonRDSMySQL and Redshift's zero ETL integration Efficient data integration is at the heart of a data-driven organization. Traditional ETL (extract, convert, load) processes are complex and time-consuming, especially when integrating databases (such as AmazonRDSMySQL) with data warehouses (such as Redshift). However, AWS provides zero ETL integration solutions that have completely changed this situation, providing a simplified, near-real-time solution for data migration from RDSMySQL to Redshift. This article will dive into RDSMySQL zero ETL integration with Redshift, explaining how it works and the advantages it brings to data engineers and developers.

Laravel Eloquent ORM in Bangla partial model search)

Apr 08, 2025 pm 02:06 PM

Laravel Eloquent ORM in Bangla partial model search)

Apr 08, 2025 pm 02:06 PM

LaravelEloquent Model Retrieval: Easily obtaining database data EloquentORM provides a concise and easy-to-understand way to operate the database. This article will introduce various Eloquent model search techniques in detail to help you obtain data from the database efficiently. 1. Get all records. Use the all() method to get all records in the database table: useApp\Models\Post;$posts=Post::all(); This will return a collection. You can access data using foreach loop or other collection methods: foreach($postsas$post){echo$post->

The relationship between mysql user and database

Apr 08, 2025 pm 07:15 PM

The relationship between mysql user and database

Apr 08, 2025 pm 07:15 PM

In MySQL database, the relationship between the user and the database is defined by permissions and tables. The user has a username and password to access the database. Permissions are granted through the GRANT command, while the table is created by the CREATE TABLE command. To establish a relationship between a user and a database, you need to create a database, create a user, and then grant permissions.

MySQL: The Ease of Data Management for Beginners

Apr 09, 2025 am 12:07 AM

MySQL: The Ease of Data Management for Beginners

Apr 09, 2025 am 12:07 AM

MySQL is suitable for beginners because it is simple to install, powerful and easy to manage data. 1. Simple installation and configuration, suitable for a variety of operating systems. 2. Support basic operations such as creating databases and tables, inserting, querying, updating and deleting data. 3. Provide advanced functions such as JOIN operations and subqueries. 4. Performance can be improved through indexing, query optimization and table partitioning. 5. Support backup, recovery and security measures to ensure data security and consistency.