Machine Learning Pipelines: Setting Up On-premise Kubernetes

This multi-part tutorial guides you through building an on-premise machine learning pipeline using open-source tools. It suits startups on a tight budget that want control over their infrastructure and predictable costs.

Key Advantages:

- Cost-Effective: Avoids cloud service expenses.

- Customizable: Offers greater control over your ML pipeline.

- Accessible: Simplifies Kubernetes setup with Rancher Kubernetes Engine (RKE), requiring only basic Docker and Linux skills.

- Practical Approach: A hands-on guide for ML pipeline development, ideal for beginners.

Why Go On-Premise?

Many ML pipeline tutorials assume cloud storage (AWS S3, Google Cloud Storage). This series instead builds a functional pipeline on servers you already have, which suits resource-constrained environments and gives you a safe place to learn without unpredictable costs.

Target Audience:

This guide is for software engineers or individuals building production-ready ML models, especially those new to ML pipelines.

Prerequisites:

Familiarity with Linux (Ubuntu 18.04 recommended) and basic Docker knowledge are helpful. Deep Kubernetes expertise isn't required.

Tools Used:

- Docker

- Kubernetes

- Rancher (with RKE)

- Kubeflow/Kubeflow Pipelines (covered in later parts)

- MinIO

- TensorFlow (covered in later parts)

Phase 1: Easy Kubernetes Installation with Rancher

This section focuses on the challenging task of Kubernetes installation, simplified with RKE.

Step 0: Machine Preparation:

You'll need at least two Linux machines (or VMs with bridged networking and promiscuous mode enabled) on the same LAN, designated as 'master' and 'worker'. Note that using VMs limits GPU access and performance.

Essential machine details (IP addresses, usernames, SSH keys) are needed for configuration. A temporary hostname (e.g., rancher-demo.domain.test) will be used for this tutorial. Modify your /etc/hosts file accordingly on both machines to reflect this hostname and the IP addresses. If using VMs, add the hostname entry to your host machine's /etc/hosts file as well for browser access.
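The hosts-file change can be sketched as a small shell function. This is a minimal sketch, not the tutorial's own command: the function name add_host_entry and the IP 192.168.1.10 are hypothetical, and the text does not say which node's IP the hostname should map to (the example assumes the master). The target file is a parameter so you can try it on a scratch file first.

```shell
# add_host_entry IP HOSTNAME FILE   (hypothetical helper)
# Appends "IP HOSTNAME" to FILE unless HOSTNAME is already listed there.
add_host_entry() {
  ip="$1"
  host="$2"
  file="$3"
  # -F: treat the hostname as a literal string; -w: match it as a whole word
  if ! grep -qwF -- "$host" "$file"; then
    printf '%s %s\n' "$ip" "$host" >> "$file"
  fi
}

# Try it on a scratch file (192.168.1.10 is a placeholder IP).
scratch="$(mktemp)"
add_host_entry 192.168.1.10 rancher-demo.domain.test "$scratch"
cat "$scratch"
rm -f "$scratch"
```

To apply it for real, call the function with /etc/hosts as the third argument from a root shell on each machine. Running it twice leaves a single entry.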

Step 1: Obtaining the RKE Binary:

Download the appropriate RKE binary for your OS from the GitHub release page, make it executable, and move it to /usr/local/bin. Verify the installation by running rke.

Step 2: Preparing Linux Hosts:

On all machines:

- Install Docker: Install Docker CE (version 19.03 or later) using the provided commands. Verify the installation and add your user to the docker group. Log out and back in for the group changes to take effect.

- SSH Keys: Set up SSH keys on the master node and copy the public key to all worker nodes. Configure SSH servers to allow port forwarding (AllowTcpForwarding yes in /etc/ssh/sshd_config).

- Disable Swap: Disable swap using sudo swapoff -a and comment out swap entries in /etc/fstab.

- Apply Sysctl Settings: Run sudo sysctl net.bridge.bridge-nf-call-iptables=1.

- DNS Configuration (Ubuntu 18.04): Install resolvconf, edit /etc/resolvconf/resolv.conf.d/head, add nameservers (e.g., 8.8.4.4 and 8.8.8.8), and restart resolvconf.
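Of the host preparation steps above, commenting out the swap entries is the easiest to get wrong by hand. The following is one possible way to script it, assuming the standard fstab layout in which the third field is the filesystem type. The function name comment_out_swap is hypothetical.

```shell
# comment_out_swap FILE   (hypothetical helper)
# Prefixes every active fstab line whose filesystem type (third field)
# is "swap" with '#'. Lines that are already comments are left untouched.
comment_out_swap() {
  file="$1"
  tmp="$(mktemp)"
  awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
    "$file" > "$tmp"
  # Write back through the original path so its ownership and mode are kept.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

After sudo swapoff -a, run the function against a copy of /etc/fstab, inspect the result, and only then apply it to the real file from a root shell.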

Step 3: Cluster Configuration File:

On the master node, use rke config to create a cluster.yml file. Provide the necessary information (IP addresses, hostnames, roles, SSH key paths, etc.).
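For orientation, a two-node cluster file might look roughly like the one written by the sketch below. The IP addresses, the ubuntu user, the key path, and the helper name write_example_cluster are all placeholders, and the file that rke config generates contains many more settings than shown here.

```shell
# write_example_cluster FILE   (hypothetical helper)
# Writes a minimal two-node RKE cluster file with placeholder values.
write_example_cluster() {
  cat > "$1" <<'EOF'
nodes:
  - address: 192.168.1.10        # master (placeholder IP)
    user: ubuntu                 # SSH user in the docker group (placeholder)
    role: [controlplane, etcd]
    ssh_key_path: ~/.ssh/id_rsa  # placeholder key path
  - address: 192.168.1.11        # worker (placeholder IP)
    user: ubuntu
    role: [worker]
    ssh_key_path: ~/.ssh/id_rsa
EOF
}

# Written under a different name so a real cluster.yml is never overwritten.
write_example_cluster cluster.example.yml
```

The sketch splits the roles so that the master runs the control plane and etcd while the second machine takes workloads. Adjust the split to your hardware.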

Step 4: Bringing Up the Cluster:

Run rke up on the master node to create the Kubernetes cluster. This process takes some time.

Step 5: Copying Kubeconfig:

Copy kube_config_cluster.yml to $HOME/.kube/config.
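A slightly more defensive version of this copy is sketched below. It assumes you may already have a kubeconfig worth keeping. The helper name install_kubeconfig and the config.bak backup name are hypothetical.

```shell
# install_kubeconfig SRC   (hypothetical helper)
# Copies SRC to $HOME/.kube/config, backing up any existing config first
# and restricting the new file to the current user.
install_kubeconfig() {
  src="$1"
  dest_dir="$HOME/.kube"
  mkdir -p "$dest_dir"
  if [ -f "$dest_dir/config" ]; then
    cp "$dest_dir/config" "$dest_dir/config.bak"
  fi
  cp "$src" "$dest_dir/config"
  chmod 600 "$dest_dir/config"
}
```

On the master node this would be called as install_kubeconfig kube_config_cluster.yml from the directory where rke up was run.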

Step 6: Installing Kubectl:

Install kubectl on the master node using the provided commands. Verify the installation by running kubectl get nodes.

Step 7: Installing Helm 3:

Install Helm 3 using the provided command.

Step 8: Installing Rancher using Helm:

Add the Rancher repository, create a cattle-system namespace, and install Rancher using Helm. Monitor the deployment status.
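The sequence described in this step could look roughly like the function below. This is a sketch under assumptions rather than the tutorial's exact commands: the text does not say which Rancher chart repository to use (the stable one is assumed here), which chart options to set, or which chart version to install. Depending on the chart version, the default TLS settings may expect cert-manager to be present already. The wrapper name install_rancher is hypothetical.

```shell
# install_rancher HOSTNAME   (hypothetical wrapper)
# Adds the Rancher stable chart repository, creates the namespace,
# installs the chart, and waits for the deployment to roll out.
install_rancher() {
  rancher_host="$1"
  helm repo add rancher-stable https://releases.rancher.com/server-charts/stable &&
  helm repo update &&
  kubectl create namespace cattle-system &&
  helm install rancher rancher-stable/rancher \
    --namespace cattle-system \
    --set hostname="$rancher_host" &&
  kubectl -n cattle-system rollout status deploy/rancher
}
```

Called as install_rancher rancher-demo.domain.test, the function stops at the first command that fails, and the final rollout status call blocks until the deployment is ready or reports an error.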

Step 9: Setting up Ingress (for access without a load balancer):

Create an ingress.yml file (adapting the host to your chosen hostname) and apply it using kubectl apply -f ingress.yml.
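As an illustration only, the helper below writes an Ingress manifest that routes the chosen hostname to a service named rancher in the cattle-system namespace. The helper name, the Ingress name, the service name, and port 80 are assumptions. The manifest uses the networking.k8s.io/v1 API, which requires Kubernetes 1.19 or later. Older clusters use a beta Ingress API with a different backend layout, so check what your cluster serves before applying it.

```shell
# write_example_ingress FILE HOSTNAME   (hypothetical helper)
# Writes an Ingress manifest for HOSTNAME; names and port are assumptions.
write_example_ingress() {
  file="$1"
  host="$2"
  cat > "$file" <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: rancher-demo-ingress
  namespace: cattle-system
spec:
  rules:
    - host: ${host}
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: rancher
                port:
                  number: 80
EOF
}

write_example_ingress ingress.example.yml rancher-demo.domain.test
```

Review the generated file, rename it to ingress.yml if it fits your cluster, and apply it with kubectl apply -f as described above.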

Step 10: Accessing Rancher:

Access the Rancher UI at https://rancher-demo.domain.test (or your chosen hostname), create a password, and set the domain name.

Step 11: Installing cert-manager:

Install cert-manager (version v0.9.1) using the provided commands. Monitor the pods to ensure they are running.

This completes the Kubernetes cluster setup. The next part of the series will cover installing Kubeflow.

Frequently Asked Questions (FAQs):

The FAQs section provides comprehensive answers to common questions about on-premise Kubernetes setup, covering benefits, comparisons with cloud-based solutions, challenges, security considerations, migration strategies, hardware requirements, scaling, Kubernetes operators, machine learning workloads, and performance monitoring.

The above is the detailed content of Machine Learning Pipelines: Setting Up On-premise Kubernetes. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

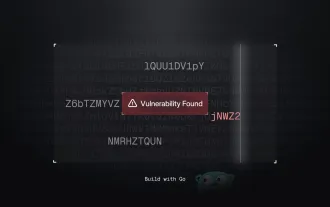

Building a Network Vulnerability Scanner with Go

Apr 01, 2025 am 08:27 AM

Building a Network Vulnerability Scanner with Go

Apr 01, 2025 am 08:27 AM

This Go-based network vulnerability scanner efficiently identifies potential security weaknesses. It leverages Go's concurrency features for speed and includes service detection and vulnerability matching. Let's explore its capabilities and ethical

CNCF Arm64 Pilot: Impact and Insights

Apr 15, 2025 am 08:27 AM

CNCF Arm64 Pilot: Impact and Insights

Apr 15, 2025 am 08:27 AM

This pilot program, a collaboration between the CNCF (Cloud Native Computing Foundation), Ampere Computing, Equinix Metal, and Actuated, streamlines arm64 CI/CD for CNCF GitHub projects. The initiative addresses security concerns and performance lim

Serverless Image Processing Pipeline with AWS ECS and Lambda

Apr 18, 2025 am 08:28 AM

Serverless Image Processing Pipeline with AWS ECS and Lambda

Apr 18, 2025 am 08:28 AM

This tutorial guides you through building a serverless image processing pipeline using AWS services. We'll create a Next.js frontend deployed on an ECS Fargate cluster, interacting with an API Gateway, Lambda functions, S3 buckets, and DynamoDB. Th

Top 21 Developer Newsletters to Subscribe To in 2025

Apr 24, 2025 am 08:28 AM

Top 21 Developer Newsletters to Subscribe To in 2025

Apr 24, 2025 am 08:28 AM

Stay informed about the latest tech trends with these top developer newsletters! This curated list offers something for everyone, from AI enthusiasts to seasoned backend and frontend developers. Choose your favorites and save time searching for rel