How Can I Optimize Entity Framework Inserts for Maximum Performance When Dealing with Large Datasets?

Boosting Entity Framework Insert Performance with Large Datasets

Inserting large datasets with Entity Framework (EF) can be slow. This article explores techniques that significantly speed up the process, especially when the inserts run inside a TransactionScope and involve a large number of records.

Optimizing SaveChanges() Calls

The common practice of calling SaveChanges() for each record is inefficient for bulk inserts. Instead, consider these alternatives:

- Batch SaveChanges(): Call SaveChanges() only once after all records are added to the context.
- Interval SaveChanges(): Call SaveChanges() after a set number of records (e.g., 100 or 1000).
- Context Recycling: Call SaveChanges(), dispose the context, and create a new one after a certain number of records.

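These methods reduce the number of SaveChanges() calls and the overhead of change tracking. Recycling the context also keeps the number of tracked entities small, so later Add() and SaveChanges() calls do not slow down as the import progresses.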
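The interval strategy comes down to splitting the input into fixed-size batches and committing once per batch. The sketch below shows only that splitting step, without Entity Framework; the names BatchingSketch, InBatches, and CountCommits are hypothetical helpers introduced for illustration, and the commit itself is left as a comment.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class BatchingSketch
{
    // Splits a sequence into consecutive batches of at most batchSize items.
    public static IEnumerable<List<T>> InBatches<T>(IEnumerable<T> source, int batchSize)
    {
        var batch = new List<T>(batchSize);
        foreach (var item in source)
        {
            batch.Add(item);
            if (batch.Count == batchSize)
            {
                yield return batch;
                batch = new List<T>(batchSize);
            }
        }

        // Emit the final, partially filled batch if there is one.
        if (batch.Count > 0)
        {
            yield return batch;
        }
    }

    // Counts how many commits an interval strategy would issue for recordCount records.
    public static int CountCommits(int recordCount, int batchSize)
    {
        int commits = 0;
        foreach (var batch in InBatches(Enumerable.Range(0, recordCount), batchSize))
        {
            // In real code: add the batch to the context and call SaveChanges() here.
            commits++;
        }
        return commits;
    }
}
```

With a batch size of 1000, an import of 560,000 records would commit 560 times instead of 560,000 times; a record count that is not a multiple of the batch size adds one extra commit for the remainder.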

Efficient Bulk Insert Implementation

The following code demonstrates optimized bulk insertion:

    // Requires: using System.Transactions; and a reference to Entity Framework.
    using (TransactionScope scope = new TransactionScope())
    {
        MyDbContext context = null;
        try
        {
            context = new MyDbContext();
            // Skip the automatic DetectChanges() scan that otherwise runs on every Add().
            context.Configuration.AutoDetectChangesEnabled = false;

            int count = 0;
            foreach (var entityToInsert in someCollectionOfEntitiesToInsert)
            {
                ++count;
                // Commit every 1000 records and recreate the context after each commit.
                context = AddToContext(context, entityToInsert, count, 1000, true);
            }

            // Save the records left over after the last full batch.
            context.SaveChanges();
        }
        finally
        {
            if (context != null)
                context.Dispose();
        }

        scope.Complete();
    }

    private MyDbContext AddToContext(MyDbContext context, Entity entity, int count, int commitCount, bool recreateContext)
    {
        context.Set<Entity>().Add(entity);

        // Commit once every commitCount records.
        if (count % commitCount == 0)
        {
            context.SaveChanges();
            if (recreateContext)
            {
                // A fresh context starts with an empty change tracker.
                context.Dispose();
                context = new MyDbContext();
                context.Configuration.AutoDetectChangesEnabled = false;
            }
        }

        return context;
    }

This example disables automatic change detection (AutoDetectChangesEnabled = false) for performance gains. The AddToContext method handles committing changes and optionally recreating the context after a specified number of records.

Benchmark Results

Testing with 560,000 records showed dramatic improvements:

- Per-record SaveChanges(): Hours
- SaveChanges() after 100 records: Over 20 minutes
- SaveChanges() after 1000 records (no context disposal): 242 seconds
- SaveChanges() after 1000 records (with context disposal): 191 seconds
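To put the two fastest timings in perspective, they can be converted into average throughput. The helper below is a hypothetical illustration (BenchmarkMath is not part of the original test); it only redoes the arithmetic on the figures quoted above.

```csharp
using System;

public static class BenchmarkMath
{
    // Average throughput in records per second.
    public static double RecordsPerSecond(int records, double seconds)
    {
        return records / seconds;
    }

    // Fraction of the baseline time saved by the faster run.
    public static double TimeSaved(double baselineSeconds, double fasterSeconds)
    {
        return (baselineSeconds - fasterSeconds) / baselineSeconds;
    }
}
```

For 560,000 records, RecordsPerSecond gives roughly 2,300 records per second at 242 seconds and roughly 2,900 at 191 seconds, and TimeSaved(242, 191) is about 0.21, so recreating the context cut the run time by about a fifth in this test.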

Conclusion

These strategies dramatically improve Entity Framework bulk insert performance. Disabling automatic change detection, calling SaveChanges() in batches, and periodically recreating the context are key to handling large datasets efficiently.

The above is the detailed content of How Can I Optimize Entity Framework Inserts for Maximum Performance When Dealing with Large Datasets?. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1653

1653

14

14

1413

1413

52

52

1304

1304

25

25

1251

1251

29

29

1224

1224

24

24

When might a full table scan be faster than using an index in MySQL?

Apr 09, 2025 am 12:05 AM

When might a full table scan be faster than using an index in MySQL?

Apr 09, 2025 am 12:05 AM

Full table scanning may be faster in MySQL than using indexes. Specific cases include: 1) the data volume is small; 2) when the query returns a large amount of data; 3) when the index column is not highly selective; 4) when the complex query. By analyzing query plans, optimizing indexes, avoiding over-index and regularly maintaining tables, you can make the best choices in practical applications.

Can I install mysql on Windows 7

Apr 08, 2025 pm 03:21 PM

Can I install mysql on Windows 7

Apr 08, 2025 pm 03:21 PM

Yes, MySQL can be installed on Windows 7, and although Microsoft has stopped supporting Windows 7, MySQL is still compatible with it. However, the following points should be noted during the installation process: Download the MySQL installer for Windows. Select the appropriate version of MySQL (community or enterprise). Select the appropriate installation directory and character set during the installation process. Set the root user password and keep it properly. Connect to the database for testing. Note the compatibility and security issues on Windows 7, and it is recommended to upgrade to a supported operating system.

MySQL: Simple Concepts for Easy Learning

Apr 10, 2025 am 09:29 AM

MySQL: Simple Concepts for Easy Learning

Apr 10, 2025 am 09:29 AM

MySQL is an open source relational database management system. 1) Create database and tables: Use the CREATEDATABASE and CREATETABLE commands. 2) Basic operations: INSERT, UPDATE, DELETE and SELECT. 3) Advanced operations: JOIN, subquery and transaction processing. 4) Debugging skills: Check syntax, data type and permissions. 5) Optimization suggestions: Use indexes, avoid SELECT* and use transactions.

Can mysql and mariadb coexist

Apr 08, 2025 pm 02:27 PM

Can mysql and mariadb coexist

Apr 08, 2025 pm 02:27 PM

MySQL and MariaDB can coexist, but need to be configured with caution. The key is to allocate different port numbers and data directories to each database, and adjust parameters such as memory allocation and cache size. Connection pooling, application configuration, and version differences also need to be considered and need to be carefully tested and planned to avoid pitfalls. Running two databases simultaneously can cause performance problems in situations where resources are limited.

The relationship between mysql user and database

Apr 08, 2025 pm 07:15 PM

The relationship between mysql user and database

Apr 08, 2025 pm 07:15 PM

In MySQL database, the relationship between the user and the database is defined by permissions and tables. The user has a username and password to access the database. Permissions are granted through the GRANT command, while the table is created by the CREATE TABLE command. To establish a relationship between a user and a database, you need to create a database, create a user, and then grant permissions.

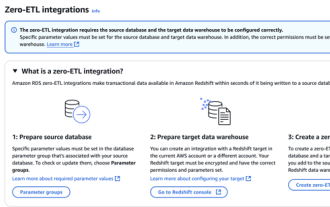

RDS MySQL integration with Redshift zero ETL

Apr 08, 2025 pm 07:06 PM

RDS MySQL integration with Redshift zero ETL

Apr 08, 2025 pm 07:06 PM

Data Integration Simplification: AmazonRDSMySQL and Redshift's zero ETL integration Efficient data integration is at the heart of a data-driven organization. Traditional ETL (extract, convert, load) processes are complex and time-consuming, especially when integrating databases (such as AmazonRDSMySQL) with data warehouses (such as Redshift). However, AWS provides zero ETL integration solutions that have completely changed this situation, providing a simplified, near-real-time solution for data migration from RDSMySQL to Redshift. This article will dive into RDSMySQL zero ETL integration with Redshift, explaining how it works and the advantages it brings to data engineers and developers.

Laravel Eloquent ORM in Bangla partial model search)

Apr 08, 2025 pm 02:06 PM

Laravel Eloquent ORM in Bangla partial model search)

Apr 08, 2025 pm 02:06 PM

LaravelEloquent Model Retrieval: Easily obtaining database data EloquentORM provides a concise and easy-to-understand way to operate the database. This article will introduce various Eloquent model search techniques in detail to help you obtain data from the database efficiently. 1. Get all records. Use the all() method to get all records in the database table: useApp\Models\Post;$posts=Post::all(); This will return a collection. You can access data using foreach loop or other collection methods: foreach($postsas$post){echo$post->

MySQL: The Ease of Data Management for Beginners

Apr 09, 2025 am 12:07 AM

MySQL: The Ease of Data Management for Beginners

Apr 09, 2025 am 12:07 AM

MySQL is suitable for beginners because it is simple to install, powerful and easy to manage data. 1. Simple installation and configuration, suitable for a variety of operating systems. 2. Support basic operations such as creating databases and tables, inserting, querying, updating and deleting data. 3. Provide advanced functions such as JOIN operations and subqueries. 4. Performance can be improved through indexing, query optimization and table partitioning. 5. Support backup, recovery and security measures to ensure data security and consistency.