Experiment with Chainlit AI interface with RAG on Upsun

Chainlit: A Scalable Conversational AI Framework

Chainlit is an open-source, asynchronous Python framework designed for building robust and scalable conversational AI applications. It offers a flexible foundation, allowing developers to integrate external APIs, custom logic, and local models seamlessly.

This tutorial demonstrates two Retrieval-Augmented Generation (RAG) implementations within Chainlit:

- Leveraging OpenAI Assistants with uploaded documents.

- Utilizing llama_index with a local document folder.

Local Chainlit Setup

Virtual Environment

Create a virtual environment:

mkdir chainlit && cd chainlit

python3 -m venv venv

source venv/bin/activate

Install Dependencies

Install required packages and save dependencies:

pip install chainlit

pip install llama_index # For implementation #2

pip install openai

pip freeze > requirements.txt

Test Chainlit

Start Chainlit:

chainlit hello

Open the placeholder app in your browser. By default, Chainlit serves it at http://localhost:8000.

Upsun Deployment

Git Initialization

Initialize a Git repository:

git init .

Create a .gitignore file:

<code>.env
database/**
data/**
storage/**
.chainlit
venv
__pycache__</code>

Upsun Project Creation

Create an Upsun project with the CLI by running upsun project:create and following the prompts. Upsun will automatically configure the Git remote for the repository.

Configuration

Example Upsun configuration for Chainlit (typically stored in .upsun/config.yaml):

applications:
  chainlit:
    source:
      root: "/"
    type: "python:3.11"
    mounts:
      "/database":
        source: "storage"
        source_path: "database"
      ".files":
        source: "storage"
        source_path: "files"
      "__pycache__":
        source: "storage"
        source_path: "pycache"
      ".chainlit":
        source: "storage"
        source_path: ".chainlit"
    web:
      commands:
        start: "chainlit run app.py --port $PORT --host 0.0.0.0"
      upstream:
        socket_family: tcp
      locations:
        "/":
          passthru: true
        "/public":
          passthru: true
    build:
      flavor: none
    hooks:
      build: |
        set -eux
        pip install -r requirements.txt
      deploy: |
        set -eux
      # post_deploy: |
routes:
  "https://{default}/":
    type: upstream
    upstream: "chainlit:http"
  "https://www.{default}":
    type: redirect
    to: "https://{default}/"

Set the OPENAI_API_KEY environment variable via Upsun CLI:

upsun variable:create env:OPENAI_API_KEY --value=sk-proj[...]

Deployment

Commit and deploy:

git add .

git commit -m "First chainlit example"

upsun push

Review the deployment status. Successful deployment will show Chainlit running on your main environment.

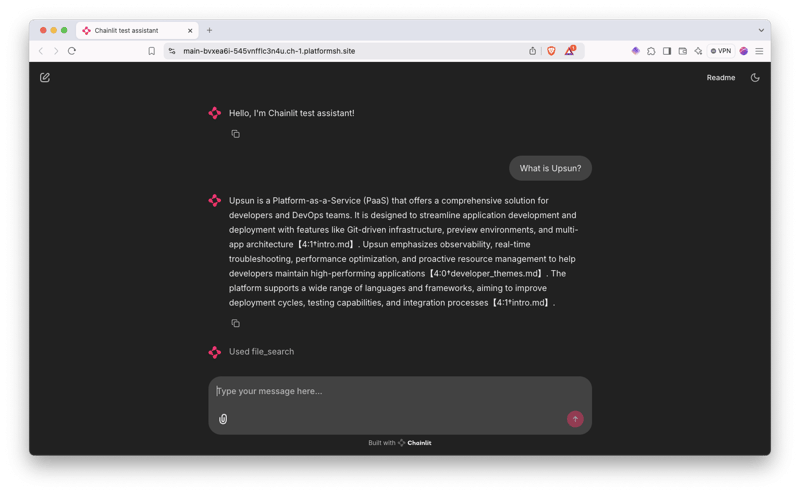

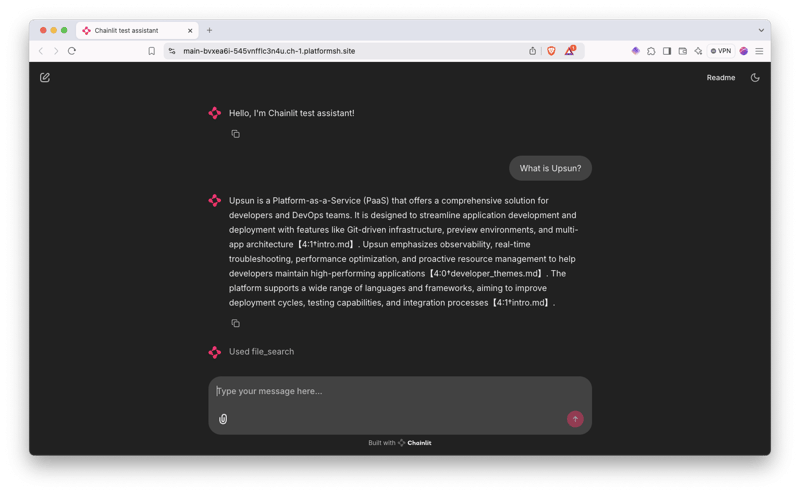

Implementation 1: OpenAI Assistant & Uploaded Files

This implementation uses an OpenAI assistant to process uploaded documents.

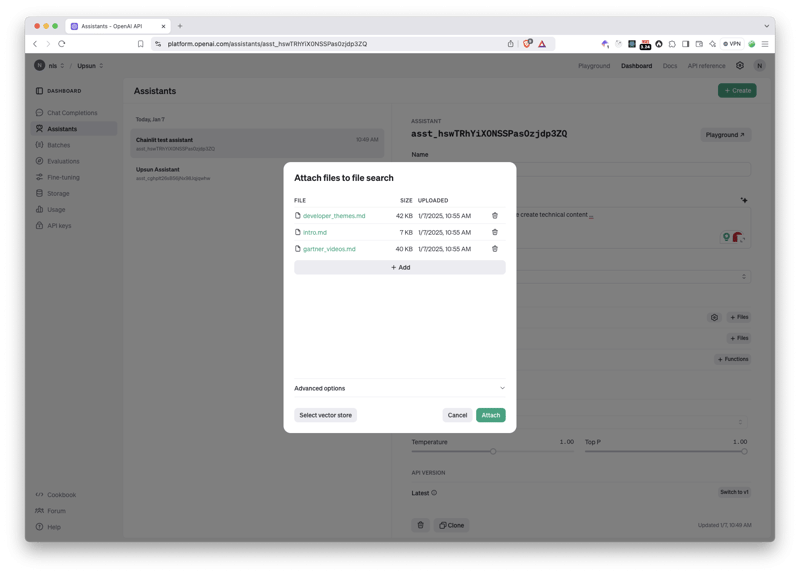

Assistant Creation

Create a new OpenAI assistant on the OpenAI Platform. Set system instructions, choose a model (with text response format), and keep the temperature low (e.g., 0.10). Copy the assistant ID (asst_[xxx]) and set it as an environment variable:

upsun variable:create env:OPENAI_ASSISTANT_ID --value=asst_[...]
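Both variables created above reach the application as ordinary environment variables. The following is a minimal sketch of how app.py might read them at startup and fail early if one is missing. The `Settings` class and `load_settings` function are hypothetical names used for illustration; they are not part of Chainlit or the OpenAI SDK.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Settings:
    """Hypothetical container for the two values the assistant needs."""
    openai_api_key: str
    assistant_id: str


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Read OPENAI_API_KEY and OPENAI_ASSISTANT_ID from the environment.

    Raises RuntimeError naming every missing variable, so a misconfigured
    environment fails at startup rather than on the first chat message.
    """
    required = ("OPENAI_API_KEY", "OPENAI_ASSISTANT_ID")
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return Settings(
        openai_api_key=env["OPENAI_API_KEY"],
        assistant_id=env["OPENAI_ASSISTANT_ID"],
    )
```

Passing the mapping as a parameter keeps the function easy to exercise locally with a plain dictionary instead of the real environment.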

Content Upload

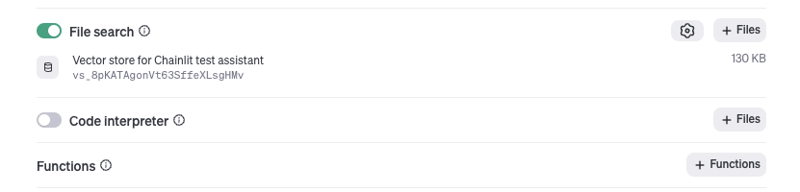

Upload your documents (Markdown preferred) to the assistant. OpenAI will create a vector store.

Assistant Logic (app.py)

Replace the contents of app.py with the assistant logic. Two handlers matter most: @cl.on_chat_start creates a new OpenAI thread for each chat session, and @cl.on_message sends each user message to that thread and streams the assistant's response back.

Commit and deploy the changes. Test the assistant.

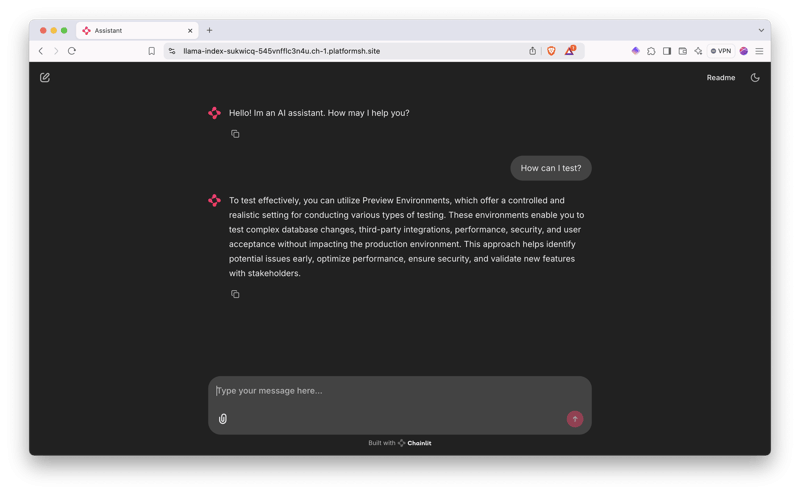

Implementation 2: OpenAI & llama_index

This implementation uses llama_index for local knowledge management and OpenAI for response generation.

Branch Creation

Create a new Git branch for this implementation (the branch name below is only an example):

git checkout -b llama-index

Folder Creation and Mounts

Create data and storage folders. Add mounts to the Upsun configuration.

app.py Update

Update app.py with the llama_index code. It loads the documents from the data folder, builds a VectorStoreIndex from them, and uses that index to answer queries via OpenAI.
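To make the load, index, and query flow concrete, here is a conceptual sketch that uses only the Python standard library. It is not the llama_index API: `ToyIndex`, `tokenize`, `cosine`, and `build_prompt` are hypothetical names, and word counts stand in for the learned embeddings a real vector index would use. The point is only to show the shape of retrieval before generation.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase word counts; a crude stand-in for a real embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyIndex:
    """Stores one (name, text, vector) entry per document."""

    def __init__(self, documents: dict[str, str]):
        self.entries = [(name, text, tokenize(text)) for name, text in documents.items()]

    def retrieve(self, query: str, top_k: int = 1) -> list[tuple[str, str]]:
        """Return the top_k (name, text) pairs most similar to the query."""
        q = tokenize(query)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[2]), reverse=True)
        return [(name, text) for name, text, _ in ranked[:top_k]]


def build_prompt(query: str, index: ToyIndex) -> str:
    """Prepend the retrieved passages to the question before calling a model."""
    context = "\n".join(text for _, text in index.retrieve(query))
    return f"Context:\n{context}\n\nQuestion: {query}"
```

In the real application, the prompt built this way would be sent to OpenAI for the final answer; llama_index handles document loading, embedding, and that model call.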

Deploy the new environment and upload the data folder. Test the application.

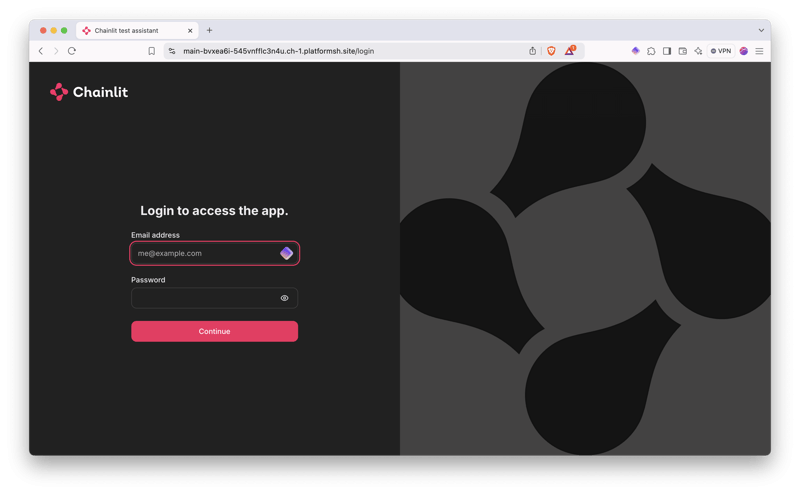

Bonus: Authentication

Add authentication using a SQLite database.

Database Setup

Create a database folder and add a mount to the Upsun configuration. Create an environment variable for the database path (the variable name and path below are illustrative; adjust them to your mount):

upsun variable:create env:DB_PATH --value=/app/database/

Authentication Logic (app.py)

Add authentication logic to app.py using @cl.password_auth_callback. This adds a login form.

Create a script to generate hashed passwords. Add users to the database (using hashed passwords). Deploy the authentication and test login.
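The password script and user table could look roughly like the sketch below, which uses only the standard library. The table name, column names, salt format, and iteration count are assumptions made for illustration, not the schema of the original project. A function such as `check_login` could then be called from the function decorated with @cl.password_auth_callback to decide whether to return a `cl.User` or `None`.

```python
import hashlib
import hmac
import secrets
import sqlite3
from typing import Optional

ITERATIONS = 200_000  # assumed work factor for PBKDF2; tune for your hardware


def hash_password(password: str, salt: Optional[bytes] = None) -> str:
    """Return 'salt_hex$hash_hex' computed with PBKDF2-HMAC-SHA256."""
    salt = salt or secrets.token_bytes(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return f"{salt.hex()}${digest.hex()}"


def verify_password(password: str, stored: str) -> bool:
    """Re-hash with the stored salt and compare in constant time."""
    salt_hex, _ = stored.split("$", 1)
    candidate = hash_password(password, bytes.fromhex(salt_hex))
    return hmac.compare_digest(candidate, stored)


def init_db(conn: sqlite3.Connection) -> None:
    """Create the (hypothetical) users table if it does not exist yet."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS users ("
        "username TEXT PRIMARY KEY, password TEXT NOT NULL)"
    )
    conn.commit()


def add_user(conn: sqlite3.Connection, username: str, password: str) -> None:
    """Store the user with a hashed password, never the plain text."""
    conn.execute(
        "INSERT OR REPLACE INTO users (username, password) VALUES (?, ?)",
        (username, hash_password(password)),
    )
    conn.commit()


def check_login(conn: sqlite3.Connection, username: str, password: str) -> bool:
    """True only if the user exists and the password matches its hash."""
    row = conn.execute(
        "SELECT password FROM users WHERE username = ?", (username,)
    ).fetchone()
    return row is not None and verify_password(password, row[0])
```

For a local trial, `sqlite3.connect(":memory:")` gives a throwaway database; on Upsun the connection would point at a file inside the mounted database folder.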

Conclusion

This tutorial demonstrated deploying a Chainlit application on Upsun with two RAG implementations and authentication. The flexible architecture allows for various adaptations and integrations.
