A surprising thing about PyPI&#s BigQuery data

You can get download numbers for PyPI packages (or projects) from a Google BigQuery dataset. You need a Google account and credentials, and Google gives 1 TiB of free quota per month.

Each month, I have automation to fetch the download numbers for the 8,000 most popular packages over the past 30 days, and make it available as more accessible JSON and CSV files at Top PyPI Packages. This data is widely used for research in academia and industry.

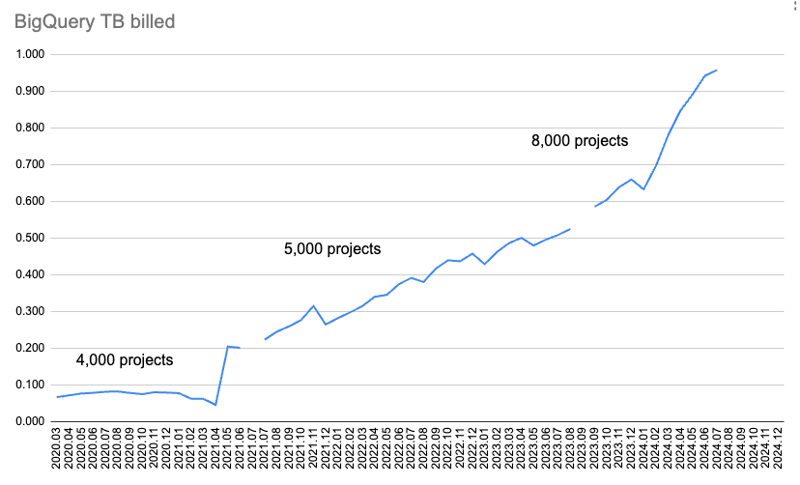

However, as more packages and releases are uploaded to PyPI, and there are more and more downloads logged, the amount of billed data increases too.

This chart shows the amount of data billed per month.

At first, I was only collecting downloads data for 4,000 packages, and it was fetched for two queries: downloads over 365 days and over 30 days. But as time passed, it started using up too much quota to download data for 365 days.

So I ditched the 365-day data, and increased the 30-day data from 4,000 to 5,000 packages. Later, I checked how much quota was being used and increased from 5,000 packages to 8,000 packages.

But then I exceeded the BigQuery monthly quota of 1 TiB fetching data for July 2024.

To fetch the missing data and investigate what's going in, I started Google Cloud's 90-day, $300 (€277.46) free-trial ?

Here's what I found!

Finding: it costs more to get data for downloads from only pip than from all installers

I use the pypinfo client to help query BigQuery. By default, it only fetches downloads for pip.

Only pip

This command gets one day's download data for the top 10 packages, for pip only:

$ pypinfo --limit 10 --days 1 "" project Served from cache: False Data processed: 58.21 GiB Data billed: 58.21 GiB Estimated cost: <pre class="brush:php;toolbar:false">$ pypinfo --all --limit 10 --days 1 "" project Served from cache: False Data processed: 46.63 GiB Data billed: 46.63 GiB Estimated cost: <pre class="brush:php;toolbar:false">SELECT file.project as project, COUNT(*) as download_count, FROM `bigquery-public-data.pypi.file_downloads` WHERE timestamp BETWEEN TIMESTAMP_ADD(CURRENT_TIMESTAMP(), INTERVAL -2 DAY) AND TIMESTAMP_ADD(CURRENT_TIMESTAMP(), INTERVAL -1 DAY) AND details.installer.name = "pip" GROUP BY project ORDER BY download_count DESC LIMIT 10

Results:

| project | download count |

|---|---|

| boto3 | 37,251,744 |

| aiobotocore | 16,252,824 |

| urllib3 | 16,243,278 |

| botocore | 15,687,125 |

| requests | 13,271,314 |

| s3fs | 12,865,055 |

| s3transfer | 12,014,278 |

| fsspec | 11,982,305 |

| charset-normalizer | 11,684,740 |

| certifi | 11,639,584 |

| Total | 158,892,247 |

All installers

Adding the --all flag gets one day's download data for the top 10 packages, for all installers:

$ pypinfo --limit 10 --days 1 "" project Served from cache: False Data processed: 58.21 GiB Data billed: 58.21 GiB Estimated cost: <pre class="brush:php;toolbar:false">$ pypinfo --all --limit 10 --days 1 "" project Served from cache: False Data processed: 46.63 GiB Data billed: 46.63 GiB Estimated cost: <pre class="brush:php;toolbar:false">SELECT file.project as project, COUNT(*) as download_count, FROM `bigquery-public-data.pypi.file_downloads` WHERE timestamp BETWEEN TIMESTAMP_ADD(CURRENT_TIMESTAMP(), INTERVAL -2 DAY) AND TIMESTAMP_ADD(CURRENT_TIMESTAMP(), INTERVAL -1 DAY) AND details.installer.name = "pip" GROUP BY project ORDER BY download_count DESC LIMIT 10

| project | download count |

|---|---|

| boto3 | 39,495,624 |

| botocore | 17,281,187 |

| urllib3 | 17,225,121 |

| aiobotocore | 16,430,826 |

| requests | 14,287,965 |

| s3fs | 12,958,516 |

| charset-normalizer | 12,781,405 |

| certifi | 12,647,098 |

| setuptools | 12,608,120 |

| idna | 12,510,335 |

| Total | 168,226,197 |

So we can see the default pip-only costs an extra 25% data processed and data billed, and costs an extra 25% in dollars.

Unsurprisingly, the actual download counts are higher for all installers. The ranking has changed a bit, but I expect we're still getting more-or-less the same packages in the top thousands of results.

Queries

It sends a query like this to BigQuery for only pip:

SELECT file.project as project, COUNT(*) as download_count, FROM `bigquery-public-data.pypi.file_downloads` WHERE timestamp BETWEEN TIMESTAMP_ADD(CURRENT_TIMESTAMP(), INTERVAL -2 DAY) AND TIMESTAMP_ADD(CURRENT_TIMESTAMP(), INTERVAL -1 DAY) GROUP BY project ORDER BY download_count DESC LIMIT 10

And for all installers:

$ pypinfo --all --limit 100 --days 1 "" installer Served from cache: False Data processed: 29.49 GiB Data billed: 29.49 GiB Estimated cost: <pre class="brush:php;toolbar:false">SELECT file.project as project, COUNT(*) as download_count, FROM `bigquery-public-data.pypi.file_downloads` WHERE timestamp BETWEEN TIMESTAMP_ADD(CURRENT_TIMESTAMP(), INTERVAL -2 DAY) AND TIMESTAMP_ADD(CURRENT_TIMESTAMP(), INTERVAL -1 DAY) GROUP BY project ORDER BY download_count DESC LIMIT 8000

These queries are the same, except the default has an extra AND details.installer.name = "pip" condition. It seems reasonable it would cost more to do extra filtering work.

Installers

Let's look at the installers:

| installer name | download count |

|---|---|

| pip | 1,121,198,711 |

| uv | 117,194,833 |

| requests | 29,828,272 |

| poetry | 23,009,454 |

| None | 8,916,745 |

| bandersnatch | 6,171,555 |

| setuptools | 1,362,797 |

| Bazel | 1,280,271 |

| Browser | 1,096,328 |

| Nexus | 593,230 |

| Homebrew | 510,247 |

| Artifactory | 69,063 |

| pdm | 62,904 |

| OS | 13,108 |

| devpi | 9,530 |

| conda | 2,272 |

| pex | 194 |

| Total | 1,311,319,514 |

pip still by far the most popular, and unsurprising uv is up there too, with about 10% of pip's downloads.

The others are about 25% or less of uv. A lot of them are mirroring services that we wanted to exclude before.

I think given uv's importance, and my expectation that it will continue to take a bigger share of the pie, plus especially the extra cost for filtering by just pip, means that we should switch to fetching data for all downloaders. Plus the others don't account for that much of the pie.

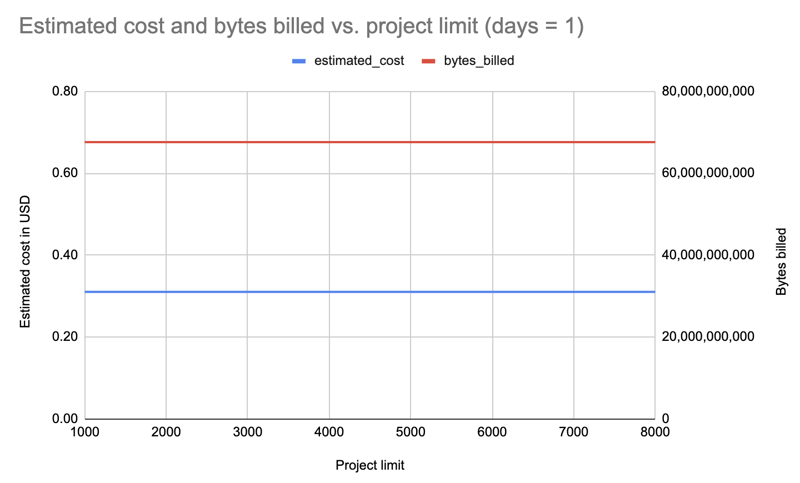

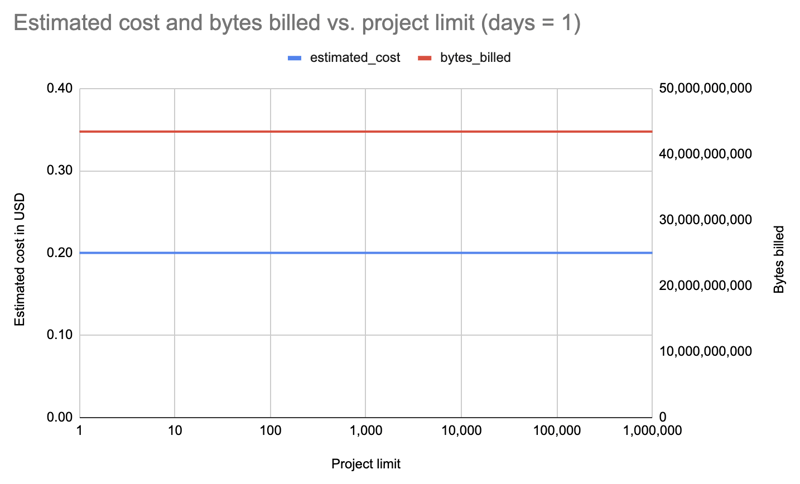

Finding: the number of packages doesn't affect the cost

This was the biggest surprise. Earlier I'd been increasing or decreasing the number to try and remain under quota. But it turns out it makes no difference how many packages you query!

I fetched data for just one day and all installers for different package limits: 1000, 2000, 3000, 4000, 5000, 6000, 7000, 8000. Sample query:

$ pypinfo --limit 10 --days 1 "" project Served from cache: False Data processed: 58.21 GiB Data billed: 58.21 GiB Estimated cost: <pre class="brush:php;toolbar:false">$ pypinfo --all --limit 10 --days 1 "" project Served from cache: False Data processed: 46.63 GiB Data billed: 46.63 GiB Estimated cost: <pre class="brush:php;toolbar:false">SELECT file.project as project, COUNT(*) as download_count, FROM `bigquery-public-data.pypi.file_downloads` WHERE timestamp BETWEEN TIMESTAMP_ADD(CURRENT_TIMESTAMP(), INTERVAL -2 DAY) AND TIMESTAMP_ADD(CURRENT_TIMESTAMP(), INTERVAL -1 DAY) AND details.installer.name = "pip" GROUP BY project ORDER BY download_count DESC LIMIT 10

Result: Interestingly, the cost is the same for all limits (1000-8000): $0.31.

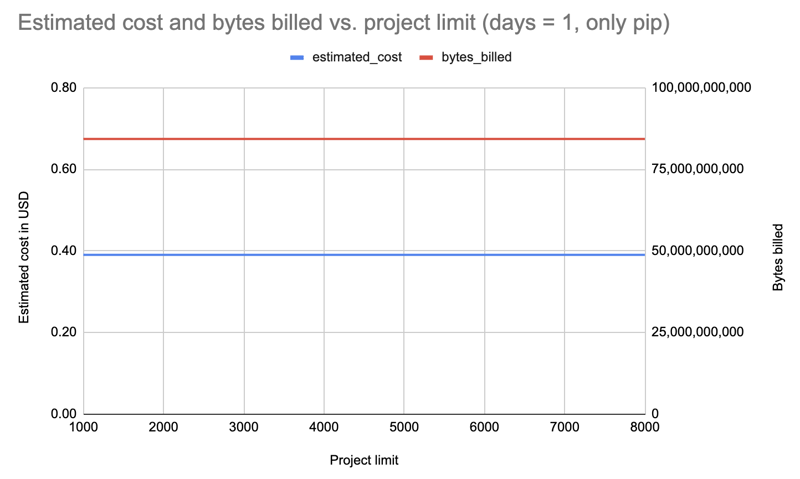

Repeating with one day but filtering for pip only:

Result: Cost increased to $0.39 but again the same for all limits.

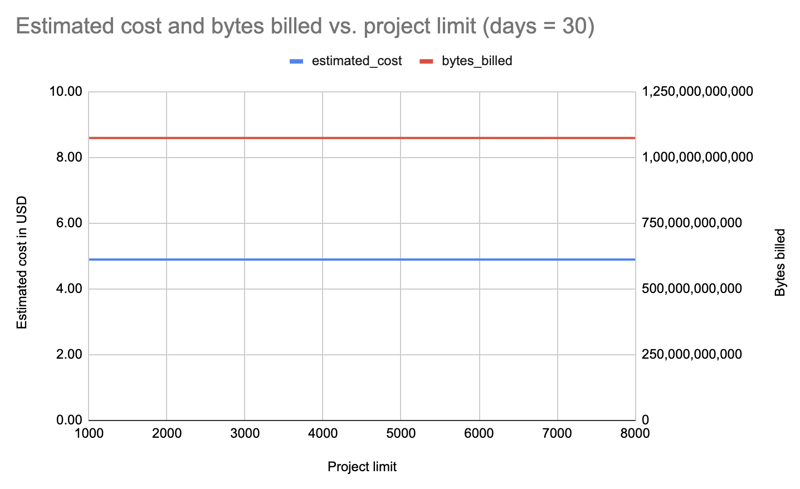

Let's repeat with all installers, but for 30 days, and this time query in decreasing limits, in case we were only paying for incremental changes: 8000, 7000, 6000, 5000, 4000, 3000, 2000, 1000:

Result: Again, the cost is the same regardless of package limit: $4.89 per query.

Well then, let's repeat with the limit increasing by powers of ten, up to 1,000,000! This last one fetches data for all 531,022 packages on PyPI:

| limit | projects count | estimated cost | bytes billed | bytes processed |

|---|---|---|---|---|

| 1 | 1 | 0.20 | 43,447,746,560 | 43,447,720,943 |

| 10 | 10 | 0.20 | 43,447,746,560 | 43,447,720,943 |

| 100 | 100 | 0.20 | 43,447,746,560 | 43,447,720,943 |

| 1000 | 1,000 | 0.20 | 43,447,746,560 | 43,447,720,943 |

| 8000 | 8,000 | 0.20 | 43,447,746,560 | 43,447,720,943 |

| 10000 | 10,000 | 0.20 | 43,447,746,560 | 43,447,720,943 |

| 100000 | 100,000 | 0.20 | 43,447,746,560 | 43,447,720,943 |

| 1000000 | 531,022 | 0.20 | 43,447,746,560 | 43,447,720,943 |

Result: Again, same cost, whether for 1 package or 531,022 packages!

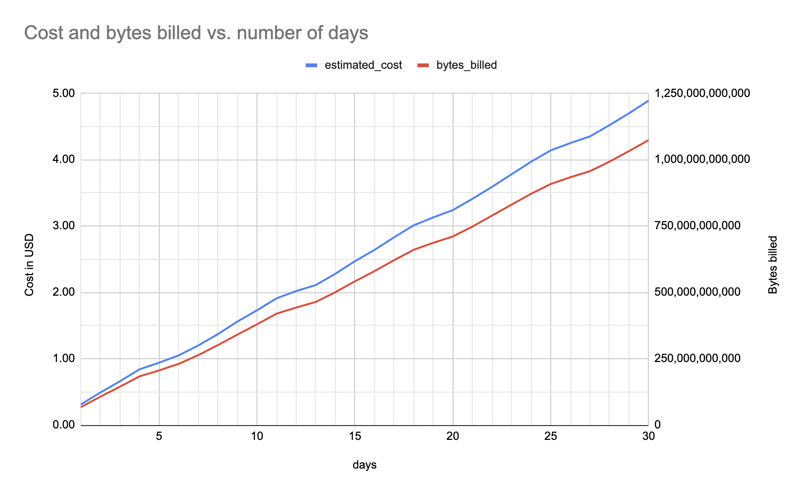

Finding: the number of days affects the cost

No surprise. I'd earlier noticed 365 days too took much quota, and I could continue with 30 days.

Here's the estimated cost and bytes billed (for one package, all installers) between one and 30 days (f"pypinfo --all --json --indent 0 --days {days} --limit 1 '' project"), showing a roughly linear increase:

Conclusion

It doesn't matter how many packages I fetch data for, I might as well fetch all and make it available to everyone, depending on the size of the data file. It will make sense to still offer a smaller file with 8,000 or so packages: often you just need a large-ish yet manageable number.

It costs more to filter for only downloads from pip, so I've switched to fetching data for all installers.

The number of days affects the cost, so I will need to decrease this in the future to stay within quota. For example, at some point I may need to switch from 30 to 25 days, and later from 25 to 20 days.

More details from the investigation, the scripts and data files can be found at

hugovk/top-pypi-packages#36.

And let me know if you know any tricks to reduce costs!

Header photo: "The Balancing Rock, Stonehenge, Near Glen Innes, NSW" by the Royal Australian Historical Society, with no known copyright restrictions.

The above is the detailed content of A surprising thing about PyPI&#s BigQuery data. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1670

1670

14

14

1428

1428

52

52

1329

1329

25

25

1274

1274

29

29

1256

1256

24

24

Python vs. C : Learning Curves and Ease of Use

Apr 19, 2025 am 12:20 AM

Python vs. C : Learning Curves and Ease of Use

Apr 19, 2025 am 12:20 AM

Python is easier to learn and use, while C is more powerful but complex. 1. Python syntax is concise and suitable for beginners. Dynamic typing and automatic memory management make it easy to use, but may cause runtime errors. 2.C provides low-level control and advanced features, suitable for high-performance applications, but has a high learning threshold and requires manual memory and type safety management.

Python and Time: Making the Most of Your Study Time

Apr 14, 2025 am 12:02 AM

Python and Time: Making the Most of Your Study Time

Apr 14, 2025 am 12:02 AM

To maximize the efficiency of learning Python in a limited time, you can use Python's datetime, time, and schedule modules. 1. The datetime module is used to record and plan learning time. 2. The time module helps to set study and rest time. 3. The schedule module automatically arranges weekly learning tasks.

Python vs. C : Exploring Performance and Efficiency

Apr 18, 2025 am 12:20 AM

Python vs. C : Exploring Performance and Efficiency

Apr 18, 2025 am 12:20 AM

Python is better than C in development efficiency, but C is higher in execution performance. 1. Python's concise syntax and rich libraries improve development efficiency. 2.C's compilation-type characteristics and hardware control improve execution performance. When making a choice, you need to weigh the development speed and execution efficiency based on project needs.

Learning Python: Is 2 Hours of Daily Study Sufficient?

Apr 18, 2025 am 12:22 AM

Learning Python: Is 2 Hours of Daily Study Sufficient?

Apr 18, 2025 am 12:22 AM

Is it enough to learn Python for two hours a day? It depends on your goals and learning methods. 1) Develop a clear learning plan, 2) Select appropriate learning resources and methods, 3) Practice and review and consolidate hands-on practice and review and consolidate, and you can gradually master the basic knowledge and advanced functions of Python during this period.

Python vs. C : Understanding the Key Differences

Apr 21, 2025 am 12:18 AM

Python vs. C : Understanding the Key Differences

Apr 21, 2025 am 12:18 AM

Python and C each have their own advantages, and the choice should be based on project requirements. 1) Python is suitable for rapid development and data processing due to its concise syntax and dynamic typing. 2)C is suitable for high performance and system programming due to its static typing and manual memory management.

Which is part of the Python standard library: lists or arrays?

Apr 27, 2025 am 12:03 AM

Which is part of the Python standard library: lists or arrays?

Apr 27, 2025 am 12:03 AM

Pythonlistsarepartofthestandardlibrary,whilearraysarenot.Listsarebuilt-in,versatile,andusedforstoringcollections,whereasarraysareprovidedbythearraymoduleandlesscommonlyusedduetolimitedfunctionality.

Python: Automation, Scripting, and Task Management

Apr 16, 2025 am 12:14 AM

Python: Automation, Scripting, and Task Management

Apr 16, 2025 am 12:14 AM

Python excels in automation, scripting, and task management. 1) Automation: File backup is realized through standard libraries such as os and shutil. 2) Script writing: Use the psutil library to monitor system resources. 3) Task management: Use the schedule library to schedule tasks. Python's ease of use and rich library support makes it the preferred tool in these areas.

Python for Web Development: Key Applications

Apr 18, 2025 am 12:20 AM

Python for Web Development: Key Applications

Apr 18, 2025 am 12:20 AM

Key applications of Python in web development include the use of Django and Flask frameworks, API development, data analysis and visualization, machine learning and AI, and performance optimization. 1. Django and Flask framework: Django is suitable for rapid development of complex applications, and Flask is suitable for small or highly customized projects. 2. API development: Use Flask or DjangoRESTFramework to build RESTfulAPI. 3. Data analysis and visualization: Use Python to process data and display it through the web interface. 4. Machine Learning and AI: Python is used to build intelligent web applications. 5. Performance optimization: optimized through asynchronous programming, caching and code