DB Migration for Golang Services: Why It Matters

DB migration: why does it matter?

Have you ever deployed an update to production that changed the database schema, only to run into bugs and need to revert it? That is where migrations come in.

Database migration serves several key purposes:

- Schema Evolution: As applications evolve, their data models change. Migrations allow developers to systematically update the database schema to reflect these changes, ensuring that the database structure matches the application code.

- Version Control: Migrations provide a way to version the database schema, allowing teams to track changes over time. This versioning helps in understanding the evolution of the database and aids in collaboration among developers.

- Consistency Across Environments: Migrations ensure that the database schema is consistent across different environments (development, testing, production). This reduces the risk of discrepancies that can lead to bugs and integration issues.

- Rollback Capability: Many migration tools support rolling back changes, allowing developers to revert to a previous state of the database if a migration causes issues. This enhances stability during the development and deployment process.

- Automated Deployment: Migrations can be automated as part of the deployment process, ensuring that the necessary schema changes are applied to the database without manual intervention. This streamlines the release process and reduces human error.

Applying migrations in Golang projects

To create a comprehensive, production-grade setup for a Golang service using GORM with MySQL that allows for easy migrations, updates, and rollbacks, you need to include migration tooling, handle database connection pooling, and ensure proper struct definitions. Here’s a complete example to guide you through the process:

Project Structure

/golang-service
|-- main.go
|-- database
|   |-- migration.go
|-- models
|   |-- user.go
|-- config
|   |-- config.go
|-- migrations
|   |-- ...
|-- go.mod

1. Database Configuration (config/config.go)

package config

import (
	"fmt"
	"log"
	"os"
	"time"

	"github.com/joho/godotenv"
	"gorm.io/driver/mysql"
	"gorm.io/gorm"
)

var DB *gorm.DB

func ConnectDB() {
	// Load variables from .env for local development. In production they are
	// usually injected into the environment directly, so a missing file is not fatal.
	if err := godotenv.Load(); err != nil {
		log.Println("no .env file loaded, using existing environment variables")
	}

	// charset=utf8mb4: supports the full Unicode range, including emojis.
	// parseTime=True: the driver scans DATE and DATETIME columns into Go's time.Time.
	// loc=Local: time values are interpreted in the Go process's local time zone (time.Local).
	dsn := fmt.Sprintf(
		"%s:%s@tcp(%s:%s)/%s?charset=utf8mb4&parseTime=True&loc=Local",
		os.Getenv("DB_USER"),
		os.Getenv("DB_PASS"),
		os.Getenv("DB_HOST"),
		os.Getenv("DB_PORT"),
		os.Getenv("DB_NAME"),
	)

	db, err := gorm.Open(mysql.Open(dsn), &gorm.Config{})
	if err != nil {
		log.Fatalf("failed to connect database: %v", err)
	}

	sqlDB, err := db.DB()
	if err != nil {
		log.Fatalf("failed to configure database connection: %v", err)
	}

	// Connection pool settings.
	// SetMaxIdleConns(10): keep up to 10 idle (open but unused) connections ready for reuse.
	sqlDB.SetMaxIdleConns(10)
	// SetMaxOpenConns(100): cap open connections (in use plus idle) at 100 to avoid overloading the database.
	sqlDB.SetMaxOpenConns(100)
	// SetConnMaxLifetime(time.Hour): a connection is reused for at most 1 hour, then closed
	// and replaced by a new one when needed.
	sqlDB.SetConnMaxLifetime(time.Hour)

	DB = db
}
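As written, ConnectDB builds a DSN with empty fields when a variable is missing, and the problem only surfaces later as a connection error. A minimal sketch of failing fast instead, assuming the same DB_* variable names as the .env file; `buildDSN` is a hypothetical helper (not part of GORM or the MySQL driver), and passing `getenv` in as a parameter is just a choice that makes it testable without touching the real environment.

```go
package main

import (
	"fmt"
	"os"
	"strings"
)

// buildDSN (hypothetical helper) reads the DB_* variables used by ConnectDB and
// returns an error naming every missing one, instead of building a DSN with
// empty fields that only fails later at connection time.
func buildDSN(getenv func(string) string) (string, error) {
	keys := []string{"DB_USER", "DB_PASS", "DB_HOST", "DB_PORT", "DB_NAME"}
	vals := make(map[string]string, len(keys))
	var missing []string
	for _, k := range keys {
		v := getenv(k)
		if v == "" {
			missing = append(missing, k)
		}
		vals[k] = v
	}
	if len(missing) > 0 {
		return "", fmt.Errorf("missing environment variables: %s", strings.Join(missing, ", "))
	}
	// Same DSN format and parameters as ConnectDB.
	return fmt.Sprintf("%s:%s@tcp(%s:%s)/%s?charset=utf8mb4&parseTime=True&loc=Local",
		vals["DB_USER"], vals["DB_PASS"], vals["DB_HOST"], vals["DB_PORT"], vals["DB_NAME"]), nil
}

func main() {
	dsn, err := buildDSN(os.Getenv)
	if err != nil {
		fmt.Println("config error:", err)
		return
	}
	// Print only the user name: the DSN also contains the password.
	fmt.Println("DSN built for user", strings.SplitN(dsn, ":", 2)[0])
}
```

Inside ConnectDB, calling `buildDSN(os.Getenv)` and exiting via `log.Fatal` on error would replace the `fmt.Sprintf` call. This sketch treats an empty DB_PASS as missing; drop it from `keys` if passwordless local accounts are expected.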

2. Database Migration (database/migration.go)

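package database

import (
	"log"

	"golang-service/models"

	"gorm.io/gorm"
)

// Migrate runs GORM's AutoMigrate for the application models.
// AutoMigrate creates missing tables, columns and indexes, but it never drops
// columns and has no rollback; the versioned SQL migrations (step 7) cover that.
func Migrate(db *gorm.DB) {
	if err := db.AutoMigrate(&models.User{}); err != nil {
		log.Fatalf("auto migration failed: %v", err)
	}
	// Apply additional custom migrations if needed
}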

3. Models (models/user.go)

package models

import "gorm.io/gorm"

type User struct {
	gorm.Model
	Name string `json:"name"`
}

4. Environment Configuration (.env)

DB_USER=root
DB_PASS=yourpassword
DB_HOST=127.0.0.1
DB_PORT=3306
DB_NAME=yourdb

5. Main Entry Point (main.go)

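package main

import (
	"errors"
	"log"
	"net/http"
	"strconv"

	"golang-service/config"
	"golang-service/database"
	"golang-service/models"

	"github.com/gin-gonic/gin"
	"gorm.io/gorm"
)

func main() {
	config.ConnectDB()
	database.Migrate(config.DB)

	r := gin.Default()
	r.POST("/users", createUser)
	r.GET("/users/:id", getUser)

	if err := r.Run(":8080"); err != nil {
		log.Fatalf("server stopped: %v", err)
	}
}

func createUser(c *gin.Context) {
	var user models.User
	if err := c.ShouldBindJSON(&user); err != nil {
		c.JSON(http.StatusBadRequest, gin.H{"error": err.Error()})
		return
	}
	if err := config.DB.Create(&user).Error; err != nil {
		c.JSON(http.StatusInternalServerError, gin.H{"error": err.Error()})
		return
	}
	c.JSON(http.StatusCreated, user)
}

func getUser(c *gin.Context) {
	// Parse the id as a number first: GORM treats a non-numeric string passed to
	// First as a raw SQL condition, so passing c.Param("id") directly allows SQL injection.
	id, err := strconv.ParseUint(c.Param("id"), 10, 64)
	if err != nil {
		c.JSON(http.StatusBadRequest, gin.H{"error": "invalid user id"})
		return
	}

	var user models.User
	if err := config.DB.First(&user, id).Error; err != nil {
		if errors.Is(err, gorm.ErrRecordNotFound) {
			c.JSON(http.StatusNotFound, gin.H{"error": "User not found"})
			return
		}
		c.JSON(http.StatusInternalServerError, gin.H{"error": err.Error()})
		return
	}
	c.JSON(http.StatusOK, user)
}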

6. Explanation:

- Database Config: Manages connection pooling for production-grade performance.

- Migration Files: (in migrations folder) Helps in versioning the database schema.

- GORM Models: Maps database tables to Go structs.

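- Database Migrations: (in database folder) Runs GORM's AutoMigrate at startup to create missing tables, columns and indexes. AutoMigrate never drops columns and cannot roll changes back, so rollbacks rely on the versioned SQL migration files instead; since both then manage the same tables, consider keeping one of them as the single source of truth.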

- Testing: You can create integration tests for this setup using httptest and testify.

7. Create First Migration

- For production environments, we can use a migration tool such as golang-migrate to apply and roll back migrations.

Install golang-migrate:

go install -tags 'mysql' github.com/golang-migrate/migrate/v4/cmd/migrate@latest

- Generate the migration files for the users table:

migrate create -ext=sql -dir=./migrations -seq create_users_table

After running the command, we get a pair of files: a .up.sql file (to update the schema) and a .down.sql file (for a potential rollback later). The number 000001 is the auto-generated sequential version of the migration.

/golang-service
|-- migrations
|   |-- 000001_create_users_table.down.sql
|   |-- 000001_create_users_table.up.sql
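Because every version needs both halves, a CI check can catch a forgotten .down.sql file before it reaches production. A minimal sketch, assuming golang-migrate's `{version}_{title}.up.sql` / `{version}_{title}.down.sql` naming shown above; `checkPairs` is a hypothetical helper, not part of golang-migrate.

```go
package main

import (
	"fmt"
	"regexp"
	"sort"
)

// Matches names like 000001_create_users_table.up.sql.
var migrationName = regexp.MustCompile(`^(\d+)_(.+)\.(up|down)\.sql$`)

// checkPairs groups migration file names by version and reports every
// version that lacks its .up.sql or .down.sql file. Other files are ignored.
func checkPairs(files []string) []string {
	seen := map[string]map[string]bool{}
	for _, f := range files {
		m := migrationName.FindStringSubmatch(f)
		if m == nil {
			continue
		}
		if seen[m[1]] == nil {
			seen[m[1]] = map[string]bool{}
		}
		seen[m[1]][m[3]] = true
	}
	var problems []string
	for version, dirs := range seen {
		for _, d := range []string{"up", "down"} {
			if !dirs[d] {
				problems = append(problems, fmt.Sprintf("version %s is missing its .%s.sql file", version, d))
			}
		}
	}
	sort.Strings(problems) // map iteration order is random; keep output stable
	return problems
}

func main() {
	files := []string{
		"000001_create_users_table.up.sql",
		"000001_create_users_table.down.sql",
		"000002_add_email_to_users.up.sql",
	}
	for _, p := range checkPairs(files) {
		fmt.Println(p) // version 000002 is missing its .down.sql file
	}
}
```

In CI, the file list would come from reading the ./migrations directory (for example with `os.ReadDir`), failing the build if the result is non-empty.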

Add the relevant SQL statements to the .up and .down files.

000001_create_users_table.up.sql

CREATE TABLE users (
    id BIGINT AUTO_INCREMENT PRIMARY KEY,
    name VARCHAR(255) NOT NULL,
    created_at DATETIME,
    updated_at DATETIME,
    deleted_at DATETIME
);

000001_create_users_table.down.sql

DROP TABLE IF EXISTS users;

Run the up migration to apply the changes to the database (the -verbose flag prints more log details):

migrate -path ./migrations -database "mysql://user:password@tcp(localhost:3306)/dbname" -verbose up

If something goes wrong with a migration, the following command shows the current migration version and whether it is dirty:

migrate -path ./migrations -database "mysql://user:password@tcp(localhost:3306)/dbname" version

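If a migration fails partway, golang-migrate marks the database as dirty and refuses to run further migrations. After inspecting and fixing the database by hand, use the force command (carefully) to set the version that reflects the database's real state. Force only records the version and clears the dirty flag; it does not run any SQL. For example, if version 1 is dirty and you completed its changes manually (you can check the version and dirty flag in the schema_migrations table), run: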

migrate -path ./migrations -database "mysql://user:password@tcp(localhost:3306)/dbname" force 1


8. Changing schemas

- At some point we may add new features that require schema changes; for instance, we might want to add an email field to the users table. We would do it as follows.

Create a new migration that adds an email column to the users table:

migrate create -ext=sql -dir=./migrations -seq add_email_to_users

Now we have a new pair of .up.sql and .down.sql files:

/golang-service
|-- migrations
|   |-- 000001_create_users_table.down.sql
|   |-- 000001_create_users_table.up.sql
|   |-- 000002_add_email_to_users.down.sql
|   |-- 000002_add_email_to_users.up.sql

- Add the following content to the 000002_add_email_to_users.*.sql files.

000002_add_email_to_users.up.sql

ALTER TABLE users ADD COLUMN email VARCHAR(255);

000002_add_email_to_users.down.sql

ALTER TABLE users DROP COLUMN email;

Run the up migration command again to apply the schema change:

migrate -path ./migrations -database "mysql://user:password@tcp(localhost:3306)/dbname" -verbose up

We also need to update the Go User struct (adding an Email field) to keep it in sync with the new schema:

package models

import "gorm.io/gorm"

type User struct {
	gorm.Model
	Name  string `json:"name"`
	Email string `json:"email"`
}

9. Rolling Back Migrations:

If the new schema causes bugs and we need to roll back, we use the down command:

migrate -path ./migrations -database "mysql://user:password@tcp(localhost:3306)/dbname" -verbose down 1

The number 1 indicates that we want to roll back one migration.

Here we also need to manually update the Go User struct (removing the Email field) to reflect the schema change:

package models

import "gorm.io/gorm"

type User struct {
	gorm.Model
	Name string `json:"name"`
}

10. Using a Makefile

To simplify migrating and rolling back, we can add a Makefile. Its content is as follows (recipe lines must be indented with a tab):

DB_URL := mysql://user:password@tcp(localhost:3306)/dbname

.PHONY: migrate_up migrate_down

migrate_up:
	migrate -path ./migrations -database "$(DB_URL)" -verbose up

migrate_down:
	migrate -path ./migrations -database "$(DB_URL)" -verbose down 1

Now we can simply run make migrate_up or make migrate_down from the command line to apply or roll back migrations.

11. Considerations:

- Data Loss During Rollback: Rolling back migrations that delete columns or tables may result in data loss, so always back up data before running a rollback.

- CI/CD Integration: Integrate the migration process into your CI/CD pipeline to automate schema changes during deployment.

- DB Backups: Schedule regular database backups to prevent data loss in case of migration errors.

About DB backups

Before rolling back a migration or making changes that could potentially affect your database, here are some key points to consider.

- Schema Changes: If the migration involved altering the schema (e.g., adding or removing columns, changing data types), rolling back to a previous migration can result in the loss of any data stored in those altered columns or tables.

- Data Removal: If the migration includes commands that delete data (like dropping tables or truncating tables), rolling back will execute the corresponding "down" migration, which could permanently remove that data.

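- Transaction Handling: If your migration tool runs migrations inside transactions, a failed data change can be rolled back cleanly. In MySQL, however, DDL statements such as CREATE TABLE, ALTER TABLE and DROP TABLE cause an implicit commit, so schema changes are not protected by a transaction. SQL commands run manually outside a transaction carry the same risk of losing data.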

- Data Integrity: If you have modified data in a way that depends on the current schema, rolling back could leave your database in an inconsistent state.
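Because MySQL commits implicitly on DDL, a migration file that mixes schema changes with data changes cannot be undone atomically if it fails halfway. A rough pre-deploy check that flags such files, assuming statements are separated by semicolons, contain no semicolons inside string literals, and do not start with comments; `hasMixedDDL` is a hypothetical helper and its keyword lists are deliberately incomplete.

```go
package main

import (
	"fmt"
	"strings"
)

// Statements that cause an implicit commit in MySQL (incomplete list).
var ddlPrefixes = []string{"CREATE ", "ALTER ", "DROP ", "RENAME ", "TRUNCATE "}

// Data-changing statements that a transaction could otherwise roll back.
var dmlPrefixes = []string{"INSERT ", "UPDATE ", "DELETE ", "REPLACE "}

func hasPrefixAny(s string, prefixes []string) bool {
	for _, p := range prefixes {
		if strings.HasPrefix(s, p) {
			return true
		}
	}
	return false
}

// hasMixedDDL naively splits a migration on ";" and reports whether it
// contains both DDL and DML statements.
func hasMixedDDL(sql string) bool {
	var ddl, dml bool
	for _, stmt := range strings.Split(sql, ";") {
		// The trailing space lets a bare keyword such as "TRUNCATE users" match.
		s := strings.ToUpper(strings.TrimSpace(stmt)) + " "
		ddl = ddl || hasPrefixAny(s, ddlPrefixes)
		dml = dml || hasPrefixAny(s, dmlPrefixes)
	}
	return ddl && dml
}

func main() {
	up := "ALTER TABLE users ADD COLUMN email VARCHAR(255);\nUPDATE users SET email = '' WHERE email IS NULL;"
	fmt.Println(hasMixedDDL(up)) // true
}
```

A flagged file is not necessarily wrong, but it is a good candidate for splitting into separate migrations so each one either fully succeeds or can be cleanly reverted.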

So it’s crucial to back up your data. Here’s a brief guide:

- Database Dump:

Use database-specific tools to create a full backup of your database. For MySQL, you can use:

mysqldump -u root -p dbname > backup_before_rollback.sql

This creates a file (backup_before_rollback.sql) that contains all the data and schema of the dbname database.

- Export Specific Tables:

If you only need to back up certain tables, list them after the database name in the mysqldump command:

mysqldump -u root -p dbname users > users_backup.sql

- Verify the Backup:

Ensure that the backup file has been created, and check its size or open it to confirm it contains the necessary data.

- Store Backups Securely:

Keep a copy of the backup in a secure location, such as cloud storage or a separate server, to prevent data loss during the rollback process.

Backups on cloud

To back up your MySQL data when using Golang and deploying on AWS EKS, you can follow these steps:

- Use mysqldump for Database Backup:

Create a mysqldump of your MySQL database using a Kubernetes cron job, for example:

mysqldump -h "$DB_HOST" -P "$DB_PORT" -u "$DB_USER" -p"$DB_PASS" "$DB_NAME" > backup.sql

Store the dump in a persistent volume or an S3 bucket.

- Automate with Kubernetes CronJob:

Use a Kubernetes CronJob to automate the mysqldump process: the job's container runs mysqldump on a schedule and writes the dump to the persistent volume or S3 bucket.

- Use AWS RDS Automated Backups (if using RDS):

If your MySQL database is on AWS RDS, you can leverage RDS automated backups and snapshots. Set a backup retention period, and take snapshots manually or automate them using Lambda functions.

- Back Up Persistent Volumes (PV) with Velero:

Use Velero, a backup tool for Kubernetes, to back up the persistent volume that holds MySQL data. Install Velero on your EKS cluster and configure it to back up to S3.

By using these methods, you can ensure your MySQL data is regularly backed up and securely stored.

The above is the detailed content of DB Migration For Golang Services, Why it matters?. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

When might a full table scan be faster than using an index in MySQL?

Apr 09, 2025 am 12:05 AM

When might a full table scan be faster than using an index in MySQL?

Apr 09, 2025 am 12:05 AM

Full table scanning may be faster in MySQL than using indexes. Specific cases include: 1) the data volume is small; 2) when the query returns a large amount of data; 3) when the index column is not highly selective; 4) when the complex query. By analyzing query plans, optimizing indexes, avoiding over-index and regularly maintaining tables, you can make the best choices in practical applications.

Can I install mysql on Windows 7

Apr 08, 2025 pm 03:21 PM

Can I install mysql on Windows 7

Apr 08, 2025 pm 03:21 PM

Yes, MySQL can be installed on Windows 7, and although Microsoft has stopped supporting Windows 7, MySQL is still compatible with it. However, the following points should be noted during the installation process: Download the MySQL installer for Windows. Select the appropriate version of MySQL (community or enterprise). Select the appropriate installation directory and character set during the installation process. Set the root user password and keep it properly. Connect to the database for testing. Note the compatibility and security issues on Windows 7, and it is recommended to upgrade to a supported operating system.

MySQL: Simple Concepts for Easy Learning

Apr 10, 2025 am 09:29 AM

MySQL: Simple Concepts for Easy Learning

Apr 10, 2025 am 09:29 AM

MySQL is an open source relational database management system. 1) Create database and tables: Use the CREATEDATABASE and CREATETABLE commands. 2) Basic operations: INSERT, UPDATE, DELETE and SELECT. 3) Advanced operations: JOIN, subquery and transaction processing. 4) Debugging skills: Check syntax, data type and permissions. 5) Optimization suggestions: Use indexes, avoid SELECT* and use transactions.

Can mysql and mariadb coexist

Apr 08, 2025 pm 02:27 PM

Can mysql and mariadb coexist

Apr 08, 2025 pm 02:27 PM

MySQL and MariaDB can coexist, but need to be configured with caution. The key is to allocate different port numbers and data directories to each database, and adjust parameters such as memory allocation and cache size. Connection pooling, application configuration, and version differences also need to be considered and need to be carefully tested and planned to avoid pitfalls. Running two databases simultaneously can cause performance problems in situations where resources are limited.

The relationship between mysql user and database

Apr 08, 2025 pm 07:15 PM

The relationship between mysql user and database

Apr 08, 2025 pm 07:15 PM

In MySQL database, the relationship between the user and the database is defined by permissions and tables. The user has a username and password to access the database. Permissions are granted through the GRANT command, while the table is created by the CREATE TABLE command. To establish a relationship between a user and a database, you need to create a database, create a user, and then grant permissions.

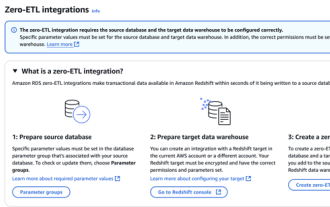

RDS MySQL integration with Redshift zero ETL

Apr 08, 2025 pm 07:06 PM

RDS MySQL integration with Redshift zero ETL

Apr 08, 2025 pm 07:06 PM

Data Integration Simplification: AmazonRDSMySQL and Redshift's zero ETL integration Efficient data integration is at the heart of a data-driven organization. Traditional ETL (extract, convert, load) processes are complex and time-consuming, especially when integrating databases (such as AmazonRDSMySQL) with data warehouses (such as Redshift). However, AWS provides zero ETL integration solutions that have completely changed this situation, providing a simplified, near-real-time solution for data migration from RDSMySQL to Redshift. This article will dive into RDSMySQL zero ETL integration with Redshift, explaining how it works and the advantages it brings to data engineers and developers.

Laravel Eloquent ORM in Bangla partial model search)

Apr 08, 2025 pm 02:06 PM

Laravel Eloquent ORM in Bangla partial model search)

Apr 08, 2025 pm 02:06 PM

LaravelEloquent Model Retrieval: Easily obtaining database data EloquentORM provides a concise and easy-to-understand way to operate the database. This article will introduce various Eloquent model search techniques in detail to help you obtain data from the database efficiently. 1. Get all records. Use the all() method to get all records in the database table: useApp\Models\Post;$posts=Post::all(); This will return a collection. You can access data using foreach loop or other collection methods: foreach($postsas$post){echo$post->

MySQL: The Ease of Data Management for Beginners

Apr 09, 2025 am 12:07 AM

MySQL: The Ease of Data Management for Beginners

Apr 09, 2025 am 12:07 AM

MySQL is suitable for beginners because it is simple to install, powerful and easy to manage data. 1. Simple installation and configuration, suitable for a variety of operating systems. 2. Support basic operations such as creating databases and tables, inserting, querying, updating and deleting data. 3. Provide advanced functions such as JOIN operations and subqueries. 4. Performance can be improved through indexing, query optimization and table partitioning. 5. Support backup, recovery and security measures to ensure data security and consistency.