Xiaohongshu's large model paper sharing session brought together authors from four major international conferences


Large models are driving a new research boom, with numerous innovative results emerging in both industry and academia.

The Xiaohongshu technical team has been actively exploring this wave as well, and its papers have appeared frequently at top international conferences such as ICLR, ACL, CVPR, AAAI, SIGIR, and WWW.

What new opportunities and challenges are we discovering at the intersection of large models and natural language processing?

What are effective evaluation methods for large models? How can large models be better integrated into application scenarios?

On June 27 from 19:00 to 21:30, the eleventh session of [REDtech is coming], "Xiaohongshu 2024 Large Model Frontier Paper Sharing", will be broadcast live online!

REDtech has invited the Xiaohongshu community search team to the livestream, where they will share six large model research papers published by Xiaohongshu in 2024. Feng Shaoxiong, head of Xiaohongshu Jingpai LTR, will join Li Yiwei, Wang Xinglin, Yuan Peiwen, Zhang Chao, and others to discuss the latest large model decoding and distillation techniques, large model evaluation methods, and practical applications of large models on the Xiaohongshu platform.

Activity Agenda

01 Escape Sky-high Cost: Early-stopping Self-Consistency for Multi-step Reasoning / Selected for ICLR 2024

Escaping sky-high cost: an early-stopping self-consistency method for multi-step reasoning | Shared by: Li Yiwei

Self-Consistency (SC) is a widely used decoding strategy in chain-of-thought reasoning: it generates multiple reasoning chains and takes the majority answer as the final answer, which improves model performance. However, it is costly, because it requires drawing a preset number of samples. At ICLR 2024, Xiaohongshu proposed a simple and scalable sampling process, Early-Stopping Self-Consistency (ESC), which significantly reduces the cost of SC. Building on this, the team further derived an ESC control scheme that dynamically selects the performance-cost balance for different tasks and models. Experiments on three mainstream reasoning tasks (mathematical, commonsense, and symbolic reasoning) show that ESC significantly reduces the average number of samples across six benchmarks while almost preserving the original performance.

Paper address: https://arxiv.org/abs/2401.10480
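The idea of majority voting with early stopping can be illustrated with a minimal sketch. The announcement does not spell out the stopping rule, so the one below (stop as soon as every answer in a small window agrees) is an assumption made for illustration, as are the names `early_stopping_self_consistency`, `sample_answer`, `window_size`, and `max_samples`. It should not be read as the authors' implementation.

```python
from collections import Counter
from typing import Callable, List, Tuple


def early_stopping_self_consistency(
    sample_answer: Callable[[], str],  # hypothetical: draws one reasoning chain, returns its final answer
    window_size: int = 5,
    max_samples: int = 40,
) -> Tuple[str, int]:
    """Return (majority answer, number of samples actually drawn)."""
    answers: List[str] = []
    while len(answers) < max_samples:
        # Draw one window of samples without exceeding the overall budget.
        take = min(window_size, max_samples - len(answers))
        window = [sample_answer() for _ in range(take)]
        answers.extend(window)
        # Assumed stopping rule: all answers in the current window agree.
        if len(set(window)) == 1:
            break
    # Plain self-consistency vote over everything sampled so far.
    majority, _ = Counter(answers).most_common(1)[0]
    return majority, len(answers)
```

With a sampler that always returns the same answer, the loop stops after the first window instead of spending the full budget; with a sampler whose answers keep disagreeing, it falls back to ordinary self-consistency over `max_samples` draws.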

02 Integrate the Essence and Eliminate the Dross: Fine-Grained Self-Consistency for Free-Form Language Generation / Selected for ACL 2024

Integrate the essence and eliminate the dross: a fine-grained self-consistency method for free-form generation tasks | Shared by: Wang Xinglin

Xiaohongshu proposed the Fine-Grained Self-Consistency (FSC) method at ACL 2024, which significantly improves the performance of self-consistency on free-form generation tasks. The team first showed experimentally that the weakness of existing self-consistency methods on free-form generation comes from coarse-grained common-sample selection, which cannot effectively exploit the common knowledge shared among fine-grained segments of different samples. On this basis, the team proposed FSC, which is based on large model self-fusion, and experiments confirmed significantly better performance on code generation, summarization, and mathematical reasoning tasks at comparable cost.

Paper address: https://github.com/WangXinglin/FSC

03 BatchEval: Towards Human-like Text Evaluation / Selected for ACL 2024; the area chair gave it a full score and recommended it for best paper

Toward human-level text evaluation | Shared by: Yuan Peiwen

Xiaohongshu proposed BatchEval at ACL 2024, a method that achieves human-like text evaluation at lower overhead. The team first showed, at a theoretical level, that the weak robustness of existing text evaluation methods stems from the uneven distribution of evaluation scores, and that their suboptimal performance when ensembling scores comes from a lack of diversity in evaluation perspectives. Inspired by the way human evaluators compare samples against each other to build a more rounded and comprehensive evaluation standard with diverse perspectives, the team proposed BatchEval by analogy. Compared with several current state-of-the-art methods, BatchEval performs significantly better in both evaluation overhead and evaluation quality.

Paper address: https://arxiv.org/abs/2401.00437

04 Poor-Supervised Evaluation for SuperLLM via Mutual Consistency / Selected for ACL 2024

Evaluating superhuman-level large language models without accurate supervision signals through mutual consistency | Shared by: Yuan Peiwen

Xiaohongshu proposed the PEEM method at ACL 2024, which uses mutual consistency between models to accurately evaluate large language models beyond the human level. The team first observed that, given the rapid development of large language models, they will gradually reach or even exceed the human level in many respects, at which point humans will no longer be able to provide accurate evaluation signals. To evaluate capabilities in this setting, the team proposed using mutual consistency between models as the evaluation signal, and derived that, given infinitely many evaluation samples and independent prediction distributions between the reference models and the model under evaluation, the consistency with the reference models can serve as an accurate measure of model capability. On this basis, the team proposed PEEM, a method based on the EM algorithm, and experiments confirmed that it effectively mitigates the fact that these conditions are not fully met in practice, thereby enabling accurate evaluation of large language models beyond the human level.

Paper address: https://github.com/ypw0102/PEEM
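To make the notion of mutual consistency concrete, the sketch below computes a raw agreement score between a candidate model's predictions and those of several reference models on the same unlabeled items. This is only an illustration of the underlying signal; it is not the EM-based PEEM procedure, which the announcement does not detail. The function names and the toy data are hypothetical.

```python
from typing import Dict, Sequence


def agreement_rate(a: Sequence[str], b: Sequence[str]) -> float:
    """Fraction of items on which two models give the same prediction."""
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("prediction lists must be non-empty and of equal length")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def mean_consistency(
    candidate: Sequence[str],
    references: Dict[str, Sequence[str]],  # hypothetical: reference model name -> predictions
) -> float:
    """Average agreement of the candidate with each reference model (no gold labels used)."""
    if not references:
        raise ValueError("at least one reference model is required")
    rates = [agreement_rate(candidate, preds) for preds in references.values()]
    return sum(rates) / len(rates)


# Toy usage: four unlabeled questions, two reference models.
candidate_preds = ["A", "B", "C", "D"]
reference_preds = {
    "ref_1": ["A", "B", "C", "A"],
    "ref_2": ["A", "B", "D", "D"],
}
score = mean_consistency(candidate_preds, reference_preds)
```

A score like this needs no human labels, which is the point of the setting described above. How far it tracks true capability depends on the independence and sample-size conditions mentioned in the text.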

05 Turning Dust into Gold: Distilling Complex Reasoning Capabilities from LLMs by Leveraging Negative Data / Selected for AAAI 2024 Oral

Using negative samples to promote the distillation of large model reasoning capabilities | Shared by: Li Yiwei

Large language models (LLMs) perform well on a variety of reasoning tasks, but their black-box nature and huge parameter counts hinder their widespread application in practice. Especially when handling complex mathematical problems, LLMs sometimes produce faulty reasoning chains. Conventional methods transfer knowledge only from positive samples and ignore synthetic data with wrong answers. At AAAI 2024, the Xiaohongshu search algorithm team proposed an innovative framework that is the first to propose and verify the value of negative samples in model distillation, building a model specialization framework that makes full use of negative samples, in addition to positive samples, to distill LLM knowledge. The framework consists of three sequential steps, Negative Assistant Training (NAT), Negative Calibrated Enhancement (NCE), and Adaptive Self-Consistency (ASC), covering the whole process from training to inference. Extensive experiments demonstrate the critical role of negative data in distilling LLM knowledge.

Paper address: https://arxiv.org/abs/2312.12832

06 NoteLLM: A Retrievable Large Language Model for Note Recommendation / Selected for WWW 2024

A note content representation recommendation system based on large language models | Shared by: Zhang Chao

The Xiaohongshu app generates a large number of new notes every day. How can this new content be recommended effectively to interested users? Recommendation representations based on note content are one way to alleviate the cold-start problem for notes, and they also form the basis for many downstream applications. In recent years, large language models have attracted great attention for their strong generalization and text understanding capabilities. We therefore hope to use large language models to build a note content representation recommendation system and improve the understanding of note content. We present our recent work from two perspectives: generation-enhanced representations and multimodal content representations. The system has already been applied to several Xiaohongshu business scenarios and has delivered significant gains.

Paper address: https://arxiv.org/abs/2403.01744
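As a rough illustration of how content representations can help with cold start, the sketch below ranks notes by cosine similarity between embedding vectors, so that a brand-new note with no interaction history can still be matched to related notes. The embeddings here are hand-written toy vectors and the function names are hypothetical; in the system described above the vectors would come from a large language model, and the announcement does not specify how retrieval is actually performed.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def top_k_similar(
    query: Sequence[float],
    note_embeddings: Dict[str, Sequence[float]],  # hypothetical: note id -> content embedding
    k: int = 2,
) -> List[Tuple[str, float]]:
    """Return the k notes whose embeddings are most similar to the query embedding."""
    scored = [(note_id, cosine_similarity(query, emb)) for note_id, emb in note_embeddings.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy usage: a new note's embedding is compared against three existing notes.
existing_notes = {"n1": [1.0, 0.0], "n2": [0.0, 1.0], "n3": [1.0, 1.0]}
neighbours = top_k_similar([1.0, 0.0], existing_notes, k=2)
```

Because the ranking depends only on content vectors, it works for notes that have not yet collected any clicks or likes.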

How to watch live

Livestream time: June 27, 2024, 19:00-21:30

Livestream platforms: WeChat video account [REDtech], with simultaneous streaming on the Bilibili, Douyin, and Xiaohongshu accounts of the same name.

Invite friends to reserve the livestream and receive gifts

The above is the detailed content of Xiaohongshu's large model paper sharing session brought together authors from four major international conferences. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

The first mechanical claw! Yuanluobao appeared at the 2024 World Robot Conference and released the first chess robot that can enter the home

Aug 21, 2024 pm 07:33 PM

The first mechanical claw! Yuanluobao appeared at the 2024 World Robot Conference and released the first chess robot that can enter the home

Aug 21, 2024 pm 07:33 PM

On August 21, the 2024 World Robot Conference was grandly held in Beijing. SenseTime's home robot brand "Yuanluobot SenseRobot" has unveiled its entire family of products, and recently released the Yuanluobot AI chess-playing robot - Chess Professional Edition (hereinafter referred to as "Yuanluobot SenseRobot"), becoming the world's first A chess robot for the home. As the third chess-playing robot product of Yuanluobo, the new Guoxiang robot has undergone a large number of special technical upgrades and innovations in AI and engineering machinery. For the first time, it has realized the ability to pick up three-dimensional chess pieces through mechanical claws on a home robot, and perform human-machine Functions such as chess playing, everyone playing chess, notation review, etc.

Claude has become lazy too! Netizen: Learn to give yourself a holiday

Sep 02, 2024 pm 01:56 PM

Claude has become lazy too! Netizen: Learn to give yourself a holiday

Sep 02, 2024 pm 01:56 PM

The start of school is about to begin, and it’s not just the students who are about to start the new semester who should take care of themselves, but also the large AI models. Some time ago, Reddit was filled with netizens complaining that Claude was getting lazy. "Its level has dropped a lot, it often pauses, and even the output becomes very short. In the first week of release, it could translate a full 4-page document at once, but now it can't even output half a page!" https:// www.reddit.com/r/ClaudeAI/comments/1by8rw8/something_just_feels_wrong_with_claude_in_the/ in a post titled "Totally disappointed with Claude", full of

At the World Robot Conference, this domestic robot carrying 'the hope of future elderly care' was surrounded

Aug 22, 2024 pm 10:35 PM

At the World Robot Conference, this domestic robot carrying 'the hope of future elderly care' was surrounded

Aug 22, 2024 pm 10:35 PM

At the World Robot Conference being held in Beijing, the display of humanoid robots has become the absolute focus of the scene. At the Stardust Intelligent booth, the AI robot assistant S1 performed three major performances of dulcimer, martial arts, and calligraphy in one exhibition area, capable of both literary and martial arts. , attracted a large number of professional audiences and media. The elegant playing on the elastic strings allows the S1 to demonstrate fine operation and absolute control with speed, strength and precision. CCTV News conducted a special report on the imitation learning and intelligent control behind "Calligraphy". Company founder Lai Jie explained that behind the silky movements, the hardware side pursues the best force control and the most human-like body indicators (speed, load) etc.), but on the AI side, the real movement data of people is collected, allowing the robot to become stronger when it encounters a strong situation and learn to evolve quickly. And agile

ACL 2024 Awards Announced: One of the Best Papers on Oracle Deciphering by HuaTech, GloVe Time Test Award

Aug 15, 2024 pm 04:37 PM

ACL 2024 Awards Announced: One of the Best Papers on Oracle Deciphering by HuaTech, GloVe Time Test Award

Aug 15, 2024 pm 04:37 PM

At this ACL conference, contributors have gained a lot. The six-day ACL2024 is being held in Bangkok, Thailand. ACL is the top international conference in the field of computational linguistics and natural language processing. It is organized by the International Association for Computational Linguistics and is held annually. ACL has always ranked first in academic influence in the field of NLP, and it is also a CCF-A recommended conference. This year's ACL conference is the 62nd and has received more than 400 cutting-edge works in the field of NLP. Yesterday afternoon, the conference announced the best paper and other awards. This time, there are 7 Best Paper Awards (two unpublished), 1 Best Theme Paper Award, and 35 Outstanding Paper Awards. The conference also awarded 3 Resource Paper Awards (ResourceAward) and Social Impact Award (

AI hardware adds another member! Rather than replacing mobile phones, can NotePin last longer?

Sep 02, 2024 pm 01:40 PM

AI hardware adds another member! Rather than replacing mobile phones, can NotePin last longer?

Sep 02, 2024 pm 01:40 PM

So far, no product in the AI wearable device track has achieved particularly good results. AIPin, which was launched at MWC24 at the beginning of this year, once the evaluation prototype was shipped, the "AI myth" that was hyped at the time of its release began to be shattered, and it experienced large-scale returns in just a few months; RabbitR1, which also sold well at the beginning, was relatively It's better, but it also received negative reviews similar to "Android cases" when it was delivered in large quantities. Now, another company has entered the AI wearable device track. Technology media TheVerge published a blog post yesterday saying that AI startup Plaud has launched a product called NotePin. Unlike AIFriend, which is still in the "painting" stage, NotePin has now started

How to remove fans on Xiaohongshu Graphic tutorial on how to remove fans on Xiaohongshu

Jan 16, 2025 pm 03:39 PM

How to remove fans on Xiaohongshu Graphic tutorial on how to remove fans on Xiaohongshu

Jan 16, 2025 pm 03:39 PM

How to remove fans on Xiaohongshu. Step 1: Open [Xiaohongshu] APP and enter the main page, click the [Fans] button as shown below; Step 2: Enter the fans page and select the fans that need to be removed. ; Step 3: Go to the personal information page and click the three-dot icon in the upper right corner; Step 4: When the page challenges the small window, select the [Block] button; Step 5: An option will appear in the window, click [Block] Black] option can be removed.

Li Feifei's team proposed ReKep to give robots spatial intelligence and integrate GPT-4o

Sep 03, 2024 pm 05:18 PM

Li Feifei's team proposed ReKep to give robots spatial intelligence and integrate GPT-4o

Sep 03, 2024 pm 05:18 PM

Deep integration of vision and robot learning. When two robot hands work together smoothly to fold clothes, pour tea, and pack shoes, coupled with the 1X humanoid robot NEO that has been making headlines recently, you may have a feeling: we seem to be entering the age of robots. In fact, these silky movements are the product of advanced robotic technology + exquisite frame design + multi-modal large models. We know that useful robots often require complex and exquisite interactions with the environment, and the environment can be represented as constraints in the spatial and temporal domains. For example, if you want a robot to pour tea, the robot first needs to grasp the handle of the teapot and keep it upright without spilling the tea, then move it smoothly until the mouth of the pot is aligned with the mouth of the cup, and then tilt the teapot at a certain angle. . this

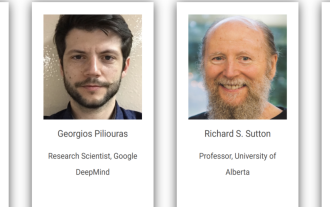

Distributed Artificial Intelligence Conference DAI 2024 Call for Papers: Agent Day, Richard Sutton, the father of reinforcement learning, will attend! Yan Shuicheng, Sergey Levine and DeepMind scientists will give keynote speeches

Aug 22, 2024 pm 08:02 PM

Distributed Artificial Intelligence Conference DAI 2024 Call for Papers: Agent Day, Richard Sutton, the father of reinforcement learning, will attend! Yan Shuicheng, Sergey Levine and DeepMind scientists will give keynote speeches

Aug 22, 2024 pm 08:02 PM

Conference Introduction With the rapid development of science and technology, artificial intelligence has become an important force in promoting social progress. In this era, we are fortunate to witness and participate in the innovation and application of Distributed Artificial Intelligence (DAI). Distributed artificial intelligence is an important branch of the field of artificial intelligence, which has attracted more and more attention in recent years. Agents based on large language models (LLM) have suddenly emerged. By combining the powerful language understanding and generation capabilities of large models, they have shown great potential in natural language interaction, knowledge reasoning, task planning, etc. AIAgent is taking over the big language model and has become a hot topic in the current AI circle. Au