C++ neural network model implementation in financial artificial intelligence

C++ is well suited to implementing neural networks because of its performance and fine-grained memory management. Neural network models, made up of input, hidden, and output layers, can be built with libraries such as TensorFlow (through its C++ API) or implemented on top of the Eigen linear algebra library. Neural networks are trained with the backpropagation algorithm, which involves forward propagation, computing the loss, backpropagation, and weight updates. In the practical case of stock price prediction, you define input and output data, create a neural network, and use a prediction function to predict new stock prices.

Introduction

Neural networks are an important part of financial artificial intelligence and are used to predict market trends, optimize investment portfolios, and detect fraud. This article shows how to implement and train a neural network model in C++ and works through a practical case.

C++ and Neural Network Libraries

C++ is well suited for implementing neural networks due to its high performance and fine-grained control over memory. Several C++ libraries can be used, for example:

- TensorFlow (C++ API)

- PyTorch (LibTorch, its C++ frontend)

- Eigen (a linear algebra library on which networks can be implemented by hand)

Neural Network Model Construction

A basic neural network model consists of an input layer, one or more hidden layers, and an output layer. Each layer consists of neurons that apply weights and biases to their inputs, computing a linear transformation z = Wx + b. The result is then passed through a non-linear activation function such as ReLU or sigmoid.

Training neural networks

Neural networks are trained using the backpropagation algorithm. This process involves:

- Forward propagation: The input is passed through the model layer by layer, and the output is computed.

- Compute the loss: Compare the model output with the expected output using a loss function such as mean squared error.

- Backpropagation: Apply the chain rule from the output layer backward to compute the gradient of the loss with respect to every weight and bias.

- Update weights: Use gradient descent to move each weight and bias a small step (scaled by the learning rate) against its gradient, reducing the loss.

Practical case: Stock price prediction

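Consider using a neural network model to predict stock prices. The example below stores the data in Eigen matrices; the NeuralNetwork class is not part of Eigen and stands for a user-defined network class with AddLayer, Train, and Predict methods. Random matrices serve as placeholders for real market data: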

```cpp
#include <Eigen/Dense>
#include <iostream>

using namespace Eigen;

int main() {
    // Define input data: 100 samples with 10 features each
    // (random placeholders, e.g. standing in for the previous 10 closing prices)
    MatrixXd inputs = MatrixXd::Random(100, 10);

    // Define output data: one target price per sample
    MatrixXd outputs = MatrixXd::Random(100, 1);

    // Create and train the neural network.
    // NeuralNetwork is NOT provided by Eigen: it is a user-defined class assumed
    // to offer AddLayer(units, activation), Train(inputs, outputs) and Predict(input).
    NeuralNetwork network;
    network.AddLayer(10, "relu");
    network.AddLayer(1, "linear");
    network.Train(inputs, outputs);

    // Predict a new stock price
    MatrixXd newInput = MatrixXd::Random(1, 10);
    MatrixXd prediction = network.Predict(newInput);

    std::cout << "Predicted stock price: " << prediction << std::endl;

    return 0;
}
```

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

The foundation, frontier and application of GNN

Apr 11, 2023 pm 11:40 PM

The foundation, frontier and application of GNN

Apr 11, 2023 pm 11:40 PM

Graph neural networks (GNN) have made rapid and incredible progress in recent years. Graph neural network, also known as graph deep learning, graph representation learning (graph representation learning) or geometric deep learning, is the fastest growing research topic in the field of machine learning, especially deep learning. The title of this sharing is "Basics, Frontiers and Applications of GNN", which mainly introduces the general content of the comprehensive book "Basics, Frontiers and Applications of Graph Neural Networks" compiled by scholars Wu Lingfei, Cui Peng, Pei Jian and Zhao Liang. . 1. Introduction to graph neural networks 1. Why study graphs? Graphs are a universal language for describing and modeling complex systems. The graph itself is not complicated, it mainly consists of edges and nodes. We can use nodes to represent any object we want to model, and edges to represent two

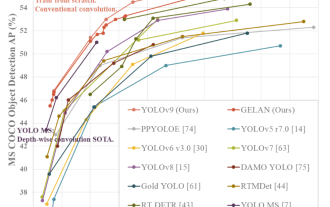

YOLO is immortal! YOLOv9 is released: performance and speed SOTA~

Feb 26, 2024 am 11:31 AM

YOLO is immortal! YOLOv9 is released: performance and speed SOTA~

Feb 26, 2024 am 11:31 AM

Today's deep learning methods focus on designing the most suitable objective function so that the model's prediction results are closest to the actual situation. At the same time, a suitable architecture must be designed to obtain sufficient information for prediction. Existing methods ignore the fact that when the input data undergoes layer-by-layer feature extraction and spatial transformation, a large amount of information will be lost. This article will delve into important issues when transmitting data through deep networks, namely information bottlenecks and reversible functions. Based on this, the concept of programmable gradient information (PGI) is proposed to cope with the various changes required by deep networks to achieve multi-objectives. PGI can provide complete input information for the target task to calculate the objective function, thereby obtaining reliable gradient information to update network weights. In addition, a new lightweight network framework is designed

An overview of the three mainstream chip architectures for autonomous driving in one article

Apr 12, 2023 pm 12:07 PM

An overview of the three mainstream chip architectures for autonomous driving in one article

Apr 12, 2023 pm 12:07 PM

The current mainstream AI chips are mainly divided into three categories: GPU, FPGA, and ASIC. Both GPU and FPGA are relatively mature chip architectures in the early stage and are general-purpose chips. ASIC is a chip customized for specific AI scenarios. The industry has confirmed that CPUs are not suitable for AI computing, but they are also essential in AI applications. GPU Solution Architecture Comparison between GPU and CPU The CPU follows the von Neumann architecture, the core of which is the storage of programs/data and serial sequential execution. Therefore, the CPU architecture requires a large amount of space to place the storage unit (Cache) and the control unit (Control). In contrast, the computing unit (ALU) only occupies a small part, so the CPU is performing large-scale parallel computing.

'The owner of Bilibili UP successfully created the world's first redstone-based neural network, which caused a sensation on social media and was praised by Yann LeCun.'

May 07, 2023 pm 10:58 PM

'The owner of Bilibili UP successfully created the world's first redstone-based neural network, which caused a sensation on social media and was praised by Yann LeCun.'

May 07, 2023 pm 10:58 PM

In Minecraft, redstone is a very important item. It is a unique material in the game. Switches, redstone torches, and redstone blocks can provide electricity-like energy to wires or objects. Redstone circuits can be used to build structures for you to control or activate other machinery. They themselves can be designed to respond to manual activation by players, or they can repeatedly output signals or respond to changes caused by non-players, such as creature movement and items. Falling, plant growth, day and night, and more. Therefore, in my world, redstone can control extremely many types of machinery, ranging from simple machinery such as automatic doors, light switches and strobe power supplies, to huge elevators, automatic farms, small game platforms and even in-game machines. built computer. Recently, B station UP main @

A drone that can withstand strong winds? Caltech uses 12 minutes of flight data to teach drones to fly in the wind

Apr 09, 2023 pm 11:51 PM

A drone that can withstand strong winds? Caltech uses 12 minutes of flight data to teach drones to fly in the wind

Apr 09, 2023 pm 11:51 PM

When the wind is strong enough to blow the umbrella, the drone is stable, just like this: Flying with the wind is a part of flying in the air. From a large level, when the pilot lands the aircraft, the wind speed may be Bringing challenges to them; on a smaller level, gusty winds can also affect drone flight. Currently, drones either fly under controlled conditions, without wind, or are operated by humans using remote controls. Drones are controlled by researchers to fly in formations in the open sky, but these flights are usually conducted under ideal conditions and environments. However, for drones to autonomously perform necessary but routine tasks, such as delivering packages, they must be able to adapt to wind conditions in real time. To make drones more maneuverable when flying in the wind, a team of engineers from Caltech

Multi-path, multi-domain, all-inclusive! Google AI releases multi-domain learning general model MDL

May 28, 2023 pm 02:12 PM

Multi-path, multi-domain, all-inclusive! Google AI releases multi-domain learning general model MDL

May 28, 2023 pm 02:12 PM

Deep learning models for vision tasks (such as image classification) are usually trained end-to-end with data from a single visual domain (such as natural images or computer-generated images). Generally, an application that completes vision tasks for multiple domains needs to build multiple models for each separate domain and train them independently. Data is not shared between different domains. During inference, each model will handle a specific domain. input data. Even if they are oriented to different fields, some features of the early layers between these models are similar, so joint training of these models is more efficient. This reduces latency and power consumption, and reduces the memory cost of storing each model parameter. This approach is called multi-domain learning (MDL). In addition, MDL models can also outperform single

1.3ms takes 1.3ms! Tsinghua's latest open source mobile neural network architecture RepViT

Mar 11, 2024 pm 12:07 PM

1.3ms takes 1.3ms! Tsinghua's latest open source mobile neural network architecture RepViT

Mar 11, 2024 pm 12:07 PM

Paper address: https://arxiv.org/abs/2307.09283 Code address: https://github.com/THU-MIG/RepViTRepViT performs well in the mobile ViT architecture and shows significant advantages. Next, we explore the contributions of this study. It is mentioned in the article that lightweight ViTs generally perform better than lightweight CNNs on visual tasks, mainly due to their multi-head self-attention module (MSHA) that allows the model to learn global representations. However, the architectural differences between lightweight ViTs and lightweight CNNs have not been fully studied. In this study, the authors integrated lightweight ViTs into the effective

The practice of contrastive learning algorithms in Zhuanzhuan

Apr 11, 2023 pm 09:25 PM

The practice of contrastive learning algorithms in Zhuanzhuan

Apr 11, 2023 pm 09:25 PM

1 What is contrastive learning 1.1 Definition of contrastive learning 1.2 Principles of contrastive learning 1.3 Classic contrastive learning algorithm series 2 Application of contrastive learning 3 The practice of contrastive learning in Zhuanzhuan 3.1 The practice of CL in recommended recall 3.2 CL’s future planning in Zhuanzhuan 1 What is Contrastive Learning 1.1 Definition of Contrastive Learning Contrastive Learning (CL) is a popular research direction in the field of AI in recent years, attracting the attention of many research scholars. Its self-supervised learning method was even announced by Bengio at ICLR 2020. He and LeCun and other big guys named it as the future of AI, and then successively landed on NIPS, ACL,