Python crawler proxy IP pool implementation method

At my company I work on distributed deep-web crawlers, and we built a stable proxy pool service that supplies working proxies to thousands of crawlers. It ensures that each crawler gets a proxy IP that is valid for its target website, so the crawler runs quickly and stably. Of course, work done for the company can't be open-sourced. Still, I got restless in my spare time, so I decided to build a simple proxy pool service from free resources.

1. Questions

Where does the proxy IP come from?

When I was first teaching myself crawling, I had no proxy IPs, so I scraped free-proxy websites such as Xici Proxy and Kuaidaili, and some of those proxies were still usable. Of course, if you have access to a better proxy API, you can plug it in yourself. Collecting free proxies is simple; it comes down to: fetch the page -> extract with regex/XPath -> save.
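The fetch -> extract -> save pipeline can be sketched with the standard library alone. This is a minimal illustration under assumptions: the regex presumes the listing page puts the IP and the port in adjacent `<td>` cells (real free-proxy sites differ, so each source needs its own pattern or XPath), and `fetch_page`, `extract_proxies`, `collect` and `PROXY_PATTERN` are hypothetical names, not code from the project.

```python
import re
import urllib.request

# Assumption: IP and port sit in two adjacent <td> cells of a table row.
PROXY_PATTERN = re.compile(
    r"<td>\s*(\d{1,3}(?:\.\d{1,3}){3})\s*</td>\s*<td>\s*(\d{1,5})\s*</td>"
)


def fetch_page(url, timeout=10):
    """Step 1: download the raw HTML of a free-proxy listing page."""
    request = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def extract_proxies(html):
    """Step 2: pull "ip:port" strings out of the HTML with a regex."""
    return [f"{ip}:{port}" for ip, port in PROXY_PATTERN.findall(html)]


def collect(url, store):
    """Step 3: save every extracted proxy into a set-like store."""
    for proxy in extract_proxies(fetch_page(url)):
        store.add(proxy)
    return store
```

Keeping extraction separate from downloading makes it easy to test each source's pattern against a saved copy of its page.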

How do we ensure proxy quality?

Most free proxy IPs are certainly unusable; otherwise, why would anyone sell paid ones? (In fact, the paid IPs of many providers are not stable either, and many of them don't work.) So the collected proxy IPs can't be used directly. Instead, write a checker that repeatedly uses each proxy to access a stable website and sees whether the request succeeds. Because checking proxies is slow, this process can be made multi-threaded or asynchronous.
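A minimal sketch of such a checker, using a thread pool because the work is I/O-bound. Assumptions: `http://www.example.com/` is only a placeholder for "a stable website", a proxy counts as usable if the request returns HTTP 200 within the timeout, and `is_usable`/`filter_usable` are hypothetical names. The `check` parameter lets you swap in a different test, for example one aimed at your crawler's actual target site.

```python
import urllib.request
from concurrent.futures import ThreadPoolExecutor

# Placeholder test target; any stable site you are allowed to hit will do.
TEST_URL = "http://www.example.com/"


def is_usable(proxy, test_url=TEST_URL, timeout=5):
    """Return True if a request routed through `proxy` ("ip:port") succeeds."""
    handler = urllib.request.ProxyHandler(
        {"http": f"http://{proxy}", "https": f"http://{proxy}"}
    )
    opener = urllib.request.build_opener(handler)
    try:
        with opener.open(test_url, timeout=timeout) as response:
            return response.status == 200
    except Exception:
        # Timeouts, refused connections and HTTP errors all mean "not usable".
        return False


def filter_usable(proxies, check=is_usable, workers=20):
    """Check proxies concurrently and keep the ones that pass, in input order."""
    proxies = list(proxies)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(check, proxies))
    return [proxy for proxy, ok in zip(proxies, results) if ok]
```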

How should the collected proxies be stored?

Here I have to recommend SSDB, a high-performance NoSQL database that supports multiple data structures and can serve as a replacement for Redis. It supports queues, hashes, sets, and key-value pairs, and can hold terabyte-scale data, which makes it a very good intermediate store for distributed crawlers.

How can crawlers use these proxies more easily?

The answer is to turn the pool into a service. Python has plenty of web frameworks; just pick one and write an API for crawlers to call. This has many benefits. For example, when a crawler finds that a proxy doesn't work, it can actively delete that proxy IP through the API, and when it finds that the pool is running low on IPs, it can actively refresh the pool. This is more reliable than relying on the checker alone.

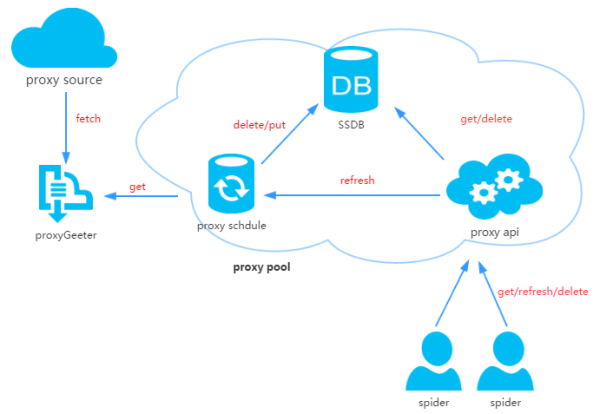

2. Proxy pool design

The proxy pool consists of four parts:

ProxyGetter:

The proxy acquisition interface. There are currently 5 free proxy sources; each time it is called, it scrapes the latest proxies from these 5 websites and puts them into the DB. You can add further proxy acquisition interfaces yourself;

DB:

Stores the proxy IPs. Currently only SSDB is supported. For why I chose SSDB, you can refer to this article; personally, I think SSDB is a good alternative to Redis. If you haven't used SSDB, it is very easy to install; you can refer to the instructions here;
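The DB code is described as using the factory pattern so that other databases can be added later. A rough sketch of that idea, assuming a four-method interface (`put`/`get`/`delete`/`get_all`, chosen here to mirror the API operations) and using a hypothetical in-memory backend in place of a real SSDB client; `BaseDB`, `MemoryDB`, `BACKENDS` and `get_db` are illustrative names only.

```python
class BaseDB:
    """Operations every storage backend must provide."""

    def put(self, proxy):
        raise NotImplementedError

    def get(self):
        raise NotImplementedError

    def delete(self, proxy):
        raise NotImplementedError

    def get_all(self):
        raise NotImplementedError


class MemoryDB(BaseDB):
    """Hypothetical in-memory backend standing in for an SSDB client."""

    def __init__(self, **options):
        self.options = options  # e.g. host/port, unused by this backend
        self._items = {}  # dict keeps insertion order and collapses duplicates

    def put(self, proxy):
        self._items[proxy] = True

    def get(self):
        # Return the oldest stored proxy without removing it, or None if empty.
        return next(iter(self._items), None)

    def delete(self, proxy):
        self._items.pop(proxy, None)

    def get_all(self):
        return list(self._items)


# Maps the db type named in the config file to a backend class.
BACKENDS = {"memory": MemoryDB}


def get_db(db_type, **options):
    """Factory: callers ask for a type name, never a concrete class."""
    try:
        backend = BACKENDS[db_type.lower()]
    except KeyError:
        raise ValueError(f"unsupported db type: {db_type}") from None
    return backend(**options)
```

Supporting SSDB or Redis would then mean writing another `BaseDB` subclass around that database's client and registering it in `BACKENDS`; code that calls `get_db` would not change.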

Schedule:

A scheduled task periodically checks the availability of the proxies in the DB and deletes the unusable ones. It also proactively fetches the latest proxies through ProxyGetter and puts them into the DB;
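One pass of that scheduled task might look like the sketch below. It assumes the DB can be treated as a set of `"ip:port"` strings and that each proxy source is a callable returning such strings; `refresh_cycle` and `run_forever` are hypothetical names, and this single loop is a simplification of a scheduler that runs its jobs in separate processes.

```python
import time


def refresh_cycle(store, getters, check):
    """One scheduler pass: drop dead proxies, then fetch and verify new ones.

    store   -- set of "ip:port" strings (stand-in for the DB)
    getters -- callables that each return an iterable of "ip:port" strings
    check   -- callable(proxy) -> bool
    """
    # 1. Re-check stored proxies and delete any that stopped working.
    for proxy in list(store):
        if not check(proxy):
            store.discard(proxy)
    # 2. Pull fresh proxies from every source and keep the usable new ones.
    for getter in getters:
        try:
            candidates = list(getter())
        except Exception:
            continue  # one broken source should not stop the others
        for proxy in candidates:
            if proxy not in store and check(proxy):
                store.add(proxy)
    return store


def run_forever(store, getters, check, interval=20 * 60):
    """Repeat the cycle every `interval` seconds (20 minutes by default)."""
    while True:
        refresh_cycle(store, getters, check)
        time.sleep(interval)
```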

ProxyApi:

The external interface of the proxy pool. Since the pool's functionality is fairly simple for now, I spent two hours reading about Flask, liked it, and decided to use Flask. It provides get/delete/refresh and other endpoints that crawlers can call directly.
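ProxyApi is built with Flask. To keep this sketch dependency-free, the same routes are written as a plain WSGI app using only the standard library, swapped in for Flask. Assumptions: `manager` is any object with `get()`, `delete(proxy)`, `refresh()` and `get_all()` methods (standing in for the project's ProxyManager), the delete route takes a `proxy` query parameter, and `make_app` is a hypothetical name.

```python
import json
from urllib.parse import parse_qs


def make_app(manager):
    """Build a WSGI app exposing get/delete/refresh/get_all over HTTP."""

    def app(environ, start_response):
        # Treat "/get/" and "/get" as the same route.
        path = environ.get("PATH_INFO", "/").rstrip("/") or "/"
        query = parse_qs(environ.get("QUERY_STRING", ""))
        status = "200 OK"
        if path == "/":
            body = json.dumps(["/get/", "/delete/?proxy=ip:port", "/refresh/", "/get_all/"])
        elif path == "/get":
            body = manager.get() or ""  # empty body means the pool is empty
        elif path == "/delete":
            proxy = query.get("proxy", [""])[0]
            if proxy:
                manager.delete(proxy)
                body = "success"
            else:
                status, body = "400 Bad Request", "missing proxy parameter"
        elif path == "/refresh":
            manager.refresh()
            body = "success"
        elif path == "/get_all":
            body = json.dumps(manager.get_all())
        else:
            status, body = "404 Not Found", "not found"
        payload = body.encode("utf-8")
        start_response(status, [
            ("Content-Type", "text/plain; charset=utf-8"),
            ("Content-Length", str(len(payload))),
        ])
        return [payload]

    return app
```

To serve it locally: `from wsgiref.simple_server import make_server; make_server("127.0.0.1", 5000, make_app(manager)).serve_forever()`. With Flask, each branch would become a function decorated with `@app.route`.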


3. Code module

Python's high-level data structures, dynamic typing, and dynamic binding make it well suited to rapid application development, and also to serving as a glue language that connects existing software components. Building this proxy IP pool in Python is likewise simple. The code is divided into 6 modules:

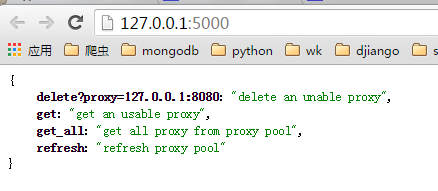

Api: API-related code. The API is currently implemented with Flask, and the code is very simple: client requests go to Flask, which calls the implementations in ProxyManager, including get/delete/refresh/get_all;

DB: database-related code. The database is currently SSDB. The code uses the factory pattern so that other types of databases can be added later;

Manager: the classes that implement get/delete/refresh/get_all and the other interfaces. Currently the proxy pool is only responsible for managing proxies; in the future it may gain more features, such as binding proxies to crawlers or to accounts;

ProxyGetter: code for fetching proxies. It currently scrapes free proxies from five websites: Kuaidaili, 66ip, Youdaili, Xici Proxy, and guobanjia. In my tests, these five sites together offer only sixty or seventy usable proxies, updated daily. Of course, you can also extend it with your own proxy sources;

Schedule: scheduled-task code. For now it only periodically refreshes the proxies and verifies which ones are usable, using multiple processes;

Util: shared module methods and functions, including GetConfig (the class that reads the configuration file config.ini), ConfigParse (a subclass that overrides ConfigParser to make it case-sensitive), Singleton (implements a singleton), LazyProperty (implements lazily computed class attributes), and so on;

Other files: the configuration file Config.ini, which holds the database configuration and the proxy acquisition interface configuration. To add a new way of fetching proxies, add a method to GetFreeProxy and register it in Config.ini;
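The Util helpers and the register-in-config mechanism might fit together roughly as follows. This is a sketch, not the project's code: the `[ProxyGetter]` section name, the `freeProxyFirst`/`freeProxySecond` method names and their placeholder return values are assumptions, and the config is read from a string instead of `Config.ini` so the example is self-contained. The case-sensitive `ConfigParse` matters here because the stock `ConfigParser` lowercases option names, which would then no longer match camelCase method names.

```python
import configparser


class Singleton(type):
    """Metaclass: each class using it is instantiated only once."""

    _instances = {}

    def __call__(cls, *args, **kwargs):
        if cls not in cls._instances:
            cls._instances[cls] = super().__call__(*args, **kwargs)
        return cls._instances[cls]


class LazyProperty:
    """Computes an attribute on first access, then caches it on the instance."""

    def __init__(self, func):
        self.func = func

    def __get__(self, instance, owner):
        if instance is None:
            return self
        value = self.func(instance)
        # Non-data descriptor: the cached instance attribute now shadows it.
        setattr(instance, self.func.__name__, value)
        return value


class ConfigParse(configparser.ConfigParser):
    """ConfigParser that keeps option names case-sensitive."""

    def optionxform(self, optionstr):
        return optionstr


class GetFreeProxy:
    """Hypothetical proxy sources; real methods would scrape a website."""

    @staticmethod
    def freeProxyFirst():
        yield "1.1.1.1:80"  # placeholder value

    @staticmethod
    def freeProxySecond():
        yield "2.2.2.2:8080"  # placeholder value


class GetConfig(metaclass=Singleton):
    """Parses the configuration once and exposes the enabled proxy sources."""

    def __init__(self, text):
        self.parser = ConfigParse()
        self.parser.read_string(text)

    @LazyProperty
    def proxy_getters(self):
        # Keep only registered names that match a method on GetFreeProxy.
        names = self.parser.options("ProxyGetter")
        return [getattr(GetFreeProxy, n) for n in names
                if callable(getattr(GetFreeProxy, n, None))]
```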

4. Installation

Download code:

Installation dependencies:

Startup:

5. Use

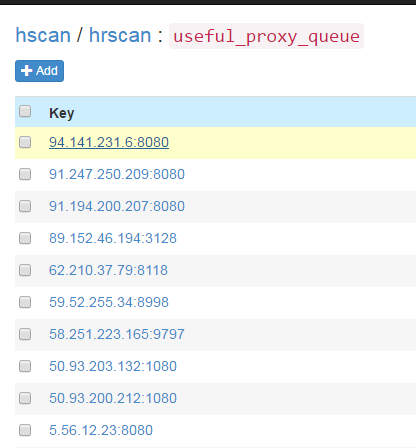

After the scheduled task starts, it fetches all proxies into the database through the proxy acquisition methods and verifies them. After that, it repeats this every 20 minutes by default. About a minute or two after the scheduled task starts, you can see the usable proxies refreshed into SSDB:

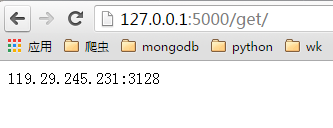

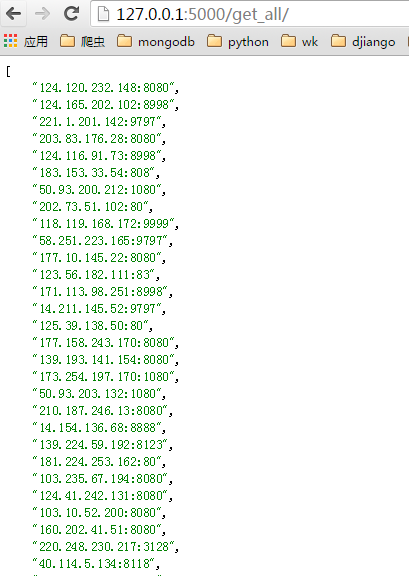

After starting ProxyApi.py, you can get proxies through the API in a browser. Here are screenshots from the browser:

index page:

get page:

get_all page:

Using it in crawlers: if you want to use the pool in crawler code, you can wrap this API in a function and call it directly.
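A sketch of such wrapper functions using only the standard library. It assumes ProxyApi listens on `http://127.0.0.1:5000` (Flask's default port) and exposes `/get/` and `/delete/?proxy=ip:port`; those paths and the helper names are assumptions rather than the project's documented interface. Proxies that fail are reported back to the pool, which is the "crawler deletes bad proxies through the API" workflow described earlier.

```python
import urllib.parse
import urllib.request

# Assumption: ProxyApi is reachable here (5000 is Flask's default port).
API_BASE = "http://127.0.0.1:5000"


def get_proxy(base=API_BASE, timeout=5):
    """Ask the pool for one proxy ("ip:port"); "" means the pool is empty."""
    with urllib.request.urlopen(f"{base}/get/", timeout=timeout) as resp:
        return resp.read().decode("utf-8").strip()


def delete_proxy(proxy, base=API_BASE, timeout=5):
    """Report a dead proxy so the pool drops it."""
    query = urllib.parse.urlencode({"proxy": proxy})
    with urllib.request.urlopen(f"{base}/delete/?{query}", timeout=timeout) as resp:
        resp.read()


def open_via_proxy(url, proxy, timeout=10):
    """Fetch `url` through an HTTP proxy given as "ip:port"."""
    handler = urllib.request.ProxyHandler(
        {"http": f"http://{proxy}", "https": f"http://{proxy}"}
    )
    with urllib.request.build_opener(handler).open(url, timeout=timeout) as resp:
        return resp.read()


def fetch_with_pool(url, retries=3, timeout=10,
                    get=get_proxy, delete=delete_proxy, open_via=open_via_proxy):
    """Try up to `retries` pool proxies, reporting each one that fails."""
    for _ in range(retries):
        proxy = get()
        if not proxy:
            return None  # the pool is empty
        try:
            return open_via(url, proxy, timeout)
        except OSError:
            # URLError, HTTPError and socket timeouts are all OSError subclasses.
            delete(proxy)
    return None
```

Note that any `OSError`, including an HTTP error status returned by the target site itself, causes the proxy to be reported as dead; a production crawler would probably distinguish those cases.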

6. Finally

I wrote this in a hurry, so the features and code are fairly simple; I will improve them when I have time. If you like it, please give it a star on GitHub. Thanks!
