AI learns to hide its thinking and reason in secret! Solving complex tasks without relying on human experience makes it even more of a black box

When AI solves math problems, is its real thinking actually secret "mental arithmetic"?

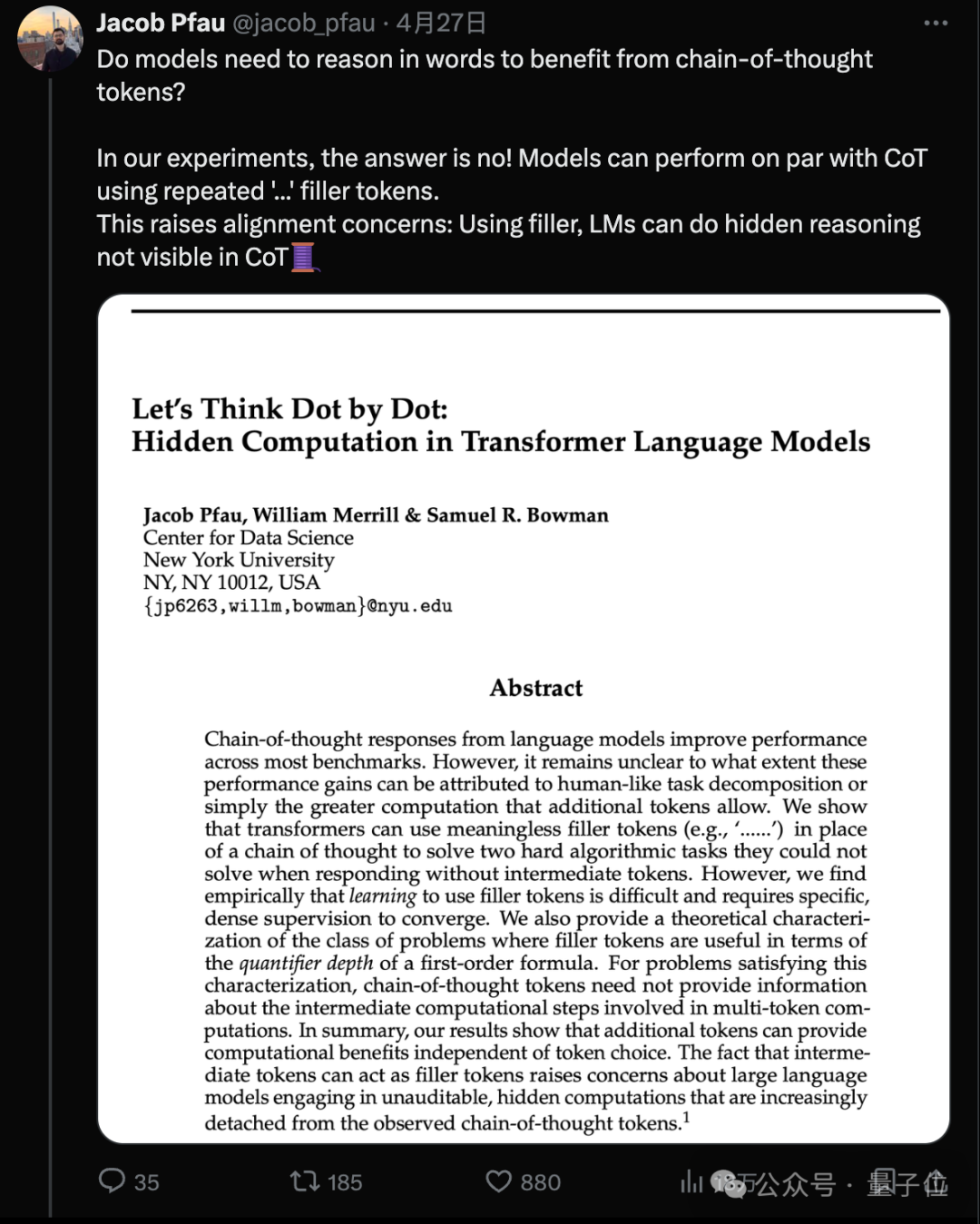

New research from a New York University team found that even when an AI is not allowed to write out its steps, and those steps are replaced with meaningless "..." tokens, its performance on some complex tasks still improves substantially!

First author Jacob Pfau said: as long as you spend compute generating additional tokens, you gain an advantage. It doesn't matter which tokens you choose.

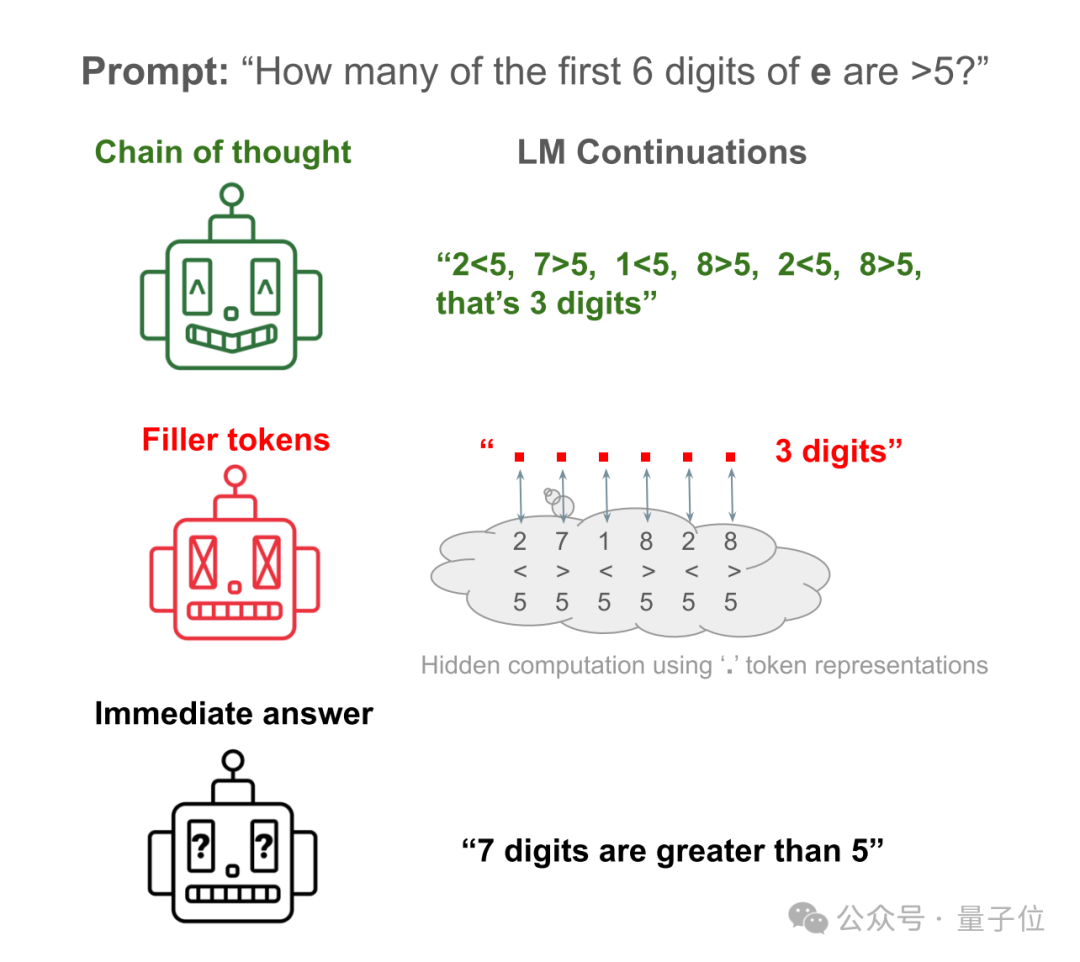

For example, ask Llama 34M a simple question: how many of the first 6 digits of the natural constant e are greater than 5?

When the AI answers directly, the result is nonsense: there are only 6 digits to count, yet it answers 7.

Let the AI write out steps that check each digit, and it gets the correct answer.

Let the AI hide the steps and replace them with a string of "...", and it still gets the correct answer!
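
The digit check in this example is easy to reproduce by hand. The snippet below is only an illustrative sketch: it assumes the leading 2 counts as the first digit of e = 2.71828..., and the function name is made up for this example.

```python
def count_digits_greater_than(digits: str, threshold: int) -> int:
    """Count how many digits in the string are strictly greater than threshold."""
    return sum(1 for ch in digits if int(ch) > threshold)


# First 6 digits of e = 2.71828..., counting the leading 2 (an assumption).
E_FIRST_SIX = "271828"

# Written-out verification, one digit at a time, like the step-by-step answer.
for ch in E_FIRST_SIX:
    verdict = "greater than 5" if int(ch) > 5 else "not greater than 5"
    print(f"{ch} is {verdict}")

answer = count_digits_greater_than(E_FIRST_SIX, 5)
print(answer)  # 3
```

If the six digits are instead taken after the decimal point (718281), the count is still 3, so the reading of "first 6 digits" does not change the answer.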

The paper sparked heated discussion as soon as it was released, and has been described as "the most metaphysical AI paper I have ever seen."

So when young people pepper their speech with meaningless fillers such as "um..." and "like...", could that strengthen their reasoning skills too?

From thinking "step by step" to thinking "dot by dot"

In fact, the New York University team's research starts from Chain-of-Thought (CoT).

That is, the famous prompt "Let's think step by step".

Earlier work found that CoT reasoning can significantly improve the performance of large models on a wide range of benchmarks.

What remained unclear is whether this improvement comes from imitating humans by breaking a task into easier-to-solve steps, or whether it is merely a by-product of the extra computation.

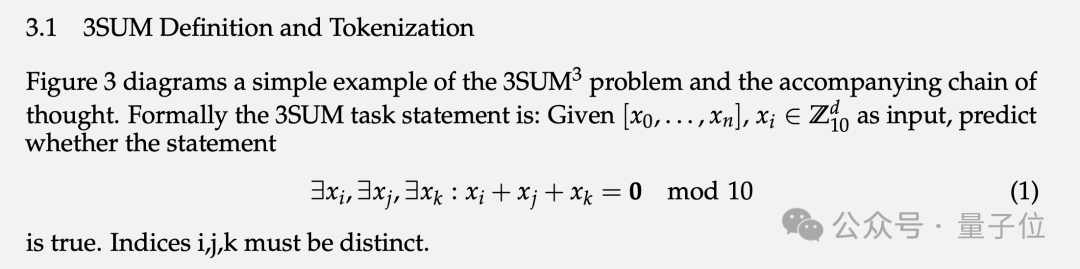

To answer this question, the team designed two special tasks with corresponding synthetic datasets: 3SUM and 2SUM-Transform.

3SUM requires finding three numbers in a given sequence whose sum satisfies a certain condition, such as being divisible by 10 (a remainder of 0).
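
As a rough illustration of the task as described here, a brute-force check over all triples looks like the sketch below. The function name and the plain-integer input format are assumptions made for this example; the exact encoding used in the paper's dataset is not given in this article.

```python
from itertools import combinations


def has_three_sum(numbers: list[int], modulus: int = 10) -> bool:
    """Return True if three entries at distinct positions sum to 0 modulo modulus."""
    # Checking every triple costs O(n^3) time for a sequence of length n.
    return any(
        (a + b + c) % modulus == 0
        for a, b, c in combinations(numbers, 3)
    )


print(has_three_sum([1, 3, 6, 8]))  # True, because 1 + 3 + 6 = 10
print(has_three_sum([1, 1, 1, 1]))  # False, every triple sums to 3
```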

The computational complexity of this task is O(n³), whereas in a standard Transformer only quadratic dependencies can arise between the inputs of one layer and the activations of the next.

That is to say, when n is large enough and the sequence is long enough, the 3SUM task exceeds the expressive power of the Transformer.

In the training dataset, a run of "..." with the same length as the human reasoning steps is inserted between the question and the answer. In other words, during training the AI never sees how humans break the problem down.
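
The data layout described above can be sketched as follows. This is a hypothetical format for illustration only: it treats each reasoning step as a single whitespace-separated token and uses "." as the filler token, neither of which is confirmed by this article.

```python
FILLER = "."  # assumed filler token


def make_training_examples(
    question: str, reasoning_steps: list[str], answer: str
) -> dict[str, str]:
    """Build three variants of one example: direct answer, chain of thought, filler."""
    # One filler token per reasoning step, so both variants have the same length.
    filler = [FILLER] * len(reasoning_steps)
    return {
        "direct": " ".join([question, answer]),
        "chain_of_thought": " ".join([question, *reasoning_steps, answer]),
        "filler": " ".join([question, *filler, answer]),
    }


examples = make_training_examples("Q", ["s1", "s2", "s3"], "A")
print(examples["filler"])  # Q . . . A
```

The filler variant keeps the token budget of the chain-of-thought variant while carrying no information about how the problem was decomposed.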

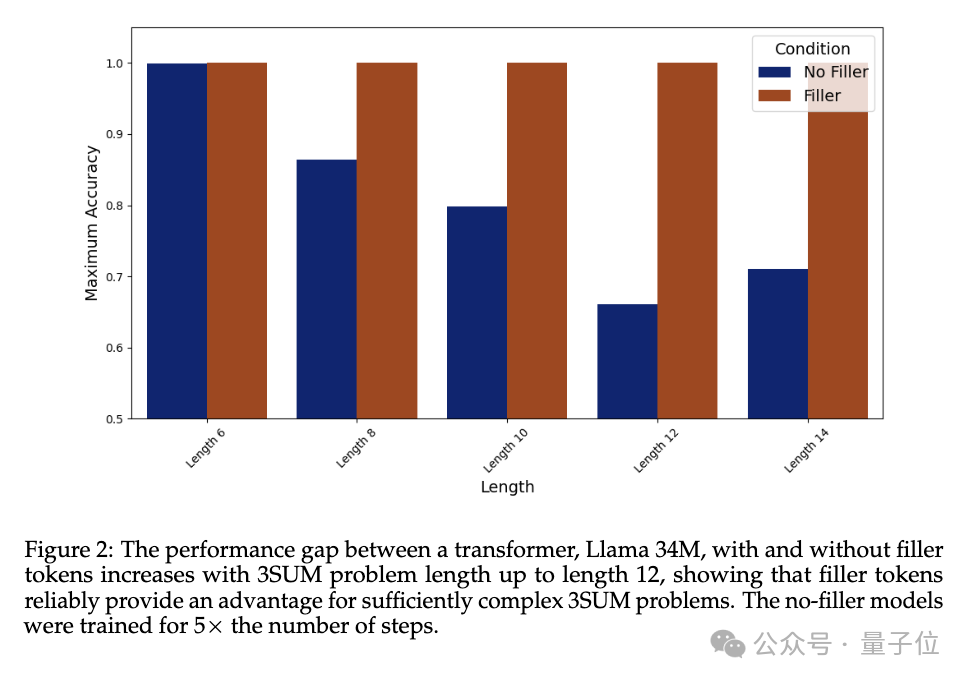

In the experiment, the accuracy of Llama 34M without the filler token "..." drops as the sequence length increases, while with filler tokens it stays at 100% up to a sequence length of 14.

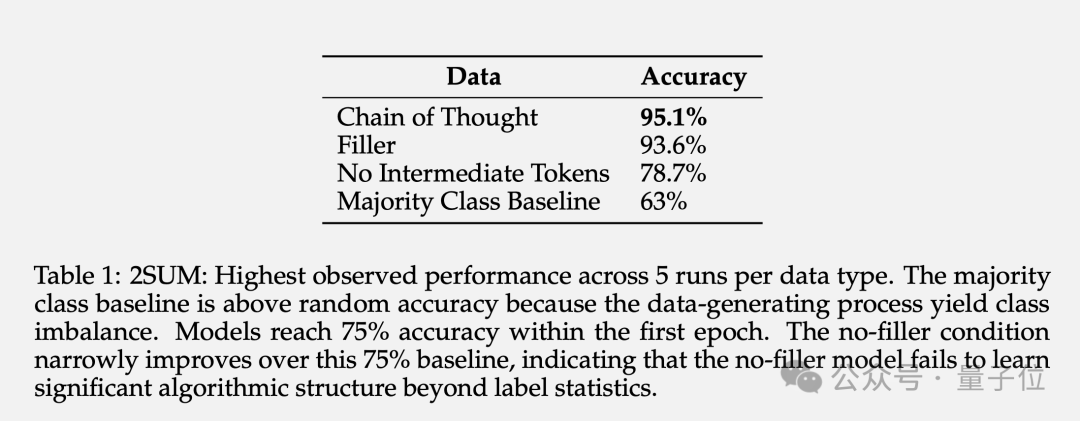

2SUM-Transform only requires determining whether the sum of two numbers meets the requirement, which is within the expressive power of the Transformer.

However, a step that "randomly replaces each number of the input sequence" is added at the end of the question, which prevents the model from computing directly on the input tokens.
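
A minimal sketch of the idea, under loud assumptions: the article does not specify what the replacement step looks like, so the `shift_by` transform below is purely hypothetical, as are the function names and the modulo-10 condition borrowed from the 3SUM description.

```python
from itertools import combinations
from typing import Callable


def shift_by(k: int, modulus: int = 10) -> Callable[[int], int]:
    """Hypothetical transform: replace x with (x + k) mod modulus."""
    return lambda x: (x + k) % modulus


def two_sum_transform(
    numbers: list[int], transform: Callable[[int], int], modulus: int = 10
) -> bool:
    """Apply the transform to every number, then look for a pair summing to 0 mod modulus."""
    transformed = [transform(x) for x in numbers]
    # Checking every pair costs O(n^2) time.
    return any((a + b) % modulus == 0 for a, b in combinations(transformed, 2))


print(two_sum_transform([1, 2, 3], shift_by(0)))  # False: pair sums are 3, 4, 5
print(two_sum_transform([1, 2, 3], shift_by(3)))  # True: 4 + 6 = 10
```

Because the transform is only known at the end of the input, the pairwise check cannot begin until the whole question has been read, which is the property the task relies on.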

The results show that using filler tokens raises accuracy from 78.7% to 93.6%.

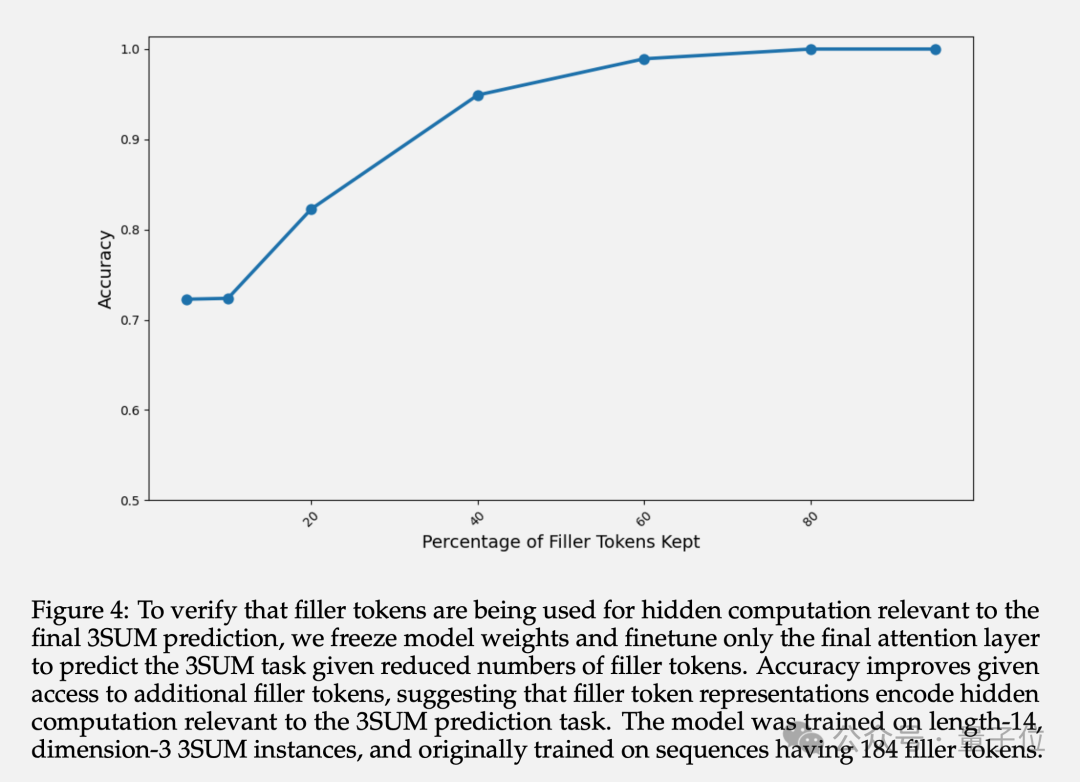

Beyond final accuracy, the authors also studied the hidden-layer representations of the filler tokens. Experiments show that when the parameters of the earlier layers are frozen and only the last attention layer is fine-tuned, prediction accuracy rises as the number of available filler tokens increases.

This confirms that the hidden-layer representations of the filler tokens do contain implicit computation relevant to the downstream task.

Has AI learned to hide its thoughts?

Some netizens wondered: is this paper saying that the "chain-of-thought" method is actually fake? Has all the prompt engineering I have studied for so long been in vain?

The team stated that, in theory, the benefit of filler tokens is limited to problems within the TC0 complexity class.

TC0 is the class of computational problems that can be solved by fixed-depth circuits: the gates in each layer can be evaluated in parallel, so a few layers of logic gates (such as AND, OR, and NOT gates, plus threshold gates) suffice for a quick solution. It is also the upper limit of the computational complexity that a Transformer can handle in a single forward pass.
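
To make the circuit picture concrete, here is a small sketch of a fixed-depth circuit built from threshold gates, the gate type that characterizes TC0. The function names are illustrative and are not taken from the paper.

```python
def threshold_gate(inputs: list[int], k: int) -> int:
    """Output 1 if at least k of the 0/1 inputs are 1, else 0."""
    return int(sum(inputs) >= k)


def and_gate(inputs: list[int]) -> int:
    return threshold_gate(inputs, len(inputs))  # all inputs must be 1


def or_gate(inputs: list[int]) -> int:
    return threshold_gate(inputs, 1)  # at least one input must be 1


def majority_gate(inputs: list[int]) -> int:
    return threshold_gate(inputs, len(inputs) // 2 + 1)  # strict majority


def exactly_k(inputs: list[int], k: int) -> int:
    """Constant-depth circuit: two threshold gates in parallel, then NOT and AND."""
    at_least_k = threshold_gate(inputs, k)
    at_least_k_plus_1 = threshold_gate(inputs, k + 1)
    return and_gate([at_least_k, 1 - at_least_k_plus_1])


print(majority_gate([1, 0, 1]))        # 1
print(exactly_k([1, 1, 0, 1], 3))      # 1
```

The depth of `exactly_k` stays the same no matter how many inputs there are; only the width of each gate grows, which is the sense in which such circuits are "fixed-depth".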

A sufficiently long chain of thought, by contrast, can extend the expressive power of the Transformer beyond TC0.

It is also not easy for a large model to learn to use filler tokens; specific, dense supervision is needed for training to converge.

In other words, existing large models are unlikely to benefit directly from the filler-token method.

But this is not an inherent limitation of current architectures; given sufficient demonstrations in the training data, they should be able to obtain similar benefits from filler tokens.

This research also raises a worrying issue: large models have the ability to perform secret calculations that cannot be monitored, posing new challenges to the explainability and controllability of AI.

In other words, AI can reason on its own in a form invisible to people without relying on human experience.

This is both exciting and terrifying.

Finally, some netizens jokingly suggested having Llama 3 first generate 1 quadrillion dots, so that the weights of AGI can be obtained.

Paper: https://www.php.cn/link/36157dc9be261fec78aeee1a94158c26

Reference links:

[1] https://www.php.cn/link/e350113047e82ceecb455c33c21ef32a

[2] https://www.php.cn/link/872de53a900f3250ae5649ea19e5c381
