How are cameras and lidar calibrated? An overview of all mainstream calibration tools in the industry

Calibrating cameras and lidar is a fundamental step in many tasks, and calibration accuracy directly sets the upper limit on how well downstream fusion can perform. Many autonomous driving and robotics companies invest substantial manpower and resources in continuously improving calibration accuracy. This article introduces some common camera-lidar calibration toolboxes; they are worth bookmarking.

1. Libcbdetect

Multiple checkerboard detection in one shot: https://www.cvlibs.net/software/libcbdetect/

The algorithm is written in MATLAB. It automatically extracts corner points and, with sub-pixel accuracy, combines them into rectangular checkerboard-like patterns. It can process different types of images (such as those from pinhole, fisheye and panoramic cameras).

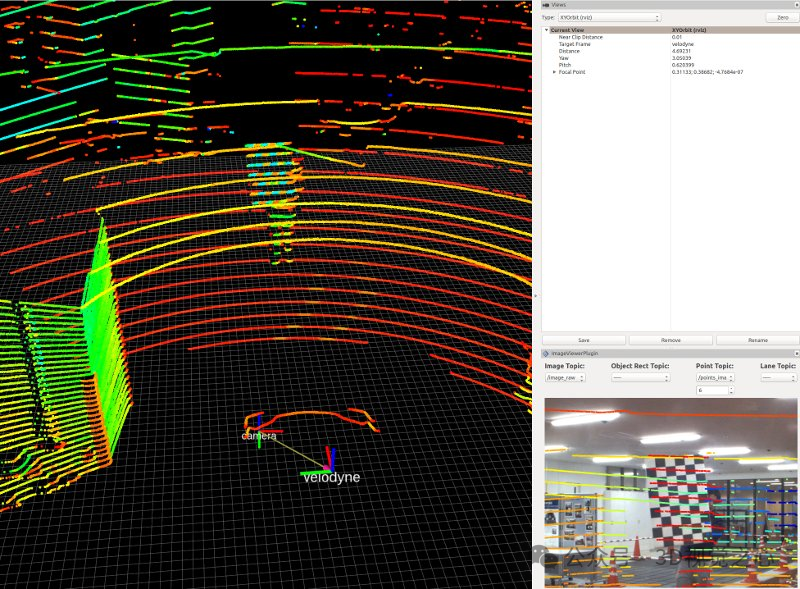

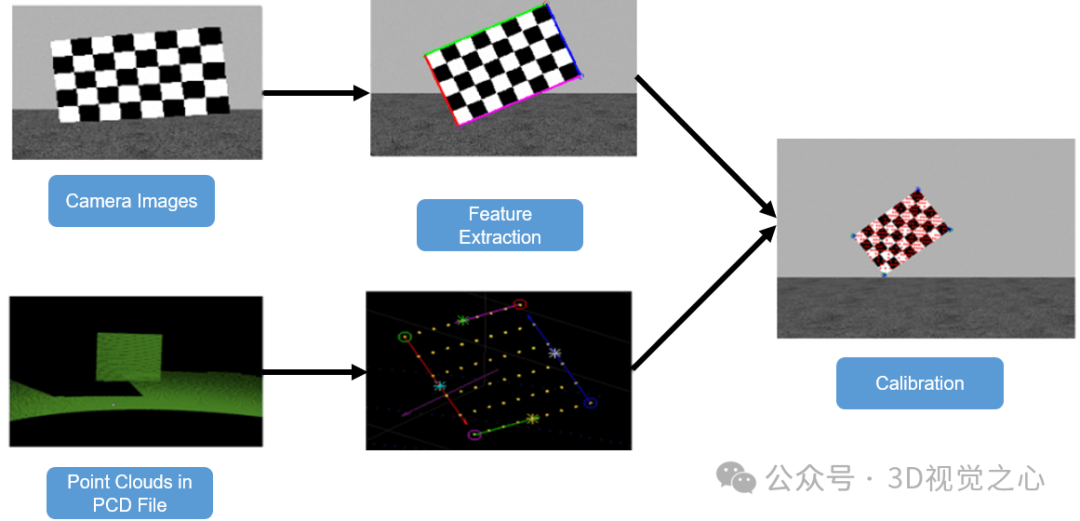

2. Autoware Calibration Package

The lidar-camera calibration toolkit from the Autoware framework.

Link: https://github.com/autowarefoundation/autoware_ai_utilities/tree/master/autoware_camera_lidar_calibrator

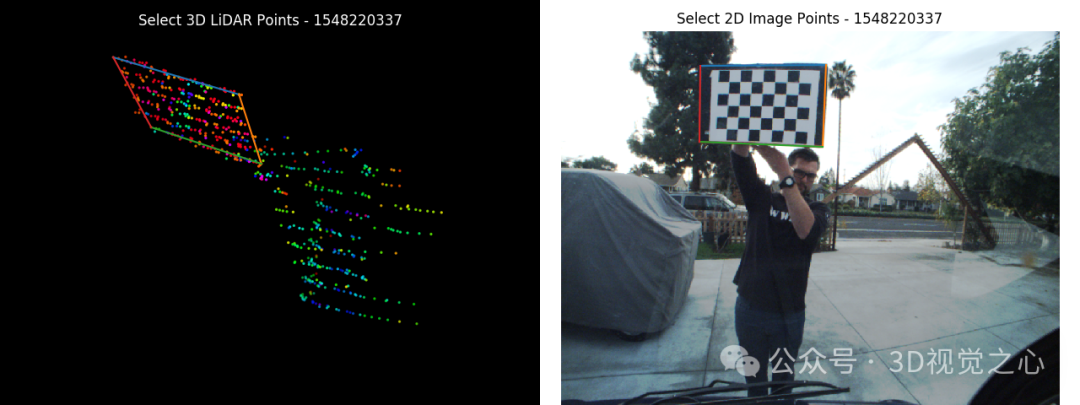

3. Target-based calibration using 3D-3D matching

A ROS package for lidar-camera calibration based on 3D-3D point correspondences, from the paper "LiDAR-Camera Calibration using 3D-3D Point correspondences". Link: https://github.com/ankitdhall/lidar_camera_calibration

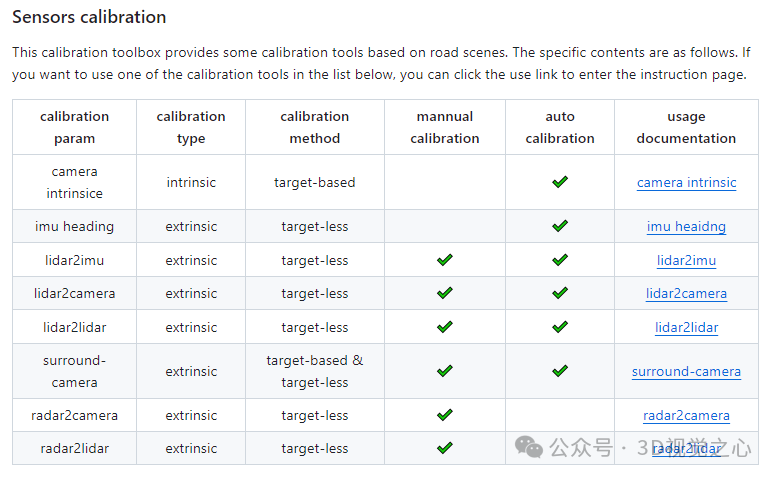

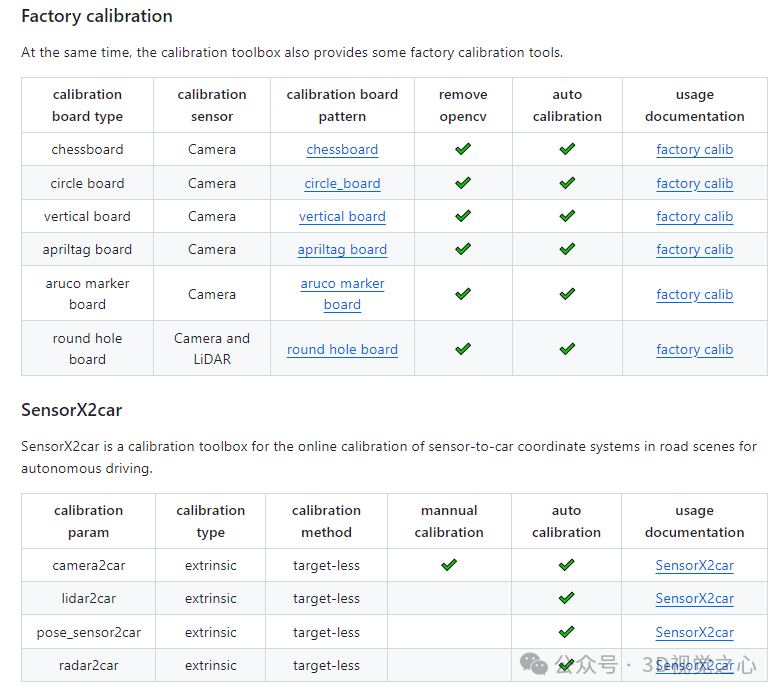
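To make the 3D-3D correspondence idea concrete, here is a minimal sketch of recovering a rigid transform from matched 3D points. It assumes the same points have already been located in both the lidar frame and the camera frame. The function names are hypothetical, and the solver used here (Horn's closed-form quaternion method with a simple power iteration) is a dependency-free stand-in, not code taken from the package above.

```python
import math

def quat_to_rot(q):
    """Convert a unit quaternion (w, x, y, z) to a 3x3 rotation matrix."""
    w, x, y, z = q
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]

def apply_transform(R, t, p):
    """Return R * p + t for a 3D point p."""
    return [sum(R[i][k] * p[k] for k in range(3)) + t[i] for i in range(3)]

def fit_rigid_transform(src, dst, iters=2000):
    """Estimate (R, t) with dst ~= R * src + t from matched 3D points.

    Needs at least three non-collinear correspondences.
    """
    n = len(src)
    cs = [sum(p[i] for p in src) / n for i in range(3)]
    cd = [sum(p[i] for p in dst) / n for i in range(3)]
    # Cross-covariance of the centred point sets.
    S = [[0.0] * 3 for _ in range(3)]
    for p, q in zip(src, dst):
        for i in range(3):
            for j in range(3):
                S[i][j] += (p[i] - cs[i]) * (q[j] - cd[j])
    (sxx, sxy, sxz), (syx, syy, syz), (szx, szy, szz) = S
    # Horn's symmetric 4x4 matrix; its top eigenvector is the rotation quaternion.
    N = [
        [sxx + syy + szz, syz - szy, szx - sxz, sxy - syx],
        [syz - szy, sxx - syy - szz, sxy + syx, szx + sxz],
        [szx - sxz, sxy + syx, -sxx + syy - szz, syz + szy],
        [sxy - syx, szx + sxz, syz + szy, -sxx - syy + szz],
    ]
    # Shift the spectrum so the largest eigenvalue dominates the power iteration.
    shift = max(sum(abs(v) for v in row) for row in N)
    q = [1.0, 0.5, 0.25, 0.125]  # arbitrary generic start vector
    for _ in range(iters):
        q = [sum(N[i][k] * q[k] for k in range(4)) + shift * q[i] for i in range(4)]
        norm = math.sqrt(sum(v * v for v in q))
        q = [v / norm for v in q]
    R = quat_to_rot(q)
    rotated_centroid = apply_transform(R, [0.0, 0.0, 0.0], cs)
    t = [cd[i] - rotated_centroid[i] for i in range(3)]
    return R, t

# Synthetic check: generate camera-frame points from a known transform.
lidar_pts = [[0, 0, 0], [1, 0, 0], [0, 2, 0], [0, 0, 3], [1, 1, 1]]
raw_q = [0.9, 0.1, -0.3, 0.2]
q_norm = math.sqrt(sum(v * v for v in raw_q))
R_true = quat_to_rot([v / q_norm for v in raw_q])
t_true = [0.1, -0.2, 0.5]
camera_pts = [apply_transform(R_true, t_true, p) for p in lidar_pts]

R_est, t_est = fit_rigid_transform(lidar_pts, camera_pts)
max_err = max(
    abs(a - b)
    for p in lidar_pts
    for a, b in zip(apply_transform(R_est, t_est, p), apply_transform(R_true, t_true, p))
)
print("max alignment error:", max_err)
```

With real data the correspondences are noisy, so the recovered transform is a least-squares fit rather than an exact match, and averaging over several captures is a common way to stabilise it.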

4. Shanghai AI Lab OpenCalib

OpenCalib, produced by Shanghai Artificial Intelligence Laboratory, is a sensor calibration toolbox. It can be used to calibrate sensors such as IMU, lidar, camera and radar. Link: https://github.com/PJLab-ADG/SensorsCalibration

5. Apollo calibration toolbox

Link: https://github.com/ApolloAuto/apollo/tree/master/modules/calibration

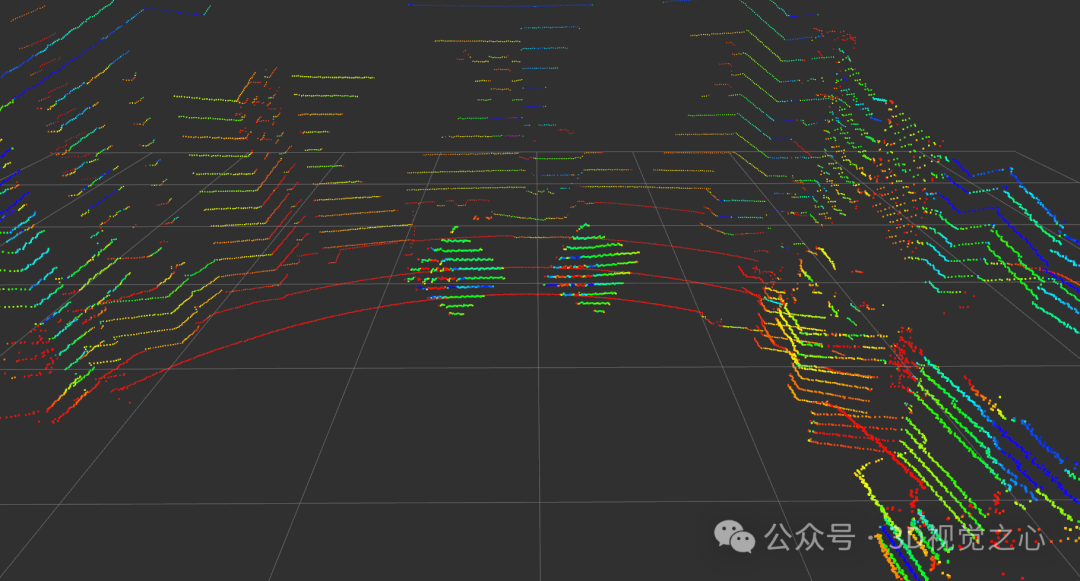

6. Livox-camera calibration tool

This solution provides a method for manually calibrating the extrinsic parameters between a Livox lidar and a camera, and has been verified on the Mid-40, Horizon and Tele-15. It includes code for computing the camera intrinsic parameters, collecting calibration data, optimizing the extrinsic parameters, and lidar-camera fusion applications. The corner points of the calibration board serve as the calibration targets. Because of the non-repetitive scanning pattern of Livox lidars, the point cloud becomes relatively dense, which makes it easier to locate the corner points accurately in the lidar point cloud. The resulting calibration and lidar-camera fusion also give good results.

Link: https://github.com/Livox-SDK/livox_camera_lidar_calibration

Chinese documentation: https://github.com/Livox-SDK/livox_camera_lidar_calibration/blob/master/doc_resources/README_cn.md
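The relationship between intrinsic parameters, extrinsic parameters and board corners can be illustrated with a small sketch. It projects lidar points into the image with an ideal pinhole model and measures how far the projected corners land from the corners picked in the image. All names and numbers below are hypothetical, lens distortion is ignored, and this is not the tool's own code.

```python
import math

def lidar_to_camera(p, R, t):
    """Map a lidar-frame point into the camera frame using extrinsics (R, t)."""
    return [sum(R[i][k] * p[k] for k in range(3)) + t[i] for i in range(3)]

def project_point(p_lidar, R, t, fx, fy, cx, cy):
    """Project a lidar point to pixel coordinates; None if it is behind the camera."""
    x, y, z = lidar_to_camera(p_lidar, R, t)
    if z <= 0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)

def mean_reprojection_error(corners_lidar, corners_pixel, R, t, fx, fy, cx, cy):
    """Mean pixel distance between projected lidar corners and image corners."""
    errors = []
    for p, (u_obs, v_obs) in zip(corners_lidar, corners_pixel):
        uv = project_point(p, R, t, fx, fy, cx, cy)
        if uv is not None:
            errors.append(math.hypot(uv[0] - u_obs, uv[1] - v_obs))
    return sum(errors) / len(errors) if errors else float("nan")

# Assumed axis conventions: lidar x forward, y left, z up;
# camera z forward, x right, y down.
R_LIDAR_TO_CAM = [[0, -1, 0], [0, 0, -1], [1, 0, 0]]
T_LIDAR_TO_CAM = [0.0, 0.0, 0.0]
FX, FY, CX, CY = 1000.0, 1000.0, 640.0, 360.0  # made-up intrinsics

corner = [10.0, 1.0, 0.5]  # a board corner in the lidar frame, in metres
pixel = project_point(corner, R_LIDAR_TO_CAM, T_LIDAR_TO_CAM, FX, FY, CX, CY)
print("projected pixel:", pixel)
```

An extrinsic optimisation would adjust `R` and `t` to reduce `mean_reprojection_error` over many corners, and the same projection can be reused to colour the point cloud from the image.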

7. CalibrationTools

CalibrationTools provides calibration tools for lidar-lidar, lidar-camera and other sensor pairs. In addition, it provides:

1) A localization bias estimation tool, which estimates the parameters of the sensors used for dead reckoning (IMU and odometry) to improve localization performance;

2) A visualization and analysis tool for Autoware control output;

3) A calibration tool for correcting vehicle command delays.

Link: https://github.com/tier4/CalibrationTools

8. MATLAB

MATLAB's own toolbox supports lidar and camera calibration. Link: https://ww2.mathworks.cn/help/lidar/ug/lidar-and-camera-calibration.html

9. ROS Calibration Tool

ROS Camera LIDAR Calibration Package, link: https://github.com/heethesh/lidar_camera_calibration

10. Direct visual lidar calibration

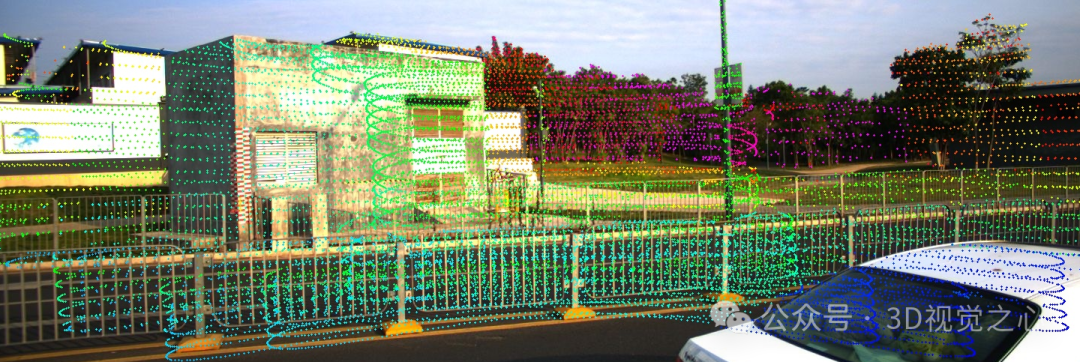

This package provides a toolbox for lidar-camera calibration with the following properties:

1) Universal: It can handle a variety of lidar and camera projection models, including spinning and non-repetitive scanning lidars, as well as pinhole, fisheye and omnidirectional projection cameras.

2) Target-free: It does not require calibration targets, but uses the structure and texture of the environment for calibration.

3) Single shot: Calibration requires as little as one pair of a lidar point cloud and a camera image. Optionally, multiple lidar-camera data pairs can be used to improve accuracy.

4) Automatic: The calibration process is automatic and requires no initial guess.

5) Accurate and robust: It uses a pixel-level direct lidar-camera registration algorithm, which is more robust and accurate than edge-based indirect lidar-camera registration.
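As a rough illustration of what a pixel-level direct cost can look like, the sketch below computes the normalized information distance (NID) between lidar intensities and the image intensities at the pixels those points project to. Treating NID as the cost is an assumption made for illustration and should be checked against the package's own documentation. The function names and the bin count are hypothetical.

```python
import math
from collections import Counter

def entropy(counts, total):
    """Shannon entropy in bits of a histogram given as a Counter."""
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

def nid(lidar_intensities, pixel_intensities, bins=16):
    """Normalized information distance between two paired value lists in [0, 1].

    0 means the two signals predict each other perfectly; 1 means they are
    statistically independent.
    """
    bx = [min(int(v * bins), bins - 1) for v in lidar_intensities]
    by = [min(int(v * bins), bins - 1) for v in pixel_intensities]
    n = len(bx)
    hx = entropy(Counter(bx), n)
    hy = entropy(Counter(by), n)
    hxy = entropy(Counter(zip(bx, by)), n)
    if hxy == 0:
        return 0.0  # both signals are constant
    return (2 * hxy - hx - hy) / hxy

# Perfectly related intensities versus unrelated ones.
aligned = nid([0.0, 0.0, 0.9, 0.9], [0.0, 0.0, 0.9, 0.9])
misaligned = nid([0.0, 0.0, 0.9, 0.9], [0.0, 0.9, 0.0, 0.9])
print("aligned:", aligned, "misaligned:", misaligned)
```

In a direct method of this kind, the extrinsic parameters determine which pixel each lidar point lands on, so changing them changes the paired intensities, and the optimiser searches for the pose that gives the lowest distance.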

Link: https://github.com/koide3/direct_visual_lidar_calibration

11. 2D lidar-camera toolbox

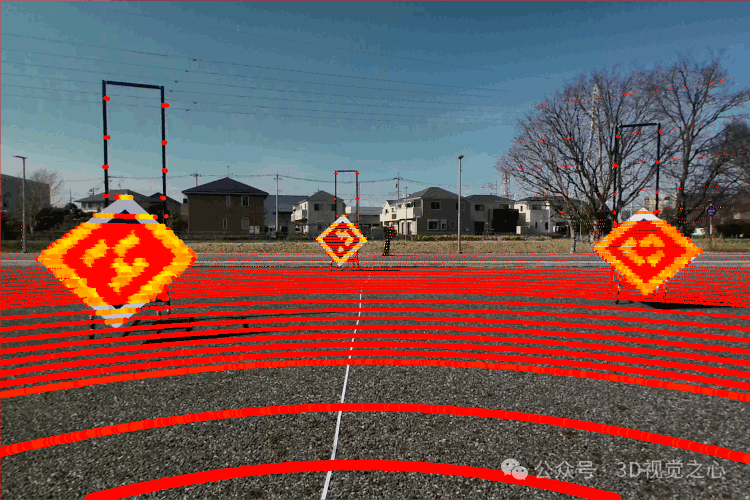

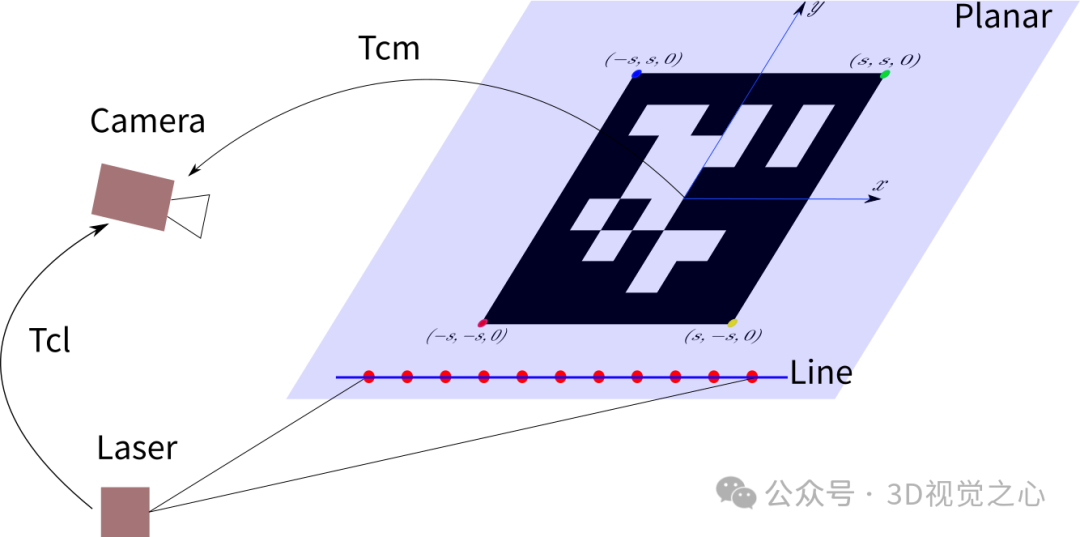

This is ROS-based code for automatically calibrating the extrinsic parameters between a single-line laser scanner and a camera. The calibration principle is illustrated in the accompanying figure. The camera uses the QR code to estimate the plane equation of the calibration board in the camera coordinate system. Because the laser points fall on that plane, they can be transformed from the laser coordinate system into the camera coordinate system using the extrinsic parameters. The distance from each transformed point to the plane is then used as the error, and the extrinsic parameters are solved with nonlinear least squares. Link: https://github.com/MegviiRobot/CamLaserCalibraTool
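The point-to-plane error described above can be sketched in a few lines. The plane is written as n·x + d = 0 in the camera frame, and the laser points are mapped into that frame with candidate extrinsic parameters. The function names and sample values are hypothetical, and the nonlinear least-squares solver itself is left out.

```python
import math

def transform(R, t, p):
    """Map a laser-frame point into the camera frame with extrinsics (R, t)."""
    return [sum(R[i][k] * p[k] for k in range(3)) + t[i] for i in range(3)]

def point_to_plane_residuals(laser_pts, R, t, normal, d):
    """Signed distances from transformed laser points to the plane n.x + d = 0."""
    norm = math.sqrt(sum(v * v for v in normal))
    residuals = []
    for p in laser_pts:
        pc = transform(R, t, p)
        residuals.append((sum(normal[i] * pc[i] for i in range(3)) + d) / norm)
    return residuals

def calibration_cost(laser_pts, R, t, normal, d):
    """Sum of squared point-to-plane distances, the quantity to be minimised."""
    return sum(r * r for r in point_to_plane_residuals(laser_pts, R, t, normal, d))

# Board plane z = 2 m in the camera frame, as it might be estimated from the marker.
plane_normal, plane_d = [0.0, 0.0, 1.0], -2.0
# Laser hits along one scan line that lie on the board.
laser_pts = [[-0.2, 0.0, 2.0], [0.0, 0.0, 2.0], [0.2, 0.0, 2.0]]

identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
cost_true = calibration_cost(laser_pts, identity, [0.0, 0.0, 0.0], plane_normal, plane_d)
cost_off = calibration_cost(laser_pts, identity, [0.0, 0.0, 0.1], plane_normal, plane_d)
print("cost at true extrinsics:", cost_true, "cost with 10 cm offset:", cost_off)
```

A single board pose constrains only part of the transform, so in practice the cost is accumulated over many board poses before a solver minimises it over the rotation and translation.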
