Significantly improving GPT-4/Llama2 performance without RLHF: Peking University team proposes Aligner, a new alignment paradigm


Background

Large language models (LLMs) have demonstrated powerful capabilities, but they can also produce unpredictable and harmful output, such as offensive responses, false information, and leaked private data, all of which harm users and society. Ensuring that the behavior of these models aligns with human intentions and values is an urgent challenge.

Although reinforcement learning from human feedback (RLHF) offers a solution, it faces multiple challenges: a complex training architecture, high sensitivity to hyperparameters, and reward models that are unstable across different data sets. These factors make RLHF difficult to implement, to make effective, and to reproduce. To overcome these challenges, the Peking University team proposed a new, efficient alignment paradigm: Aligner.

The core of Aligner is to learn the correction residual between aligned and unaligned answers, thereby bypassing the cumbersome RLHF process. Drawing on the ideas of residual learning and scalable oversight, Aligner simplifies the alignment process. It uses a Seq2Seq model to learn implicit residuals and optimizes alignment through copy and residual-correction steps.

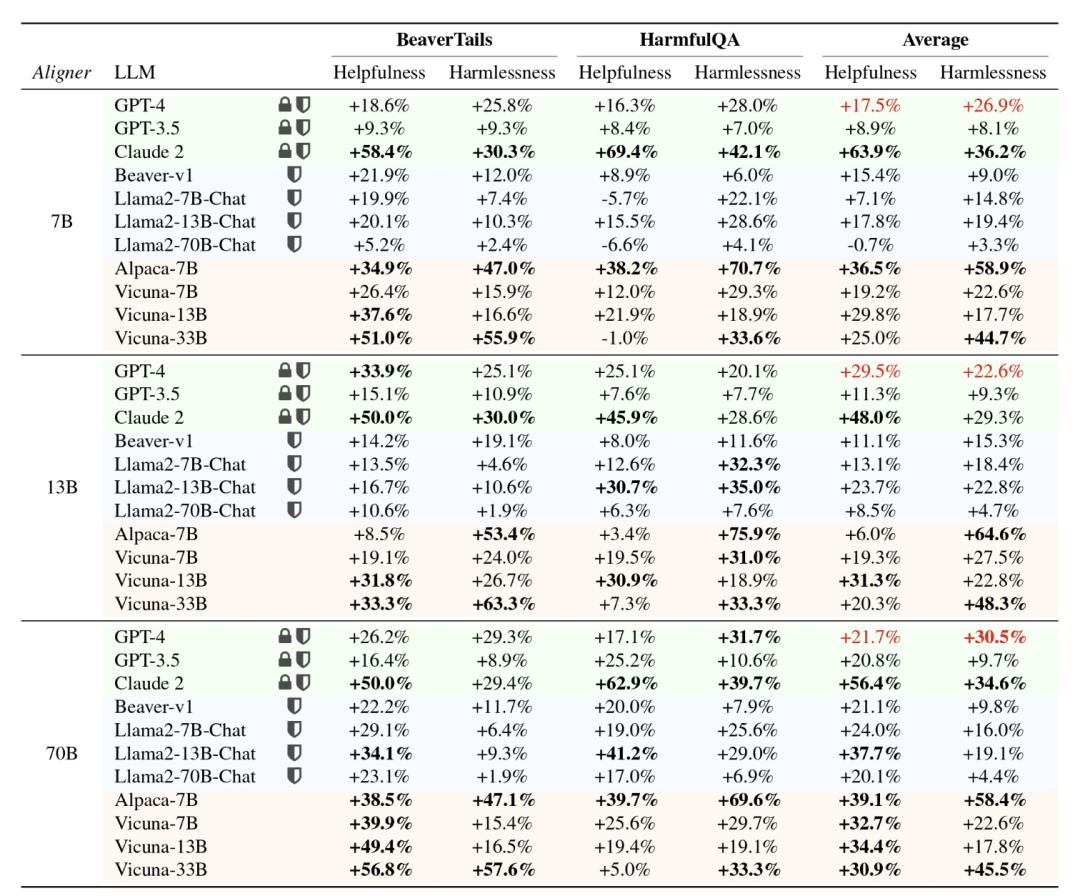

Compared with RLHF, which requires training multiple models, the advantage of Aligner is that alignment can be achieved simply by adding a module after the model to be aligned. Furthermore, the computational resources required depend primarily on the desired alignment effect rather than on the size of the upstream model. Experiments show that Aligner-7B significantly improves the helpfulness and safety of GPT-4, with helpfulness increasing by 17.5% and safety by 26.9%. These results suggest that Aligner is an efficient and effective alignment method and a feasible way to improve model performance.

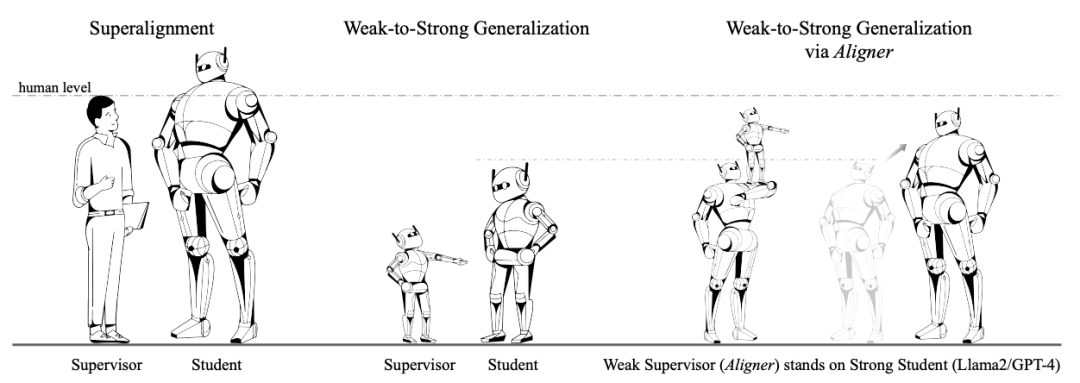

In addition, using the Aligner framework, the authors enhance the performance of a strong model (Llama-70B) with the supervision signal of a weak model (Aligner-13B), achieving weak-to-strong generalization and providing a practical solution for superalignment.

- Paper address: https://arxiv.org/abs/2402.02416

- Project homepage & open source address: https://aligner2024.github.io

- Title: Aligner: Achieving Efficient Alignment through Weak-to-Strong Correction

What is Aligner?

Aligner is based on one core insight: correcting unaligned answers is easier than generating aligned answers.

As an efficient alignment method, Aligner has the following features:

- As an autoregressive Seq2Seq model, Aligner is trained on a Query-Answer-Correction (Q-A-C) data set to learn the difference between aligned and unaligned answers, thereby achieving more accurate model alignment. For example, when aligning a 70B LLM, Aligner-7B massively reduces the number of trained parameters: it is 16.67 times smaller than DPO and 30.7 times smaller than RLHF.

- The Aligner paradigm realizes weak-to-strong generalization. It uses the supervision signal of an Aligner model with a small number of parameters to fine-tune LLMs with a large number of parameters, which significantly improves the performance of the strong model. For example, fine-tuning Llama2-70B under Aligner-13B supervision improved its helpfulness and safety by 8.2% and 61.6%, respectively.

- Because Aligner is plug-and-play and model-agnostic, it can align models such as GPT-3.5, GPT-4 and Claude 2 whose parameters are not accessible. With just one training run, Aligner-7B improves the helpfulness and safety of 11 models, including closed-source, open-source, safety-aligned, and non-safety-aligned models. Among them, Aligner-7B improves the helpfulness and safety of GPT-4 by 17.5% and 26.9%, respectively.

Aligner overall performance

The authors show that Aligners of various sizes (7B, 13B, 70B) improve performance for both API-based models and open-source models (including safety-aligned and non-safety-aligned ones). In general, as the Aligner becomes larger, its performance gradually improves and the density of information it can provide during correction increases, which also makes the corrected answers safer and more helpful.

How to train an Aligner model?

1. Query-Answer (Q-A) data collection

The authors obtain queries from various open-source data sets, including Stanford Alpaca, user conversations shared on ShareGPT, HH-RLHF, and others. These queries undergo duplicate-pattern removal and quality filtering before being used to generate answers and corrected answers. Uncorrected answers were generated using various open-source models such as Alpaca-7B, Vicuna-(7B,13B,33B), Llama2-(7B,13B)-Chat, and Alpaca2-(7B,13B).

2. Answer correction

The authors use GPT-4, Llama2-70B-Chat, and human annotation to correct the answers in the Q-A data set according to the 3H criteria for large language models (helpfulness, safety, honesty).

Answers that already meet the criteria are left as they are. The correction process is based on a set of well-defined principles that establish constraints for training the Seq2Seq model, with a focus on making answers more helpful and safer. The distribution of answers changes significantly before and after correction. The following figure clearly shows the impact of the correction on the data set:
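The correction step described above can be sketched as follows. This is a minimal illustration, not the authors' pipeline: `QACRecord`, `build_qac`, `meets_criteria`, and `correct` are hypothetical names, with `correct` standing in for whichever annotator (GPT-4, Llama2-70B-Chat, or a human) produces the revision.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class QACRecord:
    query: str
    answer: str      # original, uncorrected answer
    correction: str  # revised answer; identical to `answer` if no change was needed


def build_qac(
    qa_pairs: List[Tuple[str, str]],
    meets_criteria: Callable[[str, str], bool],  # hypothetical 3H check
    correct: Callable[[str, str], str],          # hypothetical annotator
) -> List[QACRecord]:
    """Turn Q-A pairs into Q-A-C records, leaving acceptable answers as they are."""
    records = []
    for query, answer in qa_pairs:
        if meets_criteria(query, answer):
            correction = answer  # already acceptable: keep unchanged
        else:
            correction = correct(query, answer)
        records.append(QACRecord(query, answer, correction))
    return records
```

Keeping unchanged answers in the data set means the model also sees cases where the right correction is a plain copy.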

3. Model training

Based on the above process, the authors constructed a new revised data set $\mathcal{M} = \{(x^{(i)}, y_o^{(i)}, y_c^{(i)})\}_{i=1}^{N}$, where $x$ represents the user's question, $y_o$ is the original answer to the question, and $y_c$ is the revised answer based on established principles.

The model training process is relatively simple. The authors train a conditional Seq2Seq model $\mu_\phi(y_c \mid y_o, x)$ parameterized by $\phi$ such that the original answers $y_o$ are redistributed to aligned answers.

The alignment answer generation process based on the upstream large language model $\pi_\theta$ is:

$$\pi'(y_c \mid x) = \mu_\phi(y_c \mid y_o, x)\,\pi_\theta(y_o \mid x)$$

The training loss is as follows:

$$-\mathbb{E}_{\mathcal{M}}\left[\log \pi'(y_c \mid x)\right] = -\mathbb{E}_{\mathcal{M}}\left[\log \mu_\phi(y_c \mid y_o, x)\right] - \mathbb{E}_{\mathcal{M}}\left[\log \pi_\theta(y_o \mid x)\right]$$

The second term has nothing to do with the Aligner parameters $\phi$. The training objective of Aligner can therefore be derived as:

$$\min_{\phi} \mathcal{L}_{\text{Aligner}}(\phi, \mathcal{M}) = -\mathbb{E}_{\mathcal{M}}\left[\log \mu_\phi(y_c \mid y_o, x)\right]$$
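Because only the Aligner's own parameters are trained, the objective reduces to the negative log-likelihood of the corrected answer given the query and the original answer. The toy sketch below shows that computation on per-token log-probabilities. Averaging over tokens and masking out the query and original-answer tokens are assumptions based on common practice for sequence models, and `aligner_nll` is a hypothetical helper name.

```python
import math
from typing import Sequence


def aligner_nll(token_log_probs: Sequence[float], is_correction: Sequence[bool]) -> float:
    """Mean negative log-likelihood over correction tokens only.

    Tokens of the query and the original answer act as conditioning context
    and are masked out (an assumption, not confirmed by the article).
    """
    if len(token_log_probs) != len(is_correction):
        raise ValueError("log-probs and mask must have the same length")
    selected = [lp for lp, keep in zip(token_log_probs, is_correction) if keep]
    if not selected:
        raise ValueError("no correction tokens to score")
    return -sum(selected) / len(selected)


# Toy example: two context tokens followed by two correction tokens.
log_probs = [math.log(0.9), math.log(0.8), math.log(0.5), math.log(0.25)]
mask = [False, False, True, True]
loss = aligner_nll(log_probs, mask)  # only the last two tokens contribute
```

The upstream model never appears in this computation, which is consistent with its term dropping out of the objective.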

The following figure dynamically shows the intermediate process of Aligner:

It is worth noting that Aligner requires no access to the parameters of the upstream model in either the training or the inference stage. At inference time, Aligner only needs the user's question and the initial answer generated by the upstream large language model, and it then generates an answer that is more consistent with human values.

Correcting existing answers rather than answering directly allows Aligner to align with human values more easily, which significantly lowers the requirements on model capability.
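A minimal sketch of this inference flow, treating both models as black-box text functions. The prompt template and the names `ALIGNER_TEMPLATE` and `aligned_answer` are assumptions made for illustration; the template the authors actually use may differ.

```python
from typing import Callable

# Hypothetical template: the Aligner sees the question and the original answer.
ALIGNER_TEMPLATE = "Question: {question}\nOriginal answer: {answer}\nCorrected answer:"


def aligned_answer(
    question: str,
    upstream: Callable[[str], str],  # any model or API that maps text to text
    aligner: Callable[[str], str],   # the trained Aligner, also text to text
) -> str:
    """Query the upstream model, then let the Aligner correct its answer."""
    original = upstream(question)  # black-box call: no weights or logits needed
    prompt = ALIGNER_TEMPLATE.format(question=question, answer=original)
    return aligner(prompt)
```

Since `upstream` is only ever called as a function, the same wrapper would work for an API-only model and for a locally hosted one.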

Aligner vs existing alignment paradigm

Aligner vs SFT

In contrast to Aligner, SFT directly learns a cross-domain mapping from the query semantic space to the answer semantic space. This learning process relies on the upstream model to infer and simulate various contexts in the semantic space, which is much harder than learning a correction signal.

The Aligner training paradigm can be viewed as a form of residual learning (residual correction). The authors introduce a "copy and correct" learning paradigm in Aligner. Aligner thus essentially learns a residual mapping from the answer semantic space to the corrected-answer semantic space, two spaces that are distributionally closer to each other.

To this end, the authors constructed Q-A-A data in different proportions from the Q-A-C training data set and first trained Aligner on identity mapping (also called copy mapping); this is called the warm-up step. On this basis, the entire Q-A-C training data set is then used for training. The residual learning paradigm is also used in ResNet to address the vanishing-gradient problem caused by stacking very deep neural networks. Experimental results show that the model achieves its best performance when the warm-up ratio is 20%.
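The two-stage data preparation can be sketched as below. Sampling the Q-A-A subset uniformly at random is an assumption (the article only says the data were constructed "in different proportions"), and `warmup_split` is a hypothetical helper.

```python
import random
from typing import List, Tuple

Triple = Tuple[str, str, str]  # (query, answer, target)


def warmup_split(
    qac: List[Triple], ratio: float = 0.2, seed: int = 0
) -> Tuple[List[Triple], List[Triple]]:
    """Return (Q-A-A identity-mapping examples, full Q-A-C set).

    Stage 1 trains on the first list (copy the answer unchanged);
    stage 2 trains on the second list (produce the correction).
    """
    if not 0.0 <= ratio <= 1.0:
        raise ValueError("ratio must be between 0 and 1")
    rng = random.Random(seed)
    subset = rng.sample(qac, int(len(qac) * ratio))
    # In the warm-up data the target is simply a copy of the original answer.
    qaa = [(q, a, a) for q, a, _ in subset]
    return qaa, list(qac)
```

With `ratio=0.2`, the setting the article reports as best, one fifth of the examples are first used for identity mapping before training on the full correction data.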

Aligner vs RLHF

RLHF trains a reward model (RM) on a human preference data set and uses this reward model to fine-tune LLMs with the PPO algorithm so that their behavior matches human preferences.

Specifically, the reward model must map human preference data from a discrete space to a continuous numerical space for optimization. Compared with a Seq2Seq model, which generalizes well in text space, such a numerical reward model generalizes poorly in text space, which makes the effect of RLHF unstable across different models.

Aligner learns the difference (the residual) between aligned and unaligned answers by training a Seq2Seq model, thereby avoiding the RLHF process and achieving more generalizable performance than RLHF.

Aligner vs. Prompt Engineering

Prompt engineering is a common way to elicit the capabilities of LLMs. However, it has some key problems: prompts are difficult to design, and different models need different designs. The final effect depends on the capabilities of the model; when those are not sufficient for the task, multiple iterations may be required, which wastes the context window. The limited context window of small models constrains the effect of prompt engineering, and for large models, occupying a long context greatly increases the cost of training.

Aligner itself can support the alignment of any model. After a single training run, it can align 11 different types of models without occupying the context window of the original model. It is worth noting that Aligner can be seamlessly combined with existing prompt engineering methods to achieve a 1+1>2 effect.

In general, Aligner shows the following significant advantages:

1. Aligner training is simpler. Compared with RLHF's complex reward model learning and the reinforcement learning (RL) fine-tuning built on that model, Aligner's implementation is more direct and easier to operate. Given the many engineering tuning details involved in RLHF and the inherent instability and hyperparameter sensitivity of RL algorithms, Aligner greatly reduces engineering complexity.

2. Aligner needs less training data and has an obvious alignment effect. Training an Aligner-7B model on 20K data improves the helpfulness of GPT-4 by 12% and its safety by 26%, and improves the helpfulness of the Vicuna 33B model by 29% and its safety by 45.3%, whereas RLHF requires more preference data and careful hyperparameter tuning to achieve this effect.

3. Aligner does not need to touch the model weights. While RLHF has proven effective for model alignment, it relies on training the model directly. Its applicability is limited for non-open-source, API-based models such as GPT-4 and for their fine-tuning needs in downstream tasks. In contrast, Aligner does not manipulate the original parameters of the model; it achieves flexible alignment by externalizing the alignment requirements into an independent alignment module.

4. Aligner is insensitive to model type. Under the RLHF framework, fine-tuning different models (such as Llama2 and Alpaca) requires not only re-collecting preference data but also adjusting training parameters in the reward model training and RL phases. Aligner can support the alignment of any model through one-time training. For example, after being trained only once on a correction data set, Aligner-7B can align 11 different models (including open-source models and API models such as GPT) and improve helpfulness and safety by 21.9% and 23.8%, respectively.

5. Aligner's demand for training resources is more flexible. Fine-tuning a 70B model with RLHF is still extremely computationally demanding and requires hundreds of GPUs, because RLHF additionally loads reward, actor, and critic models of comparable parameter count. In terms of training resource consumption per unit time, RLHF therefore actually requires more computing resources than pre-training.

In comparison, Aligner provides a more flexible training strategy, allowing users to choose the training scale of Aligner based on their actual computing resources. For example, to align a 70B model, users can choose Aligner models of different sizes (7B, 13B, 70B, etc.) according to the resources actually available and still achieve effective alignment of the target model.

This flexibility not only reduces the absolute demand for computing resources, but also provides users with the possibility of efficient alignment under limited resources.

Weak-to-strong Generalization

Weak-to-strong generalization asks whether labels from a weak model can be used to train a strong model so that the strong model's performance improves. OpenAI uses this setup as an analogy for the superalignment problem. Specifically, they use ground truth labels to train weak models.

OpenAI researchers conducted some preliminary experiments. For example, on a text classification task, the training data set is divided into two halves. The inputs and ground truth labels of the first half are used to train the weak model, while for the second half only the inputs are retained and the labels are produced by the weak model. When training the strong model, only these weak labels provide the supervision signal.

The purpose of training the weak model on ground truth labels is to give it the ability to solve the corresponding task, but the inputs used to generate weak labels are not the same as the inputs used to train the weak model. This paradigm is similar to the concept of "teaching", that is, using weak models to guide strong models.
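The data flow of this setup can be illustrated with a toy sketch. The "weak model" here is a one-dimensional threshold classifier chosen purely for brevity; it and the helper names `fit_threshold` and `weak_to_strong_data` are illustrative assumptions, not OpenAI's actual models or code.

```python
from typing import Callable, List, Tuple

Example = Tuple[float, int]  # (input, label)


def fit_threshold(train: List[Example]) -> Callable[[float], int]:
    """Toy weak model: threshold halfway between the two class means."""
    pos = [x for x, y in train if y == 1]
    neg = [x for x, y in train if y == 0]
    threshold = (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2
    return lambda x: int(x > threshold)


def weak_to_strong_data(data: List[Example]) -> List[Example]:
    """Build the strong model's training set from weak labels only."""
    half = len(data) // 2
    first, second = data[:half], data[half:]
    weak_model = fit_threshold(first)  # trained on ground truth (first half)
    # Ground-truth labels of the second half are discarded; the strong model
    # would only ever see the weak model's predictions.
    return [(x, weak_model(x)) for x, _ in second]


data = [(-2.0, 0), (2.0, 1), (-1.0, 0), (1.0, 1),
        (-3.0, 0), (3.0, 1), (0.5, 0), (4.0, 1)]
weak_labeled = weak_to_strong_data(data)
```

In this toy data the input `0.5` has ground-truth label 0 but receives weak label 1, showing that the strong model is trained on imperfect supervision.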

The author proposes a novel weak-to-strong generalization paradigm based on the properties of Aligner.

The authors' core idea is to let Aligner act as a "supervisor standing on the shoulders of giants." Unlike OpenAI's method of directly supervising the "giant", Aligner modifies the stronger model's output through weak-to-strong correction, providing more accurate labels in the process.

Specifically, during Aligner's training process, the correction data contains annotations from GPT-4, human annotators, and larger models. Subsequently, the authors use Aligner to generate weak labels (i.e. corrections) on a new Q-A data set, and then use these weak labels to fine-tune the original model.

Experimental results show that this paradigm can further improve the alignment performance of the model.
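The weak-label generation step can be sketched as follows. The record layouts (`prompt`/`target` for SFT, `prompt`/`chosen`/`rejected` for preference-based training) are common conventions assumed here for illustration, and `weak_label_datasets` is a hypothetical helper. Treating the correction as preferred over the original answer follows the article's description of RLHF and DPO training in its experimental section.

```python
from typing import Callable, Dict, List, Tuple


def weak_label_datasets(
    qa_pairs: List[Tuple[str, str]],
    aligner: Callable[[str, str], str],  # maps (question, answer) to a correction
) -> Tuple[List[Dict[str, str]], List[Dict[str, str]]]:
    """Build SFT and preference data sets from Aligner corrections."""
    sft, pref = [], []
    for q, a in qa_pairs:
        a_prime = aligner(q, a)  # weak label: the Aligner's correction
        # SFT treats the correction as the target label.
        sft.append({"prompt": q, "target": a_prime})
        # Preference-based training treats the correction as better than the original.
        pref.append({"prompt": q, "chosen": a_prime, "rejected": a})
    return sft, pref
```

Either data set can then be used to fine-tune the stronger upstream model that produced the original answers.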

Experimental results

Aligner vs SFT/RLHF/DPO

The authors use Aligner's Query-Answer-Correction training data set to fine-tune Alpaca-7B with the SFT, RLHF, and DPO methods respectively. For evaluation, the open-source BeaverTails and HarmfulQA test prompt sets are used, and the answers generated by the fine-tuned models are compared, in terms of helpfulness and safety, with the answers of the original Alpaca-7B model after correction by Aligner.

Analyzing specific experimental cases shows that models fine-tuned with the RLHF/DPO paradigm may lean toward conservative answers in order to improve safety, yet fail to preserve safety while improving helpfulness, which leads to an increase in dangerous information in their answers.

Aligner vs Prompt Engineering

The authors compare the performance improvement of Aligner-13B and the CAI/Self-Critique methods on the same upstream model. Aligner-13B improves GPT-4 more than the CAI/Self-Critique methods in both helpfulness and safety, which indicates that the Aligner paradigm has a clear advantage over commonly used prompt engineering methods. It is worth noting that in the experiment CAI prompts are used only at inference time to encourage the model to revise its own answers, which is also one form of Self-Refine.

The authors also explored further: they used Aligner to correct answers produced with the CAI method and directly compared the answers before and after Aligner.

- Method A: CAI + Aligner
- Method B: CAI only

After Aligner revises the CAI answers a second time, the answers improve significantly in helpfulness without losing safety. This shows that Aligner is not only highly competitive when used alone, but can also be combined with other existing alignment methods to further improve their performance.

Weak-to-strong Generalization

Method: weak-to-strong. The training data set consists of (q, a, a′) triples, where q is a question from the Aligner training data set (50K), a is the answer generated by the Alpaca-7B model, and a′ is the aligned answer given by Aligner-7B for (q, a). Unlike SFT, which uses only a′ as the ground truth label, RLHF and DPO training treat a′ as better than a.

The authors used Aligner to correct the original answers on a new Q-A data set, used the corrected answers as weak labels, and used these weak labels as supervision signals to train a larger model. This process is similar to OpenAI's training paradigm. The authors train strong models on the weak labels with three methods: SFT, RLHF and DPO. The experimental results reported in the table show that when the upstream model is fine-tuned through SFT, the weak labels of Aligner-7B and Aligner-13B improve the performance of the Llama2 series of strong models in all scenarios.

Outlook: Potential research directions of Aligner

As an innovative alignment method, Aligner has considerable research potential. In the paper, the authors propose several application scenarios for Aligner, including:

1. Application to multi-turn dialogue scenarios. In multi-turn conversations, the challenge of sparse rewards is particularly prominent. In question-and-answer (QA) conversations, supervision signals in scalar form are typically available only at the end of the conversation. This sparsity problem is further amplified over multiple turns (such as continuous QA scenarios), making it difficult for reinforcement learning from human feedback (RLHF) to be effective. Investigating Aligner's potential to improve alignment in multi-turn dialogue is an area worthy of further exploration.

2. Alignment of human values to the reward model. In the multi-stage process of building reward models from human preferences and fine-tuning large language models (LLMs), ensuring that LLMs are aligned with specific human values (e.g. fairness, empathy) is a huge challenge. Handing the value alignment task to an Aligner module outside the model, and training Aligner on a specific corpus, not only provides new ideas for value alignment but also enables Aligner to correct the output of the upstream model so that it reflects specific values.

3. Streaming and parallel processing of MoE-Aligner. By specializing and integrating Aligners, one can create a more powerful and comprehensive mixture-of-experts (MoE) Aligner that meets multiple mixed safety and value alignment needs. At the same time, further improving Aligner's parallel processing capabilities to reduce inference-time overhead is a feasible development direction.

4. Fusion during model training. By integrating an Aligner layer after a specific weight layer, real-time intervention in the output during model training can be achieved. This method not only improves alignment efficiency, but also helps optimize the model training process and achieve more efficient model alignment.

Team Introduction

This work was independently completed by Yang Yaodong's research team at the AI Security and Governance Center of the Institute of Artificial Intelligence of Peking University. The team is deeply involved in alignment technology for large language models, including the open-source million-level safety alignment preference data set BeaverTails (NeurIPS 2023) and the safety alignment algorithm SafeRLHF (ICLR 2024 Spotlight) for large language models. Related technologies have been adopted by multiple open-source models. The team also wrote the industry's first comprehensive survey of artificial intelligence alignment, accompanied by the resource website www.alignmentsurvey.com, which systematically explains the AI alignment problem from four perspectives: Learning from Feedback, Learning under Distribution Shift, Assurance, and Governance. The team's views on alignment and superalignment were featured on the cover of issue 5, 2024 of Sanlian Life Weekly.
