New IT operation and maintenance management requires hard work on both infrastructure and data

In the era of large AI models, data gives IT people a “new mission”

Today, IT people play an operational support role in the enterprise. Anyone who has worked in operation and maintenance management knows how hard it is: they handle tedious, high-load, high-risk operation and maintenance work every day, yet they become "invisible" when it comes to business planning and career development. As an industry joke goes: "Those who only spend money do not deserve a say."

With the spread of large AI model applications, data has become a key asset and a core competitive advantage for enterprises. In recent years, enterprise data volumes have grown exponentially, from the petabyte level to hundreds of petabytes. Data types have also evolved from structured data held in databases to semi-structured and unstructured data such as files, logs, and videos. To meet the needs of business departments, data must be stored in a classified, easily accessible way, like books in a library, and the storage itself must be more secure and reliable.

IT people are no longer just passive players who build and manage IT resources and keep equipment stable.

Their new mission is to provide high-quality data services, make data easy to use, and help business departments put data to good use.

"Infrastructure" and "data" are very close, but their "management" is far apart

For infrastructure management, a common industry approach is to use AIOps to turn tedious, manual day-to-day operation and maintenance into automated, tool-driven execution. Intelligent capabilities such as expert systems and knowledge graphs can proactively discover hidden system risks and automatically repair faults. With the spread of generative AI, new applications such as intelligent customer service and interactive operation and maintenance have recently emerged.

For data management, the industry has specialized DataOps software vendors, represented by leading companies such as Informatica and IBM. Their products support data integration, data labeling, data analysis, data optimization, data marketplaces, and other capabilities, and serve business teams such as data analysts, BI analysts, and data scientists.

The author’s research found that in most enterprises, infrastructure operation and maintenance management and data management are currently separate: different teams are responsible for them, and their tool platforms do not collaborate effectively. Business data is stored on IT infrastructure such as storage systems, so the two should be managed together. In practice, however, they are far apart, and the two teams do not even share a common vocabulary. This usually brings several disadvantages:

1) Data from different sources: Because the teams and tools differ, business teams usually copy the original data to the data management platform through ETL or similar methods for analysis and processing. This not only wastes storage space, but also leads to inconsistent and stale data, which affects the accuracy of data analysis.

2) Difficulty in cross-regional collaboration: Enterprise data centers are now deployed in multiple cities. When data is transferred across regions, it is currently replicated mainly at the host layer by DataOps software. This method is not only inefficient, but also carries serious security, compliance, and privacy risks during transmission.

3) Insufficient system optimization: Optimization today is usually based on the utilization of infrastructure resources. Because the data layout is not visible at that level, global optimization cannot be achieved, and the cost of data storage remains high. The tension between limited budget growth and exponential data growth has become the key constraint on the accumulation of enterprise data assets.

IT people: connect "infrastructure" and "data" to start the flywheel of digital intelligence

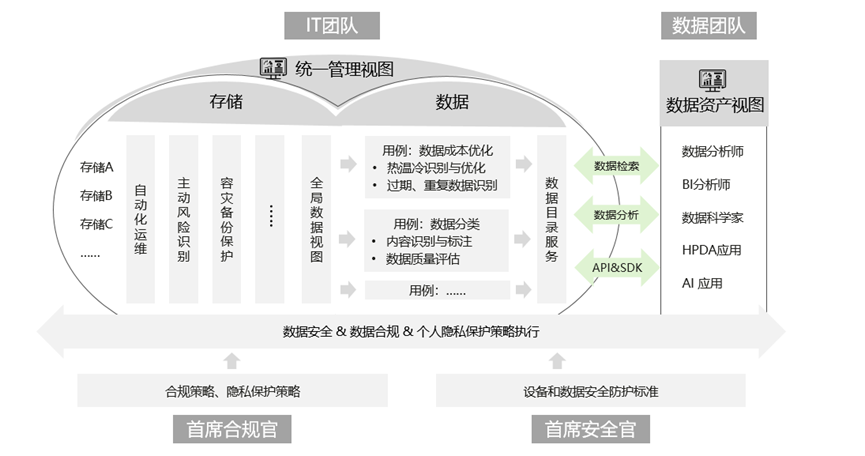

The author believes that the IT team should manage and optimize "infrastructure" and "data" as an organic whole, achieving single-source data, global optimization, and secure data flow, and should take on the important role of data asset manager.

First, achieve a unified global view of files. Use technologies such as a global file system and unified metadata management to form a single view of data across regions, data centers, and device types. On this basis, global optimization strategies can be formulated along dimensions such as hot, warm, cold, duplicate, and expired data, and then sent to the storage devices for execution. Replication at the storage layer, combined with technologies such as compression and encryption, can usually move data dozens of times faster, with both efficiency and security assured.
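
The classification step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `FileRecord`, `classify`, and the 7-day and 90-day thresholds are hypothetical names and values chosen for the example, not part of any vendor's product. The sketch assumes that a unified metadata view already exists and only shows how a global policy could label each file before the result is handed to storage devices.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass(frozen=True)
class FileRecord:
    """One entry in a hypothetical global metadata view."""
    path: str
    site: str                  # data center or region that holds the file
    content_hash: str          # used to spot duplicates across sites
    last_access: datetime
    expires_at: Optional[datetime] = None


def classify(records, now, hot_days=7, warm_days=90):
    """Label each file as expired, duplicate, hot, warm, or cold.

    The thresholds are illustrative assumptions, not recommended values.
    """
    seen_hashes = set()
    labels = {}
    # Sort by path so that the copy kept as the "original" is deterministic.
    for rec in sorted(records, key=lambda r: r.path):
        if rec.expires_at is not None and rec.expires_at <= now:
            labels[rec.path] = "expired"
            continue  # an expired copy never counts as the original
        if rec.content_hash in seen_hashes:
            labels[rec.path] = "duplicate"
            continue
        seen_hashes.add(rec.content_hash)
        age = now - rec.last_access
        if age <= timedelta(days=hot_days):
            labels[rec.path] = "hot"
        elif age <= timedelta(days=warm_days):
            labels[rec.path] = "warm"
        else:
            labels[rec.path] = "cold"
    return labels
```

In a real deployment the labels would drive actions such as tiering, deduplication, or deletion on the storage devices themselves; the sketch stops at the labeling step because that is the part that depends on the global view.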

Second, automatically generate a data catalog from massive unstructured data. Catalog services generated automatically from metadata and enhanced metadata make it possible to manage data efficiently by category. Based on the catalog, the business team can automatically extract the data that meets its conditions for analysis and processing, instead of manually searching for a needle in a haystack. The author's research found that data annotation through AI recognition algorithms is relatively mature. Open frameworks can therefore be used to integrate AI algorithms for different scenarios, automatically analyze file content to produce diverse tags, and use those tags as enhanced metadata to improve data management.
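
As a rough illustration of the idea, the sketch below builds a small tag-based catalog with pluggable taggers. All names here (`extension_tagger`, `keyword_tagger`, `build_catalog`, `find`) are hypothetical, and the keyword matcher is only a stand-in for a real AI recognition model, which the article does not specify.

```python
from collections import defaultdict
from pathlib import PurePosixPath


def extension_tagger(path, content):
    """Tag by file type using basic metadata (the file extension)."""
    suffix = PurePosixPath(path).suffix.lower().lstrip(".")
    return {f"type:{suffix}"} if suffix else set()


def keyword_tagger(path, content):
    """Stand-in for an AI recognition model: a simple keyword match."""
    tags = set()
    if b"invoice" in content.lower():
        tags.add("topic:finance")
    return tags


def build_catalog(files, taggers):
    """Build an inverted index that maps each tag to the paths carrying it."""
    catalog = defaultdict(set)
    for path, content in files.items():
        for tagger in taggers:
            for tag in tagger(path, content):
                catalog[tag].add(path)
    return {tag: sorted(paths) for tag, paths in catalog.items()}


def find(catalog, *tags):
    """Return the paths that carry every one of the given tags."""
    if not tags:
        return []
    matches = [set(catalog.get(tag, ())) for tag in tags]
    return sorted(set.intersection(*matches))
```

Because each tagger is just a function that takes a path and content and returns a set of tags, a model for a new scenario can be added without changing the catalog code, which is one way to read the "open framework" the text mentions.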

At the same time, when data flows across devices, special consideration must be given to issues such as data sovereignty, compliance, and privacy. Data in storage devices should be automatically classified, graded by privacy level, and separated by permission and domain. The management software should uniformly manage policies for data access, use, and flow to prevent the leakage of sensitive information and private data. These will become basic requirements in future data element trading scenarios. For example, before data leaves a storage device, it must first be checked against compliance and personal privacy policies; otherwise the enterprise will face serious legal and regulatory risks.
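
A minimal sketch of such a pre-transfer check follows. The sensitivity levels, the `FlowPolicy` fields, and the `may_transfer` function are assumptions made for illustration; real policies would come from the enterprise's own compliance rules and would be considerably richer.

```python
from dataclasses import dataclass
from enum import IntEnum


class Sensitivity(IntEnum):
    """Hypothetical privacy grades, ordered from least to most sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    PERSONAL = 2


@dataclass(frozen=True)
class DataObject:
    path: str
    sensitivity: Sensitivity
    home_region: str


@dataclass(frozen=True)
class FlowPolicy:
    max_sensitivity: Sensitivity   # highest grade allowed to leave the device
    allowed_regions: frozenset     # regions the data may be sent to


def may_transfer(obj, destination_region, policy):
    """Check an object against the policy before it leaves its storage device.

    Returns a (allowed, reason) pair so that a refusal can be audited.
    """
    if obj.sensitivity > policy.max_sensitivity:
        return False, "sensitivity exceeds policy limit"
    if (destination_region != obj.home_region
            and destination_region not in policy.allowed_regions):
        return False, "destination region not permitted"
    return True, "ok"
```

Returning a reason alongside the decision is a deliberate choice in this sketch: when a transfer is refused, the management software can log why, which supports the audit trail that compliance reviews typically require.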

The reference architecture is as follows:

After conducting research and consulting peer experts, the author found that leading storage vendors such as Huawei Storage and NetApp have released product solutions that integrate storage and data management. The author expects more vendors to support this in the future.

Equipment and data must both be held firmly, with neither hand weaker than the other. By doing so, IT people can play a more important role in the AI era.
