Published in the Nature sub-journal, the University of Waterloo team comments on the present and future of 'quantum computers + large language models'

One of the key challenges in simulating today's quantum computing devices is learning and encoding the complex correlations between qubits. Emerging techniques based on machine-learning language models have demonstrated a unique ability to learn quantum states.

Recently, researchers from the University of Waterloo published a perspective article titled "Language models for quantum simulation" in Nature Computational Science. It highlights the important contributions language models have made to building quantum computers and explores their potential role in the future competition for quantum advantage. The authors argue that language models can help address both the complexity of quantum systems and the accuracy needed to simulate them, that they can help researchers better understand and optimize the performance of quantum algorithms, and that they offer new ideas for the development of quantum computers. They also suggest that language models could help accelerate that development and are expected to deliver results on practical problems.

Paper link: https://www.nature.com/articles/s43588-023-00578-0

Quantum computers have begun to mature, and many devices have recently claimed quantum advantage. At the same time, classical computing capabilities continue to develop, most notably through the rapid rise of machine learning, which has led to many exciting scenarios involving the interplay between quantum and classical strategies. As machine learning is rapidly integrated into the quantum computing stack, it raises the question: could it transform quantum technology in powerful ways in the future?

One of the main challenges facing current quantum computers is learning quantum states. Recently emerged generative models offer two common strategies to solve the problem of learning quantum states.

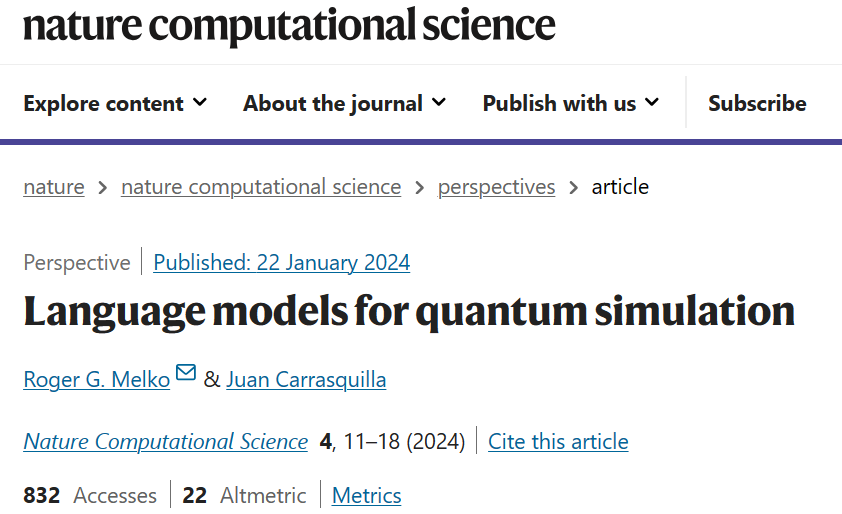

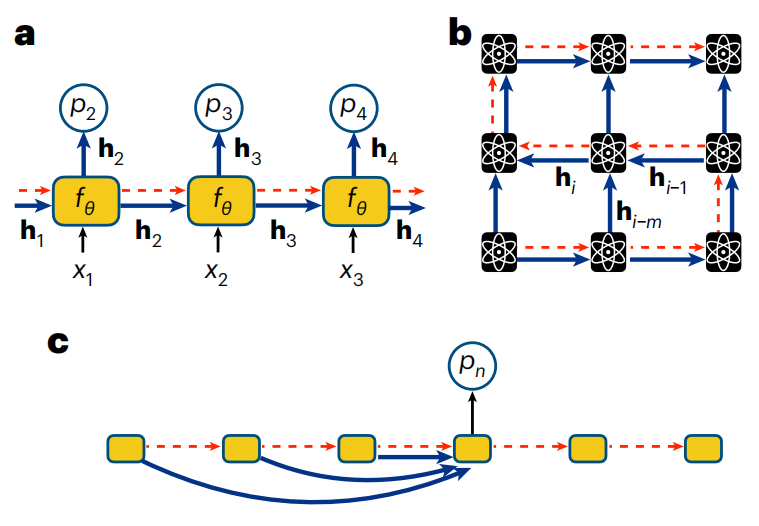

Illustration: Generative models for natural language and other fields. (Source: paper)

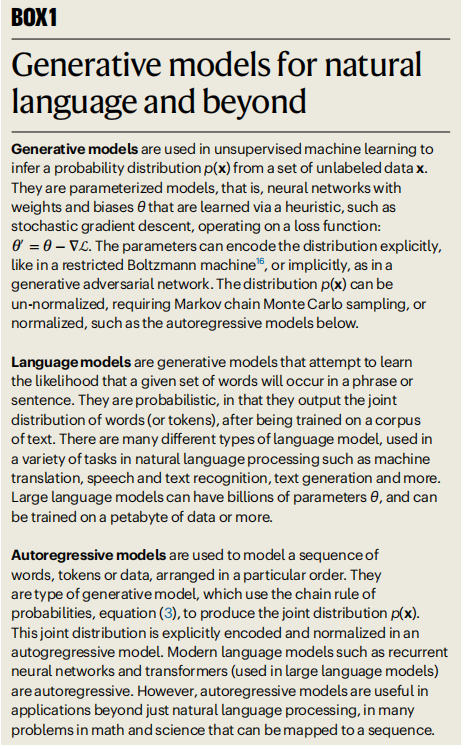

First, traditional maximum likelihood methods can be used for data-driven learning on datasets that represent the output of a quantum computer. Second, a physics-inspired approach can exploit knowledge of the interactions between qubits to define a surrogate loss function.
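As a rough illustration of the data-driven strategy, the sketch below computes the average negative log-likelihood (NLL) of measured bitstrings under a model distribution. Maximum likelihood training means adjusting the model's parameters to minimize this quantity. The model is a deliberately simple, hypothetical one that treats each qubit as an independent Bernoulli variable, so its maximum likelihood solution is just the per-qubit frequency of 1s. It is not the architecture discussed in the paper, and the measurement record is invented.

```python
import math
from typing import List, Sequence


def product_log_prob(bits: Sequence[int], probs_one: Sequence[float], eps: float = 1e-12) -> float:
    """log p(x) for a model that treats each qubit as an independent Bernoulli variable."""
    total = 0.0
    for b, p in zip(bits, probs_one):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += math.log(p if b == 1 else 1.0 - p)
    return total


def average_nll(data: List[Sequence[int]], probs_one: Sequence[float]) -> float:
    """Data-driven loss: mean negative log-likelihood of the measurement outcomes."""
    return -sum(product_log_prob(x, probs_one) for x in data) / len(data)


def fit_product_model(data: List[Sequence[int]]) -> List[float]:
    """Closed-form maximum likelihood estimate for this product model:
    the empirical frequency of outcome 1 on each qubit."""
    n = len(data[0])
    return [sum(x[i] for x in data) / len(data) for i in range(n)]


# Hypothetical measurement record from a 3-qubit device (computational basis).
samples = [(0, 1, 1), (0, 1, 0), (1, 1, 1), (0, 1, 1)]
theta = fit_product_model(samples)
print(theta, average_nll(samples, theta), average_nll(samples, [0.5, 0.5, 0.5]))
```

Real generative models replace the product model with a network whose conditionals can capture correlations, but the loss being minimized has the same form.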

In either case, as the number of qubits N increases, the size of the quantum state space (the Hilbert space) grows exponentially, a phenomenon known as the curse of dimensionality. This creates major challenges, both in the number of parameters needed to represent quantum states as models are scaled up and in the computational cost of finding optimal parameter values. Generative models based on artificial neural networks are well suited to overcoming this problem.
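The arithmetic behind the curse of dimensionality is easy to check. A generic state of N qubits has 2^N complex amplitudes. The sketch below assumes each amplitude is stored as a 128-bit complex number (16 bytes, two 64-bit floats) and reports the memory that a dense state vector would need.

```python
def state_vector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for a dense state vector: 2**N amplitudes, complex128 (16 bytes) by default."""
    return (2 ** n_qubits) * bytes_per_amplitude


for n in (10, 30, 50):
    gib = state_vector_bytes(n) / 2 ** 30
    print(f"N={n:2d}: 2^N = {2 ** n:>20,d} amplitudes, {gib:,.3f} GiB")
```

Each extra qubit doubles the requirement, which is why compact parameterizations such as neural networks are attractive.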

Language models are a particularly promising class of generative model and have become a powerful architecture for solving highly complex language problems. Because they scale well, they are also suited to problems in quantum computing. Now that industrial language models are moving into the range of trillions of parameters, it is natural to wonder what similarly large models could achieve in physics, whether in applications such as scaling up quantum computing or in the fundamental theoretical understanding of quantum matter, materials and devices.

Illustration: Quantum physics problems and their variational formulations. (Source: Paper)

Autoregressive Models for Quantum Computing

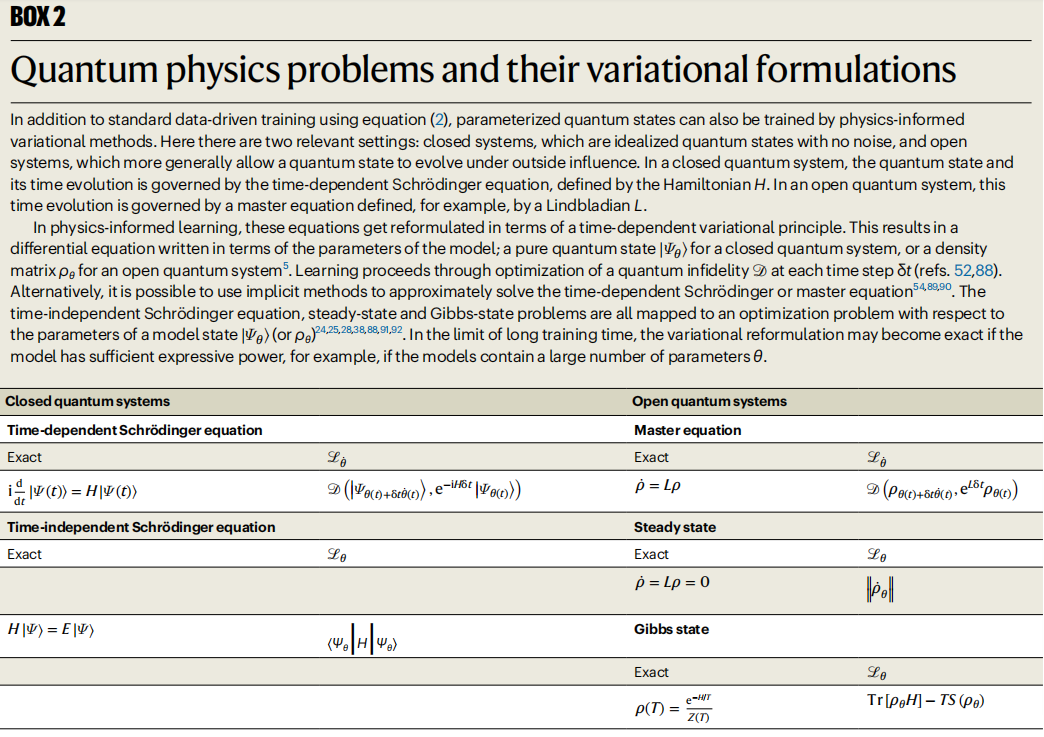

Language models are generative models designed to infer probability distributions from natural language data.

The task of a generative model is to learn probabilistic relationships between words appearing in the corpus, allowing the generation of new phrases one token at a time. The main difficulty lies in modeling all the complex dependencies between words.

Similar challenges apply to quantum computers, where non-local correlations such as entanglement can lead to highly non-trivial dependencies between qubits. Therefore, an interesting question is whether the powerful autoregressive architectures developed in industry can also be applied to solve problems in strongly correlated quantum systems.
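For text and qubits alike, the autoregressive strategy rests on the chain rule of probability, p(x_1, ..., x_N) = p(x_1) p(x_2 | x_1) ... p(x_N | x_1, ..., x_{N-1}). This factorization lets a model generate one token, or one qubit, at a time. The sketch below uses a hypothetical hand-written conditional, `toy_conditional`, in place of a trained network. It only illustrates the factorization and exact one-qubit-at-a-time sampling.

```python
import itertools
import math
import random
from typing import Callable, List, Sequence, Tuple

# A conditional returns p(x_i = 1 | x_1 .. x_{i-1}) given the prefix sampled so far.
Conditional = Callable[[Sequence[int]], float]


def toy_conditional(prefix: Sequence[int]) -> float:
    """Hypothetical conditional: tends to repeat the previous bit (a simple correlation)."""
    if not prefix:
        return 0.5
    return 0.9 if prefix[-1] == 1 else 0.1


def log_prob(bits: Sequence[int], cond: Conditional) -> float:
    """Chain rule: log p(x) = sum_i log p(x_i | x_<i)."""
    total = 0.0
    for i, b in enumerate(bits):
        p1 = cond(bits[:i])
        total += math.log(p1 if b == 1 else 1.0 - p1)
    return total


def sample(n: int, cond: Conditional, rng: random.Random) -> Tuple[int, ...]:
    """Draw one configuration a qubit at a time, conditioning on the bits drawn so far."""
    bits: List[int] = []
    for _ in range(n):
        bits.append(1 if rng.random() < cond(bits) else 0)
    return tuple(bits)


rng = random.Random(0)
print([sample(4, toy_conditional, rng) for _ in range(3)])
# The factorized distribution is normalized by construction (sum is 1 up to rounding).
print(sum(math.exp(log_prob(x, toy_conditional)) for x in itertools.product((0, 1), repeat=4)))
```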

Illustration: Autoregressive strategies for text and qubit sequences. (Source: Paper)

RNN Wave Function

An RNN (recurrent neural network) is any neural network with recurrent connections, so that the output of an RNN unit depends on its previous output. Since 2018, the use of RNNs has rapidly expanded to cover a variety of the most challenging tasks in understanding quantum systems.

A key advantage that makes RNNs suitable for these tasks is their ability to learn and encode highly non-trivial correlations between qubits, including quantum entanglement, which is non-local in nature.
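To make the idea concrete, here is a minimal, untrained sketch of an RNN wave function with positive real amplitudes. It follows the general approach described above but is not the paper's code. A tanh recurrent cell carries a hidden vector along the qubit chain, a sigmoid output gives p(x_i = 1 | x_<i), and the amplitude is psi(x) = sqrt(p(x)). The hidden size, the random initialization and the restriction to non-negative amplitudes are assumptions made for brevity. Practical versions are trained, and many also output a phase.

```python
import itertools
import math
import random
from typing import List, Sequence


class TinyRNNWavefunction:
    """Vanilla RNN over a qubit chain; the hidden state summarizes the bits seen so far."""

    def __init__(self, hidden: int = 4, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.hidden = hidden
        # Recurrent weights W, input weights U (one input bit), and bias b.
        self.W = [[rng.gauss(0, 0.5) for _ in range(hidden)] for _ in range(hidden)]
        self.U = [rng.gauss(0, 0.5) for _ in range(hidden)]
        self.b = [rng.gauss(0, 0.1) for _ in range(hidden)]
        # Output layer: hidden state -> logit of p(x_i = 1 | x_<i).
        self.v = [rng.gauss(0, 0.5) for _ in range(hidden)]
        self.c = 0.0

    def _step(self, h: List[float], x_prev: int) -> List[float]:
        """One recurrent update: h' = tanh(W h + U x_prev + b)."""
        return [
            math.tanh(sum(self.W[j][k] * h[k] for k in range(self.hidden))
                      + self.U[j] * x_prev + self.b[j])
            for j in range(self.hidden)
        ]

    def log_prob(self, bits: Sequence[int]) -> float:
        """Autoregressive log p(x): each conditional sees only earlier qubits via h."""
        h = [0.0] * self.hidden
        x_prev = 0  # fixed start input for the first qubit
        total = 0.0
        for b in bits:
            h = self._step(h, x_prev)
            logit = sum(vj * hj for vj, hj in zip(self.v, h)) + self.c
            p1 = 1.0 / (1.0 + math.exp(-logit))
            total += math.log(p1 if b == 1 else 1.0 - p1)
            x_prev = b
        return total

    def amplitude(self, bits: Sequence[int]) -> float:
        """Positive amplitude psi(x) = sqrt(p(x)); normalized because p is."""
        return math.exp(0.5 * self.log_prob(bits))


psi = TinyRNNWavefunction(hidden=4, seed=1)
norm = sum(psi.amplitude(x) ** 2 for x in itertools.product((0, 1), repeat=5))
print(norm)  # 1 up to floating-point rounding
```

Because the amplitude is built from normalized conditionals, the state is normalized exactly, and it can be sampled one qubit at a time without Markov-chain sampling.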

Illustration: RNN for qubit sequences. (Source: paper)

Physicists have used RNNs in a variety of innovative ways related to quantum computing. RNNs have been used to reconstruct quantum states from qubit measurements. They can also simulate the dynamics of quantum systems, which is considered one of the most promising applications of quantum computing and therefore a key task in defining quantum advantage. RNNs have also been used to build neural error-correcting decoders, a key element in the development of fault-tolerant quantum computers. In addition, RNNs can be trained with both data-driven and physics-inspired optimization, which enables a growing number of innovative uses in quantum simulation.

The physics community continues to actively develop RNNs, hoping to use them for the increasingly complex computational tasks encountered in the era of quantum advantage. RNNs are computationally competitive with tensor networks on many quantum tasks and can naturally exploit qubit measurement data. Together, these strengths suggest that RNNs will continue to play an important role in simulating complex tasks on quantum computers.

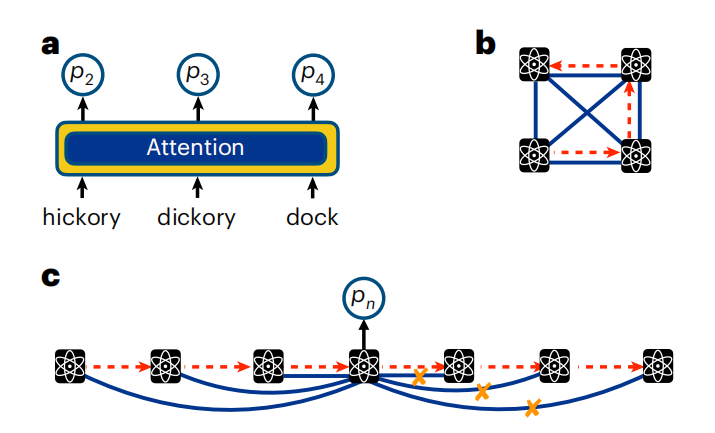

Transformer Quantum State

While RNNs achieved great success in natural language tasks over the years, in industry they have recently been overshadowed by the Transformer's self-attention mechanism, which is a key component of the encoder-decoder architecture of today's large language models (LLMs).

The success of scaling Transformers, and the important questions raised by the non-trivial emergent phenomena they exhibit in language tasks, have long fascinated physicists, for whom achieving scale is a major goal of quantum computing research.

In essence, a Transformer used in this way is simply an autoregressive model. However, RNNs encode correlations implicitly through hidden vectors. In a Transformer, by contrast, the conditional distributions depend explicitly on all preceding variables in the sequence, consistent with the autoregressive property. This is achieved through causally masked self-attention.
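The causal masking behind the Transformer's autoregressive property can be illustrated with a single attention head in plain Python. Position i may attend only to positions j ≤ i, so the output at i depends on the current and earlier tokens and never on later ones. The query, key and value vectors below are made-up numbers. In a real Transformer they are learned projections of token embeddings. When the model is used autoregressively, the output at position i feeds the prediction of the next qubit, so it must not see that qubit.

```python
import math
from typing import List

Matrix = List[List[float]]


def causal_self_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Single-head scaled dot-product attention with a causal (lower-triangular) mask."""
    n, d = len(q), len(q[0])
    out: Matrix = []
    for i in range(n):
        # Scores only for j <= i; excluding j > i is equivalent to setting
        # those scores to -infinity before the softmax.
        scores = [sum(q[i][t] * k[j][t] for t in range(d)) / math.sqrt(d) for j in range(i + 1)]
        m = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - m) for s in scores]
        z = sum(weights)
        weights = [w / z for w in weights]
        out.append([sum(weights[j] * v[j][c] for j in range(i + 1)) for c in range(len(v[0]))])
    return out


# Hypothetical 2-dimensional query/key/value vectors for 3 qubit tokens.
q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
k = [[1.0, 0.0], [0.0, 1.0], [1.0, -1.0]]
v = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
print(causal_self_attention(q, k, v))
```

Changing the value vector of the last token leaves the outputs at earlier positions unchanged, which is exactly the property the autoregressive factorization needs.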

Illustration: Attention for text and qubit sequences. (Source: paper)

As with language data, attention in quantum systems is computed by taking qubit measurements and transforming them through a series of parameterized functions. By training a set of these parameterized functions, the Transformer can learn the dependencies between qubits. With the attention mechanism, there is no need to relate the geometry of the hidden states passed along the sequence (as in RNNs) to the physical arrangement of the qubits.

By leveraging this architecture, Transformers with billions or trillions of parameters can be trained.

Hybrid two-step optimization, which combines data-driven and physics-inspired learning, is important for the current generation of quantum computers. Transformers have been shown to mitigate errors in the imperfect output data of today's devices, and may form the basis for robust error-correction protocols that support the development of truly fault-tolerant hardware in the future.
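The two-step schedule itself can be sketched in a few lines, with heavy simplifications. This is not a Transformer. The stand-in model is a hypothetical product state with one parameter per qubit, and the target is a transverse-field Ising chain, H = -J Σ Z_i Z_{i+1} - h Σ X_i, chosen only for illustration. Step 1 fits the parameters to (invented) measurement data by maximum likelihood. Step 2 then lowers the variational energy by gradient descent, using finite-difference gradients in place of automatic differentiation.

```python
import math
from typing import List, Sequence


def sigmoid(t: float) -> float:
    return 1.0 / (1.0 + math.exp(-t))


def logit(p: float, eps: float = 1e-3) -> float:
    p = min(max(p, eps), 1.0 - eps)  # clip so pretraining never yields infinite parameters
    return math.log(p / (1.0 - p))


def product_state_energy(theta: Sequence[float], J: float = 1.0, h: float = 1.0) -> float:
    """<psi|H|psi> for H = -J sum Z_i Z_{i+1} - h sum X_i on an open chain and the
    product state sqrt(1 - p_i)|0> + sqrt(p_i)|1> on each qubit, p_i = sigmoid(theta_i)."""
    p = [sigmoid(t) for t in theta]
    z = [1.0 - 2.0 * pi for pi in p]                    # <Z_i>
    x = [2.0 * math.sqrt(pi * (1.0 - pi)) for pi in p]  # <X_i>
    return -J * sum(z[i] * z[i + 1] for i in range(len(p) - 1)) - h * sum(x)


def pretrain(data: List[Sequence[int]]) -> List[float]:
    """Step 1 (data-driven): maximum likelihood fit, i.e. the per-qubit frequency of 1s."""
    n = len(data[0])
    return [logit(sum(s[i] for s in data) / len(data)) for i in range(n)]


def finetune(theta: List[float], steps: int = 200, lr: float = 0.1, d: float = 1e-5) -> List[float]:
    """Step 2 (physics-inspired): gradient descent on the variational energy,
    with gradients from central finite differences for brevity."""
    theta = list(theta)
    for _ in range(steps):
        grad = []
        for i in range(len(theta)):
            up, dn = list(theta), list(theta)
            up[i] += d
            dn[i] -= d
            grad.append((product_state_energy(up) - product_state_energy(dn)) / (2 * d))
        theta = [t - lr * g for t, g in zip(theta, grad)]
    return theta


# Hypothetical noisy measurement record for 4 qubits.
data = [(0, 0, 0, 1), (0, 0, 0, 0), (1, 0, 0, 0), (0, 0, 1, 0)]
theta0 = pretrain(data)
theta1 = finetune(theta0)
print(product_state_energy(theta0), product_state_energy(theta1))
```

The data-driven step supplies a starting point, and the Hamiltonian-based step corrects it. In the approaches the article describes, both steps act on a far more expressive model.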

As the scope of research involving Transformers in quantum physics continues to rapidly expand, a series of interesting questions remain.

The Future of Language Models for Quantum Computing

Although physicists have only been exploring them for a short time, language models are showing promise in quantum computing. They have already achieved remarkable success on a wide range of challenges in the field, and these results point to many promising directions for future research.

Another key use case for language models in quantum physics comes from their ability to be optimized not through data but through knowledge of the fundamental qubit interactions, as encoded in a Hamiltonian or Lindbladian.
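Optimizing through knowledge of a Hamiltonian usually means minimizing the variational energy E = ⟨ψ|H|ψ⟩ / ⟨ψ|ψ⟩. For a model that returns an amplitude ψ(x), this energy equals the average of the local energy E_loc(x) = Σ_x' H_{x,x'} ψ(x') / ψ(x) over configurations drawn from |ψ(x)|². This is what makes sampling-based (variational Monte Carlo) training of autoregressive models possible. The sketch below makes several assumptions. It uses a transverse-field Ising chain as an illustrative Hamiltonian not taken from the article, and it handles only the Hamiltonian case, not Lindbladians. It evaluates the average exactly by enumerating a few qubits instead of sampling, and it requires ψ to be nonzero everywhere.

```python
import itertools
from typing import Callable, Tuple

Config = Tuple[int, ...]


def local_energy(x: Config, psi: Callable[[Config], float], J: float, h: float) -> float:
    """E_loc(x) for H = -J sum_i Z_i Z_{i+1} - h sum_i X_i on an open chain,
    with Z|0> = +|0> and Z|1> = -|1>."""
    s = [1 - 2 * b for b in x]  # Z eigenvalues (+1 or -1)
    diag = -J * sum(s[i] * s[i + 1] for i in range(len(x) - 1))
    off = 0.0
    for i in range(len(x)):  # X_i flips qubit i
        flipped = x[:i] + (1 - x[i],) + x[i + 1:]
        off += -h * psi(flipped) / psi(x)
    return diag + off


def variational_energy(psi: Callable[[Config], float], n: int,
                       J: float = 1.0, h: float = 1.0) -> float:
    """Exact E = sum_x |psi(x)|^2 E_loc(x) / sum_x |psi(x)|^2 over all 2^n configurations."""
    configs = list(itertools.product((0, 1), repeat=n))
    weights = [psi(x) ** 2 for x in configs]
    num = sum(w * local_energy(x, psi, J, h) for w, x in zip(weights, configs))
    return num / sum(weights)


def uniform(x: Config) -> float:
    """Equal amplitudes: the product state |+>^n, whose exact energy here is -h * n."""
    return 1.0


print(variational_energy(uniform, n=4))
```

In practice, the enumeration is replaced by samples drawn autoregressively from the model, and the gradient of the sampled average is used to update the network's parameters.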

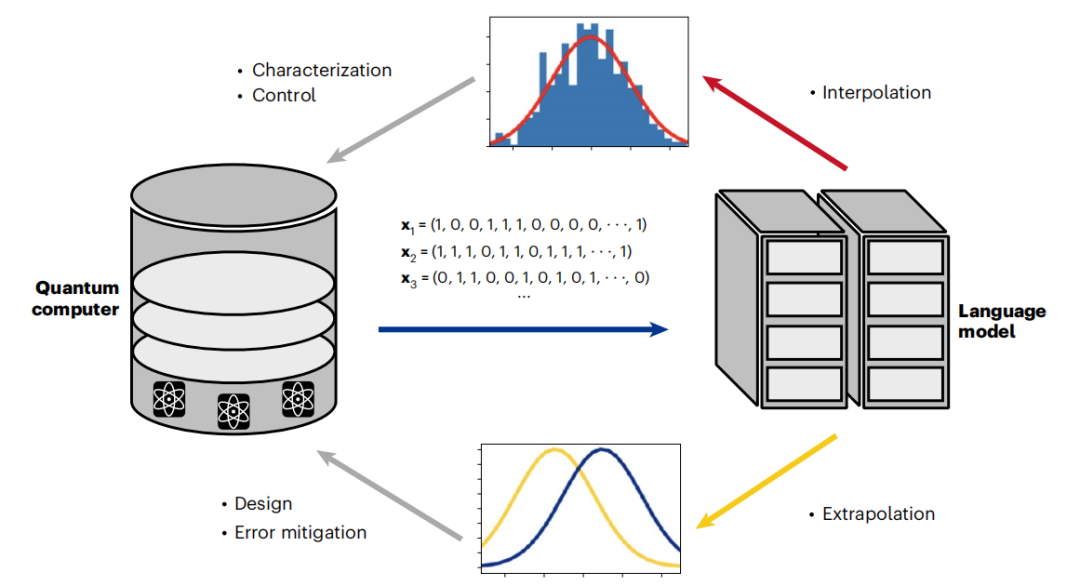

Finally, language models open up a new area of hybrid training that combines data-driven and variational optimization. These strategies offer new ways to mitigate errors and have shown significant improvements in variational simulations. Because generative models have recently been adapted into quantum error-correcting decoders, hybrid training could be an important step toward the future holy grail of fault-tolerant quantum computers. This suggests that a virtuous cycle is about to emerge between quantum computers and the language models trained on their output.

Illustration: Language models enable the scaling of quantum computing through a virtuous cycle. (Source: Paper)

Looking ahead, the most exciting opportunities for connecting language models with quantum computing lie in their ability to demonstrate scale and emergence.

Today, with the demonstration of emergent properties in LLMs, a new frontier has been crossed, raising many interesting questions. Given enough training data, could an LLM learn a digital twin of a quantum computer? How will incorporating language models into the control stack affect the characterization and design of quantum computers? At sufficient scale, could an LLM exhibit the emergence of macroscopic quantum phenomena such as superconductivity?

While theorists ponder these questions, experimental and computational physicists have begun to apply language models in earnest to the design, characterization and control of today's quantum computers. As we cross the threshold of quantum advantage, we are also entering new territory in scaling up language models. It is hard to predict how the collision of quantum computers and LLMs will unfold. What is clear is that the fundamental shifts driven by the interplay of these technologies have already begun.
