Handwriting recognition technology and its algorithm classification

Advances in machine learning are driving progress in handwriting recognition. This article focuses on the handwriting recognition techniques and algorithms that currently perform well.

Capsule Networks (CapsNets)

Capsule networks are one of the more recent and advanced neural network architectures, and they are considered an important improvement over existing machine learning techniques.

Pooling layers in convolutional blocks reduce data dimensionality and provide spatial invariance for identifying and classifying objects in images. The drawback is that much of the spatial information about an object's position, rotation, scale, and other pose properties is lost in the process. As a result, image classification accuracy can be high while the ability to locate objects precisely in the image is poor.
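To make this information loss concrete, here is a minimal Python sketch. The helper `max_pool2d` and the toy 4x4 images are illustrative and do not come from any library. A bright pixel placed at two different positions inside the same 2x2 window produces exactly the same pooled output.

```python
def max_pool2d(image, size=2):
    """Non-overlapping max pooling over size x size windows (stride = size)."""
    rows, cols = len(image), len(image[0])
    return [
        [max(image[r][c] for r in range(i, i + size) for c in range(j, j + size))
         for j in range(0, cols - size + 1, size)]
        for i in range(0, rows - size + 1, size)
    ]

# The same bright "stroke" pixel at two different positions inside one window.
a = [[1, 0, 0, 0],
     [0, 0, 0, 0],
     [0, 0, 0, 0],
     [0, 0, 0, 0]]
b = [[0, 0, 0, 0],
     [0, 1, 0, 0],
     [0, 0, 0, 0],
     [0, 0, 0, 0]]

print(max_pool2d(a))                   # [[1, 0], [0, 0]]
print(max_pool2d(a) == max_pool2d(b))  # True: the exact position is gone
```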

A capsule is a group of neurons that stores information about an object, such as its position, rotation, and scale, as a vector in a high-dimensional space. Each dimension of the vector represents a particular property of the object.

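The kernels that generate feature maps and extract visual features feed into capsules, which are combined through dynamic routing. The individual "opinions" (predictions) of multiple lower-level capsules are weighted by how well they agree before they are passed to higher-level capsules. This yields equivariance rather than just invariance: pose information is preserved and changes along with the input. It also improves performance compared to CNNs.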
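The routing-by-agreement idea can be sketched in plain Python. This is a minimal illustration that assumes the squash nonlinearity and iterative routing of the original CapsNet formulation. `u_hat` (the prediction vectors that lower capsules make for upper capsules), `dynamic_routing`, and the toy numbers are hypothetical. A real implementation would operate on learned tensors in a deep learning framework.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def squash(s, eps=1e-9):
    """Shrink the vector length into [0, 1) while keeping its direction."""
    norm_sq = sum(x * x for x in s)
    scale = norm_sq / (1.0 + norm_sq) / math.sqrt(norm_sq + eps)
    return [scale * x for x in s]

def length(v):
    return math.sqrt(sum(x * x for x in v))

def dynamic_routing(u_hat, iterations=3):
    """u_hat[i][j]: prediction vector of lower capsule i for upper capsule j.
    Returns upper-capsule outputs v[j] and coupling coefficients c[i][j]."""
    n_in, n_out, dim = len(u_hat), len(u_hat[0]), len(u_hat[0][0])
    b = [[0.0] * n_out for _ in range(n_in)]  # routing logits, start uniform
    for _ in range(iterations):
        c = [softmax(row) for row in b]       # each lower capsule splits its vote
        v = []
        for j in range(n_out):
            s_j = [sum(c[i][j] * u_hat[i][j][d] for i in range(n_in)) for d in range(dim)]
            v.append(squash(s_j))
        # raise the logit where a prediction agrees (dot product) with the output
        for i in range(n_in):
            for j in range(n_out):
                b[i][j] += sum(p * q for p, q in zip(u_hat[i][j], v[j]))
    return v, c

# Two lower capsules agree about upper capsule 0 and disagree about upper capsule 1.
u_hat = [
    [[2.0, 0.0], [2.0, 0.0]],
    [[2.0, 0.0], [-2.0, 0.0]],
]
v, c = dynamic_routing(u_hat)
print([round(length(x), 3) for x in v])  # capsule 0 is long, capsule 1 is ~0
```

The agreeing predictions reinforce each other and produce a long output vector, which signals a confidently detected entity. The conflicting predictions cancel out.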

Multi-dimensional Recurrent Neural Network (MDRNN)

RNNs and LSTMs (Long Short-Term Memory networks) process sequential data, but only one-dimensional sequences such as text. They cannot be applied directly to images.

Multidimensional recurrent neural networks replace the single recurrent connection of a standard recurrent neural network with as many recurrent connections as the data has dimensions.

During the forward pass, at each point in the data, the hidden layer receives both the external input and its own activations from one step back along each dimension.
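Here is a minimal sketch of this 2D recurrence. It makes several simplifying assumptions: a scalar hidden state, hypothetical fixed weights (`w_in`, `w_up`, `w_left`), a tanh unit instead of an LSTM cell, and a single scan direction starting from the top-left corner. Multidirectional MDRNNs run such scans from all four corners and combine the results.

```python
import math

def mdrnn_2d(image, w_in=0.5, w_up=0.3, w_left=0.3, bias=0.0):
    """Single-direction 2D RNN scan with a scalar hidden state (weights are hypothetical).
    h[i][j] depends on the input at (i, j) and on the hidden state one step back
    along each dimension: h[i-1][j] (vertical) and h[i][j-1] (horizontal)."""
    rows, cols = len(image), len(image[0])
    h = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            up = h[i - 1][j] if i > 0 else 0.0    # zero state outside the image
            left = h[i][j - 1] if j > 0 else 0.0
            h[i][j] = math.tanh(w_in * image[i][j] + w_up * up + w_left * left + bias)
    return h

h = mdrnn_2d([[1.0, 0.0], [0.0, 0.0]])
# The single lit pixel in the top-left corner influences every later position,
# including the bottom-right one, which it is not adjacent to.
print(h[1][1] > 0)  # True
```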

The main problem in recognition is converting a two-dimensional image into a one-dimensional label sequence. This is done by passing the input through a hierarchy of MDRNN layers. Choosing the block heights appropriately gradually collapses the 2D image into a 1D sequence, which the output layer can then label.
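One simple way to collapse the final 2D feature map into a 1D sequence is to sum over the vertical axis, so that each column becomes one time step for the output layer. The sketch below assumes that choice and a hypothetical `feature_map[row][col][channel]` layout.

```python
def collapse_height(feature_map):
    """feature_map[row][col][channel] -> sequence[col][channel].
    Sums over the vertical (row) axis so each column becomes one time step."""
    rows, cols, channels = len(feature_map), len(feature_map[0]), len(feature_map[0][0])
    return [
        [sum(feature_map[r][c][k] for r in range(rows)) for k in range(channels)]
        for c in range(cols)
    ]

# 2 rows x 3 columns x 1 channel  ->  3 time steps x 1 channel
fmap = [[[1.0], [2.0], [3.0]],
        [[4.0], [5.0], [6.0]]]
print(collapse_height(fmap))  # [[5.0], [7.0], [9.0]]
```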

MDRNNs are designed to make the recognizer robust to distortions along any combination of input dimensions. Examples include image rotation and shearing, ambiguous strokes, and the local distortions of different handwriting styles. They also allow the network to model multidimensional context flexibly.

Connectionist Temporal Classification (CTC)

CTC is an algorithm for tasks such as speech recognition and handwriting recognition. It maps an entire input sequence to an output sequence of classes (text).

Traditional recognition methods map images to the corresponding text, but we do not know how patches of the image align with individual characters. CTC sidesteps this problem: it can be trained without knowing how specific parts of the speech audio or handwritten image align with specific characters.

The input to the algorithm is a vector representation of an image of handwritten text. There is no direct alignment between the image's pixel representation and the character sequence, so CTC sums the probabilities of all possible alignments between them.

Models trained with CTC typically use recurrent neural networks to estimate the character probabilities at each time step, because recurrent networks take the context of the input into account. The network outputs a score for every character at each sequence element. Together, these scores form a matrix of time steps by characters, including a special blank symbol.
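The sum over all alignments does not require enumerating every path. The CTC forward algorithm computes it with dynamic programming over the label sequence, with blanks inserted between labels. The minimal pure-Python sketch below assumes that `probs` is the per-time-step probability matrix described above, with index 0 reserved for the blank. `ctc_probability` is a hypothetical name; production code works in log space, as framework CTC losses do.

```python
def ctc_probability(probs, target, blank=0):
    """Total probability of `target` under CTC, summed over all alignments.
    probs[t][k]: softmax output at time step t for class k.
    target: list of label indices without blanks."""
    # Extended label sequence with blanks around every label: b l1 b l2 ... b
    ext = [blank]
    for label in target:
        ext += [label, blank]
    S, T = len(ext), len(probs)

    # alpha[t][s]: probability of all path prefixes up to time t ending at ext[s]
    alpha = [[0.0] * S for _ in range(T)]
    alpha[0][0] = probs[0][blank]
    if S > 1:
        alpha[0][1] = probs[0][ext[1]]
    for t in range(1, T):
        for s in range(S):
            a = alpha[t - 1][s]                # stay on the same symbol
            if s >= 1:
                a += alpha[t - 1][s - 1]       # advance by one
            # skip over a blank, unless that would merge two identical labels
            if s >= 2 and ext[s] != blank and ext[s] != ext[s - 2]:
                a += alpha[t - 1][s - 2]
            alpha[t][s] = a * probs[t][ext[s]]

    # valid paths end on the last label or on the trailing blank
    return alpha[T - 1][S - 1] + (alpha[T - 1][S - 2] if S > 1 else 0.0)

# Two time steps, classes [blank, "a"]
probs = [[0.6, 0.4], [0.6, 0.4]]
print(ctc_probability(probs, [1]))  # alignments "aa", "a-", "-a": about 0.64
```

During training, the negative logarithm of this probability serves as the loss, so the network never needs a frame-level alignment.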

For decoding we can use:

Best path decoding: take the most likely character at each time step and concatenate these characters to form the best path. Repeated characters are then merged and blank symbols are removed to obtain the decoded text.

Beam search decoding: keeps several candidate output paths with the highest probabilities. Lower-probability paths are discarded so that the number of candidates (the beam width) stays constant. This method usually gives more accurate results than best path decoding and is often combined with a language model to produce meaningful text.
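Both decoders can be sketched in plain Python. The sketch assumes that the input is a probability matrix with one row per time step and that index 0 is the blank; the function names are hypothetical. The beam search shown is the CTC prefix beam search variant, which merges paths that collapse to the same text. It does not include a language model.

```python
from collections import defaultdict

BLANK = 0  # assumption: index 0 of every probability row is the CTC blank

def best_path_decode(probs, alphabet):
    """Greedy decoding: argmax per time step, merge repeats, drop blanks."""
    best = [max(range(len(row)), key=row.__getitem__) for row in probs]
    out, prev = [], None
    for idx in best:
        if idx != prev and idx != BLANK:
            out.append(alphabet[idx])
        prev = idx
    return "".join(out)

def beam_search_decode(probs, alphabet, beam_width=3):
    """CTC prefix beam search. Each beam is a collapsed prefix mapped to
    [probability ending in blank, probability ending in a non-blank]."""
    beams = {(): (1.0, 0.0)}
    for row in probs:
        nxt = defaultdict(lambda: [0.0, 0.0])
        for prefix, (p_b, p_nb) in beams.items():
            for c, p in enumerate(row):
                if c == BLANK:
                    nxt[prefix][0] += (p_b + p_nb) * p
                elif prefix and prefix[-1] == c:
                    # repeat with no blank in between collapses into the same prefix
                    nxt[prefix][1] += p_nb * p
                    # repeat after a blank starts a new occurrence of the character
                    nxt[prefix + (c,)][1] += p_b * p
                else:
                    nxt[prefix + (c,)][1] += (p_b + p_nb) * p
        # keep only the beam_width most probable prefixes
        ranked = sorted(nxt.items(), key=lambda kv: sum(kv[1]), reverse=True)
        beams = dict(ranked[:beam_width])
    best = max(beams, key=lambda k: sum(beams[k]))
    return "".join(alphabet[i] for i in best)

alphabet = "-a"                   # "-" stands for the blank at index 0
probs = [[0.6, 0.4], [0.6, 0.4]]  # two time steps, columns: blank, "a"
print(repr(best_path_decode(probs, alphabet)))    # ''
print(repr(beam_search_decode(probs, alphabet)))  # 'a'
```

In this toy example, the single most likely path is two blanks (probability 0.36), so best path decoding returns an empty string. The three paths that collapse to "a" together have probability 0.64, and the beam search finds that result.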

Transformer model

The Transformer model takes a different approach and uses self-attention to capture the entire sequence at once, which makes non-recurrent handwriting recognition possible.

The Transformer model combines multi-head self-attention layers at the visual level and the text level to learn the language-model-like dependencies within the character sequence being decoded. Because this linguistic knowledge is embedded in the model itself, no additional post-processing step with a separate language model is needed. The approach is also well suited to predicting out-of-vocabulary words.
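The core operation can be sketched as single-head scaled dot-product self-attention in plain Python. This is a simplified illustration that assumes queries, keys, and values are the input vectors themselves; real Transformers apply learned projections and use several heads. The hypothetical `causal` flag shows the masking a character decoder needs so that it cannot look at characters it has not produced yet.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(X, causal=False):
    """Scaled dot-product self-attention with Q = K = V = X (no learned projections).
    With causal=True, position i only attends to positions 0..i."""
    d = len(X[0])
    out = []
    for i, q in enumerate(X):
        keys = X[: i + 1] if causal else X
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, keys)) for j in range(d)])
    return out

X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(self_attention(X, causal=True)[0])  # [1.0, 0.0]: the first token only sees itself
```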

This architecture has two parts:

Text transcriber, which outputs the decoded characters by attending to both visual and language-related features.

Visual feature encoder, which extracts the relevant information from handwritten text images by attending to the various character positions and their context.

Encoder-Decoder and Attention Network

Training handwriting recognition systems has always been hampered by the scarcity of training data. To address this, this method uses pre-trained feature vectors of text as a starting point. State-of-the-art models combine attention mechanisms with RNNs so that the model focuses on the useful features at each time step.

The complete model architecture can be divided into four stages:

- Normalizing the input text image

- Encoding the normalized image into a 2D visual feature map

- Sequence modeling with a bidirectional LSTM

- Decoding, which converts the output vectors carrying contextual information into words
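The source does not say which scoring function the attention uses. Additive (Bahdanau-style) attention is a common choice for RNN decoders, so the following sketch assumes it. At each decoding step, it scores every encoder feature against the decoder state, normalizes the scores with softmax, and returns the weighted context vector. The weight matrices `W_s` and `W_h` and the vector `v` are hypothetical placeholders for learned parameters.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(M, x):
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

def additive_attention(state, features, W_s, W_h, v):
    """One decoder step of additive attention:
    score_i = v . tanh(W_s @ state + W_h @ h_i), weights = softmax(scores),
    context = sum_i weights_i * h_i."""
    ws = matvec(W_s, state)
    scores = []
    for h in features:
        wh = matvec(W_h, h)
        scores.append(sum(vk * math.tanh(a + b) for vk, a, b in zip(v, ws, wh)))
    weights = softmax(scores)
    dim = len(features[0])
    context = [sum(w * h[d] for w, h in zip(weights, features)) for d in range(dim)]
    return weights, context

I = [[1.0, 0.0], [0.0, 1.0]]                       # hypothetical weights: identity
features = [[1.0, 1.0], [0.0, 0.0], [-1.0, -1.0]]  # encoder outputs, one per column
weights, context = additive_attention([0.0, 0.0], features, I, I, [1.0, 1.0])
print([round(w, 3) for w in weights])  # highest weight on the first feature
```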

Scan, Attend and Read

This is an end-to-end handwriting recognition method based on an attention mechanism. It processes the entire page at once, so it does not depend on first segmenting the page into lines or the words into characters. It uses a multidimensional LSTM (MDLSTM) architecture as the feature extractor, similar to the one described above. The only difference is the last layer, where the extracted feature maps are collapsed vertically and a softmax activation function is applied to identify the corresponding text.

The attention model used here is a hybrid of content-based and location-based attention. The decoder LSTM module takes its previous state, the attention map, and the encoder features to generate the output character and the state vector for the next prediction.
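Here is a simplified sketch of hybrid attention. It assumes a dot-product content score plus a location score, computed by convolving the previous step's attention weights with a hypothetical fixed `kernel`. In a trained model, the convolution filters and scoring weights are learned. With identical content everywhere, the location term alone moves the focus one position forward.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def hybrid_attention(query, keys, prev_weights, kernel, loc_scale=1.0):
    """Content score (dot product of the decoder query with each encoder key) plus a
    location score obtained by convolving the previous attention weights."""
    n, r = len(keys), len(kernel) // 2
    location = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = i + k - r                 # kernel centred on position i
            if 0 <= j < n:
                acc += w * prev_weights[j]
        location.append(acc)
    content = [sum(q * x for q, x in zip(query, key)) for key in keys]
    return softmax([c + loc_scale * l for c, l in zip(content, location)])

keys = [[1.0, 0.0], [1.0, 0.0], [1.0, 0.0]]  # identical content everywhere
prev = [1.0, 0.0, 0.0]                       # last step attended to position 0
# kernel [1, 0, 0] reads prev[i - 1], so it favours the position right after
# the previous focus, nudging attention forward through the text
w = hybrid_attention([1.0, 0.0], keys, prev, kernel=[1.0, 0.0, 0.0], loc_scale=5.0)
print(w.index(max(w)))  # 1
```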

Convolve, Attend and Spell

This is an attention-based sequence-to-sequence model for handwritten text recognition. The architecture has three main parts:

- An encoder consisting of a CNN and a bidirectional GRU

- An attention mechanism that focuses on the relevant features

- A decoder built from a unidirectional GRU, which spells out the corresponding word character by character

Recurrent neural networks are well suited to the sequential (temporal) nature of text. When paired with such a recurrent architecture, the attention mechanism plays a crucial role in focusing on the right features at each time step.
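For reference, here is a single step of a GRU cell, the recurrent unit used in this decoder. The sketch uses scalar inputs and hypothetical weights in `p`. Libraries differ on whether the update gate `z` weights the old state or the candidate; this sketch uses `h' = (1 - z) * h + z * h_cand`. In a full decoder, `x` would combine the previous character's embedding with the attention context.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def gru_step(x, h, p):
    """One step of a scalar GRU cell; p holds hypothetical scalar weights."""
    z = sigmoid(p["wz"] * x + p["uz"] * h + p["bz"])               # update gate
    r = sigmoid(p["wr"] * x + p["ur"] * h + p["br"])               # reset gate
    h_cand = math.tanh(p["wh"] * x + p["uh"] * (r * h) + p["bh"])  # candidate state
    return (1.0 - z) * h + z * h_cand                              # blend old and new

params = dict(wz=0.0, uz=0.0, bz=0.0, wr=0.0, ur=0.0, br=0.0, wh=1.0, uh=1.0, bh=0.0)
h = 0.0
for x in [1.0, 0.0, -1.0]:  # e.g. one scalar input per decoding step
    h = gru_step(x, h, params)
print(h)
```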

Handwritten text generation

Synthetic handwriting generation can produce realistic handwritten text, which can be used to augment existing datasets.

Deep learning models need large amounts of training data, and collecting a large corpus of annotated handwritten images in different languages is tedious. Generative adversarial networks (GANs) can address this problem by generating training data.

ScrabbleGAN is a semi-supervised method for synthesizing handwritten text images. It relies on a generative model that can generate arbitrary-length word images using a fully convolutional network.
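The property that lets a fully convolutional generator produce words of any length can be illustrated with a toy overlap-add sketch. This is not ScrabbleGAN's actual generator. Here, each character simply looks up a hypothetical 1-pixel-high patch in `char_patches`, and neighboring patches overlap and are summed. The output width therefore grows linearly with the number of characters, and neighboring characters still influence each other's overlapping columns.

```python
def compose_word(word, char_patches, stride):
    """Toy stand-in for a class-conditioned fully convolutional generator.
    Every character contributes a patch; neighbouring patches overlap by
    (patch width - stride) columns and are summed."""
    if not word:
        return []
    width = len(next(iter(char_patches.values())))
    out = [0.0] * (stride * (len(word) - 1) + width)
    for k, ch in enumerate(word):
        for x, val in enumerate(char_patches[ch]):
            out[k * stride + x] += val
    return out

patches = {"a": [1.0, 1.0, 1.0, 1.0], "b": [2.0, 2.0, 2.0, 2.0]}  # hypothetical
print(compose_word("ab", patches, stride=2))         # [1.0, 1.0, 3.0, 3.0, 2.0, 2.0]
print(len(compose_word("abab", patches, stride=2)))  # 10
```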

The above is the detailed content of Handwriting recognition technology and its algorithm classification. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1670

1670

14

14

1428

1428

52

52

1329

1329

25

25

1276

1276

29

29

1256

1256

24

24

15 recommended open source free image annotation tools

Mar 28, 2024 pm 01:21 PM

15 recommended open source free image annotation tools

Mar 28, 2024 pm 01:21 PM

Image annotation is the process of associating labels or descriptive information with images to give deeper meaning and explanation to the image content. This process is critical to machine learning, which helps train vision models to more accurately identify individual elements in images. By adding annotations to images, the computer can understand the semantics and context behind the images, thereby improving the ability to understand and analyze the image content. Image annotation has a wide range of applications, covering many fields, such as computer vision, natural language processing, and graph vision models. It has a wide range of applications, such as assisting vehicles in identifying obstacles on the road, and helping in the detection and diagnosis of diseases through medical image recognition. . This article mainly recommends some better open source and free image annotation tools. 1.Makesens

This article will take you to understand SHAP: model explanation for machine learning

Jun 01, 2024 am 10:58 AM

This article will take you to understand SHAP: model explanation for machine learning

Jun 01, 2024 am 10:58 AM

In the fields of machine learning and data science, model interpretability has always been a focus of researchers and practitioners. With the widespread application of complex models such as deep learning and ensemble methods, understanding the model's decision-making process has become particularly important. Explainable AI|XAI helps build trust and confidence in machine learning models by increasing the transparency of the model. Improving model transparency can be achieved through methods such as the widespread use of multiple complex models, as well as the decision-making processes used to explain the models. These methods include feature importance analysis, model prediction interval estimation, local interpretability algorithms, etc. Feature importance analysis can explain the decision-making process of a model by evaluating the degree of influence of the model on the input features. Model prediction interval estimate

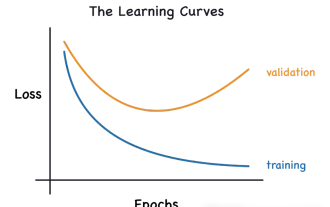

Identify overfitting and underfitting through learning curves

Apr 29, 2024 pm 06:50 PM

Identify overfitting and underfitting through learning curves

Apr 29, 2024 pm 06:50 PM

This article will introduce how to effectively identify overfitting and underfitting in machine learning models through learning curves. Underfitting and overfitting 1. Overfitting If a model is overtrained on the data so that it learns noise from it, then the model is said to be overfitting. An overfitted model learns every example so perfectly that it will misclassify an unseen/new example. For an overfitted model, we will get a perfect/near-perfect training set score and a terrible validation set/test score. Slightly modified: "Cause of overfitting: Use a complex model to solve a simple problem and extract noise from the data. Because a small data set as a training set may not represent the correct representation of all data." 2. Underfitting Heru

The evolution of artificial intelligence in space exploration and human settlement engineering

Apr 29, 2024 pm 03:25 PM

The evolution of artificial intelligence in space exploration and human settlement engineering

Apr 29, 2024 pm 03:25 PM

In the 1950s, artificial intelligence (AI) was born. That's when researchers discovered that machines could perform human-like tasks, such as thinking. Later, in the 1960s, the U.S. Department of Defense funded artificial intelligence and established laboratories for further development. Researchers are finding applications for artificial intelligence in many areas, such as space exploration and survival in extreme environments. Space exploration is the study of the universe, which covers the entire universe beyond the earth. Space is classified as an extreme environment because its conditions are different from those on Earth. To survive in space, many factors must be considered and precautions must be taken. Scientists and researchers believe that exploring space and understanding the current state of everything can help understand how the universe works and prepare for potential environmental crises

Transparent! An in-depth analysis of the principles of major machine learning models!

Apr 12, 2024 pm 05:55 PM

Transparent! An in-depth analysis of the principles of major machine learning models!

Apr 12, 2024 pm 05:55 PM

In layman’s terms, a machine learning model is a mathematical function that maps input data to a predicted output. More specifically, a machine learning model is a mathematical function that adjusts model parameters by learning from training data to minimize the error between the predicted output and the true label. There are many models in machine learning, such as logistic regression models, decision tree models, support vector machine models, etc. Each model has its applicable data types and problem types. At the same time, there are many commonalities between different models, or there is a hidden path for model evolution. Taking the connectionist perceptron as an example, by increasing the number of hidden layers of the perceptron, we can transform it into a deep neural network. If a kernel function is added to the perceptron, it can be converted into an SVM. this one

Implementing Machine Learning Algorithms in C++: Common Challenges and Solutions

Jun 03, 2024 pm 01:25 PM

Implementing Machine Learning Algorithms in C++: Common Challenges and Solutions

Jun 03, 2024 pm 01:25 PM

Common challenges faced by machine learning algorithms in C++ include memory management, multi-threading, performance optimization, and maintainability. Solutions include using smart pointers, modern threading libraries, SIMD instructions and third-party libraries, as well as following coding style guidelines and using automation tools. Practical cases show how to use the Eigen library to implement linear regression algorithms, effectively manage memory and use high-performance matrix operations.

Five schools of machine learning you don't know about

Jun 05, 2024 pm 08:51 PM

Five schools of machine learning you don't know about

Jun 05, 2024 pm 08:51 PM

Machine learning is an important branch of artificial intelligence that gives computers the ability to learn from data and improve their capabilities without being explicitly programmed. Machine learning has a wide range of applications in various fields, from image recognition and natural language processing to recommendation systems and fraud detection, and it is changing the way we live. There are many different methods and theories in the field of machine learning, among which the five most influential methods are called the "Five Schools of Machine Learning". The five major schools are the symbolic school, the connectionist school, the evolutionary school, the Bayesian school and the analogy school. 1. Symbolism, also known as symbolism, emphasizes the use of symbols for logical reasoning and expression of knowledge. This school of thought believes that learning is a process of reverse deduction, through existing

Is Flash Attention stable? Meta and Harvard found that their model weight deviations fluctuated by orders of magnitude

May 30, 2024 pm 01:24 PM

Is Flash Attention stable? Meta and Harvard found that their model weight deviations fluctuated by orders of magnitude

May 30, 2024 pm 01:24 PM

MetaFAIR teamed up with Harvard to provide a new research framework for optimizing the data bias generated when large-scale machine learning is performed. It is known that the training of large language models often takes months and uses hundreds or even thousands of GPUs. Taking the LLaMA270B model as an example, its training requires a total of 1,720,320 GPU hours. Training large models presents unique systemic challenges due to the scale and complexity of these workloads. Recently, many institutions have reported instability in the training process when training SOTA generative AI models. They usually appear in the form of loss spikes. For example, Google's PaLM model experienced up to 20 loss spikes during the training process. Numerical bias is the root cause of this training inaccuracy,