Markov process applications in neural networks

A Markov process is a stochastic process in which the probability distribution of the next state depends only on the current state, not on the sequence of states that preceded it (the Markov property). Markov processes are widely used in finance, weather forecasting, natural language processing and other fields. In neural networks, they serve as modeling tools that help describe and predict the behavior of complex systems.
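A minimal sketch of the Markov property, assuming a made-up two-state weather model (the state names and transition probabilities are illustrative, not real data): each step samples tomorrow's weather from a distribution that depends only on today's weather. Repeatedly applying the transition probabilities also gives the chain's long-run (stationary) distribution.

```python
import random

# Hypothetical two-state weather chain; the probabilities are illustrative only.
STATES = ("sunny", "rainy")
# TRANSITIONS[s][t] = P(next state is t | current state is s); each row sums to 1.
TRANSITIONS = {
    "sunny": {"sunny": 0.9, "rainy": 0.1},
    "rainy": {"sunny": 0.5, "rainy": 0.5},
}


def next_state(current, rng):
    """Sample the next state using only the current state (the Markov property)."""
    r = rng.random()
    cumulative = 0.0
    for state, p in TRANSITIONS[current].items():
        cumulative += p
        if r < cumulative:
            return state
    return state  # guard against floating-point round-off in the cumulative sum


def simulate(start, steps, seed=0):
    """Return a path of length steps + 1 starting from `start`."""
    rng = random.Random(seed)
    path = [start]
    for _ in range(steps):
        path.append(next_state(path[-1], rng))
    return path


def stationary_distribution(iterations=1000):
    """Push a uniform distribution through the transition matrix until it settles."""
    dist = {s: 1.0 / len(STATES) for s in STATES}
    for _ in range(iterations):
        dist = {t: sum(dist[s] * TRANSITIONS[s][t] for s in STATES) for t in STATES}
    return dist


if __name__ == "__main__":
    print(simulate("sunny", 10))
    print(stationary_distribution())  # approaches sunny: 5/6, rainy: 1/6
```

For these particular numbers the stationary distribution is 5/6 sunny and 1/6 rainy, because it must satisfy pi_sunny = 0.9 * pi_sunny + 0.5 * pi_rainy, and a long simulated path spends roughly that fraction of its steps in each state.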

Markov processes are applied in neural networks mainly through two methods: Markov chain Monte Carlo (MCMC) and the Markov decision process (MDP). An application of each is briefly described below.

1. Application of the Markov Chain Monte Carlo (MCMC) Method in Generative Adversarial Networks (GANs)

A GAN is a deep learning model made up of two neural networks, a generator and a discriminator. The generator tries to produce new data that resembles the real data, while the discriminator tries to distinguish generated data from real data. The two networks are trained alternately, and as their parameters are optimized the generator produces increasingly realistic samples; ideally its output distribution comes to match the real data distribution, at which point the discriminator can do no better than guessing. GAN training can therefore be viewed as a two-player game: the generator and the discriminator compete, each pushing the other to improve, until they reach an equilibrium. Trained GANs can generate new data with desired characteristics and are used in image generation, speech synthesis and other areas.
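The game described above is usually written as the minimax objective min over G, max over D of E_x[log D(x)] + E_z[log(1 - D(G(z)))]. The sketch below computes the two per-batch losses from discriminator outputs, which are assumed to be probabilities in (0, 1). For the generator it uses the common "non-saturating" form, which maximizes log D(G(z)) instead of minimizing log(1 - D(G(z))) because it gives stronger gradients early in training. The function names are hypothetical and no particular framework is implied.

```python
import math


def discriminator_loss(d_real, d_fake, eps=1e-12):
    """Binary cross-entropy for the discriminator D.

    d_real: D's probabilities on a batch of real samples (pushed toward 1).
    d_fake: D's probabilities on a batch of generated samples (pushed toward 0).
    eps avoids log(0) for saturated outputs.
    """
    real_term = sum(math.log(max(p, eps)) for p in d_real) / len(d_real)
    fake_term = sum(math.log(max(1.0 - p, eps)) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)


def generator_loss(d_fake, eps=1e-12):
    """Non-saturating generator loss: minimize -log D(G(z)) on generated samples."""
    return -sum(math.log(max(p, eps)) for p in d_fake) / len(d_fake)


if __name__ == "__main__":
    print(discriminator_loss([0.9, 0.8], [0.2, 0.1]))
    print(generator_loss([0.2, 0.1]))
```

If the discriminator outputs 0.5 for every input (the balanced state in which it can no longer tell real from generated data), its loss equals 2 ln 2, about 1.386.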

In a standard GAN, drawing samples does not require MCMC. The generator takes a random noise vector z drawn from a simple prior such as a Gaussian (the latent space) and maps it to the data space in a single forward pass, for example with a network of transposed convolutions ("deconvolutions") for images. The generator and the discriminator are then trained alternately by gradient descent on their adversarial losses. Avoiding Markov chains was in fact one of the original motivations for GANs, since earlier generative models such as Boltzmann machines needed MCMC to produce samples. MCMC does appear in GAN-related work as an add-on: after training, the discriminator's output can serve as an estimate of the ratio between the real and the generated data densities, and a Metropolis-Hastings chain can use that estimate to accept or reject generator samples, correcting part of the mismatch between the generated and real distributions.
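One way MCMC has been combined with GANs (the idea behind discriminator-driven resampling methods such as MH-GAN) is to let the trained discriminator steer a Metropolis-Hastings chain over generator samples. The sketch below is a toy under loudly stated assumptions: the "generator" simply draws from N(0, 1), the real data follow N(1, 1), and the discriminator is the ideal one for that pair, expressed as a logit. Proposals come directly from the generator, and the acceptance ratio uses D / (1 - D) as an estimate of p_data / p_g. All function names are hypothetical.

```python
import math
import random

# Toy, hypothetical stand-ins for a trained GAN (not a real model):
# the "generator" samples N(0, 1), the real data follow N(1, 1), and the
# "discriminator" is the ideal one for this pair, returned as a logit.


def log_normal_pdf(x, mean):
    return -0.5 * (x - mean) ** 2 - 0.5 * math.log(2.0 * math.pi)


def generator(rng):
    """Draw one generated sample (here simply a standard normal)."""
    return rng.gauss(0.0, 1.0)


def discriminator_logit(x):
    """Logit l(x) of D(x). For an optimal D, D / (1 - D) = exp(l) = p_data(x) / p_g(x)."""
    return log_normal_pdf(x, 1.0) - log_normal_pdf(x, 0.0)


def mh_resample(num_steps, seed=0):
    """Independence Metropolis-Hastings over generator samples.

    Proposals come straight from the generator, so the acceptance probability is
    min(1, ratio(x') / ratio(x)) with ratio = D / (1 - D). The chain then targets
    the distribution the discriminator treats as real (p_data for an optimal D).
    """
    rng = random.Random(seed)
    current = generator(rng)
    chain = []
    for _ in range(num_steps):
        proposal = generator(rng)
        log_accept = discriminator_logit(proposal) - discriminator_logit(current)
        if rng.random() < math.exp(min(0.0, log_accept)):
            current = proposal
        chain.append(current)  # a rejection repeats the current sample
    return chain


if __name__ == "__main__":
    chain = mh_resample(20000)
    kept = chain[1000:]  # discard an initial burn-in
    print(sum(kept) / len(kept))  # should be close to the real-data mean of 1.0
```

Even though every proposal comes from a generator centered at 0, the retained chain should average close to 1. With a real GAN the discriminator is only approximately optimal, so the correction is approximate as well.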

The core of MCMC is a Markov chain, a stochastic process in which the probability of the next state depends only on the current state. MCMC constructs a chain whose stationary distribution is the target distribution, so that after enough steps the states it visits can be used as samples from that distribution, even when its density is known only up to a normalizing constant. Gibbs sampling and the Metropolis-Hastings algorithm are two standard constructions: Gibbs sampling updates one variable at a time from its conditional distribution, while Metropolis-Hastings proposes a move and accepts or rejects it based on the ratio of the target density at the proposed and current positions. In GAN variants that use MCMC, such a chain runs over the latent space or over generator outputs, guided by the discriminator, to obtain samples closer to the real data distribution.
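The Metropolis-Hastings algorithm mentioned above can be sketched in a few lines. This is a generic random-walk version, not code from any GAN library; `log_target` is assumed to be any function returning the log of the target density up to an additive constant, and the step size and burn-in length are illustrative defaults.

```python
import math
import random


def metropolis_hastings(log_target, x0, num_samples, step_size=1.0, burn_in=1000, seed=0):
    """Random-walk Metropolis-Hastings.

    Each step proposes x' = x + step_size * N(0, 1). Because this proposal is
    symmetric, the acceptance probability reduces to min(1, p(x') / p(x)), so the
    unknown normalizing constant of p cancels out.
    """
    rng = random.Random(seed)
    x = x0
    log_p = log_target(x)
    samples = []
    for i in range(burn_in + num_samples):
        proposal = x + step_size * rng.gauss(0.0, 1.0)
        log_p_new = log_target(proposal)
        # Accept with probability min(1, exp(log p(x') - log p(x)))
        if rng.random() < math.exp(min(0.0, log_p_new - log_p)):
            x, log_p = proposal, log_p_new
        if i >= burn_in:
            samples.append(x)  # a rejected step repeats the current state
    return samples


if __name__ == "__main__":
    # Unnormalized standard normal: log p(x) = -x^2 / 2 + const
    draws = metropolis_hastings(lambda x: -0.5 * x * x, x0=0.0, num_samples=50000)
    print(sum(draws) / len(draws))  # should be close to 0
```

With log_target(x) = -x*x/2 the samples should have a mean near 0 and a variance near 1. Gibbs sampling would instead draw each coordinate exactly from its conditional distribution, which needs no accept/reject step.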

2. Application of Markov Decision Process (MDP) in Neural Networks

Deep reinforcement learning is reinforcement learning in which neural networks approximate the policy, the value function, or both. It uses an MDP to describe the decision-making problem and trains the networks to find policies that maximize the expected long-term reward.

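In deep reinforcement learning, the key to the MDP formulation is specifying the states, actions, transition probabilities, rewards and value function. A state describes the current configuration of the environment; an action is one of the choices available to the agent in that state; the transition probability P(s' | s, a) gives the chance of moving to state s' after taking action a in state s, and it depends only on the current state and action (the Markov property); the reward is the immediate numerical feedback for a transition. The value function gives the expected cumulative (usually discounted) reward obtainable from a state, or from a state-action pair, when following a given policy, so it measures how good a state or decision is in the long run.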
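To make these components concrete, here is a sketch of value iteration on a small, hypothetical MDP: a four-cell corridor whose rightmost cell is a terminal goal worth a reward of 1. Each move succeeds with probability 0.8 and otherwise leaves the agent in place, so P(s' | s, a) depends only on the current state and action. The layout, the probabilities and the discount factor 0.9 are assumptions chosen for illustration.

```python
# Hypothetical 4-state corridor MDP: states 0..3, state 3 is a terminal goal.
# Each action succeeds with probability 0.8; otherwise the agent stays put.
N_STATES = 4
GOAL = 3
ACTIONS = ("left", "right")
GAMMA = 0.9  # discount factor for future rewards


def transitions(state, action):
    """Return (probability, next_state, reward) triples, i.e. P(s' | s, a) and R."""
    if state == GOAL:
        return [(1.0, state, 0.0)]  # terminal: absorbing, no further reward
    step = 1 if action == "right" else -1
    intended = min(max(state + step, 0), N_STATES - 1)  # walls at both ends
    outcomes = []
    for prob, nxt in ((0.8, intended), (0.2, state)):
        reward = 1.0 if nxt == GOAL else 0.0
        outcomes.append((prob, nxt, reward))
    return outcomes


def q_value(values, state, action):
    """Expected reward plus discounted value of the next state."""
    return sum(p * (r + GAMMA * values[s2]) for p, s2, r in transitions(state, action))


def value_iteration(tol=1e-10):
    """Repeat V(s) = max_a sum_s' P(s'|s,a) * (R + GAMMA * V(s')) until it converges."""
    values = [0.0] * N_STATES
    while True:
        new_values = [max(q_value(values, s, a) for a in ACTIONS) for s in range(N_STATES)]
        if max(abs(a - b) for a, b in zip(new_values, values)) < tol:
            return new_values
        values = new_values


def greedy_policy(values):
    """Pick the action with the highest expected value in each non-terminal state."""
    return {s: max(ACTIONS, key=lambda a: q_value(values, s, a))
            for s in range(N_STATES) if s != GOAL}


if __name__ == "__main__":
    v = value_iteration()
    print(v)
    print(greedy_policy(v))
```

The greedy policy moves right in every non-terminal cell. The cell next to the goal, for example, has value 0.8 / 0.82, about 0.976, because its value V satisfies V = 0.8 * 1 + 0.2 * 0.9 * V.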

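Specifically, the neural network receives a state as input and outputs either an estimated value for each possible action (as in deep Q-networks) or a probability distribution over actions (as in policy-gradient methods). Its parameters are updated so that these estimates agree with the rewards actually observed, for example by regressing toward the target r + γ · max over a' of Q(s', a'). Acting greedily with respect to the learned values then yields a policy that aims to maximize the expected long-term reward.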
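The learning rule behind value-based deep reinforcement learning can be shown with Q-learning. To keep the sketch dependency-free it uses a lookup table for Q(s, a); a deep Q-network (DQN) would instead compute the row Q(s, ·) with a neural network that takes the state as input, and would add components such as experience replay and a target network. The five-cell corridor environment, the learning rate, the exploration rate and the episode count are hypothetical choices.

```python
import random

# Hypothetical deterministic corridor: states 0..4, state 4 is the goal
# (reward 1, episode ends). Every other transition gives reward 0.
N_STATES = 5
GOAL = N_STATES - 1
ACTIONS = (0, 1)  # 0 = left, 1 = right
GAMMA = 0.9


def step(state, action):
    nxt = min(max(state + (1 if action == 1 else -1), 0), GOAL)
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward, nxt == GOAL


def q_learning(episodes=500, alpha=0.5, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    # q[s][a]: estimated return of taking action a in state s. A DQN would
    # produce this row with a neural network; here it is a plain table.
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy exploration; ties are broken at random.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.choice(ACTIONS)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            nxt, reward, done = step(state, action)
            # TD target r + GAMMA * max_a' Q(s', a'); no bootstrapping past the goal.
            target = reward if done else reward + GAMMA * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
    return q


if __name__ == "__main__":
    table = q_learning()
    print([("left", "right")[0 if row[0] > row[1] else 1] for row in table[:GOAL]])
```

After training, the greedy action in every non-goal cell should be "right", and Q(0, right) should approach 0.9^3 = 0.729: the reward of 1 arrives on the fourth move and is discounted three times.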

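MDP-based deep reinforcement learning has been applied to autonomous driving, robot control, game AI and other areas. A well-known example is AlphaGo, which combined deep neural networks (a policy network and a value network), trained with supervised learning on expert games and with reinforcement learning through self-play, with Monte Carlo tree search. It learned strong Go strategies and defeated top professional players, including Lee Sedol in 2016.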

In short, Markov processes are widely used in neural networks, especially in sampling methods used alongside generative models and in reinforcement learning. With these techniques, neural networks can model the behavior of complex systems and learn effective decision-making strategies, giving us better tools for prediction and decision-making and helping us understand and control complex systems.

The above is the detailed content of Markov process applications in neural networks. For more information, please follow other related articles on the PHP Chinese website!


Beyond ORB-SLAM3! SL-SLAM: Low light, severe jitter and weak texture scenes are all handled

May 30, 2024 am 09:35 AM

Beyond ORB-SLAM3! SL-SLAM: Low light, severe jitter and weak texture scenes are all handled

May 30, 2024 am 09:35 AM

Written previously, today we discuss how deep learning technology can improve the performance of vision-based SLAM (simultaneous localization and mapping) in complex environments. By combining deep feature extraction and depth matching methods, here we introduce a versatile hybrid visual SLAM system designed to improve adaptation in challenging scenarios such as low-light conditions, dynamic lighting, weakly textured areas, and severe jitter. sex. Our system supports multiple modes, including extended monocular, stereo, monocular-inertial, and stereo-inertial configurations. In addition, it also analyzes how to combine visual SLAM with deep learning methods to inspire other research. Through extensive experiments on public datasets and self-sampled data, we demonstrate the superiority of SL-SLAM in terms of positioning accuracy and tracking robustness.

Super strong! Top 10 deep learning algorithms!

Mar 15, 2024 pm 03:46 PM

Super strong! Top 10 deep learning algorithms!

Mar 15, 2024 pm 03:46 PM

Almost 20 years have passed since the concept of deep learning was proposed in 2006. Deep learning, as a revolution in the field of artificial intelligence, has spawned many influential algorithms. So, what do you think are the top 10 algorithms for deep learning? The following are the top algorithms for deep learning in my opinion. They all occupy an important position in terms of innovation, application value and influence. 1. Deep neural network (DNN) background: Deep neural network (DNN), also called multi-layer perceptron, is the most common deep learning algorithm. When it was first invented, it was questioned due to the computing power bottleneck. Until recent years, computing power, The breakthrough came with the explosion of data. DNN is a neural network model that contains multiple hidden layers. In this model, each layer passes input to the next layer and

Understand in one article: the connections and differences between AI, machine learning and deep learning

Mar 02, 2024 am 11:19 AM

Understand in one article: the connections and differences between AI, machine learning and deep learning

Mar 02, 2024 am 11:19 AM

In today's wave of rapid technological changes, Artificial Intelligence (AI), Machine Learning (ML) and Deep Learning (DL) are like bright stars, leading the new wave of information technology. These three words frequently appear in various cutting-edge discussions and practical applications, but for many explorers who are new to this field, their specific meanings and their internal connections may still be shrouded in mystery. So let's take a look at this picture first. It can be seen that there is a close correlation and progressive relationship between deep learning, machine learning and artificial intelligence. Deep learning is a specific field of machine learning, and machine learning

A case study of using bidirectional LSTM model for text classification

Jan 24, 2024 am 10:36 AM

A case study of using bidirectional LSTM model for text classification

Jan 24, 2024 am 10:36 AM

The bidirectional LSTM model is a neural network used for text classification. Below is a simple example demonstrating how to use bidirectional LSTM for text classification tasks. First, we need to import the required libraries and modules: importosimportnumpyasnpfromkeras.preprocessing.textimportTokenizerfromkeras.preprocessing.sequenceimportpad_sequencesfromkeras.modelsimportSequentialfromkeras.layersimportDense,Em

AlphaFold 3 is launched, comprehensively predicting the interactions and structures of proteins and all living molecules, with far greater accuracy than ever before

Jul 16, 2024 am 12:08 AM

AlphaFold 3 is launched, comprehensively predicting the interactions and structures of proteins and all living molecules, with far greater accuracy than ever before

Jul 16, 2024 am 12:08 AM

Editor | Radish Skin Since the release of the powerful AlphaFold2 in 2021, scientists have been using protein structure prediction models to map various protein structures within cells, discover drugs, and draw a "cosmic map" of every known protein interaction. . Just now, Google DeepMind released the AlphaFold3 model, which can perform joint structure predictions for complexes including proteins, nucleic acids, small molecules, ions and modified residues. The accuracy of AlphaFold3 has been significantly improved compared to many dedicated tools in the past (protein-ligand interaction, protein-nucleic acid interaction, antibody-antigen prediction). This shows that within a single unified deep learning framework, it is possible to achieve

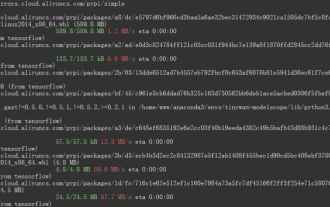

TensorFlow deep learning framework model inference pipeline for portrait cutout inference

Mar 26, 2024 pm 01:00 PM

TensorFlow deep learning framework model inference pipeline for portrait cutout inference

Mar 26, 2024 pm 01:00 PM

Overview In order to enable ModelScope users to quickly and conveniently use various models provided by the platform, a set of fully functional Python libraries are provided, which includes the implementation of ModelScope official models, as well as the necessary tools for using these models for inference, finetune and other tasks. Code related to data pre-processing, post-processing, effect evaluation and other functions, while also providing a simple and easy-to-use API and rich usage examples. By calling the library, users can complete tasks such as model reasoning, training, and evaluation by writing just a few lines of code. They can also quickly perform secondary development on this basis to realize their own innovative ideas. The algorithm model currently provided by the library is:

Twin Neural Network: Principle and Application Analysis

Jan 24, 2024 pm 04:18 PM

Twin Neural Network: Principle and Application Analysis

Jan 24, 2024 pm 04:18 PM

Siamese Neural Network is a unique artificial neural network structure. It consists of two identical neural networks that share the same parameters and weights. At the same time, the two networks also share the same input data. This design was inspired by twins, as the two neural networks are structurally identical. The principle of Siamese neural network is to complete specific tasks, such as image matching, text matching and face recognition, by comparing the similarity or distance between two input data. During training, the network attempts to map similar data to adjacent regions and dissimilar data to distant regions. In this way, the network can learn how to classify or match different data to achieve corresponding

Image denoising using convolutional neural networks

Jan 23, 2024 pm 11:48 PM

Image denoising using convolutional neural networks

Jan 23, 2024 pm 11:48 PM

Convolutional neural networks perform well in image denoising tasks. It utilizes the learned filters to filter the noise and thereby restore the original image. This article introduces in detail the image denoising method based on convolutional neural network. 1. Overview of Convolutional Neural Network Convolutional neural network is a deep learning algorithm that uses a combination of multiple convolutional layers, pooling layers and fully connected layers to learn and classify image features. In the convolutional layer, the local features of the image are extracted through convolution operations, thereby capturing the spatial correlation in the image. The pooling layer reduces the amount of calculation by reducing the feature dimension and retains the main features. The fully connected layer is responsible for mapping learned features and labels to implement image classification or other tasks. The design of this network structure makes convolutional neural networks useful in image processing and recognition.