Yuntian Lifei has lost 2 billion yuan over five consecutive years! A risky gamble on AI chips

Before the rise of large models, the 1.0 era of artificial intelligence produced three killer applications: text recognition, speech recognition and image recognition. In 2015, Shenzhen-based Yuntian Lifei launched "Yuntian Shenmu", billed as the world's first dynamic portrait recognition system. Over the past few years, "Yuntian Shenmu" has been widely used in AI security and has helped public security organs solve tens of thousands of cases.

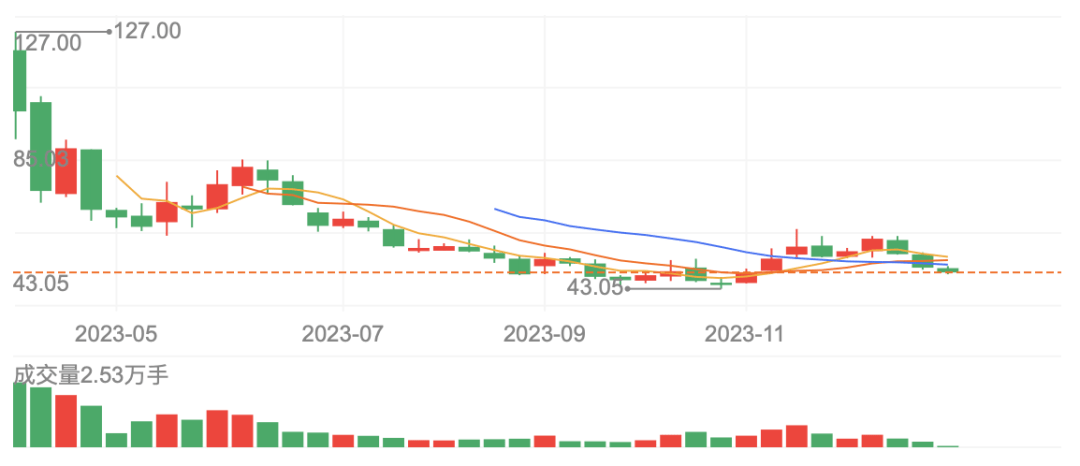

In April 2023, Yuntian Lifei listed on the Science and Technology Innovation Board (STAR Market) and opened high at 121 yuan per share in the call auction, 175.5% above its issue price. Its market value exceeded 40 billion yuan, putting it on par with Yuncong Technology (CloudWalk), one of the "Four Little AI Dragons". After listing, however, Yuntian Lifei released a dismal annual report, and its stock price plunged, falling 60% from its peak.
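Taking the 175.5% gain as measured from the issue price, the implied issue price can be backed out from the 121-yuan opening figure. This is a derived value rather than one stated in the article, and the labels $P_{\text{open}}$ and $P_{\text{issue}}$ are introduced here only for the calculation:

```latex
P_{\text{issue}} = \frac{P_{\text{open}}}{1 + 1.755} = \frac{121}{2.755} \approx 43.92 \ \text{yuan per share}
```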

In the AI 2.0 era, Yuntian Lifei has made a series of moves. Alongside the AI inference chip DeepEdge10, it released the "Book of Heaven" large model with hundreds of billions of parameters as part of a technological transformation. With annual revenue of just over 500 million yuan and giant competitors on every side, how can Yuntian Lifei break through?

2 billion yuan lost in five years: financial statements bleeding red

Among Chinese AI companies, losses are the norm and profitability is the exception.

From 2019 to 2022, Yuntian Lifei's revenue was 230 million, 430 million, 570 million and 550 million yuan respectively; its net profit was -510 million, -400 million, -390 million and -450 million yuan respectively; and its net cash flow from operating activities was -190 million, -240 million, -180 million and -200 million yuan respectively.

In the first three quarters of 2023, Yuntian Lifei's revenue was 230 million yuan, down 11.4% year on year, and its net loss was 300 million yuan, an 11.8% year-on-year improvement. In less than five years, the company's cumulative losses have reached about 2 billion yuan, and its revenue has been shrinking since 2022.
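Summing the net losses reported above shows where the headline figure of roughly 2 billion yuan comes from. Figures are in millions of yuan, and 2023 covers only the first three quarters:

```latex
\begin{aligned}
\text{Cumulative net loss} &= \underbrace{510 + 400 + 390 + 450}_{2019\text{--}2022} + \underbrace{300}_{2023\ \text{Q1--Q3}} \\
&= 1750 + 300 = 2050 \ \text{million yuan} \approx 2.05 \ \text{billion yuan}
\end{aligned}
```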

One of the main reasons for the heavy losses is persistently high R&D spending. From 2019 to 2022, R&D expenses were 200 million, 220 million, 300 million and 350 million yuan respectively, rising every year. Notably, this heavy R&D investment did not lift the gross margin. On the contrary, gross margin trended downward, at 43.6%, 35.6%, 38.8% and 31.9% from 2019 to 2022 respectively. In the first three quarters of 2023, it edged up slightly to 32.5%.
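To put the R&D figures in proportion, the short Python sketch below divides each year's R&D expense by that year's revenue and computes year-on-year revenue growth. The only inputs are the figures reported in the article (in millions of yuan); the function names are illustrative and not taken from any company disclosure.

```python
# Figures in millions of yuan, as reported in the article (2019-2022).
revenue = {2019: 230, 2020: 430, 2021: 570, 2022: 550}
rd_expense = {2019: 200, 2020: 220, 2021: 300, 2022: 350}
gross_margin_pct = {2019: 43.6, 2020: 35.6, 2021: 38.8, 2022: 31.9}


def rd_intensity(revenue, rd):
    """Return R&D expense as a percentage of revenue per year, rounded to 0.1."""
    return {year: round(100 * rd[year] / revenue[year], 1) for year in revenue}


def yoy_growth_pct(series):
    """Return year-on-year growth in percent for every year that has a prior year."""
    years = sorted(series)
    return {
        year: round(100 * (series[year] / series[prev] - 1), 1)
        for prev, year in zip(years, years[1:])
    }


if __name__ == "__main__":
    for year, pct in rd_intensity(revenue, rd_expense).items():
        print(f"{year}: R&D = {pct}% of revenue, gross margin = {gross_margin_pct[year]}%")
    print("Revenue YoY growth (%):", yoy_growth_pct(revenue))
```

With these inputs, R&D spending works out to roughly 87%, 51%, 53% and 64% of revenue for 2019 through 2022, and revenue growth turns negative (about -3.5%) in 2022.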

For Chen Ning, co-founder, chairman and CEO of Yuntian Lifei, stopping the "bleeding" in the company's financial statements is undoubtedly a huge challenge.

A dispute over technical direction splits the founders

Technologically, Yuntian Lifei has built three major platforms: AI algorithms, AI chips and big data processing. It has invested most heavily in AI chips, and "algorithm chipization", building chips tailored to its own algorithms, is its defining feature.

Yuntian Lifei was founded in 2014 by two people: Chen Ning, who held 70% of the shares, and Tian Dihong, who held 30%. Both earned doctorates at the Georgia Institute of Technology in the United States. After graduating, Chen Ning worked at Freescale Semiconductor in the US and then served as IC technology director at ZTE Corporation. After founding Yuntian Lifei, Chen handled the "outside" (sales) and Tian the "inside" (research and development). The success of "Yuntian Shenmu" eventually carried the company through its early years.

However, Chen and Tian differed sharply on the company's future technical direction. Chen Ning, a long-time IC industry veteran, believed that chips should take precedence over algorithms, while Tian Dihong held the opposite view. The dispute ended with Tian Dihong selling all of his equity and leaving the company when Yuntian Lifei filed its prospectus, cashing out less than 35 million yuan. After the listing, Chen Ning held about 25% of the shares, a stake whose market value once exceeded 10 billion yuan.

After Tian Dihong's exit, Yuntian Lifei stepped up investment in algorithm chipization, hoping to optimize its AI technology by combining algorithms with chips. Under Chen Ning's leadership, the company has developed a third-generation instruction set architecture and a fourth-generation neural network processor in an effort to build technical barriers.

Facial recognition: a crowded red ocean led by SenseTime

Building on its algorithm chips, Yuntian Lifei combines them with hardware into industry solutions to commercialize its technology, with AI security as the first breakthrough. It has since launched applications for urban scenarios such as traffic, including special vehicle supervision, intelligent prediction of traffic events and urban grassroots governance. According to the prospectus, more than 80% of its revenue comes from the digital city operation and management segment.

In 2020, Yuntian Lifei announced its "1+1+N" self-evolving urban intelligent agent strategy, which aims to build an intelligent, broadly perceptive network for digital cities and a self-learning, self-evolving urban "super brain", while providing a range of AI-empowered smart applications. The strategy faces two major challenges: increasingly strict regulation of facial recognition, and the difficulty of deeply integrating artificial intelligence with industry.

The business model carries a hidden risk: it depends too heavily on a single revenue source, digital government. In addition, project-based delivery has left the company with a thin order backlog and declining gross margins. More importantly, facial recognition has long been a mature technology, many vendors have crowded into the market, and competition is fierce. According to IDC data, the top five companies in China's computer vision market are SenseTime, Megvii, Hikvision, Innovation Qizhi (AInnovation) and Yuncong, with a combined market share of 42%.

Yuntian Lifei has already fallen behind its rivals. Data shows that its market share over the past two years has been only about 1%. Meanwhile, comparable companies in the industry enjoy gross margins above 50%, while Yuntian Lifei's hovers around 35%, well short of its peers.

Chips in one hand, models in the other: Yuntian Lifei pushes on two fronts

For a technology company, the only real weapon for breaking through is technology. After the rise of generative AI, Yuntian Lifei released a new generation of AI chips and the "Book of Heaven" large model with hundreds of billions of parameters, kicking off a new round of technology research and development.

Yuntian Lifei's new-generation AI chip, DeepEdge10, is built around the company's self-developed neural network processor NNP400T and targets scenarios such as AIoT edge video and mobile robots. Building on DeepEdge10 and its self-developed D2D chip architecture, the company also launched the X5000 inference card, which can run large models with tens of billions of parameters, such as Llama 2.

The "Book of Heaven" large model, with hundreds of billions of parameters, has three tiers: a general model, industry models and scenario models. The general model is built on the company's algorithm development platform and algorithm chip platform and trained on massive data; industry models are formed by adding high-quality industry data on top of the general model; and scenario models are obtained by fine-tuning industry models on segmented scenario data.
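The three-tier structure can be pictured as a chain in which each tier is derived from the one above it by adding more specific data. The Python sketch below models only that lineage; the `ModelTier` class, its fields and the tier and dataset names are hypothetical and say nothing about how Yuntian Lifei actually trains its model.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ModelTier:
    """One tier in an illustrative general -> industry -> scenario hierarchy (hypothetical)."""
    name: str
    tier: str  # "general", "industry" or "scenario"
    data: List[str] = field(default_factory=list)  # data added at this tier only
    parent: Optional["ModelTier"] = None  # tier this one was adapted from

    def derive(self, name: str, tier: str, data: List[str]) -> "ModelTier":
        """Create a child tier adapted from this one on more specific data."""
        return ModelTier(name=name, tier=tier, data=list(data), parent=self)

    def lineage(self) -> List[str]:
        """Return tier names from the general model down to this one."""
        chain, node = [], self
        while node is not None:
            chain.append(node.name)
            node = node.parent
        return list(reversed(chain))

    def all_data(self) -> List[str]:
        """Return every data source this tier builds on, ancestors' data first."""
        inherited = self.parent.all_data() if self.parent is not None else []
        return inherited + self.data


# Hypothetical tier and dataset names, for illustration only.
general = ModelTier("general", "general", ["massive general data"])
industry = general.derive("city-governance", "industry", ["high-quality industry data"])
scenario = industry.derive("traffic-events", "scenario", ["segmented scenario data"])
print(" -> ".join(scenario.lineage()))
```

Running it prints `general -> city-governance -> traffic-events`. Keeping a parent link rather than copying data makes it explicit that each narrower model inherits everything its broader ancestor was built on.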

In December, Yuntian Lifei told investors during a research visit that the "Book of Heaven" (Tianshu) model had gone through two version updates, and that the next version would benchmark against GPT-4 and further strengthen multimodal capabilities. The company said the model had reached an advanced industry level in general Q&A, language understanding, mathematical reasoning, text generation and role-playing, and had ranked first on the C-Eval leaderboard of Chinese large models in early September.

At its listing, Yuntian Lifei raised 3.58 billion yuan in net proceeds, 580 million yuan more than originally planned. With AI chips in one hand and a large model in the other, can Yuntian Lifei come out ahead in the "battle of a hundred models"? The industry is waiting to see.

--End--
