Transformer revolutionizes 3D modeling, MeshGPT generation effect alarms professional modelers, netizens: revolutionary idea

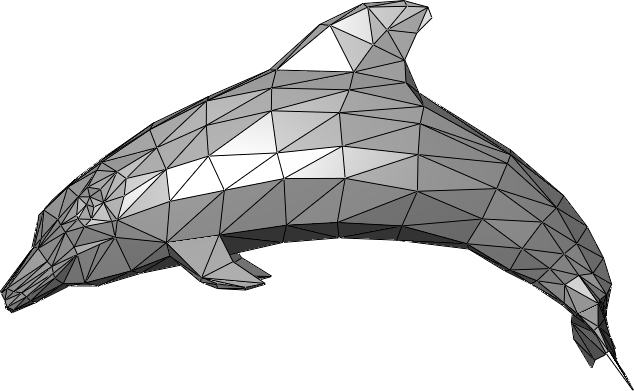

In computer graphics, triangle meshes are the primary way to represent 3D geometric objects and the most widely used 3D asset format in games, films, and virtual reality interfaces. The industry typically uses triangle meshes to model the surfaces of complex objects such as buildings, vehicles, and animals. Common geometric transformations, geometric tests, rendering, and shading operations are also performed on triangle meshes.

Compared with other 3D shape representations such as point clouds or voxels, triangle meshes provide a more coherent surface representation: they are more controllable, easier to manipulate, and more compact, and they can be used directly in modern rendering pipelines, achieving higher visual quality with fewer primitives.

Previously, researchers have tried to generate 3D models using representations such as voxels, point clouds, and neural fields. These representations must be converted into meshes through post-processing before they can be used in downstream applications, for example by extracting an isosurface with the Marching Cubes algorithm.

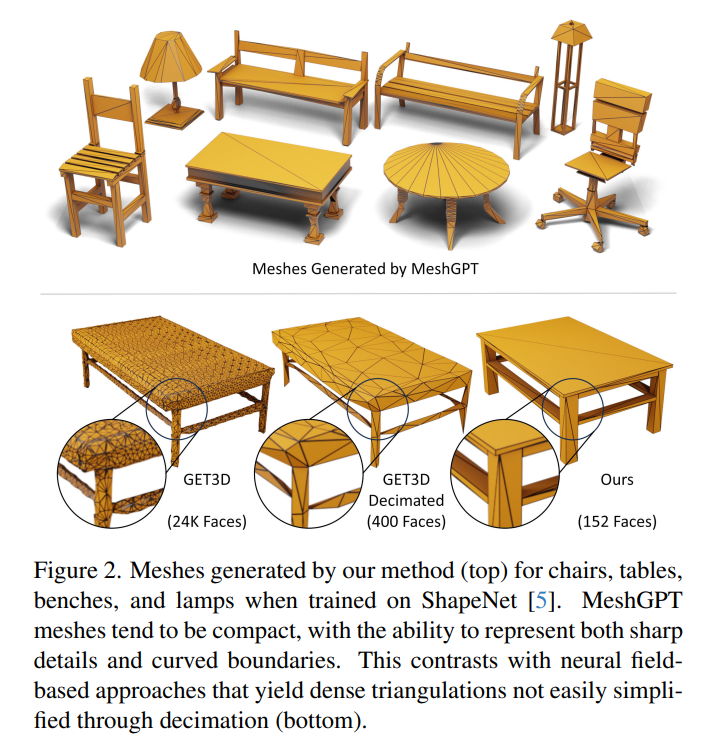

Unfortunately, this approach produces overly dense, over-tessellated meshes that often suffer from over-smoothing and from bumpy artifacts introduced by isosurface extraction, as illustrated in the accompanying figure.

In contrast, 3D meshes built by professional modelers are more compact, preserving sharp details with far fewer triangles.

Many researchers have long hoped to automate triangle mesh generation in order to further simplify the creation of 3D assets.

In a recent paper, researchers proposed a new solution: MeshGPT, which directly generates the mesh representation as a set of triangles.

The paper link can be found at: https://nihalsid.github.io/mesh-gpt/static/MeshGPT.pdf

Inspired by Transformer-based language generation models, they adopted a direct sequence generation approach, synthesizing triangle meshes as sequences of triangles.
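To make "meshes as triangle sequences" concrete, here is a minimal sketch of turning an indexed mesh into a deterministic sequence of triangles. The ordering rule is an assumption (a PolyGen-style convention: vertices ranked by z, then y, then x; faces sorted by their lowest vertex index, then the next lowest); the article does not specify how MeshGPT orders faces, and `triangles_as_sequence` is a hypothetical helper.

```python
from typing import List, Tuple

Vertex = Tuple[float, float, float]
Face = Tuple[int, int, int]
Triangle = Tuple[Vertex, Vertex, Vertex]


def triangles_as_sequence(vertices: List[Vertex], faces: List[Face]) -> List[Triangle]:
    """Hypothetical helper: order an indexed mesh's faces into a triangle sequence.

    ASSUMED ordering (PolyGen-style, not confirmed for MeshGPT):
    1. vertices are ranked by (z, y, x);
    2. each face is cyclically rotated so its lowest vertex index comes first,
       which keeps the winding order (and thus the face normal) intact;
    3. faces are sorted by their lowest index, then the next lowest, and so on.
    """
    # Rank vertices by (z, y, x) so the result does not depend on file order.
    order = sorted(range(len(vertices)),
                   key=lambda i: (vertices[i][2], vertices[i][1], vertices[i][0]))
    new_index = {old: new for new, old in enumerate(order)}
    ranked = [vertices[i] for i in order]

    remapped = []
    for face in faces:
        f = [new_index[i] for i in face]
        k = f.index(min(f))             # rotate, do not sort, to keep winding
        remapped.append(f[k:] + f[:k])

    # Sort faces by their sorted index triple (lowest, next lowest, highest).
    remapped.sort(key=lambda f: sorted(f))
    # Emit each face as a triple of vertex coordinates.
    return [tuple(ranked[i] for i in f) for f in remapped]
```

Because the order depends only on geometry, listing the same faces in a different order yields the same sequence, which gives a sequence model a consistent target.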

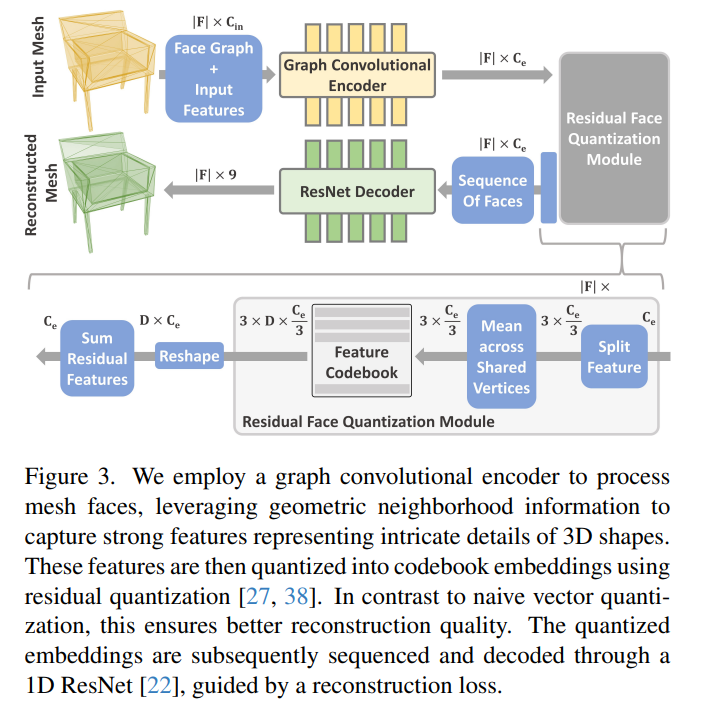

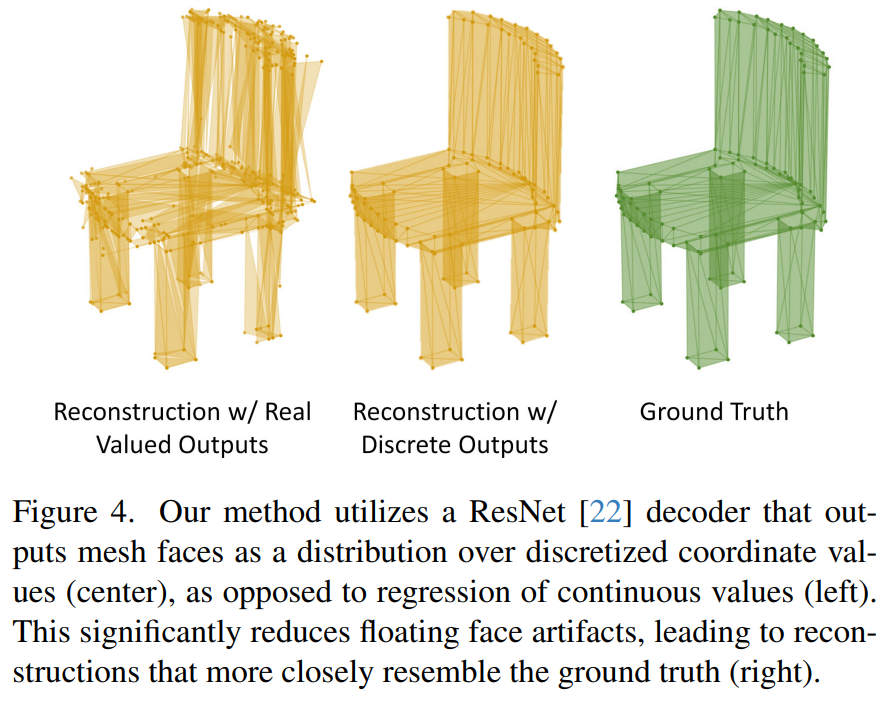

Following the text-generation paradigm, the researchers first learned a vocabulary of triangles, encoding each triangle as a quantized latent embedding. To encourage the learned embeddings to preserve local geometric and topological features, they used a graph convolutional encoder. A ResNet decoder then takes the sequence of tokens representing the triangles and decodes it into the triangles' vertex coordinates. Finally, the researchers trained a GPT-based architecture on the learned vocabulary to autoregressively generate triangle sequences representing meshes, yielding sharp edges and high fidelity.

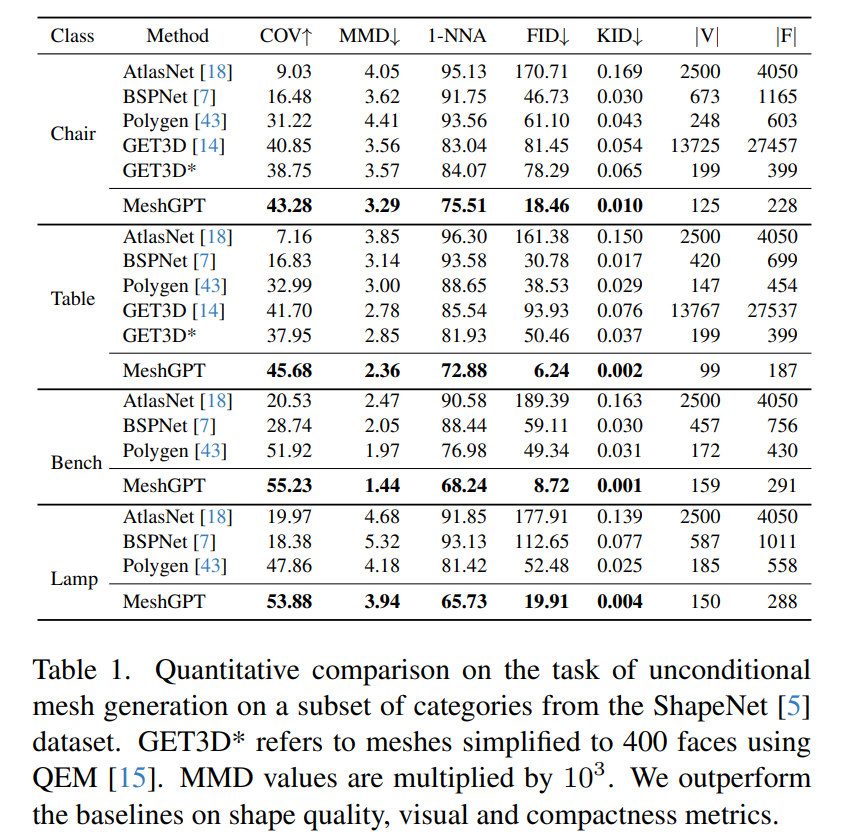

Experiments across multiple ShapeNet categories show that MeshGPT significantly improves the quality of generated 3D meshes over existing techniques, improving shape coverage by 9% on average and FID scores by 30 points.
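The coverage figure above refers to a standard shape-generation metric. As a hedged illustration, here is coverage (COV) as it is commonly defined: each generated shape is matched to its nearest reference shape, and COV is the fraction of reference shapes that receive at least one match. The article does not say how MeshGPT computes it; representing shapes as small point clouds compared with a squared-distance Chamfer distance is an assumption, and the brute-force loops are only for clarity.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
Shape = Sequence[Point]  # ASSUMPTION: a shape is a point cloud sampled from its mesh


def chamfer(a: Shape, b: Shape) -> float:
    """Symmetric Chamfer distance using squared Euclidean distances."""
    def one_way(p: Shape, q: Shape) -> float:
        # Average, over points in p, of the squared distance to the closest point in q.
        return sum(min(sum((pi - qi) ** 2 for pi, qi in zip(x, y)) for y in q)
                   for x in p) / len(p)
    return one_way(a, b) + one_way(b, a)


def coverage(generated: List[Shape], reference: List[Shape]) -> float:
    """Fraction of reference shapes that are the nearest neighbor of some generated shape."""
    matched = set()
    for g in generated:
        nearest = min(range(len(reference)), key=lambda j: chamfer(g, reference[j]))
        matched.add(nearest)
    return len(matched) / len(reference)
```

A low COV means many generated shapes collapse onto the same few reference shapes, so the metric rewards diversity as well as fidelity.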

On social media platforms, MeshGPT has also sparked heated discussions:

One user said: "This is a truly revolutionary idea."

One netizen pointed out that the highlight of this method is that it overcomes the biggest obstacle facing other 3D modeling approaches: editability.

Some boldly predicted that many problems left unsolved since the 1990s might now yield to Transformer-inspired approaches.

Some users working in 3D and film production also expressed concern about their careers.

However, others pointed out that, judging from the examples in the paper, the method is not yet ready for large-scale use: a professional modeler could create these meshes in under 5 minutes.

This commenter said the next step might be to have an LLM control the generation of the 3D seeds and to add an image model to the autoregressive part of the architecture. Once that is achieved, the production of 3D assets for games and other scenes could be automated at scale.

Next, let’s take a look at the research details of the MeshGPT paper.

Overview of Method

Inspired by advances in large language models, the researchers developed a sequence-based method that autoregressively generates triangle meshes as sequences of triangles. The method produces clean, coherent, and compact meshes with sharp edges and high fidelity.

The researchers first learned a geometric vocabulary of embeddings from a large collection of 3D object meshes, so that triangles can be encoded and decoded. Then, on top of the learned vocabulary, they trained a Transformer for mesh generation autoregressively to predict codebook indices.

To learn the triangle vocabulary, the researchers used a graph convolutional encoder that operates on the mesh's triangles and their neighborhoods, extracting rich geometric features that capture the intricate details of 3D shapes. These features are quantized into codebook embeddings via residual quantization, which effectively reduces the sequence length of the mesh representation. After ordering, the embeddings are decoded by a one-dimensional ResNet guided by a reconstruction loss. This stage lays the foundation for the subsequent Transformer training.
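A minimal sketch of how a graph encoder can see a triangle's neighborhood: faces that share an edge are connected, and one message-passing layer combines each face's feature vector with the mean of its neighbors' features, followed by a ReLU. The mean aggregation with separate self and neighbor weights (GraphSAGE-style) and the names `face_adjacency` and `graph_conv_layer` are assumptions for illustration; the article does not give MeshGPT's layer definitions, and in a real encoder the per-face input features and the weights would be learned rather than hand-set.

```python
from collections import defaultdict
from typing import List, Tuple

Face = Tuple[int, int, int]
Matrix = List[List[float]]


def face_adjacency(faces: List[Face]) -> List[List[int]]:
    """Hypothetical helper: for each face, list the faces sharing one of its edges."""
    edge_to_faces = defaultdict(list)
    for fi, (a, b, c) in enumerate(faces):
        for u, v in ((a, b), (b, c), (c, a)):
            edge_to_faces[(min(u, v), max(u, v))].append(fi)  # undirected edge key
    neighbors = [set() for _ in faces]
    for shared in edge_to_faces.values():
        for i in shared:
            for j in shared:
                if i != j:
                    neighbors[i].add(j)
    return [sorted(n) for n in neighbors]


def matvec(w: Matrix, x: List[float]) -> List[float]:
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def graph_conv_layer(features: List[List[float]], neighbors: List[List[int]],
                     w_self: Matrix, w_neigh: Matrix) -> List[List[float]]:
    """ASSUMED layer: relu(W_self @ h_i + W_neigh @ mean(h_j for neighbors j))."""
    out = []
    for i, h in enumerate(features):
        nbrs = neighbors[i]
        if nbrs:
            mean = [sum(features[j][d] for j in nbrs) / len(nbrs) for d in range(len(h))]
        else:
            mean = [0.0] * len(h)  # isolated face: no neighbor message
        pre = [s + n for s, n in zip(matvec(w_self, h), matvec(w_neigh, mean))]
        out.append([max(0.0, v) for v in pre])  # ReLU
    return out
```

Stacking several such layers lets information propagate beyond immediate neighbors, which is one way an encoder can capture local topology as well as geometry.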

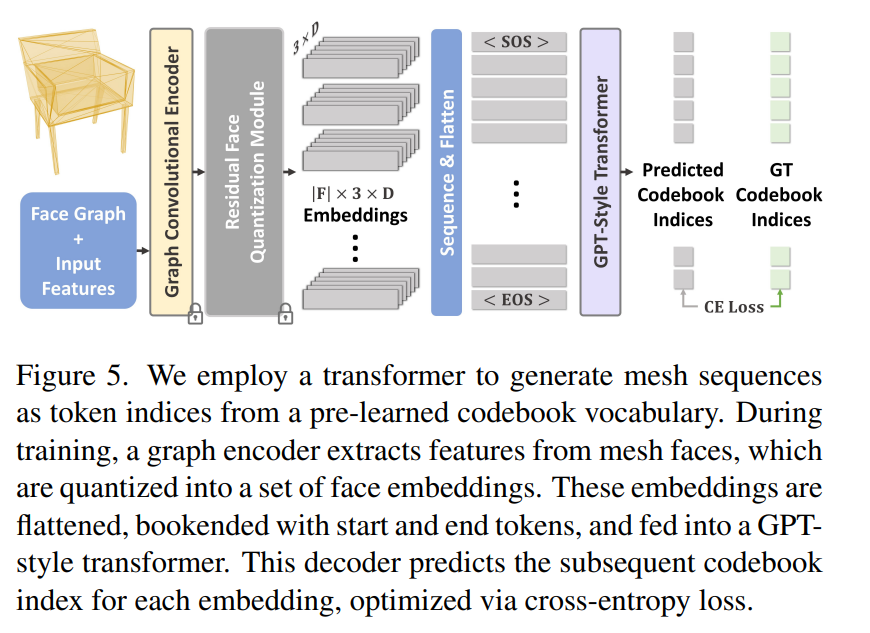

Next, the researchers used these quantized geometric embeddings to train a GPT-like decoder-only Transformer. They extract a sequence of geometric embeddings from a mesh's triangles and train the Transformer to predict the codebook index of the next embedding in the sequence.

After training, the Transformer can autoregressively sample embedding sequences, which are then decoded into novel and diverse mesh structures with efficient, irregular triangulations similar to those of human-made meshes.

MeshGPT uses a graph convolutional encoder to process mesh faces, drawing on geometric neighborhood information to capture strong features that represent the complex details of 3D shapes. These features are then quantized into codebook embeddings using residual quantization, which gives better reconstruction quality than plain vector quantization. Guided by the reconstruction loss, MeshGPT orders the quantized embeddings and decodes them with a ResNet.

The study uses the Transformer to generate mesh sequences as token indices from the pre-trained codebook vocabulary. During training, the graph encoder extracts features from the mesh faces and quantizes them into a set of face embeddings. These embeddings are flattened, delimited with start and end tokens, and fed into the GPT-style Transformer described above. The decoder is optimized with a cross-entropy loss to predict the next codebook index for each embedding.
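The sampling step can be sketched as a loop that repeatedly asks the model for a distribution over the next codebook index, draws one, and stops at an end token or a length cap. Here `next_token_probs` is a hypothetical stand-in for the trained Transformer, and the token ids `START = 0` and `END = 1` are assumptions; the optional `prefix` shows how a partial sequence could be continued instead of starting from scratch.

```python
import random
from typing import Callable, List, Optional, Sequence

START, END = 0, 1  # ASSUMED special-token ids


def sample_sequence(next_token_probs: Callable[[List[int]], Sequence[float]],
                    max_len: int,
                    seed: Optional[int] = None,
                    prefix: Sequence[int] = ()) -> List[int]:
    """Autoregressively sample codebook indices until END or max_len tokens."""
    rng = random.Random(seed)
    tokens = [START, *prefix]
    while len(tokens) - 1 < max_len:          # count tokens after START
        probs = next_token_probs(tokens)      # hypothetical model call
        nxt = rng.choices(range(len(probs)), weights=probs, k=1)[0]
        if nxt == END:
            break
        tokens.append(nxt)
    return tokens[1:]                         # drop START before decoding
```

With a model that assigns probability to several indices, different seeds produce different sequences; each sequence would then be decoded into a mesh.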
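Residual quantization can be sketched in a few lines: each level picks the nearest code for whatever the previous levels left unexplained, so a feature vector becomes a short stack of code indices, and the approximation error typically shrinks as levels are added. The tiny hand-written codebooks and the names `nearest` and `residual_quantize` are hypothetical; real codebooks are learned, and the article does not describe MeshGPT's exact quantizer configuration.

```python
from typing import List, Sequence, Tuple

Vector = List[float]


def nearest(codebook: Sequence[Vector], v: Vector) -> int:
    """Index of the codebook entry closest to v (squared Euclidean distance)."""
    return min(range(len(codebook)),
               key=lambda k: sum((c - x) ** 2 for c, x in zip(codebook[k], v)))


def residual_quantize(v: Vector,
                      codebooks: Sequence[Sequence[Vector]]) -> Tuple[List[int], Vector]:
    """Quantize v with one codebook per level; return code indices and the approximation."""
    residual = list(v)
    approx = [0.0] * len(v)
    indices = []
    for cb in codebooks:
        k = nearest(cb, residual)
        indices.append(k)
        approx = [a + c for a, c in zip(approx, cb[k])]      # add this level's code
        residual = [r - c for r, c in zip(residual, cb[k])]  # what is still unexplained
    return indices, approx
```

For example, with a coarse first codebook and a finer second one, the vector `[1.2, 1.0]` is approximated as `[1.0, 1.0]` after one level and `[1.25, 1.0]` after two, illustrating why stacking levels can beat a single vector-quantization step of the same codebook size.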
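A hedged sketch of the training setup just described: per-face code indices are flattened into one sequence, wrapped in start and end tokens, and shifted by one position so that each input token is paired with the next index as its target; the loss is the average cross-entropy over those targets. The token ids and the `logits_per_step` input are hypothetical; in practice the logits would come from the Transformer, one row per input position.

```python
import math
from typing import List, Sequence, Tuple


def build_training_pair(face_codes: Sequence[Sequence[int]],
                        start_id: int, end_id: int) -> Tuple[List[int], List[int]]:
    """Flatten per-face codes, add start/end tokens, and shift for next-token targets."""
    flat = [code for codes in face_codes for code in codes]
    seq = [start_id, *flat, end_id]
    return seq[:-1], seq[1:]  # inputs, targets


def cross_entropy(logits_per_step: Sequence[Sequence[float]],
                  targets: Sequence[int]) -> float:
    """Mean negative log-likelihood of each target under a softmax over its logits."""
    total = 0.0
    for logits, t in zip(logits_per_step, targets):
        m = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_z = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_z - logits[t]
    return total / len(targets)
```

With uniform logits over a vocabulary of size V the loss equals log V, and it approaches zero as the model concentrates probability on the correct next index.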

Experimental results

The study compares MeshGPT against common mesh generation methods, including:

- PolyGen, which generates polygonal meshes by first generating vertices and then generating faces conditioned on those vertices;

- BSPNet, which represents meshes through convex decomposition;

- AtlasNet, which represents a 3D mesh as the deformation of multiple 2D planes.

In addition, the study compared MeshGPT with GET3D, a state-of-the-art neural-field-based method.

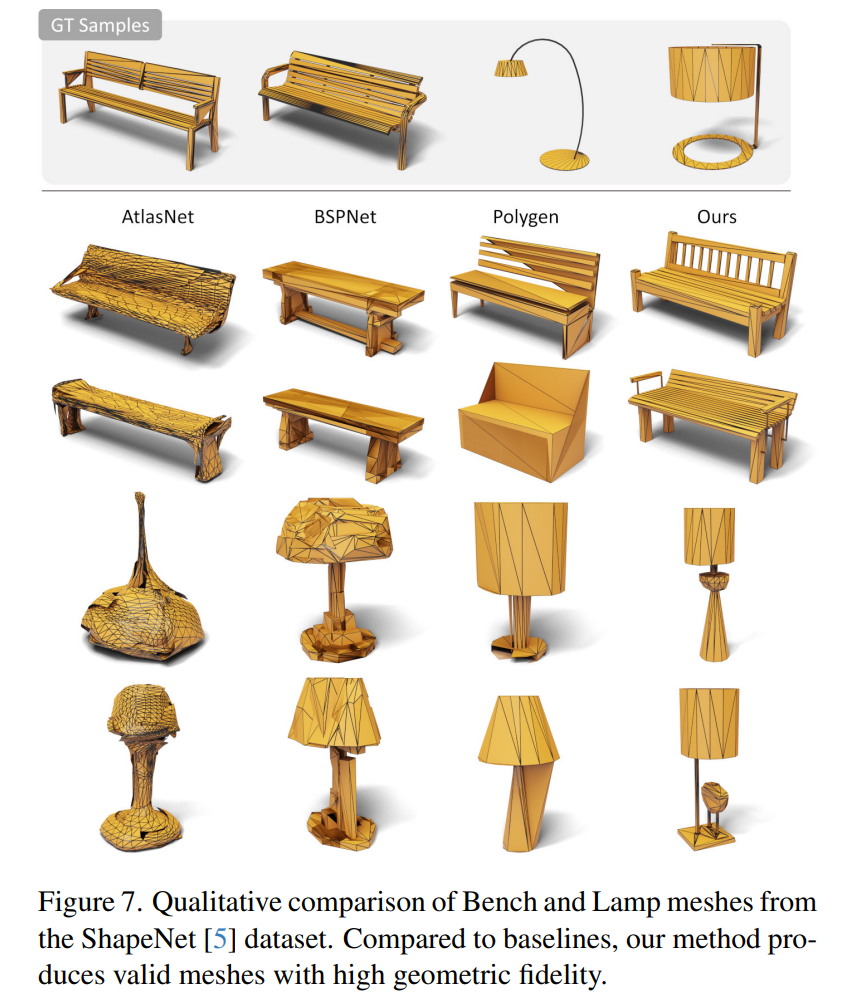

As shown in Figure 6, Figure 7, and Table 1, MeshGPT outperforms the baseline methods in all four categories, generating sharp, compact meshes with finer geometric detail.

Specifically, compared with PolyGen, MeshGPT generates shapes with more complex details, while PolyGen is more prone to accumulating errors during inference. AtlasNet often suffers from folding artifacts, resulting in lower diversity and lower shape quality. BSPNet, which relies on planar BSP trees, tends to produce blocky shapes with unusual triangulation patterns. GET3D produces good high-level shape structure but uses too many triangles and yields imperfectly planar surfaces.

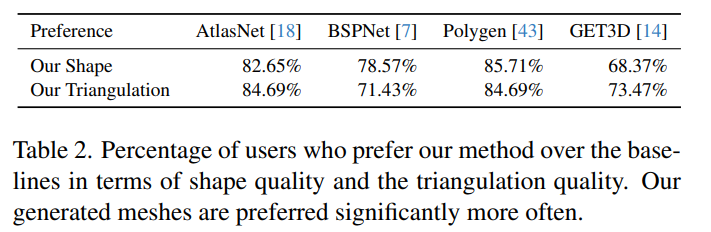

As shown in Table 2, the study also asked users to evaluate the quality of the generated meshes: MeshGPT significantly outperformed AtlasNet, PolyGen, and BSPNet in both shape and triangulation quality, and compared with GET3D, most users preferred MeshGPT's shape quality (68%) and triangulation quality (73%).

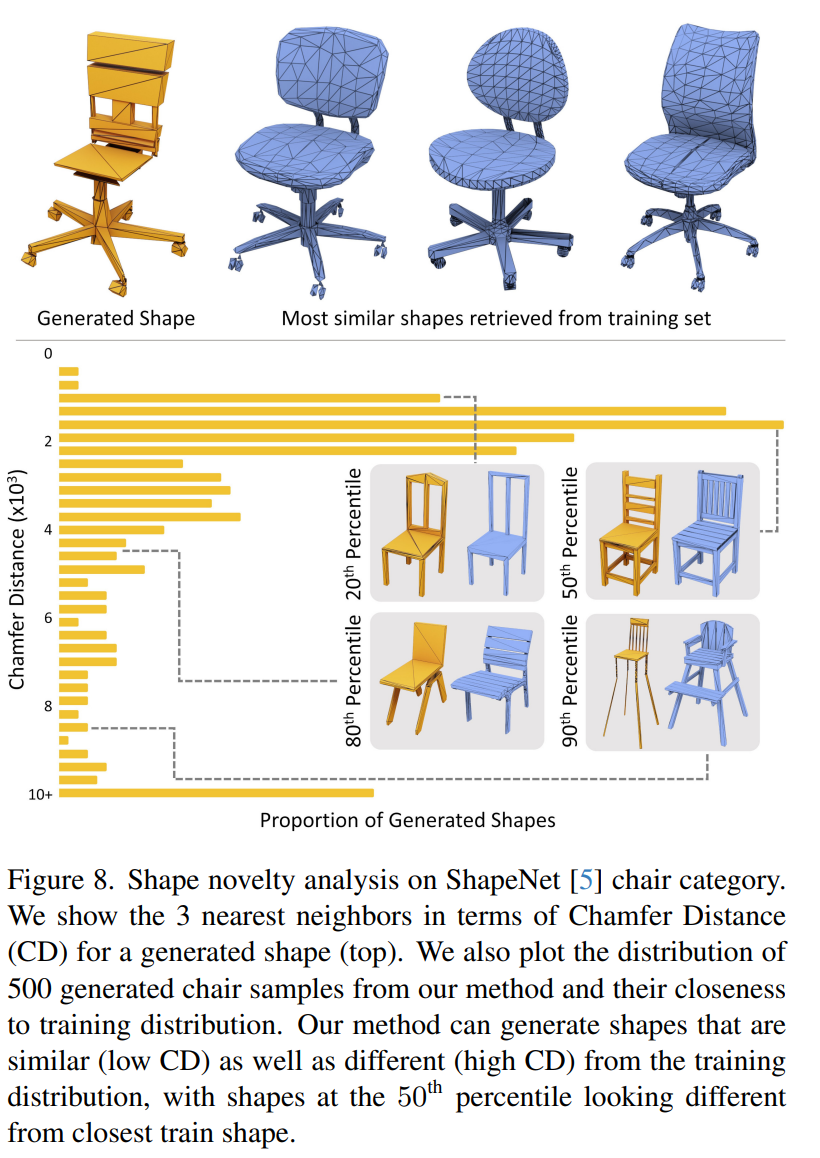

Novel shapes. As shown in Figure 8, MeshGPT can generate novel shapes beyond the training dataset, indicating that the model does more than retrieve existing shapes.

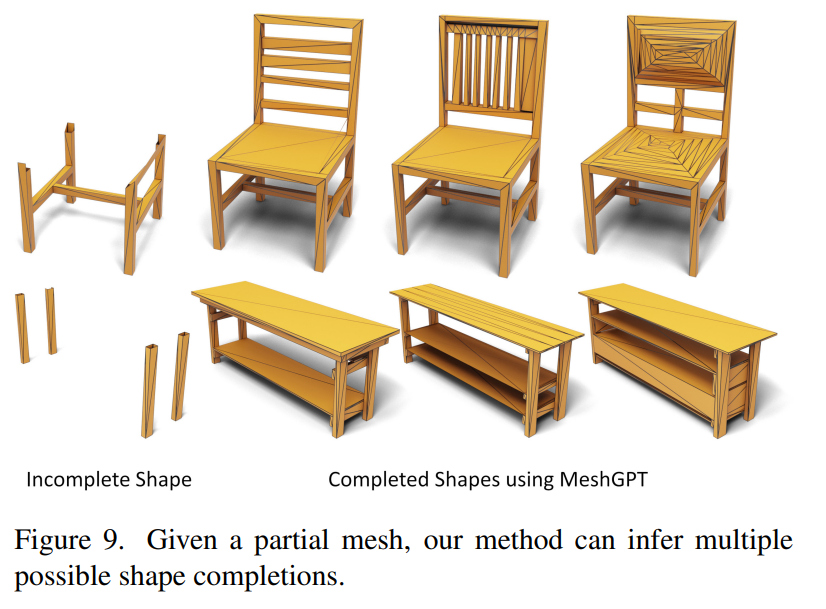

Shape completion. As shown in Figure 9, MeshGPT can also infer multiple plausible completions from a given partial shape, producing multiple shape hypotheses.
