What are some classic cases where deep learning is not as effective as traditional methods?

As one of the most cutting-edge fields of technology, deep learning is often considered the key to technological progress. But are there cases where deep learning is less effective than traditional methods? This article collects several high-quality answers from Zhihu that address this question.

Question link: https://www.zhihu.com/question/451498156

# Answer 1

Author: Jueleizai

Source link: https://www.zhihu.com/question/451498156/answer/1802577845

In fields that require interpretability, basic deep learning cannot compete with traditional methods. I have spent the past few years working on risk control and anti-money laundering products, where regulations require our decisions to be explainable. We tried deep learning, but explainability was hard to achieve and the results were not very good. In risk control scenarios, data cleaning is very important; otherwise it is just garbage in, garbage out.

When writing the above content, I remembered an article I read two years ago: "You don’t need ML/AI, you need SQL"

https://www.php.cn/link/f0e1f0412f36e086dc5f596b84370e86

The author is Celestine Omin, a Nigerian software engineer who worked at Konga, one of the largest e-commerce websites in Nigeria. Precision marketing and personalized recommendations for existing users are among the most common applications of AI. While others were using deep learning to make recommendations, his method was extremely simple. He queried the database, selected all users who had not logged in for three months, and sent them coupons. He also went through the products in each user's shopping cart and recommended related products based on those popular items.

As a result, with his simple SQL-based personalized recommendations, most marketing emails had an open rate of 7-10%, and the best campaigns approached 25-30%, three times the industry average.
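The "users who have not logged in for three months" query described above can be sketched with nothing more than Python's built-in `sqlite3` module. This is a minimal illustration, not the code from the original article: the `users` table, its `id` and `last_login` columns, the 90-day cutoff, and the sample rows are all assumptions made for the example.

```python
import sqlite3
from datetime import date, timedelta


def build_demo_db():
    """Create an in-memory database with a hypothetical `users` table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, last_login TEXT)")
    # Made-up sample rows; dates are stored as ISO strings (YYYY-MM-DD),
    # so plain string comparison orders them chronologically.
    conn.executemany(
        "INSERT INTO users (id, last_login) VALUES (?, ?)",
        [(1, "2020-12-15"), (2, "2021-03-20"), (3, "2020-11-30")],
    )
    conn.commit()
    return conn


def find_inactive_users(conn, today, inactive_days=90):
    """Return ids of users whose last login is older than `inactive_days`."""
    cutoff = (today - timedelta(days=inactive_days)).isoformat()
    rows = conn.execute(
        "SELECT id FROM users WHERE last_login < ? ORDER BY id",
        (cutoff,),
    ).fetchall()
    return [row[0] for row in rows]


if __name__ == "__main__":
    conn = build_demo_db()
    # These users would be the coupon recipients in the scenario above.
    print(find_inactive_users(conn, today=date(2021, 4, 1)))
```

The whole "model" is a single `WHERE` clause, which is also why it is cheap to run and easy to explain to a business team.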

Of course, this example does not mean that recommendation algorithms are useless and everyone should use SQL. The point is that when applying deep learning, you need to consider constraints such as cost and the application scenario. In a previous answer (What exactly does the implementation ability of an algorithm engineer refer to?), I mentioned that practical constraints need to be considered when putting algorithms into production.

https://www.php.cn/link/f0e1f0412f36e086dc5f596b84370e86

Moreover, Nigeria's e-commerce environment is still quite underdeveloped, and logistics cannot keep up. Even if deep learning improved the results, it would not have much impact on the company's overall profits.

Therefore, an algorithm must be adapted to local conditions when it is deployed. Otherwise, the story of "the electric fan blowing away the soap boxes" will repeat itself.

A large company introduced a soap packaging production line, but found that this production line had a flaw: there were often boxes without soap. They couldn't sell empty boxes to customers, so they had to hire a postdoc who studied automation to design a plan to sort empty soap boxes.

The postdoctoral fellow organized a scientific research team of more than a dozen people and used a combination of machinery, microelectronics, automation, X-ray detection and other technologies, spending 900,000 yuan to successfully solve the problem. Whenever an empty soap box passes through the production line, detectors on both sides will detect it and drive a robot to push the empty soap box away.

A township enterprise in southern China bought the same production line. When the boss discovered the problem, he was furious. He called over a junior worker and said, "Fix this for me, or you're out." The worker quickly came up with a solution: he spent 190 yuan on a high-power electric fan, placed it next to the production line, and let it blow hard so that all the empty soap boxes were blown away.

(Although it’s just a joke)

Deep learning is a hammer, but not everything in the world is a nail.

# Answer 2

Author: Mo Xiao Fourier

Source link: https://www.zhihu.com/question/451498156/answer/1802730183

There are two common scenarios:

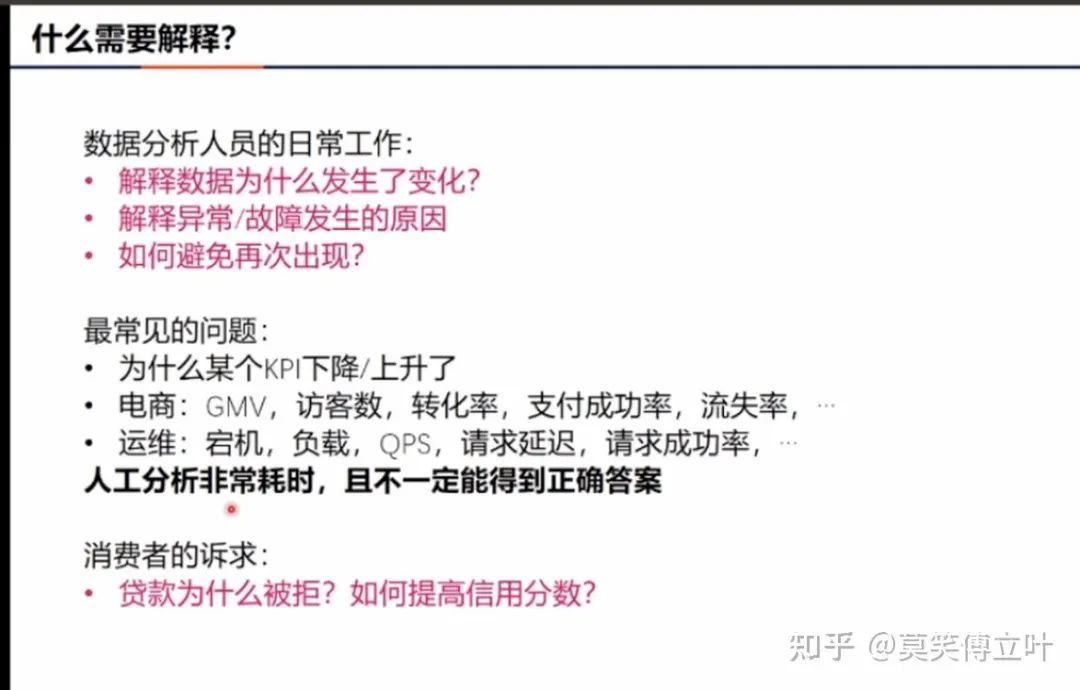

1. Scenarios that pursue explainability.

Deep learning is very good at solving classification and regression problems, but it is weak at explaining what drives its results. Real business scenarios often have very high interpretability requirements, and in such scenarios deep learning often falls short.

2. Many operations optimization scenarios

Examples include scheduling, planning, and allocation problems. Such problems often do not translate well into a supervised learning format, so optimization algorithms are usually used instead. In current research, deep learning is often integrated into the solving process to find better solutions, but in general deep learning is not yet the backbone of the model itself.
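To make the contrast concrete, here is a minimal sketch of an allocation problem solved by direct search rather than by a learned model. The cost matrix is invented for illustration, and exhaustive enumeration is used only because the instance is tiny; a production system would presumably rely on a dedicated optimization solver instead.

```python
from itertools import permutations

# Hypothetical costs: COST[w][t] is the cost of giving task t to worker w.
COST = [
    [4, 1, 3],
    [2, 0, 5],
    [3, 2, 2],
]


def best_assignment(cost):
    """Try every worker-to-task assignment and return the cheapest one.

    `cost` must be a square matrix. The search is factorial in its size,
    so this is only practical for very small instances.
    """
    n = len(cost)
    best_perm, best_total = None, float("inf")
    for perm in permutations(range(n)):
        # perm[w] is the task index assigned to worker w.
        total = sum(cost[w][perm[w]] for w in range(n))
        if total < best_total:
            best_perm, best_total = perm, total
    return list(best_perm), best_total


if __name__ == "__main__":
    assignment, total = best_assignment(COST)
    print(assignment, total)
```

There are no labels to learn from here: the objective and constraints are stated explicitly and the answer is found by search, which is why such problems do not fit the supervised learning mold.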

In short, deep learning is a very good approach, but it is not the only one, and it still faces significant problems in deployment. When integrated into an optimization algorithm, it can still be very useful as a component of the solution.

# Answer 3

Author: LinT

Source link: https://www.zhihu.com/question/451498156/answer/1802516688

This question should be considered scenario by scenario. Although deep learning removes the burden of feature engineering, it can be difficult to apply in some scenarios:

- The application has strict latency requirements but less demanding accuracy requirements, in which case a simple model may be the better choice;

- Some data types, such as tabular data, may be better suited to statistical learning models such as tree-based models than to deep learning models;

- The model's decisions have significant consequences, as in safety-related or economic decision-making, and the model must be interpretable. Linear models or tree-based models are then a better choice than deep learning;

- The application scenario makes data collection difficult, so there is a risk of overfitting when using deep learning.
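As a rough illustration of the interpretability point in the list above, the sketch below fits a one-feature decision stump, the simplest tree-based model, in pure Python. The data, the feature name `amount`, and the helper names are hypothetical and not taken from the answer; the point is only that the fitted model is a rule a reviewer can read directly.

```python
def fit_stump(xs, ys):
    """Fit a rule of the form: predict 1 if x > threshold, else 0.

    Candidate thresholds are the midpoints between consecutive distinct
    values of xs; the one with the fewest training errors wins.
    Requires at least two distinct values in xs.
    """
    values = sorted(set(xs))
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]
    best_threshold, best_errors = None, len(xs) + 1
    for threshold in candidates:
        errors = sum((x > threshold) != bool(y) for x, y in zip(xs, ys))
        if errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold, best_errors


def explain(threshold, feature_name):
    """Render the fitted rule as a sentence a reviewer can audit."""
    return f"flag if {feature_name} > {threshold}"


if __name__ == "__main__":
    # Made-up transaction amounts and labels (1 = flagged as risky).
    amounts = [120, 300, 450, 800, 950, 1200]
    labels = [0, 0, 0, 1, 1, 1]
    threshold, errors = fit_stump(amounts, labels)
    print(explain(threshold, "amount"), "| training errors:", errors)
```

A deep network trained on the same data might match the accuracy, but it would not hand back a single auditable threshold like this.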

Real applications are all driven by requirements. It is unscientific to talk about performance without regard to requirements (accuracy, latency, compute cost). If "outperform" in the question is restricted to a single metric, the scope of the discussion may be narrowed.

Original link: https://mp.weixin.qq.com/s/tO2OD772qCntNytwqPjUsA
