Technology peripherals

Technology peripherals

AI

AI

Universal data enhancement technology, random quantization is suitable for any data modality

Universal data enhancement technology, random quantization is suitable for any data modality

Universal data enhancement technology, random quantization is suitable for any data modality

Self-supervised learning algorithms have made significant progress in fields such as natural language processing and computer vision. Although these self-supervised learning algorithms are conceptually general, their specific operations are based on specific data modalities. This means that different self-supervised learning algorithms need to be developed for different data modalities. To this end, this paper proposes a general data augmentation technique that can be applied to any data modality. Compared with existing general-purpose self-supervised learning, this method can achieve significant performance improvements, and can replace a series of complex data enhancement methods designed for specific modalities and achieve similar performance.

- ##Paper address: https://arxiv.org/abs/2212.08663

- Code: https://github.com/microsoft/random_quantize

Introduction

Rewritten content: Currently, Siamese representation learning/contrastive learning requires the use of data augmentation techniques to construct different samples of the same data and input them into two parallel network structures to generate a strong enough supervision signal . However, these data augmentation techniques usually rely heavily on modality-specific prior knowledge, often requiring manual design or searching for the best combination suitable for the current modality. In addition to being time-consuming and labor-intensive, the best data augmentation methods found are also difficult to transfer to other areas. For example, the common color jittering for natural RGB images cannot be applied to other data modalities except natural images

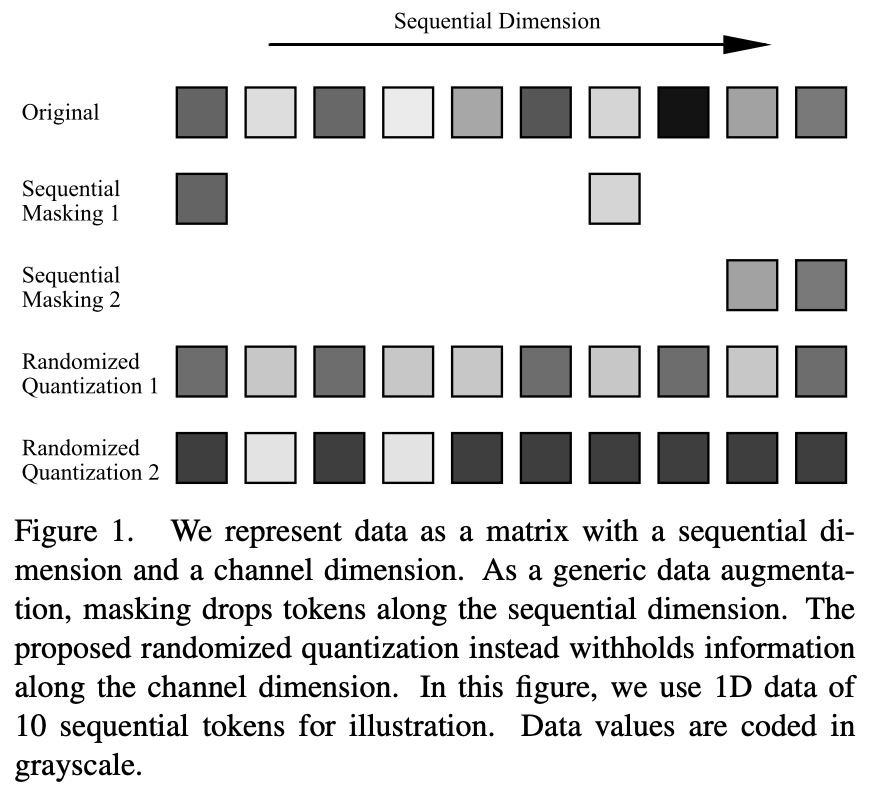

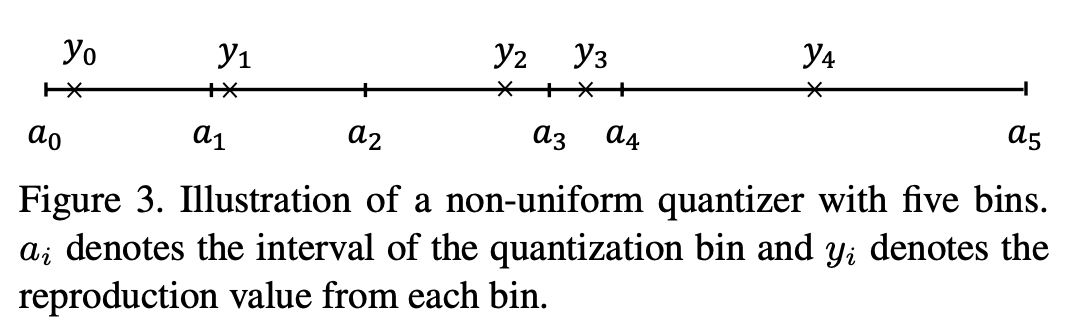

In general, the input data can be represented by A two-dimensional vector composed of sequence dimensions and channel dimensions. The sequence dimension is often related to the modality of the data, such as the spatial dimension of images, the temporal dimension of speech, and the syntactic dimension of language. The channel dimension is independent of the modality. In self-supervised learning, occlusion modeling or using occlusion as data augmentation has become an effective learning method. However, these operations are performed on the sequence dimension. In order to be widely applicable to different data modalities, this paper proposes a data enhancement method that acts on the channel dimension: random quantization. By dynamically quantizing the data in each channel using a non-uniform quantizer, the quantized values are randomly sampled from randomly divided intervals. In this way, the information difference of the original input in the same interval is deleted, while retaining the relative size of data in different intervals, thereby achieving the effect of masking

This method surpasses existing self-supervised learning methods in any modality in various data modalities, including natural images, 3D point clouds, speech, text, sensor data, medical images, etc. In a variety of pre-training learning tasks, such as contrastive learning (such as MoCo-v3) and self-distillation self-supervised learning (such as BYOL), features are learned that are better than existing methods. The method has also been validated for different backbone network structures such as CNN and Transformer.

Method

Quantization refers to using a set of discrete numerical values to represent continuous data to facilitate efficient storage and operation of data. and transmission. However, the general goal of quantization operations is to compress data without losing accuracy, so the process is deterministic and designed to be as close as possible to the original data. This limits its strength as a means of enhancement and the data richness of its output.

This article proposes a randomized quantization operation, which independently divides each input channel data into multiple non-overlapping random intervals ( ), and maps the original input falling within each interval to a constant

), and maps the original input falling within each interval to a constant  randomly sampled from that interval.

randomly sampled from that interval.

The ability of random quantization as masking channel dimension data in self-supervised learning tasks depends on the design of the following three aspects: 1) Randomly divide numerical intervals ;2) Randomly sampled output values and 3) the number of divided numerical intervals.

Specifically, the random process brings richer samples, and the same data can generate different data samples every time a random quantification operation is performed. At the same time, the random process also brings greater enhancement to the original data. For example, large data intervals are randomly divided, or when the mapping point deviates from the median point of the interval, it can cause the original input and output to fall between the interval. greater differences between.

By appropriately reducing the number of divided intervals, the enhancement intensity can be easily increased. In this way, when applied to Siamese representation learning, the two network branches are able to receive input data with sufficient information differences, thereby constructing a strong learning signal and conducive to feature learning

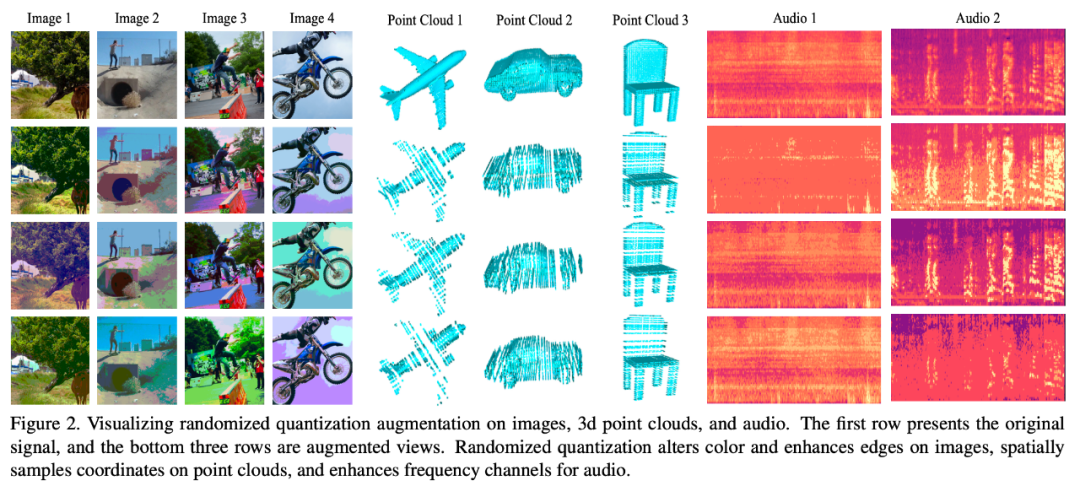

The following figure visualizes the effects of different data modalities after using this data enhancement method:

Experimental results

Rewritten content is: Mode 1: Image

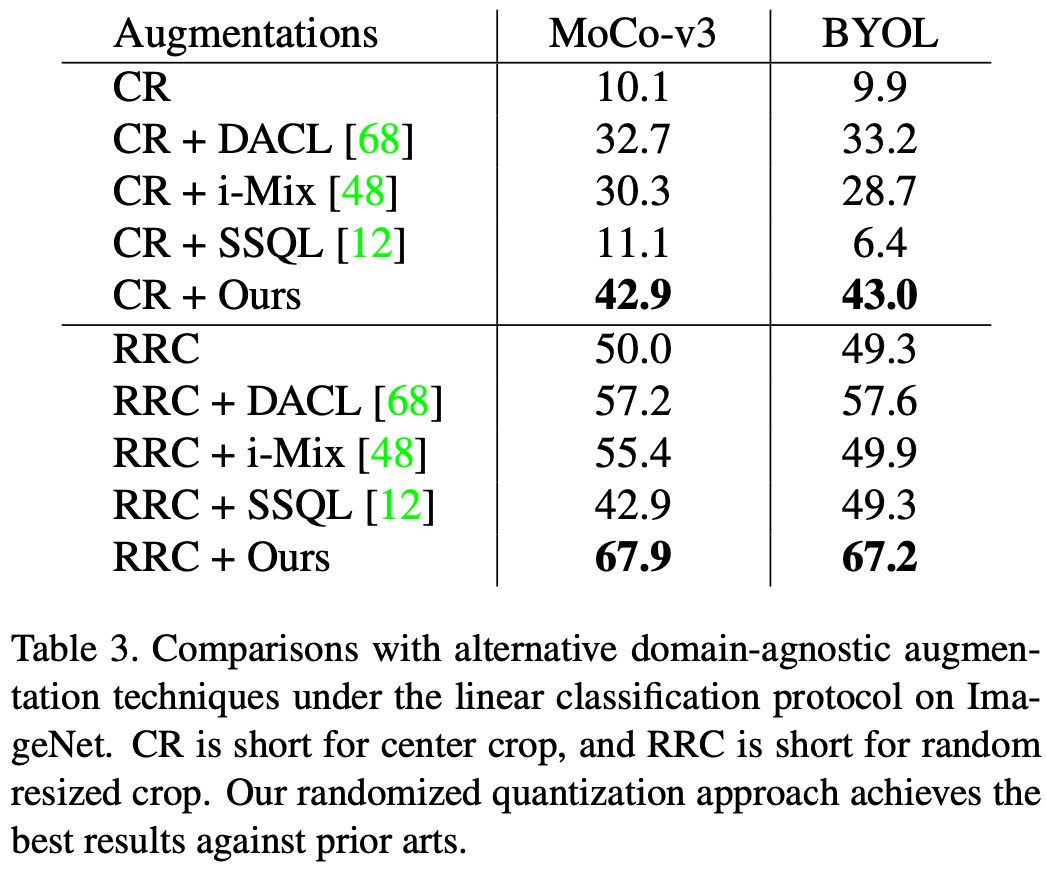

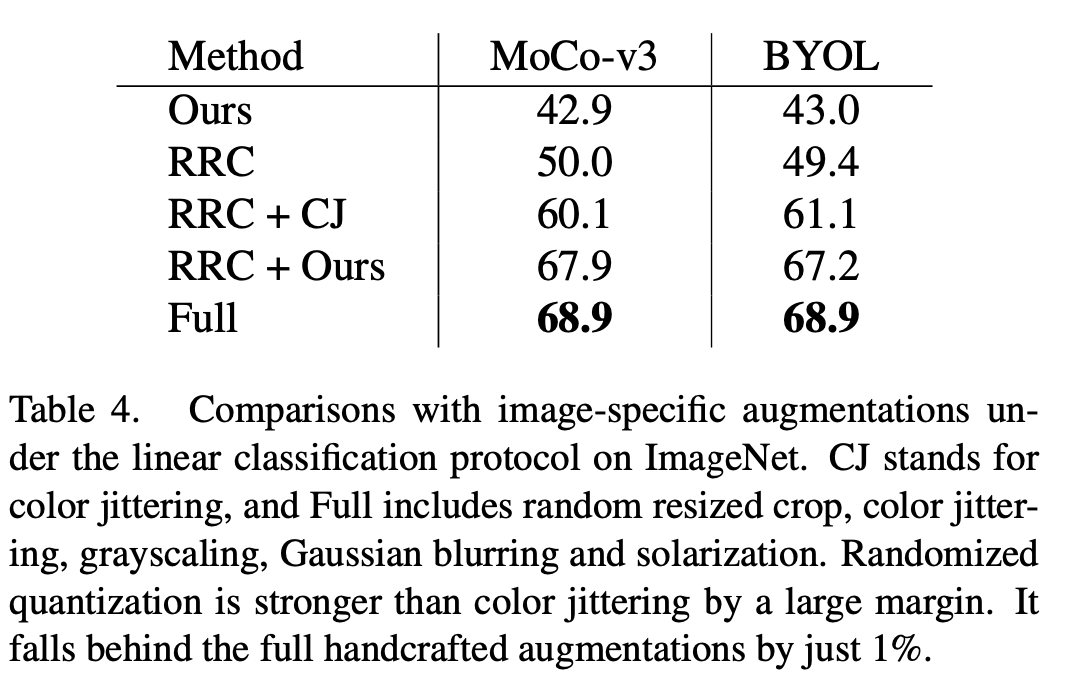

This article evaluates randomized quantization applied to MoCo-v3 and The evaluation index for the effect of BYOL is linear evaluation. When used alone as the only data augmentation method, that is, the augmentation in this article is applied to the center crop of the original image, and when used in conjunction with the common random resized crop (RRC), this method has achieved better results than existing general self-supervised Study methods for better results.

Compared with existing data enhancement methods developed for image data, such as color jittering (CJ), the method in this article has obvious performance Advantage. At the same time, this method can also replace a series of complex data enhancement methods (Full) in MoCo-v3/BYOL, including color jittering, random gray scale, random Gaussian blur, random Exposure (solarization), and achieve similar effects to complex data enhancement methods.

The content that needs to be rewritten is: Mode 2: 3D point cloud

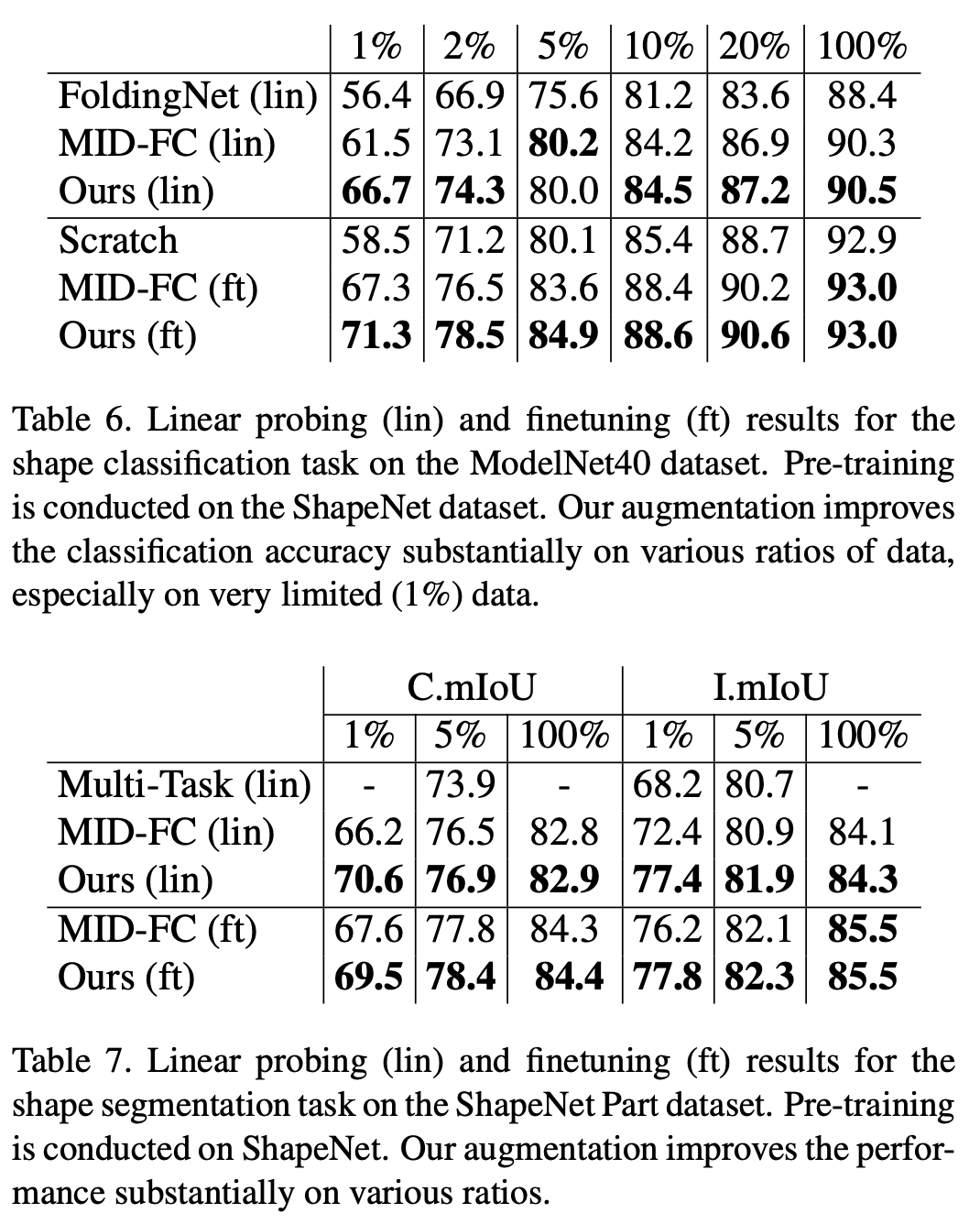

In the classification task of the ModelNet40 dataset and the segmentation task of the ShapeNet Part dataset, this study verified the superiority of random quantization over existing self-supervised methods. Especially when the amount of data in the downstream training set is small, the method of this study significantly exceeds the existing point cloud self-supervised algorithm

Rewritten content: The third mode: speech

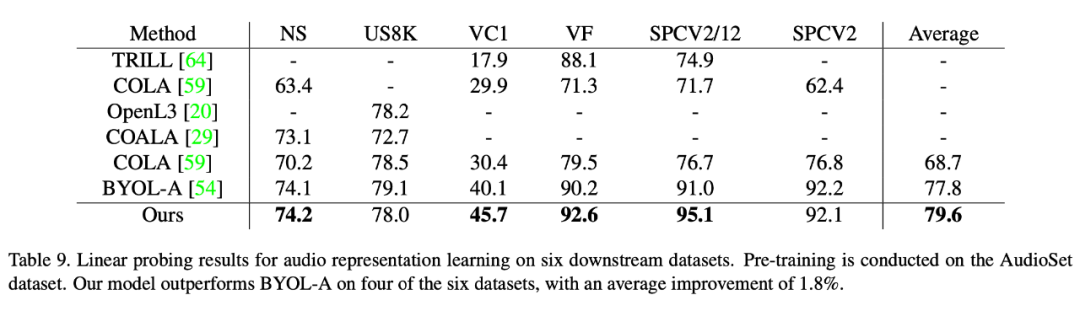

On the speech data set, the method of this article has also achieved better results than existing methods. Better performance of supervised learning methods. This paper verifies the superiority of this method on six downstream data sets. Among them, on the most difficult data set VoxCeleb1 (which contains the largest number of categories and far exceeds the number of other data sets), this method has achieved significant performance improvement (5.6 points).

##The rewritten content is: Mode 4: DABS

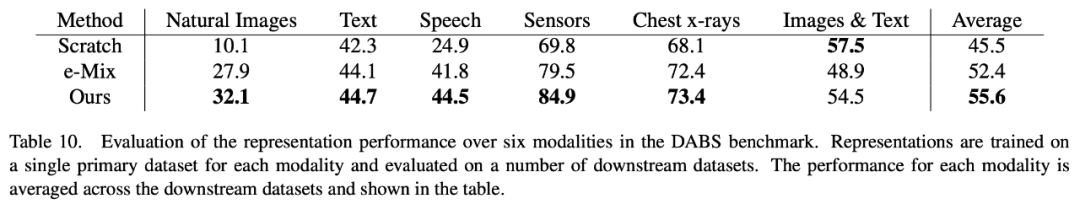

DABS is a general self-supervised learning benchmark covering a variety of modal data, including natural images, text, speech, sensor data, medical images, graphics, etc. On various modal data covered by DABS, our method is also better than any existing modal self-supervised learning method

Interested readers can read the original paper to learn more about the research content

The above is the detailed content of Universal data enhancement technology, random quantization is suitable for any data modality. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1655

1655

14

14

1414

1414

52

52

1307

1307

25

25

1253

1253

29

29

1227

1227

24

24

How much is Bitcoin worth

Apr 28, 2025 pm 07:42 PM

How much is Bitcoin worth

Apr 28, 2025 pm 07:42 PM

Bitcoin’s price ranges from $20,000 to $30,000. 1. Bitcoin’s price has fluctuated dramatically since 2009, reaching nearly $20,000 in 2017 and nearly $60,000 in 2021. 2. Prices are affected by factors such as market demand, supply, and macroeconomic environment. 3. Get real-time prices through exchanges, mobile apps and websites. 4. Bitcoin price is highly volatile, driven by market sentiment and external factors. 5. It has a certain relationship with traditional financial markets and is affected by global stock markets, the strength of the US dollar, etc. 6. The long-term trend is bullish, but risks need to be assessed with caution.

Which of the top ten currency trading platforms in the world are the latest version of the top ten currency trading platforms

Apr 28, 2025 pm 08:09 PM

Which of the top ten currency trading platforms in the world are the latest version of the top ten currency trading platforms

Apr 28, 2025 pm 08:09 PM

The top ten cryptocurrency trading platforms in the world include Binance, OKX, Gate.io, Coinbase, Kraken, Huobi Global, Bitfinex, Bittrex, KuCoin and Poloniex, all of which provide a variety of trading methods and powerful security measures.

What are the top ten virtual currency trading apps? The latest digital currency exchange rankings

Apr 28, 2025 pm 08:03 PM

What are the top ten virtual currency trading apps? The latest digital currency exchange rankings

Apr 28, 2025 pm 08:03 PM

The top ten digital currency exchanges such as Binance, OKX, gate.io have improved their systems, efficient diversified transactions and strict security measures.

Which of the top ten currency trading platforms in the world are among the top ten currency trading platforms in 2025

Apr 28, 2025 pm 08:12 PM

Which of the top ten currency trading platforms in the world are among the top ten currency trading platforms in 2025

Apr 28, 2025 pm 08:12 PM

The top ten cryptocurrency exchanges in the world in 2025 include Binance, OKX, Gate.io, Coinbase, Kraken, Huobi, Bitfinex, KuCoin, Bittrex and Poloniex, all of which are known for their high trading volume and security.

Decryption Gate.io Strategy Upgrade: How to Redefine Crypto Asset Management in MeMebox 2.0?

Apr 28, 2025 pm 03:33 PM

Decryption Gate.io Strategy Upgrade: How to Redefine Crypto Asset Management in MeMebox 2.0?

Apr 28, 2025 pm 03:33 PM

MeMebox 2.0 redefines crypto asset management through innovative architecture and performance breakthroughs. 1) It solves three major pain points: asset silos, income decay and paradox of security and convenience. 2) Through intelligent asset hubs, dynamic risk management and return enhancement engines, cross-chain transfer speed, average yield rate and security incident response speed are improved. 3) Provide users with asset visualization, policy automation and governance integration, realizing user value reconstruction. 4) Through ecological collaboration and compliance innovation, the overall effectiveness of the platform has been enhanced. 5) In the future, smart contract insurance pools, forecast market integration and AI-driven asset allocation will be launched to continue to lead the development of the industry.

Recommended reliable digital currency trading platforms. Top 10 digital currency exchanges in the world. 2025

Apr 28, 2025 pm 04:30 PM

Recommended reliable digital currency trading platforms. Top 10 digital currency exchanges in the world. 2025

Apr 28, 2025 pm 04:30 PM

Recommended reliable digital currency trading platforms: 1. OKX, 2. Binance, 3. Coinbase, 4. Kraken, 5. Huobi, 6. KuCoin, 7. Bitfinex, 8. Gemini, 9. Bitstamp, 10. Poloniex, these platforms are known for their security, user experience and diverse functions, suitable for users at different levels of digital currency transactions

What are the top currency trading platforms? The top 10 latest virtual currency exchanges

Apr 28, 2025 pm 08:06 PM

What are the top currency trading platforms? The top 10 latest virtual currency exchanges

Apr 28, 2025 pm 08:06 PM

Currently ranked among the top ten virtual currency exchanges: 1. Binance, 2. OKX, 3. Gate.io, 4. Coin library, 5. Siren, 6. Huobi Global Station, 7. Bybit, 8. Kucoin, 9. Bitcoin, 10. bit stamp.

How to measure thread performance in C?

Apr 28, 2025 pm 10:21 PM

How to measure thread performance in C?

Apr 28, 2025 pm 10:21 PM

Measuring thread performance in C can use the timing tools, performance analysis tools, and custom timers in the standard library. 1. Use the library to measure execution time. 2. Use gprof for performance analysis. The steps include adding the -pg option during compilation, running the program to generate a gmon.out file, and generating a performance report. 3. Use Valgrind's Callgrind module to perform more detailed analysis. The steps include running the program to generate the callgrind.out file and viewing the results using kcachegrind. 4. Custom timers can flexibly measure the execution time of a specific code segment. These methods help to fully understand thread performance and optimize code.